Distributed Systems

advertisement

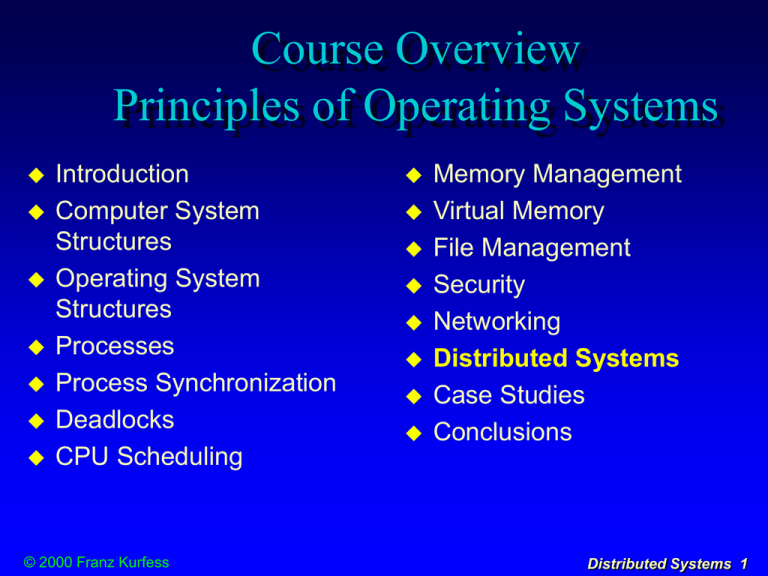

Course Overview Principles of Operating Systems Introduction Computer System Structures Operating System Structures Processes Process Synchronization Deadlocks CPU Scheduling © 2000 Franz Kurfess Memory Management Virtual Memory File Management Security Networking Distributed Systems Case Studies Conclusions Distributed Systems 1 Chapter Overview Distributed Systems Motivation Objectives Distributed System Structures Distributed Communication Task Distribution Process Migration Distributed File Systems Naming and Transparency Remote File Access Stateful/Stateless Service File Replication © 2000 Franz Kurfess Message Passing Remote Procedure Call Shared Memory Distributed Coordination Distributed Processing Network Operating Systems Distributed OS Remote Services Event Ordering Mutual Exclusion Atomicity Distributed Deadlock Important Concepts and Terms Chapter Summary Distributed Systems 2 Motivation the next step after connecting computers via networks are distributed systems resources are transparently available throughout the system users don’t need to be aware of the system structure environment independent of the local machine the location and execution of processes is distributed load balancing process migration © 2000 Franz Kurfess Distributed Systems 3 Objectives be aware of benefits and potential problems of distributed systems understand location independence and location transparency understand mechanisms for distributing data and computation know extensions of previous concepts to distributed systems © 2000 Franz Kurfess Distributed Systems 4 Characteristics of Modern Operating Systems (review OS Structures chapter) microkernel architecture multithreading symmetric multiprocessing distributed operating systems object-oriented design © 2000 Franz Kurfess Distributed Systems 5 Distributed Operating Systems all resources within the distributed system are available to all processes if they have the right permissions users and processes don’t need to be aware of the exact location of resources the execution of tasks can be distributed over several nodes transparent to the task, user and programmer there is one single file system encompassing all files on all nodes transparent © 2000 Franz Kurfess to the user Distributed Systems 6 Applications Distributed SystemsOS Diagram Users and Distributed User Programs Hardware Operating System Server Processes Personalities MicroKernel Computer Node © 2000 Franz Kurfess MicroKernel System Services MicroKernel Device Drivers File System MicroKernel MicroKernel MicroKernel Computer Computer Computer Computer Computer Node Node Node Node Node Distributed Systems 7 Distributed System Structures Network Operating Systems Distributed Operating Systems Remote Services © 2000 Franz Kurfess Distributed Systems 8 Network Operating Systems users are aware of the individual machines in the network resources are accessible via login or explicit transfer of data remote © 2000 Franz Kurfess login, ftp, etc. Distributed Systems 9 Distributed Operating Systems users are unaware of the underlying machines and networks in practice, users still have knowledge about particular machines remote resources are accessible in the same way as local resources practical limitations physical access (printer, removable media) movement of data and processes is under the control of the distributed OS © 2000 Franz Kurfess Distributed Systems 10 Distributed Processing Task Distribution Data Migration Computation Migration Process Migration © 2000 Franz Kurfess Distributed Systems 11 Task Distribution allocation of tasks to nodes static: at compile or load time dynamical: at run time separation usually © 2000 Franz Kurfess of a task into subtasks by or with the help of the programmer Distributed Systems 12 Data Migration movement of data within a distributed system transfer of whole files whenever access to a file is requested, the whole file is transferred to the node all future accesses in that session are local transfer of necessary parts only those parts of a file that are actually needed are transferred similar to demand paging used in many modern systems Sun NFS, Andrews, MS SMB protocol © 2000 Franz Kurfess Distributed Systems 13 Computation Migration the computation (task, process, thread) is moved to the location of the data large quantities of data time to transfer data vs. time to execute remote commands remote a procedure call predefined procedure is invoked on a remote system message passing a message with a request for an action is sent to the remote system the remote system creates a process to execute the requested task, and returns a message with the result © 2000 Franz Kurfess Distributed Systems 14 Process Migration extension to computation migration a process may be executed at a site different from the one where it was initiated reasons for process migration load balancing computation speedup specialized hardware specialized software access to data OS decides the allocation of processes practical © 2000 Franz Kurfess limitations (specialized hardware and software) Distributed Systems 15 Remote Services (see the chapter on processes) remote procedure calls (RPC) threads © 2000 Franz Kurfess Distributed Systems 16 Remote Procedure Calls usually implemented on top of a message-based communication scheme far less reliable than local procedure calls precautions binding must be taken for failures problems local systems can integrate the procedure call into the executable this is not possible for remote calls fixed port number at compile time dynamic approach (rendezvous arranged via matchmaker port) © 2000 Franz Kurfess Distributed Systems 17 Threads often used in combination with remote procedure calls threads execute RPCs on the receiving system lower overhead than full processes a server spawns a new thread for incoming requests all threads can continue concurrently threads don’t block each other example: © 2000 Franz Kurfess Distributed Computing Environment (DCE) Distributed Systems 18 Distributed Computing Environment (DCE) threads package for standardizing network functionality and protocols system calls for various purposes thread management synchronization condition variables scheduling interoperability available © 2000 Franz Kurfess for most Unix systems, Windows NT Distributed Systems 19 Distributed File Systems Naming and Transparency Remote File Access Stateful/Stateless Service File Replication Example: Sun NFS © 2000 Franz Kurfess Distributed Systems 20 Distributed File System multi-user file system where files may reside on various nodes in a distributed system transfer time over the network as additional delay at 10 MBit/s, the transfer of a 1 MByte file will take about 1 second (under good conditions) © 2000 Franz Kurfess Distributed Systems 21 Naming and Transparency naming mapping between logical file names as seen by the user, and the physical location of the blocks that constitute a file location transparency the actual location of the file does not have to be known by the user a file may reside on a local system or on a central file server files may be cached or replicated for performance reasons location independence the file may be moved, but its name doesn’t have to be changed © 2000 Franz Kurfess Distributed Systems 22 Naming Schemes specification of host and path host:local-path/file-name not location transparent nor location independent mounting of remote directories remote directories can be attached to local directories can become cumbersome to maintain location transparent, but not location independent example: Sun NFS global name space total integration of the individual file systems location transparent, location independent example: Andrews, Sprite, Locus © 2000 Franz Kurfess Distributed Systems 23 Remote File Access remote service on top of a remote procedure call mechanism extension of system calls frequently © 2000 Franz Kurfess caching is used to improve performance Distributed Systems 24 Stateful/Stateless Service stateful file service connection between client and server is maintained for the duration of a session the server has information on the status of the client frequently better performance stateless file service each request is self-contained the server keeps no information on the status or previous activities of the client less complex © 2000 Franz Kurfess Distributed Systems 25 File Replication several copies of files are kept on different machines performance better access times redundancy loss or corruption of a file is not a big problem consistency different instances must be kept identical © 2000 Franz Kurfess Distributed Systems 26 Sun NFS widely used distributed file system interconnected workstations are viewed as independent nodes with independent file systems files can be shared between any pair of nodes not restricted to servers implemented by mounting directories into local file systems the mounted directory looks like a part of the local file system remote procedure calls enable remote file operations © 2000 Franz Kurfess Distributed Systems 27 NFS Diagram Client Server file system calls VFS Interface VFS Interface other file Unix file NFS systems system client RPC Communication Mech. NFS Unix file other file client system systems RPC Communication Mech. Request Response Operating System Operating System Hardware Platform Hardware Platform © 2000 Franz Kurfess Distributed Systems 28 Communication in Distributed Systems Message Passing Remote Procedure Call Shared Memory impractical © 2000 Franz Kurfess for distributed systems Distributed Systems 29 Message Passing a request for a service is sent from the local system to the remote system the request is sent in the form of a message the receiving process accepts the message and performs the desired service the result is also returned as a message reliability acknowledgments may be used to indicate the receipt f a message synchronous (blocking) or asynchronous (non- blocking) © 2000 Franz Kurfess Distributed Systems 30 Remote Procedure Call often built on top of message passing frequently parameter implemented as synchronous calls passing call by value is much easier to implement than call by reference parameter representation translation between programming languages or operating systems may be required © 2000 Franz Kurfess Distributed Systems 31 RPC Diagram Client Application Server Application local calls local calls Application Logic Local (Client Side) Stub RPC Communication Mech. Local Application Logic Stub (Client Side) Request Response RPC Communication Mech. Operating System Operating System Hardware Platform Hardware Platform © 2000 Franz Kurfess Distributed Systems 32 Distributed Coordination Event Ordering Mutual Exclusion Atomicity Distributed Deadlock © 2000 Franz Kurfess Distributed Systems 33 Distributed Coordination synchronization of processes across distributed systems no common memory no common clock extension of methods discussed in the chapters on process synchronization and deadlocks not all methods can be extended easily © 2000 Franz Kurfess Distributed Systems 34 Event Ordering straightforward in a single system it is always possible to determine if on event happens before, at the same time, or after another event often expressed by the happened-before relation defines a total ordering of the events timestamps can be used in distributed systems to determine a global ordering of events © 2000 Franz Kurfess Distributed Systems 35 Event Ordering Diagram A A A Time Event Process © 2000 Franz Kurfess Distributed Systems 36 Mutual Exclusion critical sections which may be used by at most one process at a time processes are distributed over several nodes approaches centralized fully distributed token passing © 2000 Franz Kurfess Distributed Systems 37 Centralized Approach one process coordinates the entry to the critical section processes wishing to enter the critical section send a request message to the coordinator one process gets permission through a reply message from the coordinator, enters the critical section, and sends a release message to the coordinator coordinator is critical if it fails, a new coordinator must be determined © 2000 Franz Kurfess Distributed Systems 38 Fully Distributed Approach far more complicated than the centralized approach based on event ordering with timestamps a process that wants to enter its critical section sends a request (including the timestamp) to all other processes it waits until it receives a reply message from all processes before entering the critical section if a process is in its critical section, it won’t send a reply message until it has left the critical section © 2000 Franz Kurfess Distributed Systems 39 Token Passing processes are arranged in a logical ring one single token is passed around the distributed system the holder of the token is allowed to enter the critical section precautions must be taken for lost tokens © 2000 Franz Kurfess Distributed Systems 40 Distributed Atomicity atomic a transaction set of operations that is either fully executed, or not at all in a distributed system, the operations grouped into one atomic transaction may be executed on different nodes/sites transaction coordinator local coordinator guarantees atomicity at one site two-phase © 2000 Franz Kurfess commit (2PC) protocol Distributed Systems 41 Two-Phase Commit Protocol makes sure that all sites involved either commit to a common transaction, or abort Phase 1 after the execution of the transaction, the transaction manager at the initiating site queries all the others if they are willing to commit their portions of the transaction Phase 2 if all answer positively within a given time, the transaction is committed; otherwise it must be aborted the outcome is reported to all sites, and they finalize the commit or abort © 2000 Franz Kurfess Distributed Systems 42 Failure Handling in 2PC participating site the affected site must either redo, undo, or contact the coordinator about the fate of the transaction coordinator if a participating site has a commit (abort) on record, the transaction must be committed (aborted) it may be impossible to determine if and what kind of decision has been made, and the sites must wait for the coordinator to recover network for one link, it is similar to the failure of a site for several links, the network may be partitioned if coordinator and all participating sites are in the same partition, the protocol can continue otherwise it is similar to site failure © 2000 Franz Kurfess Distributed Systems 43 Distributed Deadlock extensions of the methods and algorithms discussed in the chapter on deadlocks deadlock prevention and avoidance resource ordering banker’s algorithm prioritized preemption deadlock detection (simple case: one instance per resource type) centralized fully distributed © 2000 Franz Kurfess Distributed Systems 44 Deadlock Prevention resource-ordering can be enhanced by defining a global ordering on the resources in the distributed system distributed banker’s algorithm one process performs the role of the banker all requests for resources must go through the banker the banker can become the bottleneck prioritized preemption each process has a unique priority number cycles in the resource allocation graph are prevented by preempting processes with lower priorities © 2000 Franz Kurfess Distributed Systems 45 Centralized Deadlock Detection each site maintains a local wait-for graph it must be shown that the union of all the graphs contains no cycle one coordinator maintains the unified graph time delays may lead to false cycles can be avoided by using time stamps © 2000 Franz Kurfess Distributed Systems 46 Distributed Deadlock Detection partial graphs are maintained at every site if a deadlock exists, it will lead to a cycle in at least one of the partial graphs based on local wait-for graphs enhanced by a node for external processes an arc to that node exists if a process waits for an external item a cycle involving that external node indicates the possibility of a deadlock can be verified by a distributed deadlock detection algorithm involving message exchanges with affected sites © 2000 Franz Kurfess Distributed Systems 47 Important Concepts and Terms asynchronous atomic transactions client/server model communication coordination distributed deadlock distributed file system distributed operating system event ordering kernel location independence location transparency © 2000 Franz Kurfess message passing microkernel mutual exclusion naming network file system network operating system processes remote procedure call resources server, services synchronous tasks Distributed Systems 48 Chapter Summary distributed systems extend the functionality of computers connected through networks location independence and location transparency are important aspects of distributed systems distribution of data and computation can achieve better resource utilization and performance many aspects of distributed systems are more complex than for local systems © 2000 Franz Kurfess Distributed Systems 49