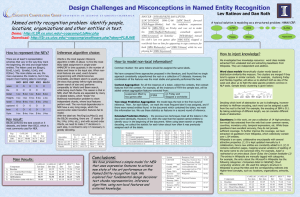

Posters - Cognitive Computation Group

advertisement

Design Challenges and Misconceptions in Named Entity Recognition Lev Ratinov and Dan Roth The Named entity recognition problem: identify people, locations, organizations and other entities in text. A typical solution is modeled as a structured problem: HMM/CRF: Demo: http://L2R.cs.uiuc.edu/~cogcomp/demos.php Download: http://L2R.cs.uiuc.edu/~cogcomp/asoftware.php How to represent NE’s? There are at least 5 representation schemas that vary in the way they mark the beginning, the end and single-token entities. The letters used are B(egin), E(nd), L(ast), I(nside), U(nit), and O(ther). The more states we use, the more expressive the model is, but it may require more training data to converge. BIO1 BIO2 IOE1 IOE2 BILOU retain O O O O O the O O O O O Golan I-loc B-loc I-loc Heights I-loc I-loc Israel B-loc B-loc I-loc B-loc B-loc E-loc E-loc L-loc E-loc U-loc captured O O O O O from O O O O O Syria I-loc B-loc I-loc Key result: E-loc U-loc BILOU performs the best overall on 5 datasets, and better than BIO2, which is most commonly used for NER. Main Results: Choice of Inference algorithm Given that NER is a sequential problem, Viterbi is the most popular inference algorithm in NER. It computes the most likely label assignment in a single-order HMM/CRF in time that is quadratic in the number of states and linear in the input size. When nonlocal features are used, exact dynamic programming with Viterbi becomes intractable, and Beam search is usually used. We show that greedy left-to-right decoding (beam search of size one) performs comparably to Viterbi and Beam search, while being much faster. The reason is that in NER, short NE-chunks are separated by many O-labeled tokens, which “break” the likelihood maximization problem to short independent chunks, where classifiers with local features perform well. The non-local dependencies in NER have a very long range, which the second-order state transition features fail to model. With the label set: Per/Org/Loc/Misc/O, and the BILOU encoding, there are 17 states (BPer, I-Per, U-Per, etc.), and with second order Viterbi, we need to make 173 decisions in each step, in contrast to only 17 necessary in greedy decoding. … B-PER I-PER B-ORG … Reggie Blinker Feyenoord How to inject knowledge? How to model non-local information? Common intuition: multiple occurrences of the same token should be assigned the same labels. We have compared three approaches proposed in the literature, and found that no single approach consistently outperformed the rest on a collection of 5 datasets. However, the combination of the approaches was the most stable and generally performed the best. Context Aggregation: collect the contexts for all instances of a token, extract features from all contexts and use them for all instances. For example, all the instances of FIFA in the sample text, will be added context aggregation features extracted from: suspension lifted by two games after FIFA We investigated two knowledge resources: word class models extracted from unlabeled text and extracting Gazetteers from Wikipedia. The approaches are independent and the contribution can be accumulated. Word class models hierarchically cluster words based on distribution-similarity-like measure. Two clusters are merged if they tend to appear in similar contexts. For example, clustering Friday and Monday together, allows us to abstract both as day of the week and helps dealing with the data sparsity problem. A sample binary clustering is given below: on Friday and Slapped a worldwide Two-stage Prediction Aggregation: the model tags the text in the first round of inference. Then , for each token, we mark the most frequent label it assigned among the multiple occurrences, and if there were named entities found in the document which contained the token, we mark this information too. We use these statistics as features in a second round of inference. Extended Prediction History: the previous two techniques treat all the tokens in the document identically. However, it is often the case that the easiest named entities to identify occur at the beginning of the document. When using beam search or greedy decoding, we collect, for each token, statistic on how often it was previously assigned each of the labels. Conclusions We have presented a conditional model for NER that uses averaged perceptron (implementation: Learning Based Java (LBJ): http://L2R.cs.uiuc.edu/~cogcomp/asoftware.php) with expressive features to achieve new state of the art performance on the Named Entity recognition task. We explored four fundamental design decisions: text chunks representation, inference algorithm, non-local features and external knowledge. Supported by NSF grant SoD-HCER-0613885, by MIAS, a DHS-IDS Center for Multimodal Information Access and Synthesis at UIUC and by the National Library of Congress project NDIIPP Deciding which level of abstraction to use is challenging; however, similarly to Hoffman encoding, each word can be assigned a path from the root to the leaf. For example, the encoding of IBM is 011. By taking substrings of different lengths, we can consider different levels of abstraction. Gazetteers: We use a collection of 14 high-precision, low-recall lists extracted from the web that cover common names, countries, monetary units, temporal expressions, etc. While these gazetteers have excellent accuracy, they do not provide sufficient coverage. To further improve the coverage, we have extracted 16 gazetteers from Wikipedia, which collectively contain over 1.5M entities. Wikipedia is an open, collaborative encyclopedia with several attractive properties. (1) It is kept updated manually by its collaborators, hence new entities are constantly added to it. (2) It contains redirection pages, mapping several variations of spelling of the same name to one canonical entry. For example, Suker is redirected to an entry about Davor Šuker, the Croatian footballer. (3) The entries in Wikipedia are manually tagged with categories. For example, the entry Microsoft in Wikipedia has the following categories: Companies listed on NASDAQ; Cloud computing vendors; etc. We used the category structure in Wikipedia to group the titles and the corresponding redirects into Higher-level concepts, such as: locations, organizations, artworks, etc.