Introduction - Computer Science Department


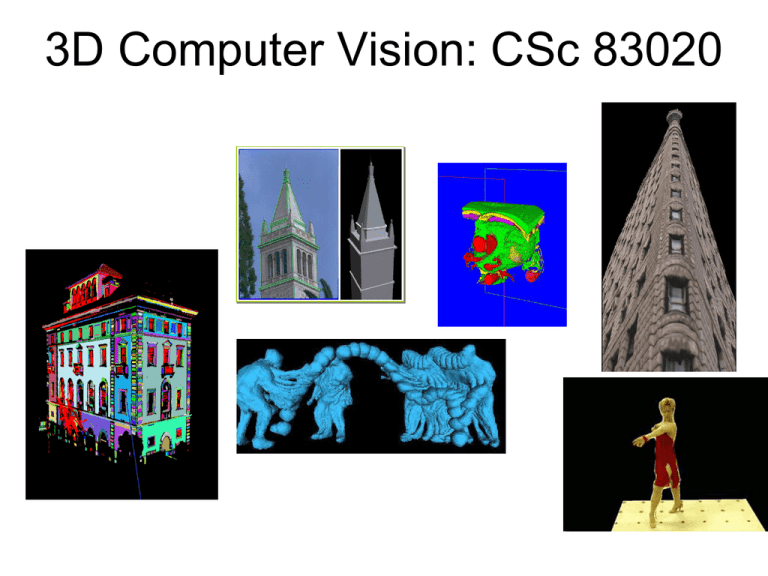

3D Computer Vision: CSc 83020

Instructor: Ioannis Stamos
istamos (at) hunter.cuny.edu
http://www.cs.hunter.cuny.edu/~ioannis
Office Hours: Tuesdays 4-6 (at Hunter) or by appointment
Office: 1090G Hunter North (69th Street between Park and Lex.)
Computer Vision Lab: 1090E Hunter North
Course web page: http://www.cs.hunter.cuny.edu/~ioannis/3D_f12.html

Goals
• To familiarize you with the basic techniques and jargon in the field
• To enable you to solve computer vision problems
• To let you experience (and appreciate!) the difficulties of real-world computer vision
• To get you excited!

Class Policy
• You have to
  – Turn in all assignments (60% of grade)
  – Complete a final project (30% of grade)
  – Actively participate in class (10% of grade)
• Late policy
  – Six late days (but not for the final project)
• Teaming
  – For the final project you can work in groups of 2

About me
• 11th year at Hunter and the Graduate Center
• Graduated from Columbia in ’01 – CS Ph.D.
• Research Areas:
  – Computer Vision
  – 3D Modeling
  – Computer Graphics

Books
• Computer Vision: Algorithms and Applications, Richard Szeliski, 2010 (available online for free)
• Robot Vision, B. K. P. Horn, The MIT Press (great classic book)
• Introductory Techniques for 3-D Computer Vision, Emanuele Trucco and Alessandro Verri, Prentice Hall, 1998 (algorithmic perspective)
• Computer Vision: A Modern Approach, David A. Forsyth and Jean Ponce, Prentice Hall, 2003
• An Invitation to 3-D Vision, Yi Ma, Stefano Soatto, Jana Kosecka, and S. Shankar Sastry, Springer, 2004
• Three-Dimensional Computer Vision: A Geometric Viewpoint, Olivier Faugeras, The MIT Press, 1996

Journals/Web
• International Journal of Computer Vision.
• Computer Vision and Image Understanding.
• IEEE Trans. on Pattern Analysis and Machine Intelligence.
• SIGGRAPH (mostly Graphics)
• http://www.ri.cmu.edu/ (CMU’s Robotics Institute)
• http://www.cs.cmu.edu/~cil/vision.html (The Vision Home Page)
• http://www.dai.ed.ac.uk/CVonline/ (CV Online)
• http://iris.usc.edu/Vision-Notes/bibliography/contents.html (Annotated CV Bibliography)

Class History
• Based on a class taught at Columbia University by Prof. Shree Nayar.
• New material reflects a modern approach.
• Taught a similar class at Hunter.
• Taught a “3D Photography” class at the Graduate Center of CUNY.
• My active research area
  – Funded by the National Science Foundation

Class Schedule
• Check class website
• Final project proposals
  – Due Nov. 7
  – Design your own or check the list of possible projects on the class website
• Final project presentations and report
  – May 16 (last class)

What is Computer Vision?
Diagram labels: Illumination, Physical 3D World, Sensors, Images or Video, Vision System, Scene Description

Measuring Visual Information
(slides courtesy of Michael Cohen)
• Computer Graphics
  – Inputs: Model, Synthetic Camera
  – Output: Image
• Computer Vision
  – Inputs: Real Scene, Real Cameras
  – Output: Model
• Combined
  – Real Scene, Real Cameras, Model, Synthetic Camera
  – Output: Image

Cont.
• Vision is automating visual processes (Ball & Brown).
• Vision is an information processing task (Marr).
• Vision is inverting image formation (Horn).
• Vision is inverse graphics.
• Vision looks easy, but is difficult.
• Vision is difficult, but it is fun (Kanade).
• Vision is useful.

Some Applications
• Industrial
  – Material Handling
  – Inspection
  – Assembly
• Autonomous Navigation
• Vision for Graphics
  – Film Industry
  – Urban Planning
  – E-commerce
  – Virtual Reality
• Realistic 3D experience
  – Google Earth http://earth.google.com/
  – Microsoft Photosynth http://labs.live.com/photosynth/

More Applications!
• Optical Character Recognition (OCR)
• Visual Databases (images or movies)
  – Searching for image content
• Face Recognition (security)
• Iris Recognition (security)
• Traffic Monitoring Systems
• Many more…

Vision deals with images

Images Look Nice…
Ioannis Stamos – CSc 83020 Spring 2007

...Essentially a 2D array of numbers
107 132 107 107 132 99 132 107 132 99 107 132 99 107 132 91 107 132 99 132 99 107 107 132 99 132 107 132 107 132 91 107 132 107 132 99 107 132 107 132 107 99 132 99 132 99 132 99 132 124 132 99 132 107 132 132 107 132 124 132 132 124 132 150 107 150 150 132 150 132 150 132 150 107 150 132 124 132 132 150 107 99 132 132 107 132 107 132 150 132 150 99 132 107 150 132 107 150 132 124 132 132 107 150 99 150 107 150 132 107 150 132 124 132 150 115 124 132 150 107 132 150 132 150 150 107 132 116 132 124 132 107 99 150 132 107 132 150 132 124 132 150 107 150 107 132 99 132 107 150 132 150 107 150 132 150 150 107 107 150 150 150 150 115 167 107 150 107 132 150 107 150 132 124 132 124 132 124 132 124 132 150 107 150 107 107 132 116 132 150 132 150 107 150 150 132 150 132 116 132 124 132 150 132 150 150 150 132 116 132 116 107 132 99 150 150 132 107 132 150 107 150 132 124 132 116 132 107 150 132 107 150 132 150 107 150 107 132

Low-Level or “Early” Vision
• Considers local properties of an image
“There’s an edge!”
From: Szymon Rusinkiewicz, Princeton.

Mid-Level Vision
• Grouping and segmentation
“There’s an object and a background!”

High-Level Vision
• Recognition
“It’s a chair!”

Humans
Vision is easy for us. But how do we do it?
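
The slides above describe an image as "essentially a 2D array of numbers" and low-level vision as considering local properties of that array ("There's an edge!"). A minimal sketch of both ideas follows, in plain Python. The pixel values, the function names `horizontal_differences` and `edge_columns`, and the threshold are all illustrative assumptions and do not come from the slides; a simple adjacent-pixel difference stands in for whatever edge detector the course actually covers.

```python
# A tiny grayscale image stored as a list of rows.
# Values are made up for illustration; they are not the slide's grid.
image = [
    [100, 100, 100, 150, 150],
    [100, 100, 100, 150, 150],
    [100, 100, 100, 150, 150],
]


def horizontal_differences(img):
    """For each row, return the differences I(x + 1) - I(x) between neighbours."""
    return [[row[x + 1] - row[x] for x in range(len(row) - 1)] for row in img]


def edge_columns(img, threshold):
    """Return sorted column indices x where |I(x + 1) - I(x)| > threshold in any row."""
    diffs = horizontal_differences(img)
    return sorted({x for row in diffs for x, d in enumerate(row) if abs(d) > threshold})


print(horizontal_differences(image)[0])   # [0, 0, 50, 0]
print(edge_columns(image, threshold=25))  # [2]
```

The only large difference sits between columns 2 and 3, which is where the intensity jumps from 100 to 150. That is a purely local property of the array, in the sense the low-level vision slide uses the term.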
Human Vision: Illusions
Fraser’s spiral (Fraser 1908)

Illusions
• Zöllner Illusion (1860)
• Hering Illusion (1861)
• Wundt Illusion (1896)

Visual Ambiguities
Young-Girl/Old-Woman

Seeing and Thinking
Kanizsa (1979)

Syllabus Overview

Image Formation and Optics
Projection of 3-D World on a 2-D plane
Diagram labels: Light Source, Surface normal, Object Surface, P, Lens, CCD Array, p

Lenses
Diagram labels: Ray of light, Optical Axis

Image Sensors/Camera Models
Convert Optical Images To Electrical Signals.
Typical 512x512 CCD array
• Imaging area: 262,144 pixels
• Side length: 512 pixels (10.25 mm)
• One pixel: 20 μm × 20 μm

Filtering
$g = f * h$
$g(i, j) = \sum_{u} \sum_{v} f(u, v)\, h(i - u, j - v)$

Image Features
Detecting intensity changes in the image

Grouping image features
Finding continuous lines from edge segments

Camera Calibration
• Extrinsic Parameters
• Intrinsic Parameters
Diagram labels: World Coordinate Frame (Xw, Yw, Zw), Camera Coordinate Frame (Xc, Yc, Zc), Image Coordinate Frame, Pixel Coordinates

Shape from X
• Shape from X
  – Stereo
  – Motion
  – Shading
  – Texture (foreshortening)

Binocular Stereo
depth map

Active Sensing
Sources of error: 1) grazing angle, 2) object boundaries.
Diagram labels: Sheet of light, Lens, CCD array

Shape from Shading
Three-dimensional shape from a single image.

Motion (optical flow)
Determining the movement of scene objects

Reflectance and Color
Why do these spheres look different?

Object Recognition
Learning visual appearance.
Real-time object recognition.

Template-Based Methods
Cootes et al.
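
The Filtering slide in the syllabus overview expresses filtering as a discrete 2D convolution, g(i, j) = sum over u and v of f(u, v) h(i - u, j - v). The sketch below is one direct, unoptimized way to evaluate that sum in plain Python. The function name `convolve2d`, the "full" output size, and the sample arrays are assumptions made for illustration; they are not the course's reference implementation.

```python
def convolve2d(f, h):
    """Full 2D discrete convolution: g(i, j) = sum_u sum_v f(u, v) * h(i - u, j - v).

    f and h are non-empty lists of equal-length rows. The result has
    (rows_f + rows_h - 1) rows and (cols_f + cols_h - 1) columns.
    """
    f_rows, f_cols = len(f), len(f[0])
    h_rows, h_cols = len(h), len(h[0])
    g = [[0] * (f_cols + h_cols - 1) for _ in range(f_rows + h_rows - 1)]
    for u in range(f_rows):
        for v in range(f_cols):
            for a in range(h_rows):
                for b in range(h_cols):
                    # With i = u + a and j = v + b, h[a][b] is h(i - u, j - v).
                    g[u + a][v + b] += f[u][v] * h[a][b]
    return g


f = [[1, 2],
     [3, 4]]
identity = [[1]]          # convolving with a unit impulse returns f unchanged
box = [[1, 1],
       [1, 1]]            # unnormalized 2x2 box filter

print(convolve2d(f, identity))  # [[1, 2], [3, 4]]
print(convolve2d(f, box))       # [[1, 3, 2], [4, 10, 6], [3, 7, 4]]
```

Scattering each f(u, v) into the output, rather than gathering for each (i, j), avoids explicit bounds checks on h. Practical code would normally call an optimized library routine and choose a boundary-handling mode to suit the task.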
Some Vision Systems…

Example 2: Structure From Motion
Slide courtesy of Sebastian Thrun, Stanford, http://cs223b.stanford.edu
http://www.cs.unc.edu/Research/urbanscape

Example 4: 3D Modeling
Drago Anguelov
Slide courtesy of Sebastian Thrun, Stanford, http://cs223b.stanford.edu

Example 6: Classification
Slide courtesy of Sebastian Thrun, Stanford, http://cs223b.stanford.edu

Real-world Applications
Osuna et al.

Range Scanning Outdoor Structures

Data Acquisition
• Spot laser scanner.
• Time of flight.
• Max Range: 100 m.
• Scanning time: 20 minutes for 1000 x 1000 points.
• Accuracy: 6 mm.

Video
• Latest Video
• Inserting models in Google Earth

Dynamic Scenes
• Image sequence (CMU, Virtualized Reality Project)
• Dynamic 3D model.
• Dynamic texture-mapped model.

Scanning the David
Marc Levoy, Stanford
• Height of gantry: 7.5 meters
• Weight of gantry: 800 kilograms

Head of Michelangelo’s David
• Photograph
• 1.0 mm computer model

What do you think?
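
As a rough sanity check on the Data Acquisition figures above (time of flight, 100 m maximum range, 1000 x 1000 points in 20 minutes, 6 mm accuracy), the sketch below works out the implied point rate and the round-trip timings. It assumes a simple pulsed time-of-flight model in which range is half the round-trip distance travelled at the speed of light. The slides do not say how the scanner actually measures time, so the timing numbers are an illustration and not a description of the device. The function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact by definition)


def round_trip_time(distance_m):
    """Time for light to travel to a target at distance_m and back, in seconds."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


def points_per_second(num_points, minutes):
    """Average acquisition rate for a scan of num_points lasting the given minutes."""
    return num_points / (minutes * 60.0)


rate = points_per_second(1000 * 1000, 20)  # average rate over the whole scan
t_max = round_trip_time(100.0)             # round trip at the 100 m maximum range
dt = round_trip_time(0.006)                # round-trip time equivalent to 6 mm of range

print(f"{rate:.1f} points/s")                   # 833.3 points/s
print(f"{t_max * 1e9:.0f} ns at max range")     # 667 ns at max range
print(f"{dt * 1e12:.0f} ps per 6 mm of range")  # 40 ps per 6 mm of range
```

Under this assumed model, resolving 6 mm in a single measurement corresponds to timing differences of about 40 picoseconds. That gives a sense of why range accuracy is a demanding specification for time-of-flight sensing.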