Metaheuristics Implementation


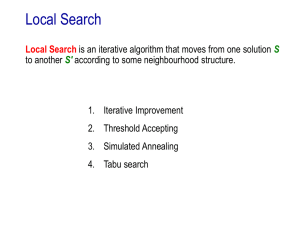

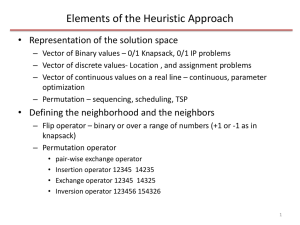

Metaheuristics
• The idea: search the solution space directly. There is no mathematical model, only a set of algorithmic steps (an iterative method): find a feasible solution and improve it. A greedy solution may be a good starting point.
• Goal: find a near-optimal solution within a given time bound.
• Applied to combinatorial and constraint optimization problems.
• Diversification and intensification are the two search strategies in metaheuristics, and one must strike a balance between them: too much of either yields poor solutions. You have only a limited amount of time to search, yet you are looking for a good-quality solution (the quality vs. time tradeoff).
  – For design decisions (e.g., high-cost investments), focus on high-quality solutions and take more time.
  – For control/operational decisions that are made quickly and frequently (e.g., scheduling), look for good-enough solutions in very limited time.
  – Trajectory-based methods are heavy on intensification, while population-based methods are heavy on diversification.
• Trajectory-based methods (single-solution-based methods)
  – The search process is characterized by a trajectory in the search space.
  – It can be viewed as the evolution of a solution in (discrete) time of a dynamical system.
  – Examples: tabu search, simulated annealing, iterated local search, variable neighborhood search, guided local search.
• Population-based methods
  – Every step of the search process has a population, i.e., a set of solutions.
  – It can be viewed as the evolution of a set of solutions in (discrete) time of a dynamical system.
  – Examples: genetic algorithms, swarm intelligence (ant colony optimization, bee colony optimization), scatter search.
• Hybrid methods
• Parallel metaheuristics: parallel and distributed computing; independent and cooperative search.
You will learn these techniques through several examples.

S-Metaheuristics – single-solution based
• Idea: improve a single solution.
• These methods are viewed as walks through neighborhoods in the search space (solution space).
• The walks are performed via iterative procedures that move from the current solution to the next one.
• Each iteration consists of generation and replacement from a single current solution.
  – Generation phase: a set of candidate solutions C(s) is generated from the current solution s by local transformation.
  – Replacement phase: a solution s′ is selected from C(s) such that the objective function f(s′) is better than f(s).
• The iterative process continues until a stopping criterion is reached.
• The generation and replacement phases may be memoryless; otherwise, some history of the search is stored for further generation of candidate solutions.
• Key elements: define the neighborhood structure and the initial solution.
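The generation and replacement loop above can be sketched as best-improvement hill climbing, the simplest S-metaheuristic. This minimal Python sketch assumes a binary representation with the 1-flip neighborhood and borrows the objective $f(x) = x^3 - 60x^2 + 900x$ from the 5-bit local-search example in these slides; the helper names (`decode`, `flip_neighborhood`, `hill_climb`) are illustrative, not part of the original material.

```python
from typing import Callable, List, Tuple

# Illustrative helper names; not taken from the original slides.
Bits = Tuple[int, ...]


def f(x: int) -> int:
    """Objective from the local-search example: f(x) = x^3 - 60x^2 + 900x."""
    return x**3 - 60 * x**2 + 900 * x


def decode(bits: Bits) -> int:
    """Read a bit tuple as an unsigned binary integer, most significant bit first."""
    value = 0
    for b in bits:
        value = 2 * value + b
    return value


def score(bits: Bits) -> int:
    """Objective value of a binary-encoded solution."""
    return f(decode(bits))


def flip_neighborhood(s: Bits) -> List[Bits]:
    """Generation phase: C(s) = all solutions at Hamming distance 1 from s."""
    return [s[:i] + (1 - s[i],) + s[i + 1:] for i in range(len(s))]


def hill_climb(s: Bits, objective: Callable[[Bits], int]) -> List[Bits]:
    """Best-improvement hill climbing (maximization); returns the visited trajectory."""
    trajectory = [s]
    while True:
        candidates = flip_neighborhood(s)        # generation
        best = max(candidates, key=objective)    # best improvement
        if objective(best) <= objective(s):      # stopping criterion: local optimum
            return trajectory
        s = best                                 # replacement
        trajectory.append(s)


path = hill_climb((1, 0, 0, 0, 1), score)
print([decode(s) for s in path])        # [17, 16]
print(score(path[-1]))                  # 3136
print(max(range(32), key=f), f(10))     # 10 4000
```

Starting from 10001 (17), the walk stops at the local optimum 10000 (16), while an exhaustive check over all 32 values finds the global optimum 01010 (10). Taking the first improving candidate instead of `max` would turn this into first-improvement hill climbing.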

Neighborhood
• Representation of solutions
  – Vector of binary values: 0/1 knapsack, 0/1 IP problems
  – Vector of discrete values: location and assignment problems
  – Vector of continuous values on a real line: continuous, parameter optimization
  – Permutation: sequencing, scheduling, TSP
• k-distance
  – For discrete values, the neighbors of s are the solutions s′ with distance $d(s, s') < \varepsilon$; for continuous values, the neighborhood is a sphere of radius d around s.
  – For a binary vector of size n, the 1-distance neighborhood of s has n neighbors (flip one bit at a time). Ex: on a hypercube, the neighbors of 000 are 100, 010 and 001.
• For permutation-based representations
  – k-exchange (swapping) or k-opt operator (for TSP)
    • Ex: 2-opt: for permutations of size n, the size of the neighborhood is $n(n-1)/2$. The neighbors of 231 are 321, 213 and 132.
  – For scheduling problems:
    • Insertion operator: 12345 → 14235
    • Exchange operator: 12345 → 14325
    • Inversion operator: 123456 → 154326

Initial solution and objective function
• Random or greedy
• Or hybrid
• In most cases, starting with a greedy solution reduces computational time and yields better solutions, but not always.
• Sometimes random solutions may be infeasible.
• Sometimes expertise is used to generate initial solutions.
• For population-based metaheuristics, a combination of greedy and random solutions is a good strategy.
• Complete vs. incremental evaluation of the objective function

Distance and Landscape
• For binary representations and the flip move operator
  – For a problem of size n, the search space size is $2^n$ and the maximum distance in the search space is n (obtained by flipping all n values).
• For permutation representations and the exchange move operator
  – For a problem of size n, the search space size is $n!$ and the maximum distance between two permutations is $n-1$.
• Landscape: flat, plain; basin, valley; rugged, plain; rugged, valley

Local search
• Hill climbing (descent), iterative improvement
• Select an initial solution.
• Select a neighbor that improves the solution (objective function):
  – Best improvement (steepest ascent/descent): exhaustive exploration of the neighborhood (all possible moves); pick the move with the largest improvement.
  – First improvement: partial exploration of the neighborhood; accept the first improving neighbor found.
  – Random selection: evaluate a few randomly selected neighbors and select the best among them.
• A great method if there are not too many local optima.
• Issues: the search time depends on the initial solution, and the method performs poorly when there are many local optima.

Local search
• Maximize $f(x) = x^3 - 60x^2 + 900x$.
• Use a binary (5-bit) representation with the flip neighborhood.
• Starting solution: 10001 = $2^4 + 2^0 = 17$, $f(17) = 2873$.
• Find the neighbors of 10001: 00001 (f = 841), 11001 (f = 625), 10101 (f = 1701), 10011 (f = 2299), 10000 (f = 3136).
• Solution: the local optimum is 10000 = 16, $f(16) = 3136$.
• But the global optimum is 01010 = 10, $f(10) = 4000$.

Escaping local optima
• Accept non-improving neighbors
  – Tabu search and simulated annealing
• Iterate with different initial solutions
  – Multistart local search, greedy randomized adaptive search procedure (GRASP), iterated local search
• Change the neighborhood
  – Variable neighborhood search
• Change the objective function or the input data of the problem in an effort to solve the original problem more effectively
  – Guided local search

Tabu search – Job-shop scheduling problems
• Single machine, n jobs, minimize total weighted tardiness; a job, once started, must be completed; NP-hard problem with n! solutions.
• Completion time $C_j$
• Due date $d_j$
• Processing time $p_j$
• Weight $w_j$
• Release date $r_j$
• Tardiness $T_j = \max(C_j - d_j, 0)$
• Total weighted tardiness $= \sum_j w_j T_j$
• The value of the best schedule found so far serves as the aspiration criterion: a tabu move is still allowed if it improves on this value.
• Tabu list: the swaps made in a fixed number of previous moves (usually between 5 and 9 for large problems). Too few may result in cycling; too many may constrain the search unduly.
• Tabu tenure of a move: the number of iterations for which the move is forbidden.

Tabu search – Job-shop scheduling example
• Single machine, 4 jobs, minimize total weighted tardiness; a job, once started, must be completed; NP-hard problem with 4! = 24 solutions.
• Use the adjacent pairwise interchange (flip) operator.
• Tabu list: the last two moves cannot be swapped again.

| Job j | $p_j$ | $d_j$ | $w_j$ |
|---|---|---|---|
| 1 | 10 | 4 | 14 |
| 2 | 10 | 2 | 12 |
| 3 | 13 | 1 | 1 |
| 4 | 4 | 12 | 12 |

• Initial solution: 2143, total weighted tardiness = 500.
• Stopping criterion: stop after a fixed number of moves, or after a fixed number of moves shows no improvement over the current best solution.

Tabu search
• Static and dynamic size of the tabu list
• Short-term memory: stores the recent history of solutions to prevent cycling. Not very popular because of its high data storage requirements.
• Medium-term memory: intensifies the search around the best solutions found. It is only as effective as the landscape structure allows; e.g., intensification around a basin is useless.
• Long-term memory: encourages diversification. It keeps an account of the frequency of solutions (often from the start of the search) and steers the search toward the least frequently visited regions.
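The 4-job example can be run end to end with a small tabu search. This Python sketch assumes the tabu list stores the pair of jobs exchanged by each move (tenure 2, matching "the last two moves cannot be swapped again"), uses the best schedule found so far as the aspiration criterion, and stops after a fixed number of moves (10 here, an arbitrary choice). The names `JOBS`, `weighted_tardiness` and `tabu_search` are illustrative, not from the slides.

```python
from collections import deque
from itertools import permutations
from typing import Dict, Tuple

Schedule = Tuple[int, ...]

# Instance from the slide: job j -> (p_j, d_j, w_j). Illustrative names throughout.
JOBS: Dict[int, Tuple[int, int, int]] = {
    1: (10, 4, 14),
    2: (10, 2, 12),
    3: (13, 1, 1),
    4: (4, 12, 12),
}


def weighted_tardiness(seq: Schedule) -> int:
    """Total weighted tardiness: sum of w_j * max(C_j - d_j, 0) on one machine."""
    t = total = 0
    for j in seq:
        p, d, w = JOBS[j]
        t += p                      # completion time C_j (jobs run back to back)
        total += w * max(t - d, 0)  # w_j * T_j
    return total


def tabu_search(start: Schedule, tenure: int = 2, max_iters: int = 10) -> Tuple[Schedule, int]:
    """Tabu search with adjacent pairwise interchange moves (minimization)."""
    current = best = tuple(start)
    best_cost = weighted_tardiness(best)
    tabu: deque = deque(maxlen=tenure)   # job pairs swapped in the last `tenure` moves
    for _ in range(max_iters):           # stopping criterion: fixed number of moves
        chosen = None
        for i in range(len(current) - 1):
            pair = frozenset(current[i:i + 2])
            cand = current[:i] + (current[i + 1], current[i]) + current[i + 2:]
            cost = weighted_tardiness(cand)
            # Skip a tabu move unless it beats the best schedule so far (aspiration)
            if pair in tabu and cost >= best_cost:
                continue
            if chosen is None or cost < chosen[1]:
                chosen = (cand, cost, pair)
        if chosen is None:               # every move is tabu: stop early
            break
        current, cost, pair = chosen     # move even if it is worse than current
        tabu.append(pair)                # the oldest pair drops out automatically
        if cost < best_cost:
            best, best_cost = current, cost
    return best, best_cost


print(weighted_tardiness((2, 1, 4, 3)))                  # 500
print(tabu_search((2, 1, 4, 3)))                         # ((1, 4, 2, 3), 408)
print(min(permutations(JOBS), key=weighted_tardiness))   # (1, 4, 2, 3)
```

From 2143 (500) the search moves to 2413 (436), which is a local optimum under adjacent interchange, then accepts the worse schedules 4213 (460) and 4123 (440) before reaching 1423 (408). Those non-improving moves are what let tabu search escape the local optimum; a brute-force check over all 24 sequences agrees that 1423 is optimal for this instance.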