Synthesized Images

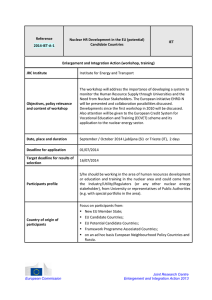

Shaun S. Gleason¹, Mesfin Dema², Hamed Sari-Sarraf², Anil Cheriyadat¹, Raju Vatsavai¹, Regina Ferrell¹

¹Oak Ridge National Laboratory, Oak Ridge, TN

²Texas Tech University, Lubbock, TX

Outline: Motivation, Existing Approaches, Proposed Approach, Generative Model Formulation, General Framework, Preliminary Results, Conclusions

Automated identification of complex facilities in aerial imagery is an important and challenging problem. In our application area, nuclear proliferation, the facilities of interest can be complex. Such facilities are characterized by:

- the presence of known structures,
- their spatial arrangement,
- their geographic location, and
- their location relative to natural resources.

Development, verification, and validation of semantic classification algorithms for such facilities are hampered by the lack of available sample imagery with ground truth.

[Figure labels: Switch Yard, Containment Building, Turbine Building, Cooling Towers]

Semantics: a set of objects (such as a switchyard, containment building, turbine generator, and cooling towers) together with their spatial arrangement may imply a semantic label such as “nuclear power plant”.

Many algorithms are being developed to extract and classify regions of interest from images, such as in [1]. Verification and validation (V&V) of these algorithms has not kept pace with their development because image datasets with high-accuracy ground truth annotations are lacking. The community needs techniques that can provide images with accurate ground truth annotation at low cost.

[1] S.S. Gleason, et al., “Semantic Information Extraction from Multispectral Geospatial Imagery via a Flexible Framework,” IGARSS, 2010.

Manual ground truth annotation of images:

- Very tedious for large volumes of images.
- Highly subjective.

Using synthetic images with corresponding ground truth data: Digital Imaging and Remote Sensing Image Generation (DIRSIG) [2]

- Capable of generating hyperspectral images in the range of 0.4-20 microns.
- Capable of generating accurate ground truth data.
- Very tedious 3D scene construction stage.
- Incapable of producing training images in sufficient quantities.

[2] Digital Imaging and Remote Sensing Image Generation (DIRSIG): http://www.dirsig.org/.

In [3,4], researchers attempted to partially automate the cumbersome 3D scene construction of the DIRSIG model:

- A LIDAR sensor is used to extract 3D objects from a given location.
- Other modalities are used to correctly identify object types.
- 3D CAD models of objects and object locations are extracted.
- The extracted CAD models are placed at their respective positions to reconstruct the 3D scene and, finally, to generate a synthetic image with corresponding ground truth.

The availability of 3D model databases, such as Google SketchUp [5], reduces the need for approaches like [3,4].

[3] S.R. Lach, et al., “Semi-automated DIRSIG Scene Modeling from 3D LIDAR and Passive Imaging Sources,” in Proc. SPIE Laser Radar Technology and Applications XI, vol. 6214, 2006.

[4] P. Gurram, et al., “3D scene reconstruction through a fusion of passive video and Lidar imagery,” in Proc. 36th AIPR Workshop, pp. 133–138, 2007.

[5] Google SketchUp: http://sketchup.google.com/.

To generate synthetic images with ground truth annotation at low cost, we need a system that can learn from a few training examples. This system must be generative so that one can sample a plausible scene from the model. It must also be capable of producing synthetic images with corresponding ground truth data in sufficient quantity.

Our contribution to the problem is two-fold:

- We incorporated expert knowledge into the problem with little effort.
- We adapted a generative model to the synthetic image generation process.

[Figure: facility hierarchy [6]. Node labels: Nuclear Power Plant, Switchyard, Reactor, Cooling Tower, Turbine Building, CT Type 1, Building, CT Type 2]

[6] S.C. Zhu and D. Mumford, “A Stochastic Grammar of Images,” Foundations and Trends in Computer Graphics and Vision, 2(4), pp. 259–362, 2006.

Given observed constraints (i.e.
the hierarchical and contextual information) of an unobserved distribution $f$, the probability distribution $p$ that best approximates $f$ is the one with maximum entropy [7,8]:

$$p^*(X) = \arg\max_{p} \left\{ -\sum_{x \in X} p(x) \log p(x) \right\}$$

subject to the feature constraints for the Or nodes and the And nodes:

$$E_f\left[\phi^{(\alpha)}(X)\right] = E_p\left[\phi^{(\alpha)}(X)\right], \qquad E_f\left[\phi^{(\beta)}(X)\right] = E_p\left[\phi^{(\beta)}(X)\right]$$

[7] J. Porway, et al., “Learning Compositional Models for Object Categories From Small Sample Sets,” 2009.

[8] J. Porway, et al., “A Hierarchical and Contextual Model for Aerial Image Parsing,” 2010.

Gibbs distribution:

$$p(I; \Theta, S) = \frac{1}{Z(\Theta)} \exp\left\{ -\mathcal{E}(I; \Theta, S) \right\}$$

$$\mathcal{E}(I; \Theta, S) = \sum_{i=1}^{N_{\mathrm{Or}}} \left\langle \lambda_i^{(\alpha)}, H_{i,p}^{(\alpha)}(I) \right\rangle + \sum_{j=1}^{N_{\mathrm{And}}} \left\langle \lambda_j^{(\beta)}, H_{j,p}^{(\beta)}(I) \right\rangle$$

Parameter learning: [Equation not recoverable from the extracted slide text. The surviving terms are an iterative update from step $t$ to step $t+1$, a logarithm, the model histogram $H_{j,p}$, and the observed histograms $H_{i,f}$ and $H_{j,f}$.]

[Figure: general framework, referencing Google SketchUp [5] and POV-Ray [9]]

[9] Persistence of Vision Raytracer (POV-Ray): http://www.povray.org/.

We are currently working with experts on annotating training images of nuclear power plant sites. To demonstrate the proposed approach, we use a simple example as a proof of principle. Using this example, we illustrate how the generative framework can sample plausible scenes and, finally, generate synthetic images with corresponding ground truth annotation.

[Figure: orientation-corrected images]

[Figure: orientation-corrected images followed by ellipse fitting]

| No. | Relationship | Function |
|---|---|---|
| 1 | Relative Position in X | $(x_1 - x_2) / \mathrm{Maj}_2$ |
| 2 | Relative Position in Y | $(y_1 - y_2) / \mathrm{Min}_2$ |
| 3 | Relative Major Axis | $\mathrm{Maj}_1 / \mathrm{Maj}_2$ |
| 4 | Relative Minor Axis | $\mathrm{Min}_1 / \mathrm{Min}_2$ |
| 5 | Relative Orientation | $\theta_1 - \theta_2$ |
| 6 | Aspect Ratio | $\mathrm{Maj}_1 / \mathrm{Min}_1$ |

[Figures: sampled configurations before learning and after learning (two slides)]

[Figure: configuration after learning and the corresponding synthesized image]

[Figure: part-level ground truth image and object-level ground truth image]

3D Google SketchUp model of a nuclear plant: Pickering Nuclear Plant, Canada (left), and the model manually overlaid on an image (right).
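The histogram-matching idea behind the parameter learning step can be illustrated with a minimal Python sketch. It is not the authors' implementation. It assumes a single relationship (for example, relative orientation) quantized into four bins, a hypothetical observed histogram `h_obs`, and an iterative-scaling-style update of the form λ ← λ + η log(H_p / H_f), which is an assumption chosen to be consistent with a Gibbs model p(x) ∝ exp(−λ[x]). In the full model the histogram H_p would be estimated from sampled scenes; here it is computed exactly because the toy state space is tiny.

```python
import numpy as np

def model_histogram(lam):
    """Exact bin probabilities of the toy Gibbs model p(x) proportional to exp(-lam[x])."""
    w = np.exp(-(lam - lam.min()))  # shift so the largest weight is 1 (numerical stability)
    return w / w.sum()

def learn_lambda(h_obs, eta=0.5, n_iter=200, eps=1e-12):
    """Assumed update: lam <- lam + eta * log(H_p / H_f), repeated n_iter times."""
    lam = np.zeros_like(h_obs, dtype=float)
    for _ in range(n_iter):
        h_model = model_histogram(lam)
        # Bins the model over-produces get a higher energy, and vice versa.
        lam += eta * np.log((h_model + eps) / (h_obs + eps))
    return lam

# Hypothetical observed histogram of one relationship over 4 bins (illustrative values only).
h_obs = np.array([0.1, 0.6, 0.25, 0.05])

lam = learn_lambda(h_obs)
h_model = model_histogram(lam)

# Sample a few bin indices from the learned model (stand-in for sampling a scene attribute).
rng = np.random.default_rng(0)
samples = rng.choice(len(h_obs), size=5, p=h_model)

print("learned lambda:", np.round(lam, 3))
print("model histogram:", np.round(h_model, 3))
print("sampled bins:", samples)
```

Before learning, all `lam` values are zero and the model histogram is uniform; after learning, it matches the observed histogram, which mirrors the before-learning and after-learning comparison shown in the figures.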
The Maximum Entropy model has proven to be an elegant framework for learning patterns from training data and generating synthetic samples with similar patterns. With the proposed framework, synthetic images with accurate ground truth annotation can be generated at relatively low cost. The proposed approach is very promising for algorithm verification and validation.

The current model generates some results that do not represent a well-learned configuration of objects. We believe that representing constraints as histograms contributes to these invalid results, since some values are averaged out when the histograms are generated. To avoid invalid results, we are studying a divide-and-conquer strategy that introduces on-the-fly clustering. This separates the bad samples from the good ones, which helps tune the parameters during the learning phase.

Funding for this work is provided by the Simulations, Algorithms, and Modeling program within the NA-22 office of the National Nuclear Security Administration, U.S. Department of Energy.