Hidden Conditional Random Fields - Universidade Federal de São

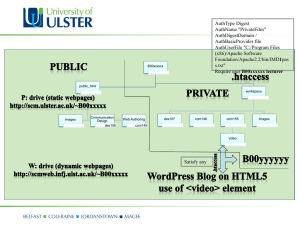

advertisement

- A comparison with Neural Networks and Hidden Markov Models -

César R. de Souza, Ednaldo B. Pizzolato and Mauro dos Santos Anjo

Universidade Federal de São Carlos (Federal University of São Carlos)

IBERAMIA 2012

Cartagena de Índias, Colombia

2012

Context, Motivation, Objectives and

the Organization of this Presentation


Multidisciplinary

Computing and Linguistics

Ethnologue lists about 130 sign

languages existent in the world

(LEWIS, 2009)

Motivation

Objectives

Agenda


Two fronts

Social

Aim to improve quality of life for the

deaf and increase the social inclusion

Scientific

Investigation of the distinct interaction

methods, computational models and

their respective challenges


This paper

Investigate the behavior and applicability of SVMs

and HCRFs in the recognition of specific signs from

the Brazilian Sign Language

Long term

Walk towards the creation of a full-fledged

recognition system for LIBRAS

This work represents a small but important

step in achieving this goal


Introduction

Libras - Brazilian Sign Language

Literature Review

Methods and Tools

Support Vector Machines

Conditional Random Fields

Experiments

Results

Conclusion


Structures and the manual alphabet


Natural language

Not mimics

Not universal

LIBRAS

Difficulties

Grammar

It is not only “a problem of the

deaf or a language pathology”

(QUADROS & KARNOPP, 2004)

Introduction

Libras

Review

Methods

Experiments

Results

Conclusion


Highly context-sensitive

Same sign may have distinct meanings

Interpretation is hard

even for humans


Fingerspelling is only

part of the Grammar

Needed when explicitly spelling

the name of a person or a location

Subset of the full-language

recognition problem


Literature

Layer architectures are common

Static gestures x Dynamic gestures

One of the best works on LIBRAS handles

only the movement aspect of the language

(Dias et al.)


Few studies explore SVMs

But many use Neural Networks

No studies on HCRFs and LIBRAS


Example

Recognition of a fingerspelled word

using a two-layered architecture

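[Figure: the word "Pato" is fingerspelled. A static gesture classifier (ANN) labels each frame, giving the sequence P P P A A A T T T O O, and a sequence classifier (HMM) maps that label sequence to the word.]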


YANG, SCLAROFF and LEE, 2009

Multiple layers, SVMs

Elmezain, 2011

HCRF, in-air drawing recognition


Overview of the chosen techniques

and reasons for their choice


Neural Networks and Support Vector

Machines for the detection of static signs


Find $f(x)$ such that…

[Figure: $f(x)$ maps each hand-posture image to one of the class labels a, b, c.]


Neural Networks

Biologically inspired

McCulloch & Pitts, Rosenblatt, Rumelhart

Support Vector Machines

Maximum Margin

Multiple Classes


Perceptron

Hyperplane decision

Linearly separable problems

Learning is an ill-posed problem

Multiple local minima, ill-conditioning

Layer architecture

Universal approximator


Support Vector Machines

Strong theoretical basis

Statistical Learning Theory

Structural Risk Minimization (SRM)


Large-margin classifiers

Risk minimization through margin maximization

Capacity control through margin control

Sparse solutions considering only a few support vectors


Problem: binary-only classifier

How to generalize to multiple classes?


Problem: binary-only classifier

How to generalize to multiple classes?

Classical approaches      Drawbacks
One-against-all           Only works when equiprobable
One-against-one           Evaluation of c(c-1)/2 machines
Discriminant functions    Non-guaranteed optimum results

With 27 static gestures, this would result in

351 SVM evaluations each time a new

classification is required!


Problem: binary-only classifier

How to generalize to multiple classes?

Directed Acyclic Graphs

Generalization of Decision Trees, allowing for non-directed cycles

Require at most c-1 evaluations

So, for 27 static gestures, only 26 SVM evaluations are required: about 7.4% of the original effort
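
The arithmetic behind these counts can be checked directly. For $c = 27$ classes, one-against-one voting evaluates every pair of classes, while the decision DAG evaluates one machine per eliminated class:

```latex
\frac{c(c-1)}{2} = \frac{27 \cdot 26}{2} = 351,
\qquad
c - 1 = 26,
\qquad
\frac{c-1}{c(c-1)/2} = \frac{2}{c} = \frac{2}{27} \approx 7.4\%
```

The ratio $2/c$ shows that the saving grows with the number of classes.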


Elimination process

One class eliminated at a time

[Figure: decision DAG over the candidate classes A, B, C and D. Each node tests two of the remaining candidates and discards the loser ("A lost", "D lost", and so on), so the candidate list shrinks from A B C D to a single class.]
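
The elimination process can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ddag_classify`, `nearest_prototype` and the one-dimensional `PROTOTYPES` are hypothetical names and toy data standing in for the trained pairwise SVMs, and the rule of always testing the first candidate against the last is one common way to walk the graph, not necessarily the one used in this work.

```python
def ddag_classify(classes, decide, x):
    """Walk a decision DAG: test two candidates, drop the loser, repeat.

    `decide(a, b, x)` stands in for a trained binary machine and must
    return whichever of `a` or `b` it prefers for the input `x`.
    Returns the surviving class and the number of machines evaluated.
    """
    candidates = list(classes)
    evaluations = 0
    while len(candidates) > 1:
        first, last = candidates[0], candidates[-1]
        winner = decide(first, last, x)
        evaluations += 1
        if winner == first:
            candidates.pop()       # the last candidate lost
        else:
            candidates.pop(0)      # the first candidate lost
    return candidates[0], evaluations


# Toy stand-in for the pairwise machines (assumption for illustration):
# each class has a 1-D prototype and the nearest prototype wins.
PROTOTYPES = {"A": 0.0, "B": 1.0, "C": 2.0, "D": 3.0}


def nearest_prototype(a, b, x):
    return a if abs(x - PROTOTYPES[a]) <= abs(x - PROTOTYPES[b]) else b


label, n_evals = ddag_classify("ABCD", nearest_prototype, 2.2)
print(label, n_evals)
```

With four candidates the walk always evaluates three machines, one per eliminated class, which matches the c-1 bound.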


However, no matter the model

We’ll have (extreme) noise due to pose transitions

How can we cope with that?


Hidden Markov Models, Conditional Random

Fields and Hidden Conditional Random Fields for

dynamic gesture recognition.

Find 𝑓(𝑥) such that

given extremely noisy sequences of labels,

estimate the word being signed.

[Figure: $f(x)$ maps noisy label sequences such as "hil", "hmeylrlwo" and "blyrei" to the words "hi", "hello" and "bye".]


Hidden Markov

Models (HMMs)

Conditional Random

Fields (CRFs)

Hidden Conditional

Random Fields (HCRFs)


Hidden Markov Models

Joint probability model of an observation sequence and its relationship with time

$$p(\mathbf{x}, \mathbf{y}) = \prod_{t=1}^{T} p(y_t \mid y_{t-1})\, p(x_t \mid y_t)$$

The transition probabilities $p(y_t \mid y_{t-1})$ form the matrix $A$ and the emission probabilities $p(x_t \mid y_t)$ form the matrix $B$.

Hidden Markov Models

Marginalizing over $\mathbf{y}$, we obtain the observation sequence likelihood

$$p(\mathbf{x}) = \sum_{\mathbf{y}} \prod_{t=1}^{T} p(y_t \mid y_{t-1})\, p(x_t \mid y_t)$$

Which can be used for classification using either the ML or MAP criteria

$$p(\omega_i \mid \mathbf{x}) = \frac{p(\omega_i)\, p(\mathbf{x} \mid \omega_i)}{\sum_{j=1}^{c} p(\omega_j)\, p(\mathbf{x} \mid \omega_j)}$$
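
A minimal sketch of how the likelihood and the class posterior could be computed for discrete observations follows. The sum over all state sequences is exponential in $T$, so the sketch uses the standard forward recursion and checks it against the brute-force sum. Everything here is an assumption for illustration: the function names, the explicit initial distribution `pi` (standing in for $p(y_1 \mid y_0)$), the row-wise convention `A[i][j] = p(y_t = j | y_{t-1} = i)`, and the toy two-word models.

```python
from itertools import product


def forward_likelihood(x, pi, A, B):
    """p(x) for a discrete HMM, computed with the forward recursion."""
    n = len(pi)
    alpha = [pi[i] * B[i][x[0]] for i in range(n)]
    for obs in x[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][obs]
                 for j in range(n)]
    return sum(alpha)


def brute_force_likelihood(x, pi, A, B):
    """p(x) as the explicit sum over every hidden state sequence y."""
    n = len(pi)
    total = 0.0
    for y in product(range(n), repeat=len(x)):
        p = pi[y[0]] * B[y[0]][x[0]]
        for t in range(1, len(x)):
            p *= A[y[t - 1]][y[t]] * B[y[t]][x[t]]
        total += p
    return total


def map_posteriors(x, models, priors):
    """p(w_i | x) from one HMM per word, via Bayes' rule."""
    joint = [prior * forward_likelihood(x, *model)
             for model, prior in zip(models, priors)]
    evidence = sum(joint)
    return [j / evidence for j in joint]


# Two toy word models (pi, A, B) with 2 states and 2 symbols each.
WORD_MODELS = [
    ([0.9, 0.1], [[0.7, 0.3], [0.2, 0.8]], [[0.9, 0.1], [0.2, 0.8]]),
    ([0.5, 0.5], [[0.5, 0.5], [0.5, 0.5]], [[0.1, 0.9], [0.6, 0.4]]),
]
PRIORS = [0.5, 0.5]

x = [0, 0, 1]
posteriors = map_posteriors(x, WORD_MODELS, PRIORS)
best_word = max(range(len(posteriors)), key=posteriors.__getitem__)
print(posteriors, best_word)
```

With equal priors the MAP decision reduces to the ML decision, since the prior cancels in the comparison.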

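One model for each word

[Figure: the observation sequence $\mathbf{x}$ is scored by one model per word (Word 1, Word 2, ..., Word n), giving the likelihoods $p(\mathbf{x} \mid \omega_1), p(\mathbf{x} \mid \omega_2), \ldots, p(\mathbf{x} \mid \omega_n)$.]

$$\hat{\omega} = \arg\max_{\omega_j \in \Omega} p(\mathbf{x} \mid \omega_j)\, p(\omega_j)$$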


Hidden Markov Models have been applied with great success in speech recognition

However, a fundamental paradigm shift recently occurred in this field

Probability distributions governing speech

signals could not be modeled accurately,

rendering “Bayes decision theory inapplicable

under those circumstances”

(Juang & Rabiner, 2005)


Conditional Random Fields

Generalization of the Markov models

Discriminative models

Model $p(\mathbf{y} \mid \mathbf{x})$ without incorporating $p(\mathbf{x})$

Designates a family of MRFs

Each new observation originates a new MRF


[Figure: generative models (Naïve Bayes, HMM, directed models) and their discriminative counterparts (Logistic Regression, Linear-chain CRF, CRF), arranged from single outputs to sequences to general graphs.]

Infographic based on the tutorial by Sutton, C., McCallum, A., 2007


$$p(\mathbf{y} \mid \mathbf{x}) = \frac{1}{Z(\mathbf{x})} \prod_{C_p \in \mathcal{C}} \prod_{\Psi_c \in C_p} \Psi_c(\mathbf{x}_c, \mathbf{y}_c; \theta_p)$$

Potential functions, defined over the potential cliques:

$$\Psi_c(\mathbf{x}_c, \mathbf{y}_c; \theta_p) = \exp\left\{ \sum_{k=1}^{K(p)} \lambda_{pk}\, f_{pk}(\mathbf{x}_c, \mathbf{y}_c) \right\}$$

Here $\lambda_{pk}$ is the parameter vector, which can be optimized using gradient methods, and $f_{pk}$ is the characteristic function vector.

Partition function:

$$Z(\mathbf{x}) = \sum_{\mathbf{y}} \prod_{C_p \in \mathcal{C}} \prod_{\Psi_c \in C_p} \Psi_c(\mathbf{x}_c, \mathbf{y}_c; \theta_p)$$


How do we initialize those models?

Reaching HCRFs from an HMM

$$p(\mathbf{x}, \mathbf{y}) = \prod_{t=1}^{T} p(y_t \mid y_{t-1})\, p(x_t \mid y_t) = \prod_{t=1}^{T} A(y_t, y_{t-1})\, B(x_t, y_t)$$


$$p(\mathbf{x}, \mathbf{y}) = \exp\left\{ \ln \prod_{t=1}^{T} A(y_t, y_{t-1})\, B(x_t, y_t) \right\} = \exp\left\{ \sum_{t=1}^{T} \ln A(y_t, y_{t-1}) + \sum_{t=1}^{T} \ln B(x_t, y_t) \right\}$$

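$$p(\mathbf{x}, \mathbf{y}) = \exp\left\{ \sum_{t=1}^{T} \ln A(y_t, y_{t-1}) + \sum_{t=1}^{T} \ln B(x_t, y_t) \right\}$$

$$\ln A = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{pmatrix} \; (n \times n), \qquad \ln B = \begin{pmatrix} b_{11} & b_{12} \\ b_{21} & b_{22} \\ b_{31} & b_{32} \end{pmatrix} \; (n \times m)$$

The parameter vector $\boldsymbol{\lambda}$ collects the entries of both matrices, so it has $k = n \times n + n \times m$ elements.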

$$f_{edge}(y_t, y_{t-1}, \mathbf{x}; i, j) = \mathbf{1}_{\{y_t = i\}}\, \mathbf{1}_{\{y_{t-1} = j\}}$$

$$f_{node}(y_t, y_{t-1}, \mathbf{x}; i, o) = \mathbf{1}_{\{y_t = i\}}\, \mathbf{1}_{\{x_t = o\}}$$

Each element of $\boldsymbol{\lambda}$ is paired with one feature function in $\mathbf{f}$ ($f_e$ = edge, $f_n$ = node):

λ      f      indices
a11    f_e    i=1, j=1
a12    f_e    i=1, j=2
a13    f_e    i=1, j=3
a21    f_e    i=2, j=1
a22    f_e    i=2, j=2
a23    f_e    i=2, j=3
a31    f_e    i=3, j=1
a32    f_e    i=3, j=2
a33    f_e    i=3, j=3
b11    f_n    i=1, o=1
b12    f_n    i=1, o=2
b21    f_n    i=2, o=1
b22    f_n    i=2, o=2
b31    f_n    i=3, o=1
b32    f_n    i=3, o=2

$k = n \times n + n \times m$
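
The mapping from HMM matrices to a weight vector and indicator features can be checked numerically. The sketch below is an illustration under assumptions, not the authors' implementation: the function names and toy matrices are hypothetical, `A[i][j]` follows the slide's index order (current state `i`, previous state `j`), and a fixed start state `y0 = 0` stands in for $y_0$.

```python
import math


def hmm_joint(x, y, A, B, y0=0):
    """p(x, y) as the product of A[y_t][y_{t-1}] * B[y_t][x_t] over t."""
    p, prev = 1.0, y0
    for xt, yt in zip(x, y):
        p *= A[yt][prev] * B[yt][xt]
        prev = yt
    return p


def build_lambda(A, B):
    """Flatten ln A (n x n) followed by ln B (n x m) into one weight vector."""
    n, m = len(A), len(B[0])
    lam = [math.log(A[i][j]) for i in range(n) for j in range(n)]
    lam += [math.log(B[i][o]) for i in range(n) for o in range(m)]
    return lam


def feature_counts(x, y, n, m, y0=0):
    """f_k(x, y): how many times each edge/node indicator fires over t."""
    f = [0] * (n * n + n * m)
    prev = y0
    for xt, yt in zip(x, y):
        f[yt * n + prev] += 1            # edge feature (i = y_t, j = y_{t-1})
        f[n * n + yt * m + xt] += 1      # node feature (i = y_t, o = x_t)
        prev = yt
    return f


def loglinear_joint(x, y, A, B):
    """exp(sum_k lambda_k * f_k(x, y)), the log-linear form of the HMM."""
    lam = build_lambda(A, B)
    f = feature_counts(x, y, len(A), len(B[0]))
    return math.exp(sum(l * c for l, c in zip(lam, f)))


# Toy model with n = 3 states and m = 2 symbols.
# Columns of A sum to 1 because A[i][j] = p(y_t = i | y_{t-1} = j).
A = [[0.6, 0.2, 0.1],
     [0.3, 0.5, 0.3],
     [0.1, 0.3, 0.6]]
B = [[0.9, 0.1],
     [0.5, 0.5],
     [0.2, 0.8]]

x, y = [0, 1, 1], [0, 2, 1]
print(hmm_joint(x, y, A, B), loglinear_joint(x, y, A, B))
```

Both expressions give the same value up to floating-point error, and `build_lambda` returns 15 weights for this model, in agreement with $k = n \times n + n \times m$.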

$$p(\mathbf{x}, \mathbf{y}) = \exp\left\{ \sum_{t=1}^{T} \sum_{i=1}^{n} \sum_{j=1}^{n} a_{ij}\, \mathbf{1}_{\{y_t = i\}} \mathbf{1}_{\{y_{t-1} = j\}} + \sum_{t=1}^{T} \sum_{i=1}^{n} \sum_{j=1}^{m} b_{ij}\, \mathbf{1}_{\{y_t = i\}} \mathbf{1}_{\{x_t = j\}} \right\}$$

$$= \exp\left\{ \sum_{t=1}^{T} \sum_{i,j} a_{ij}\, f^{edge}_{ij}(y_t, y_{t-1}, \mathbf{x}) + \sum_{t=1}^{T} \sum_{i,j} b_{ij}\, f^{node}_{ij}(y_t, y_{t-1}, \mathbf{x}) \right\}$$

$$= \exp\left\{ \sum_{k=1}^{K} \lambda_k\, f_k(\mathbf{x}, \mathbf{y}) \right\}$$

Here $a_{ij}$ and $b_{ij}$ denote the entries of $\ln A$ and $\ln B$.

$$p(\mathbf{x}, \mathbf{y}) = \exp\left\{ \sum_{k=1}^{K} \lambda_k\, f_k(\mathbf{x}, \mathbf{y}) \right\}$$

$$p(\mathbf{y} \mid \mathbf{x}) = \frac{1}{Z(\mathbf{x})} \exp\left\{ \sum_{k=1}^{K} \lambda_k\, f_k(\mathbf{x}, \mathbf{y}) \right\}$$

Drawback

Assumes both $\mathbf{x}$ and $\mathbf{y}$ are known


Hidden Conditional Random Fields

Generalization of the hidden Markov classifiers


Parameter space


Sequence classification

Model $p(\omega \mid \mathbf{x})$ without explicitly modeling $p(\mathbf{x})$

Does not require $\mathbf{y}$ to be known

The sequence of states is now hidden

$$p(\omega \mid \mathbf{x}) = \sum_{\mathbf{y}} p(\omega, \mathbf{y} \mid \mathbf{x}) = \frac{1}{Z(\mathbf{x})} \sum_{\mathbf{y}} \prod_{C_p \in \mathcal{C}} \prod_{\Psi_c \in C_p} \Psi_c(\mathbf{x}_c, \mathbf{y}_c, \omega_c; \theta_p)$$

$$\Psi_c(\mathbf{x}_c, \mathbf{y}_c, \omega_c; \theta_p) = \exp\left\{ \sum_{k=1}^{K(p)} \lambda_{pk}\, f_{pk}(\mathbf{x}_c, \mathbf{y}_c, \omega_c) \right\}$$
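
For a linear-chain model, the sum over hidden state sequences in $p(\omega \mid \mathbf{x})$ can be computed with a forward recursion in the log domain. The sketch below assumes one block of edge, node, start and bias weights per class; the parameter layout, function names and toy weights are all hypothetical and chosen only to illustrate the computation, not taken from the work presented here.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def class_log_score(x, edge, node, start, bias):
    """log of the sum over all hidden sequences y of exp(score(w, y, x)).

    score = bias + start[y_1] + node[y_1][x_1]
            + sum over t > 1 of edge[y_t][y_{t-1}] + node[y_t][x_t]
    """
    n = len(start)
    alpha = [start[i] + node[i][x[0]] for i in range(n)]
    for obs in x[1:]:
        alpha = [logsumexp([alpha[j] + edge[i][j] for j in range(n)])
                 + node[i][obs]
                 for i in range(n)]
    return bias + logsumexp(alpha)


def hcrf_posterior(x, params):
    """p(w | x) for every class w, normalizing over all classes at once."""
    scores = {w: class_log_score(x, **p) for w, p in params.items()}
    log_z = logsumexp(list(scores.values()))
    return {w: math.exp(s - log_z) for w, s in scores.items()}


# Toy weights for two words, 2 hidden states and 2 observation symbols.
# Unlike HMM log-probabilities, these weights are unconstrained reals.
PARAMS = {
    "hi": dict(edge=[[0.5, -1.0], [0.2, 0.3]],
               node=[[1.0, -0.5], [-0.3, 0.8]],
               start=[0.0, -0.2], bias=0.1),
    "bye": dict(edge=[[-0.4, 0.6], [0.1, -0.2]],
                node=[[-0.7, 0.9], [0.4, 0.0]],
                start=[0.3, 0.0], bias=0.0),
}

posterior = hcrf_posterior([0, 1, 1], PARAMS)
print(posterior, max(posterior, key=posterior.get))
```

Because the normalization runs over all classes together, a single set of parameters scores every word, in contrast with the one-model-per-word HMM classifier.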

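Single model for all words

[Figure: linear-chain HCRF in which the class label $\omega$ connects to the hidden states $y_{t-2}, y_{t-1}, y_t$, and each hidden state connects to its observation $x_{t-2}, x_{t-1}, x_t$.]

$$\hat{\omega} = \arg\max_{\omega_j \in \Omega} p(\omega_j \mid \mathbf{x})$$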

Fingerspelling recognition with SVMs

and HCRFs against ANNs and HMMs

[Figure: two-layer recognition of the fingerspelled word "Pato" with the alternatives under comparison. The static gesture classifier (SVM or ANN) labels each frame, giving P P P A A A T T T O O, and the sequence classifier (HCRF or HMM) maps the labels to the word.]

Static gesture recognition

Database of 8100 grayscale images

Input instances with 1024 features

27 classes (manual alphabet signs)

Neural Networks

Evaluate initialization heuristics (Nguyen-Widrow)

Support Vector Machines

Evaluate the heuristic value for $\sigma^2$ based on the interquartile range of the norm statistics for the input dataset

Static sign classification

Gaussian kernel SVM            Resilient Backpropagation ANN
σ²                Kappa        Hidden neurons    Kappa
0.1               0.000        50                0.851
1                 0.106        100               0.887
10                0.569        300               0.921
100               0.950        500               0.922
392 (heuristic)   0.917        1000              0.924
1000              0.863

[Figure: Kappa (0 to 1) and average number of support vectors (0 to 800) as a function of σ² (0.1 to 1000), comparing grid search against the heuristic value.]

Static sign classification

Gaussian kernel SVM            Resilient Backpropagation ANN
σ²                Kappa        Hidden neurons    Kappa
0.1               0.000        50                0.851
1                 0.106        100               0.887
10                0.569        300               0.921
100               0.959        500               0.922
392 (heuristic)   0.917        1000              0.925
1000              0.863

[Figure: Kappa (0 to 1) and average number of support vectors (0 to 800) as a function of σ² (0.1 to 1000), comparing grid search against the heuristic value.]

Hyperparameter surface for Gaussian SVMs

[Figure: two surface plots over σ² (0.1 to 500, plus the heuristic value H) and C (1 to 1,000,000). The first shows Kappa, in bands from 0.80 to 1.00; the second shows the average number of SVs, in bands from 0 to 600.]

Static gesture classification

Statistically significant results (p < 0.01)

Points of interest

Polynomial machines have increased sparsity but smaller kappa

Neural networks were faster to evaluate, but not to learn, unless using linear SVMs

Sigma plays a much more important role than C in Gaussian machines

Heuristics for choosing sigma and C resulted in great performance values


[Figure: two-layer recognition of the fingerspelled word "Pato" with an SVM as the static gesture classifier, labelling each frame (P P P A A A T T T), and an HCRF as the sequence classifier.]

Dynamic gesture classification

Database containing 540 signed words

Containing a total of 63,703 static signs

The previous layer labels the entire dataset

Then we tested all possible model combinations

Estimated kappa (𝜅) sampled from 10-fold CV
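
Assuming the kappa reported here is Cohen's kappa (the slides do not name the variant), it can be computed from a confusion matrix as the observed agreement corrected for the agreement expected by chance, then averaged over the cross-validation folds. The function names and the toy matrix below are illustrative only.

```python
def cohen_kappa(confusion):
    """Cohen's kappa from a square confusion matrix (rows: true, cols: predicted)."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(n)) / total
    expected = sum(
        sum(confusion[i]) * sum(row[i] for row in confusion)
        for i in range(n)
    ) / total ** 2
    return (observed - expected) / (1 - expected)


def mean_kappa(fold_confusions):
    """Average kappa over the confusion matrices of the CV folds."""
    kappas = [cohen_kappa(c) for c in fold_confusions]
    return sum(kappas) / len(kappas)


# Toy two-class example: 85% observed agreement, 50% expected by chance.
toy = [[45, 5],
       [10, 40]]
print(cohen_kappa(toy))
```

For the toy matrix the observed agreement is 0.85 and the chance agreement is 0.50, so kappa is (0.85 - 0.50) / (1 - 0.50) = 0.7.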



Labeller   Classification   Algorithm     Training Kappa   Validation Kappa
SVM        HMM              Baum-Welch    0.95             0.82
SVM        HCRF             RProp         0.98             0.83
ANN        HMM              Baum-Welch    0.95             0.80
ANN        HCRF             RProp         0.99             0.82

SVM+HCRF showed the best validation result (10-fold CV)

Combinations using HCRFs showed the best results in general

Training results are statistically different

There is not enough evidence to say the validation results are not equivalent

Hidden Conditional Random Fields, in this specific problem, had a

higher ability to retain knowledge while keeping the same generality

In other words, they achieved less overfitting.

Conclusion and future works

Fingerspelling experiments

SVMs & HCRFs vs ANNs & HMMs

Static Gesture Recognition

Statistically significant results favoring SVMs

Linear SVMs on DDAGs:

Best compromise between speed, accuracy and ease of use

SVMs have shown easier training, reduced training times

Heuristic initializations work rather well, less parameter tuning

Dynamic Gesture Recognition

Choice of gesture classifier had much more impact

Linear-chain HCRFs:

Increased knowledge absorption without overfitting


Future works

Detect standard words rather than fingerspelling (already complete)

Use Structural Support Vector Machines, which are equivalent to

HCRFs but are trained using a hinge loss function

Use a mixed language model to categorize full phrases


References

BOWDEN, R. et al. A Linguistic Feature Vector for the Visual Interpretation of

Sign Language. European Conference on Computer Vision. [S.l.]: Springer-Verlag.

2004. p. 391-401.

BRADSKI, G. R. Computer Vision Face Tracking For Use in a Perceptual User

Interface. Intel Technology Journal, n. Q2, 1998. Available at:

<http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.14.7673>.

DIAS, D. B. et al. Hand movement recognition for brazilian sign language: a

study using distance-based neural networks. Proceedings of the 2009

international joint conference on Neural Networks. Atlanta, Georgia, USA: IEEE

Press. 2009. p. 2355-2362.

FERREIRA-BRITO, L. Por uma gramática de Línguas de Sinais. 2nd. ed. Rio de

Janeiro: Tempo Brasileiro, 2010. 273 p. ISBN 85-282-0069-8.

FERREIRA-BRITO, L.; LANGEVIN, R. The Sublexical Structure of a Sign Language.

Mathématiques, Informatique et Sciences Humaines, v. 125, p. 17-40, 1994.


FEUERSTACK, S.; COLNAGO, J. H.; SOUZA, C. R. D. Designing and Executing

Multimodal Interfaces for the Web based on State Chart XML. Proceedings of

3a. Conferência Web W3C Brasil 2011. Rio de Janeiro: [s.n.]. 2011.

PIZZOLATO, E. B.; ANJO, M. D. S.; PEDROSO, G. C. Automatic recognition of

finger spelling for LIBRAS based on a two-layer architecture. Proceedings of the

2010 ACM Symposium on Applied Computing. Sierre, Switzerland: ACM. 2010. p.

969-973.

VIOLA, P.; JONES, M. Robust Real-time Object Detection. International Journal of

Computer Vision. [S.l.]: [s.n.]. 2001.

VAPNIK, V. N. The nature of statistical learning theory. New York, NY, USA:

Springer-Verlag New York, Inc., 1995. ISBN 0-387-94559-8.

VAPNIK, V. N. Statistical learning theory. [S.l.]: Wiley, 1998. ISBN 0471030031.

YANG, R.; SARKAR, S. Detecting Coarticulation in Sign Language using

Conditional Random Fields. Pattern Recognition, 2006. ICPR 2006. 18th

International Conference on. [S.l.]: [s.n.]. 2006. p. 108-112.


Guilherme Cartacho

Appendix A


Accord.NET Framework

Machine Learning and Artificial Intelligence

Computer Vision / Audition

Mathematics and Statistics

Builds upon well established foundations

It has been used to

Recognize gestures using Wii

Study and evaluate performance in 3D gesture recognition

Predict attacks in computer networks

Compare touch and in-air gestures using Kinect

Provide sensor information in multi-modal interfaces

It has been used in

an increasing number of publications

Guido Soetens, Estimating the limitations of single-handed multi-touch

input. Master Thesis, Utrecht University. September, 2012.

K. N. Pushpalatha, A. K. Gautham, D. R. Shashikumar, K. B. ShivaKumar.

Iris Recognition System with Frequency Domain Features optimized

with PCA and SVM Classifier, IJCSI International Journal of Computer

Science Issues, Vol. 9, Issue 5, No 1, September 2012.

Arnaud Ogier, Thierry Dorval. HCS-Analyzer: Open source software for

High-Content Screening data correction and analysis. Bioinformatics.

First published online May 13, 2012.


Ludovico Buffon, Evelina Lamma, Fabrizio Riguzzi, and Davide Forment.

Un sistema di vision inspection basato su reti neurali. In Popularize

Artificial Intelligence. Proceedings of the AI*IA Workshop and Prize for

Celebrating 100th Anniversary of Alan Turing's Birth (PAI 2012), Rome,

Italy, June 15, 2012, number 860 in CEUR Workshop Proceedings, pages

1-6, Aachen, Germany, 2012.

Liam Williams, Spotting The Wisdom In The Crowds. Master Thesis on

Joint Mathematics and Computer Science. Imperial College London,

Department of Computing. June, 2012.

Alosefer, Y.; Rana, O.F.; "Predicting client-side attacks via behaviour

analysis using honeypot data," Next Generation Web Services Practices

(NWeSP), 2011 7th International Conference on, pp. 31-36, 19-21 Oct. 2011


Brummitt, L. Scrabble Referee: Word Recognition Component, 2011.

Final project report. University of Sheffield, Sheffield, England.

Cani, V., 2011. Image Stitching for UAV remote sensing application.

Master Degree Thesis. Computer Engineering, School of Castelldefels of

Universitat Politècnica de Catalunya. Barcelona, Spain.

Hassani, A. Z.; "Touch versus in-air Hand Gestures: Evaluating the

acceptance by seniors of Human-Robot Interaction using Microsoft

Kinect," Master Thesis, University of Twente, Enschede, Netherlands,

2011.

Kaplan, K., 2011. ADES: Automatic Driver Evaluation System. PhD

Thesis, Boğaziçi University, Istanbul, Turkey.


Wright, M., Lin, C.-J., O'Neill, E., Cosker, D. and Johnson, P., 2011. 3D

Gesture recognition: An evaluation of user and system performance. In:

Pervasive Computing - 9th International Conference, Pervasive 2011,

Proceedings. Heidelberg: Springer Verlag, pp. 294-313.

Lourenço, J., 2010. Wii3D: Extending the Nintendo Wii Remote into 3D.

Final course project report, Rhodes University, Grahamstown. 110p.

Mendelssohn, T.; 2010. Gestureboard - Entwicklung eines Wiimote-basierten, gestengesteuerten, Whiteboard-Systems für den

Bildungsbereich. Final project report. Hochschule Furtwangen

University, Furtwangen im Schwarzwald, Germany.

http://accord.googlecode.com
