ppt - Johnny Chung Lee

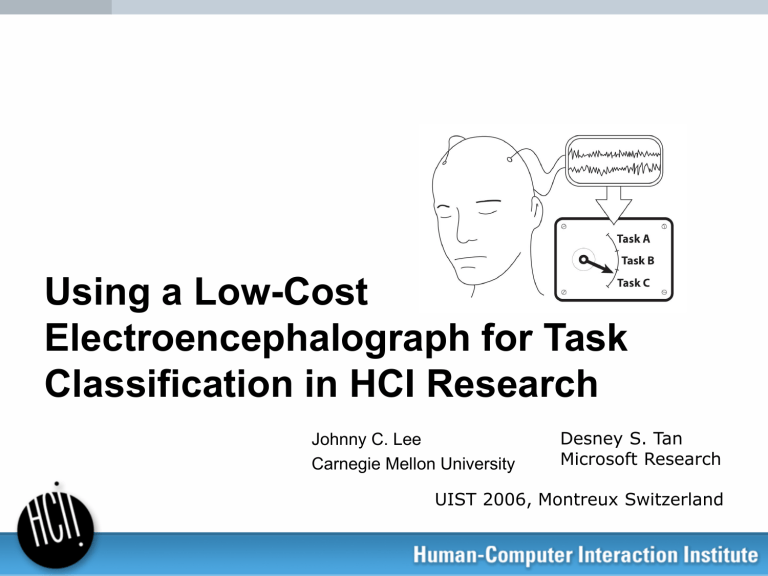

Using a Low-Cost Electroencephalograph for Task Classification in HCI Research

Johnny C. Lee

Carnegie Mellon University

Desney S. Tan

Microsoft Research

UIST 2006, Montreux, Switzerland

National Geographic, March 2005

NY Times Magazine, October 16, 2005

Brain-Computer Interfaces (BCI)

A direct technological interface between a brain and a computer that does not require any motor output from the user

Example Conferences/Journals with BCI interests:

Neural Information Processing Systems (NIPS)

IEEE Transactions on Biomedical Engineering

IEEE Transactions on Neural Systems and Rehabilitation Engineering

Why is this relevant to UIST or HCI?

BCI research traditionally focuses on exploratory neuroscience and rehabilitation engineering.

Brain sensing could provide valuable data about:

- engagement

- cognitive work load

- surprise

- satisfaction

- frustration

Potentially helpful to context-sensitive or evaluation systems

Values of BCI

To use any means necessary to demonstrate that brain-computer interaction is possible.

It is okay to:

- use equipment costing $100K to over $1 million USD

- use highly invasive surgical procedures

- require hours or days of operant conditioning

- remove data from poor-performing subjects

VS

Values of HCI

To use reasonable means to achieve a practical benefit to many users.

We’d like to:

- use fairly affordable and accessible equipment

- be safe for repeated and extended use

- be usable without requiring significant user training

- use data from all subjects to evaluate its performance

Where do we start?

Brain Sensing/Imaging Technologies

MRI

CT

ECoG

SPECT

PET

MEG

fMRI

Currently Impractical for HCI

EROS/fNIR

EEG - Safe, easy, no medical expertise

EEG – Electroencephalography: the neurophysiological measurement of the brain's electrical activity, recorded from electrodes placed on the scalp

(skipping the lower level neurophysiology)

Measures the voltage difference between two locations on the scalp

Only picks up gross, macroscopic, coordinated, and synchronized firing of neurons near the surface of the brain with perpendicular orientation to the scalp (thus the majority of activity is hidden).

Analogous to holding a thermometer up to the side of a PC case

EEG Devices

Manufacturer: EGI Systems

Channels: 128-512

Cost: $100K-$250K USD

Manufacturer: BioSemi

Channels: 64-128

Cost: ~$30K USD

The Brainmaster

• Lowest-cost FDA-approved device

• Designed for home and small clinical use.

• Only $1500 USD

• Specs:

– 2-channels

– 8-bit at 4µV resolution

– 256 samples/sec

• Has yet to be validated for BCI research work.

• If it works, it lowers the entry bar for BCI research.
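To make these specs concrete, here is a minimal back-of-the-envelope sketch. It assumes (our assumption, not the device's documented data format) that each sample is a signed 8-bit code multiplied by the 4 µV step. Under that reading the range is 256 levels, roughly -512 to +508 µV, and one 2-second window holds 512 samples per channel.

```python
# Back-of-the-envelope numbers for the Brainmaster specs above.
# ASSUMPTION (ours): each sample is a signed 8-bit code times a 4 uV step.
# This illustrates the arithmetic; it is not the device's documented format.
SAMPLE_RATE_HZ = 256
BITS = 8
STEP_UV = 4.0
CHANNELS = 2

levels = 2 ** BITS                                        # 256 distinct codes
min_code, max_code = -(levels // 2), levels // 2 - 1      # -128 .. 127
min_uv, max_uv = min_code * STEP_UV, max_code * STEP_UV   # -512.0 .. 508.0 uV
samples_per_2s_window = 2 * SAMPLE_RATE_HZ                # 512 samples per channel
bytes_per_second = CHANNELS * SAMPLE_RATE_HZ * BITS // 8  # raw payload, no overhead


def quantize(uv: float) -> float:
    """Round a voltage (uV) to the nearest 4 uV step, clipped to the 8-bit range."""
    code = max(min_code, min(max_code, round(uv / STEP_UV)))
    return code * STEP_UV


print(levels, min_uv, max_uv, samples_per_2s_window, bytes_per_second)
print(quantize(13.9), quantize(1000.0))  # 12.0 508.0
```

The clipping in `quantize` shows what happens to large artifacts (such as blinks) that exceed the assumed range.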

Validating the Device (and ourselves)

1. Validate the device

Can we get useful data from such a low-end device?

2. Validate ourselves

To explore this space, we must be able to collect our own data.


Keirn, Z., “A New Mode of Communication Between Man and His Surroundings”, IEEE Transactions on Biomedical Engineering, Vol. 37, No. 12, 1990.

• Data is available for download

• Data has not been reproduced in the past 15 years

– Some computational BCI researchers have just used this data.

– The state of the art is not a great deal better.

Reproducing the Keirn Data

We adapted the procedure from Keirn to better control potential confounds.

3 tasks:

• Rest (Baseline): Relaxation and clearing of mind

• Math: Mental arithmetic, prompted with “7 times 3 8 5”

• Rotation: Mentally rotate an object, prompted with “peacock”

Tasks from the original paper were designed to elicit hemispheric differences.

Experimental Procedure

Users are instructed to keep their eyes closed, minimize body movement, and not vocalize any part of the tasks.

For each task, a computer driven cue is given:

Rest, Math, Rotate

Following the Math and Rotate cues, the experimenter says the math problem or the object, respectively.

Experimental Procedure

Block design adapted from Keirn (each task lasts 14 seconds). Each row is one trial; the six trials form one session:

Rot Math Rest

Math Rest Rot

Rest Rot Math

Rot Rest Math

Rest Math Rot

Math Rot Rest

[Figure: three sessions, each repeating the same six task orderings]

3 sessions per subject

Many short tasks keep the task labels from correlating with EEG drift.


Subjects:

8 subjects (3 female)

29-58 years of age

All were cognitively and neurologically healthy

All right-handed


EEG Setup

International 10-20 EEG electrode placement system

Two channels placed on P3 and P4 with both references tied to Cz.

Electrodes are held in place using conductive paste.

5-10 minute preparation.

Processing the Data

Data processing for each task (14 seconds), shown for a Rot task:

Task Cue, then Experimenter Prompt, then Task Onset, then Performing Task.

The first ~4 seconds (cue, prompt, and onset) are discarded, leaving 10 seconds of the subject performing the task.

Removing time for machine learning

Most machine learning algorithms don’t handle time-series data very well.

[Figure: raw EEG trace of the 10-second task segment]

Removing time for machine learning

• Divide the 10 seconds into 2-second windows that overlap by 1 second

• Perform signal processing on each of the 9 windows to get our “timeless” feature set

[Figure: the 10-second trace with one 2-second window highlighted]


This provides 486 windows per participant.
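A minimal sketch of the windowing step for one channel, assuming 256 samples/sec (the Brainmaster rate) and that the first ~4 seconds of each 14-second task are dropped. The counts also rest on our reading of the block design: 3 sessions × 6 trials × 3 tasks = 54 tasks per participant, which is consistent with the 486 windows quoted above. `split_windows` is a hypothetical helper name.

```python
import numpy as np

FS = 256            # samples/sec (Brainmaster spec)
WIN_S, HOP_S = 2, 1  # 2-second windows, stepped by 1 second (1 second of overlap)


def split_windows(segment: np.ndarray, fs: int = FS) -> np.ndarray:
    """Split a 1-D task segment into overlapping windows, one per row."""
    win, hop = WIN_S * fs, HOP_S * fs
    n = (len(segment) - win) // hop + 1
    return np.stack([segment[i * hop:i * hop + win] for i in range(n)])


# Stand-in data for one 14 s task; drop the first ~4 s (cue, prompt, onset).
task = np.random.default_rng(0).normal(size=14 * FS)
windows = split_windows(task[4 * FS:])
print(windows.shape)  # (9, 512)

# ASSUMPTION: 3 sessions x 6 trials x 3 tasks per participant.
tasks_per_participant = 3 * 6 * 3
print(tasks_per_participant * windows.shape[0])  # 486
# Pair-wise problems keep only 2 of the 3 tasks.
print(3 * 6 * 2 * windows.shape[0])  # 324
```

The same arithmetic explains the 324 examples used later for pair-wise classification.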

Signal features for each window

Generic signal features such as mean power, peak frequency, peak frequency amplitude, etc.

Features frequently used in EEG signal analysis.

Common EEG Features

[Figure: a raw EEG window and its spectral power (0-50 Hz)]

Spectral power bands:

- Delta (1Hz-4Hz)

- Theta (4Hz-8Hz)

- Alpha (8Hz-12Hz)

- Beta Low (12Hz-20Hz)

- Beta High (20Hz-30Hz)

- Gamma (30Hz-50Hz)
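A minimal sketch of two of the window features named above (band power and peak frequency), using the band edges from the slide. The plain FFT periodogram is our own choice; the deck does not say how its 39 base features were computed. `band_powers` and `peak_frequency` are hypothetical names.

```python
import numpy as np

# Frequency bands as listed on the slide (Hz), lower edge inclusive.
BANDS = {
    "delta": (1, 4), "theta": (4, 8), "alpha": (8, 12),
    "beta_low": (12, 20), "beta_high": (20, 30), "gamma": (30, 50),
}


def _periodogram(window: np.ndarray, fs: int):
    """Power at each FFT bin after removing the DC offset."""
    spectrum = np.abs(np.fft.rfft(window - window.mean())) ** 2
    freqs = np.fft.rfftfreq(len(window), d=1.0 / fs)
    return freqs, spectrum


def band_powers(window: np.ndarray, fs: int = 256) -> dict:
    """Mean spectral power inside each band."""
    freqs, spectrum = _periodogram(window, fs)
    return {name: spectrum[(freqs >= lo) & (freqs < hi)].mean()
            for name, (lo, hi) in BANDS.items()}


def peak_frequency(window: np.ndarray, fs: int = 256):
    """Frequency (Hz) and power of the largest spectral peak."""
    freqs, spectrum = _periodogram(window, fs)
    i = int(np.argmax(spectrum))
    return freqs[i], spectrum[i]


# Synthetic 2 s window dominated by a 10 Hz (alpha-band) rhythm.
fs = 256
t = np.arange(2 * fs) / fs
x = np.sin(2 * np.pi * 10 * t) + 0.1 * np.random.default_rng(1).normal(size=t.size)
powers = band_powers(x, fs)
print(max(powers, key=powers.get))  # alpha
print(peak_frequency(x, fs)[0])     # 10.0
```

With 512-sample windows the frequency resolution is 0.5 Hz, which is fine enough to separate these bands.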

Feature Processing and Selection

The 39 base features from each window are mathematically combined to create 1521 total features.

We used a feature preparation and selection process similar to [Fogarty CHI’05] to reduce the number of features:

23 features for 3-task classification (486 examples)

16.4 features, on average, for pair-wise classification (324 examples)
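The deck does not say how the 39 base features are combined. Note that 1521 = 39² = 39 + 39 × 38. One hypothetical scheme consistent with that count keeps the 39 base features and adds every ordered ratio f_i / f_j (i ≠ j). The sketch below only makes the arithmetic concrete; it is not the authors' method.

```python
import itertools

import numpy as np


def combine_features(base: np.ndarray) -> np.ndarray:
    """HYPOTHETICAL scheme: base features plus all ordered pairwise ratios."""
    ratios = [base[i] / base[j]
              for i, j in itertools.permutations(range(len(base)), 2)]
    return np.concatenate([base, np.array(ratios)])


base = np.random.default_rng(2).uniform(1.0, 2.0, size=39)  # stand-in values
combined = combine_features(base)
print(len(combined))  # 1521
```

Feature selection then reduces this to a few dozen features per classification problem, as stated above.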

Baseline Results – 3 cognitive tasks

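BayesNet classifier accuracy per user:

| Task | User 1 | User 2 | User 3 | User 4 | User 5 | User 6 | User 7 | User 8 | Average | Chance |
|---|---|---|---|---|---|---|---|---|---|---|
| 3 task | 67.9% | 70.6% | 77.6% | 63.6% | 66.5% | 59.3% | 71.4% | 69.8% | 68.3% | 33.3% |
| Math v. Rotate | 83.3% | 82.7% | 88.3% | 69.4% | 91.0% | 80.6% | 87.3% | 87.7% | 83.8% | 50% |
| Rest v. Math | 88.0% | 91.4% | 93.8% | 84.9% | 81.2% | 80.2% | 90.4% | 82.4% | 86.5% | 50% |
| Rest v. Rotate | 85.8% | 84.3% | 86.7% | 86.7% | 80.9% | 68.5% | 86.7% | 83.6% | 82.9% | 50% |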


We can do better…

[Figure: the windowed EEG trace, with its task label unknown: ???]

Throwing time back in…

[Figure: the same trace, with temporally adjacent windows taken together and labeled “Math”]

We can average over temporally adjacent windows to improve classification accuracy
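A minimal sketch of temporal averaging. The slides do not say exactly what is averaged. This sketch assumes the classifier outputs per-window class probabilities and averages them over a centered window of k = 5 (one of the scenarios charted below), truncated at the ends of the sequence, then takes the most likely class. `smooth_predictions` is a hypothetical name.

```python
import numpy as np


def smooth_predictions(probs: np.ndarray, k: int = 5) -> np.ndarray:
    """Label each window by averaging class probabilities over k adjacent windows.

    probs: (n_windows, n_classes) per-window class probabilities in time order.
    The centered average is truncated near the start and end of the sequence.
    """
    half, n = k // 2, len(probs)
    labels = []
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        labels.append(int(np.argmax(probs[lo:hi].mean(axis=0))))
    return np.array(labels)


# Nine windows from one "Math" task (class 1); two are wrong when taken alone.
probs = np.array([
    [0.2, 0.7, 0.1], [0.3, 0.6, 0.1], [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4],
    [0.2, 0.6, 0.2], [0.3, 0.5, 0.2], [0.2, 0.7, 0.1],
])
print(probs.argmax(axis=1))          # [1 1 0 1 1 2 1 1 1]
print(smooth_predictions(probs, 5))  # [1 1 1 1 1 1 1 1 1]
```

Averaging probabilities rather than hard votes lets confident windows outweigh borderline ones such as the `[0.3, 0.3, 0.4]` window.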

Averaging with Task Transitions

Task transitions put conflicting data into the averaging window.

A high density of transitions will result in lower accuracy.

Fewer task transitions will yield better classification accuracy.

No transitions and averaging over all data will be even better.
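To see why transition density matters, this small sketch counts the windows whose centered 5-window average straddles a task boundary. The layouts are hypothetical: 54 windows split into 9-window tasks versus 3-window tasks. `contaminated_fraction` is a hypothetical name.

```python
def contaminated_fraction(task_lengths, k=5):
    """Fraction of windows whose centered k-window average spans a task transition.

    task_lengths: number of consecutive windows in each task, in time order.
    """
    labels = [task for task, n in enumerate(task_lengths) for _ in range(n)]
    half, total = k // 2, len(labels)
    mixed = 0
    for i in range(total):
        neighborhood = labels[max(0, i - half):min(total, i + half + 1)]
        if len(set(neighborhood)) > 1:  # more than one task inside the average
            mixed += 1
    return mixed / total


# Same 54 windows, different transition densities (hypothetical layouts).
print(contaminated_fraction([9] * 6))   # 20/54, about 0.37
print(contaminated_fraction([3] * 18))  # 52/54, about 0.96
print(contaminated_fraction([54]))      # 0.0 with no transitions
```

Restricting the average to windows of a single task, as in the "no transitions" scenarios, drives this fraction to zero by construction.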

Classification Accuracy with Averaging

Mean Classification Accuracy vs. Averaging Scenarios (Mental Tasks)

[Figure: bar chart, y axis 50% to 100%. Groups: 3 tasks, Math v. Rot, Rest v. Rot, Rest v. Math. Bars: Baseline, No Averaging, 5 windows with transitions, 5 windows no transitions, 9 windows no transitions. Error bars represent standard deviation.]

+5.1% to +15.7% for 3 tasks

So, can we really read minds?

Mean Classification Accuracy vs. Averaging Scenarios (Mental Tasks)

[Figure: the same chart, annotated “detecting subtle motor movements….” Error bars represent standard deviation.]

Cognitive/Motor “Fabric”

Tasks of varying cognitive difficulty are involuntarily coupled with physiological responses, such as minute, imperceptible motor activity. [Kramer ’91]

Therefore, it is impossible to completely isolate cognitive activity in neurologically intact individuals.

Does this matter to neuroscience? Yes.

Does this matter to HCI? Maybe not.


If motor artifacts are reliably correlated with different types of tasks or engagement, why not use those to help the classifier?

Requiring users to not move is also very impractical.

Non-Cognitive Artifacts detected by EEG:

– Blinking

– Eye movement

– Head movement

– Scalp GSR (galvanic skin response)

– Jaw and facial EMG

– Gross limb movements

– Sensory Response Potentials

Experiment 2 – Game Task

To explore this idea of using non-cognitive artifacts to classify tasks using EEG, we chose a PC-based video game task.

Halo, a PC-based first-person shooter game produced by Microsoft Game Studios.

Navigate a 3D environment in an effort to shoot opponents using various weapons.

Relatively high degree of interaction with mouse and keyboard input controls.

Game Tasks

Rest – baseline rest task: relax, fixate eyes on the crosshairs at the center of the screen, and do not interact with the controls. Game elements do not interact with the participant.

Solo – navigate the environment, interact with elements in the scene, and collect ammunition. The expert-controlled opponent did not interact with the participant.

Play – navigate the environment and engage the expert-controlled opponent.

The expert was instructed to play at a level just slightly above the participant's skill, to challenge them optimally.

Game Experimental Procedure

Setup, design, and procedure were similar to the first study.

• Participants had tutorial and practice time with game controls.

• 3 tasks repeated 6 times (counterbalanced)

• Tasks were 24 seconds to allow navigation time.

• Only 2 sessions were run for each participant

• Same 8 participants from first study were run in this study.

• Same data preparation and machine learning procedure.
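The window counts in both studies follow from one formula. This sketch assumes that, as in the first study, the first ~4 seconds of each 24-second game task are dropped. That assumption is ours, but it is consistent with the "19 windows no transitions" scenario in the game results. `n_windows` is a hypothetical name.

```python
def n_windows(duration_s: float, win_s: float = 2.0, hop_s: float = 1.0) -> int:
    """Number of full windows of length win_s, stepped by hop_s, in duration_s."""
    return int((duration_s - win_s) // hop_s) + 1


# Mental tasks: 14 s task minus ~4 s of cue/prompt/onset = 10 s analysed.
print(n_windows(14 - 4))  # 9
# Game tasks: ASSUMING the same ~4 s is dropped from each 24 s task.
print(n_windows(24 - 4))  # 19
```

Longer tasks therefore allow a longer transition-free averaging window (19 versus 9).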

Results – Game Tasks

Mean Classification Accuracy vs. Averaging Scenarios (Game Tasks)

[Figure: bar chart, y axis 50% to 100%. Groups: 3 tasks, Rest v. Play, Rest v. Solo, Solo v. Play. Bars: Baseline, No Averaging, 5 windows with transitions, 5 windows no transitions, 19 windows no transitions. Error bars represent standard deviation. One value is labeled 93.1%.]

Conclusion

This experimental design and data processing procedure can be applied to a much wider range of applications/tasks. Our two experiments were just two examples at different ends of a spectrum.

Compelling results can be achieved with low-cost equipment and without significant medical expertise or training.

Non-cognitive artifacts (inevitable in realistic computing scenarios) can be embraced to improve classification power.

To make BCI relevant to HCI, we must challenge traditional assumptions and creatively work with its limitations.

Thanks!

Johnny Chung Lee johnny@cs.cmu.edu

Desney Tan desney@microsoft.com

Thanks to MSR and the VIBE Group for supporting this work.

Cross-user Classifier

3 Cognitive Tasks

[Figure: bar chart, y axis 40% to 100%. Groups: 3-class, Math/Rotate, Rest/Rotate, Rest/Math. Bars: Base, 5 win, 5 win no edges, Known.]

Cross-user Classifier

3 game tasks

[Figure: bar chart, y axis 50% to 100%. Groups: 3-class, Solo/Play, Rest/Play, Rest/Solo. Bars: Base, 5 win, 5 win no edges, Known.]


Brain Sensing/Imaging Technologies

MRI – only anatomical data

CT – only anatomical data

ECoG – highly invasive surgery

SPECT – radiation exposure

PET – radiation exposure

MEG – extremely expensive

fMRI – extremely expensive

EROS/fNIR – currently expensive, still in infancy

EEG – safe, easy, no medical expertise

Other cool things you can do with an EEG device…

Event Related Potentials (ERP)

• Electrical activity related to or in response to the presentation of a stimulus

• Very well studied

• Relatively robust

• Used daily in clinical settings to check sensory mechanisms, typically in infants

• Requires averaging over 30-100 windows synchronized with the stimulus to see the response.
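A minimal sketch of why stimulus-synchronized averaging reveals an ERP. Epochs time-locked to each stimulus share the same response, while background activity is independent across epochs, so the residual noise shrinks roughly as 1/√N. All signals here are synthetic: the response shape, noise level, and 0.6 s epoch length are our assumptions. `erp_average` is a hypothetical name.

```python
import numpy as np


def erp_average(signal, onsets, n_samples):
    """Average fixed-length epochs that start at each stimulus onset (sample index)."""
    epochs = [signal[o:o + n_samples] for o in onsets if o + n_samples <= len(signal)]
    return np.mean(epochs, axis=0)


fs = 256
n = int(0.6 * fs)                                   # 0.6 s epochs (153 samples)
t = np.arange(n) / fs
response = 5.0 * np.exp(-((t - 0.3) ** 2) / 0.002)  # synthetic evoked bump near 0.3 s
rng = np.random.default_rng(3)
signal = rng.normal(scale=10.0, size=101 * fs)      # noise std twice the bump's peak
onsets = np.arange(100) * fs + fs // 2              # 100 stimuli, one per second
for o in onsets:
    signal[o:o + n] += response                     # same response after every stimulus

avg = erp_average(signal, onsets, n)
single = signal[onsets[0]:onsets[0] + n]
print(np.std(single - response))  # near 10: one epoch is mostly noise
print(np.std(avg - response))      # near 10 / sqrt(100) = 1
```

With 100 epochs the residual noise falls by about a factor of 10, enough to make a response half the size of the noise visible.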

ERP - AEP

ERP: Auditory Evoked Potential

• Used in clinics/hospitals to check hearing.

• Response to clicks in the ear

[Figure: AEP response. Bold lines = no clicks; thin line = with clicks]

ERP - VEP

ERP: Visual Evoked Potential

• Focusing on a flashing target, the visual cortex will “resonate” at the stimulus frequency.

[Figure: spectrum with peaks at the stimulus frequency and its harmonics]
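A minimal sketch of detecting the VEP "resonance" as the largest spectral peak in a plausible band. The 15 Hz flash rate, the weaker 2nd harmonic, the noise level, and the 4-40 Hz search band are all illustrative assumptions on synthetic data. `dominant_frequency` is a hypothetical name.

```python
import numpy as np


def dominant_frequency(x: np.ndarray, fs: int = 256,
                       fmin: float = 4.0, fmax: float = 40.0) -> float:
    """Frequency (Hz) of the largest spectral peak between fmin and fmax."""
    spectrum = np.abs(np.fft.rfft(x - x.mean())) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    band = (freqs >= fmin) & (freqs <= fmax)
    return float(freqs[band][np.argmax(spectrum[band])])


# Synthetic 4 s recording while watching a target flashing at 15 Hz:
# a response at the stimulus frequency, a weaker 2nd harmonic, and noise.
fs = 256
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(4)
x = (np.sin(2 * np.pi * 15 * t) + 0.4 * np.sin(2 * np.pi * 30 * t)
     + 0.5 * rng.normal(size=t.size))
print(dominant_frequency(x, fs))  # 15.0
```

Comparing the peak against several candidate flash rates would let a user select a target just by looking at it.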

ERP – Auditory and Visual P300

• Well known/studied potential related to “attention” or “surprise”

• Subjects are presented with 2 stimuli and instructed to count one of them

• A positive response will follow the stimulus of interest.

Side note: EEG as ECG

ECG - Electrocardiogram

• Placing an electrode on the chest provides a clear measure of cardiac activity.

• Translation to beats per minute (BPM) is a simple autocorrelation.

[Figure: ECG trace showing heart beats and a single beat period]
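A minimal sketch of the autocorrelation approach to heart rate. The strongest autocorrelation peak within a plausible lag range gives the beat period, and BPM = 60 × fs / lag. The 40-200 BPM search range and the spiky synthetic trace are our assumptions. `bpm_autocorr` is a hypothetical name.

```python
import numpy as np


def bpm_autocorr(x: np.ndarray, fs: int = 256,
                 min_bpm: float = 40, max_bpm: float = 200) -> float:
    """Estimate heart rate from the strongest autocorrelation peak in a plausible lag range."""
    x = x - x.mean()
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]  # lags 0 .. len(x) - 1
    lo = int(fs * 60 / max_bpm)                        # shortest beat period, in samples
    hi = int(fs * 60 / min_bpm)                        # longest beat period, in samples
    lag = lo + int(np.argmax(ac[lo:hi + 1]))
    return 60.0 * fs / lag


# Synthetic ECG-like trace: a narrow spike every 204 samples (~0.8 s) plus noise.
fs = 256
x = np.zeros(10 * fs)
period = int(0.8 * fs)  # 204 samples
x[::period] = 1.0
x += 0.05 * np.random.default_rng(5).normal(size=x.size)
print(round(bpm_autocorr(x, fs)))  # 75
```

Limiting the lag search excludes lag 0 and multiples of the true period that lie beyond the range.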

EEG as EMG

EMG - Electromyography

• Measures muscular activity

[Figure: EMG trace with segments labeled wrist inward contraction (toward inner forearm), tension holding, wrist relaxation (return to straight position), and wrist rest state]

NOTE: The magnitude of the spikes seems to be proportional to the acceleration involved in the movement.

EEG as Blink Detector

[Figure: EEG trace with blinks marked; y axis -200 to 200, x axis 0 to 2.5 × 10^4]

• Electrical activity from the muscle movement involved in eye blinks propagates through the head.

• Similarly, eye movements also affect the EEG recording
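Because blinks produce deflections far larger than ongoing EEG, a simple threshold detector can find them. This minimal sketch uses a 100-unit threshold and a 0.3 s refractory period, both hypothetical values chosen for a trace scaled like the blink plot (about ±200). The data are synthetic. `detect_blinks` is a hypothetical name.

```python
import numpy as np


def detect_blinks(x, fs=256, threshold=100.0, refractory_s=0.3):
    """Indices where |x| rises above threshold; crossings closer than refractory_s
    to the previous detection are treated as part of the same blink."""
    above = np.abs(x) > threshold
    rising = np.flatnonzero(above[1:] & ~above[:-1]) + 1  # upward threshold crossings
    blinks, last = [], None
    for i in rising:
        if last is None or i - last >= refractory_s * fs:
            blinks.append(int(i))
            last = i
    return blinks


fs = 256
t = np.arange(10 * fs) / fs
rng = np.random.default_rng(6)
x = rng.normal(scale=10.0, size=t.size)                 # background EEG
for c in (2.0, 5.0, 8.0):                               # synthetic blinks at 2, 5, 8 s
    x += 150.0 * np.exp(-((t - c) ** 2) / (2 * 0.05 ** 2))
print([round(i / fs, 2) for i in detect_blinks(x, fs)])  # close to 2, 5, 8 s
```

The refractory period keeps noise riding on a single blink from being counted as several blinks.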


Task Classification Background

• Previous work is split primarily into two camps:

– Operant Conditioning: the human learns how the machine works

– Pattern Recognition: the machine learns how the human works. Relatively new: early dabbling in the late ’80s; most work has happened in the last 5 years.

Pattern Recognition

Data Collection & Experimental Design, then Signal Processing & Feature Generation, then Machine Learning & Improving Accuracy

EEG Setup

International 10-20 EEG electrode placement system

Two channels placed on P3 and P4 with both references tied to Cz. Locations selected based on pilot recordings.


Attaching electrodes: prepare the site with a cleaner, and use conductive paste to improve the connection and hold the electrode in place.

The paste is non-toxic and rinses out with water.

5-10 minute preparation.