Matrix Methods - Civil and Environmental Engineering | SIU

MATRIX METHODS

SYSTEMS OF LINEAR EQUATIONS

ENGR 351

Numerical Methods for Engineers

Southern Illinois University Carbondale

College of Engineering

Dr. L.R. Chevalier

Dr. B.A. DeVantier

Copyright © 2003 by Lizette R. Chevalier and Bruce A. DeVantier

Permission is granted to students at Southern Illinois University at Carbondale to make one copy of this material for use in the class ENGR 351, Numerical Methods for Engineers. No other permission is granted.

All other rights are reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the copyright owner.

Systems of Linear Algebraic Equations

Specific Study Objectives

• Understand the graphic interpretation of ill-conditioned systems and how it relates to the determinant

• Be familiar with terminology: forward elimination, back substitution, pivot equations and pivot coefficient

• Apply matrix inversion to evaluate stimulus-response computations in engineering

• Understand why the Gauss-Seidel method is particularly well-suited for large sparse systems of equations

• Know how to assess diagonal dominance of a system of equations and how it relates to whether the system can be solved with the Gauss-Seidel method

• Understand the rationale behind relaxation and how to apply this technique

How to represent a system of linear equations as a matrix

[A]{x} = {c} where {x} and {c} are both column vectors

For example, the system

$$
\begin{aligned}
0.3x_1 + 0.52x_2 + x_3 &= -0.01\\
0.5x_1 + x_2 + 1.9x_3 &= 0.67\\
0.1x_1 + 0.3x_2 + 0.5x_3 &= -0.44
\end{aligned}
$$

is written as [A]{X} = {C}:

$$
\begin{bmatrix} 0.3 & 0.52 & 1\\ 0.5 & 1 & 1.9\\ 0.1 & 0.3 & 0.5 \end{bmatrix}
\begin{Bmatrix} x_1\\ x_2\\ x_3 \end{Bmatrix}
=
\begin{Bmatrix} -0.01\\ 0.67\\ -0.44 \end{Bmatrix}
$$

Practical application

• Consider a problem in structural engineering

• Find the forces and reactions associated with a statically determinate truss

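[Figure: triangular truss with angles of 90° at node 1, 30° at node 2 and 60° at node 3. Node 2 is a hinge, which transmits both vertical and horizontal forces at the surface. Node 3 is a roller, which transmits vertical forces.]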

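FREE BODY DIAGRAM

[Figure: free-body diagram showing member forces F1, F2 and F3, reactions H2 and V2 at node 2 and V3 at node 3, and the 1000 kg load at node 1.]

At each node: $\sum F_H = 0$ and $\sum F_V = 0$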

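Node 1

[Figure: node 1 with F1 acting at 30°, F3 acting at 60°, and external forces F1,H and F1,V.]

$$
\begin{aligned}
\sum F_H &= 0 = -F_1\cos 30^\circ + F_3\cos 60^\circ + F_{1,H}\\
\sum F_V &= 0 = -F_1\sin 30^\circ - F_3\sin 60^\circ + F_{1,V}
\end{aligned}
$$

With $F_{1,H} = 0$ and $F_{1,V} = -1000$:

$$
\begin{aligned}
-F_1\cos 30^\circ + F_3\cos 60^\circ &= 0\\
-F_1\sin 30^\circ - F_3\sin 60^\circ &= 1000
\end{aligned}
$$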

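Node 2

[Figure: node 2 with F1 acting at 30°, F2, and reactions H2 and V2.]

$$
\begin{aligned}
\sum F_H &= 0 = H_2 + F_2 + F_1\cos 30^\circ\\
\sum F_V &= 0 = V_2 + F_1\sin 30^\circ
\end{aligned}
$$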

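Node 3

[Figure: node 3 with F2, F3 acting at 60°, and reaction V3.]

$$
\begin{aligned}
\sum F_H &= 0 = -F_2 - F_3\cos 60^\circ\\
\sum F_V &= 0 = F_3\sin 60^\circ + V_3
\end{aligned}
$$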

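$$
\begin{aligned}
-F_1\cos 30^\circ + F_3\cos 60^\circ &= 0\\
-F_1\sin 30^\circ - F_3\sin 60^\circ &= 1000\\
H_2 + F_2 + F_1\cos 30^\circ &= 0\\
V_2 + F_1\sin 30^\circ &= 0\\
-F_2 - F_3\cos 60^\circ &= 0\\
F_3\sin 60^\circ + V_3 &= 0
\end{aligned}
$$

SIX EQUATIONS

SIX UNKNOWNS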

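Do some bookkeeping

Each row lists the coefficients of one equilibrium equation, written as 0 = (coefficients × unknowns) + external force:

$$
\begin{array}{cccccc|c}
F_1 & F_2 & F_3 & H_2 & V_2 & V_3 & \text{external}\\ \hline
-\cos 30^\circ & 0 & \cos 60^\circ & 0 & 0 & 0 & 0\\
-\sin 30^\circ & 0 & -\sin 60^\circ & 0 & 0 & 0 & -1000\\
\cos 30^\circ & 1 & 0 & 1 & 0 & 0 & 0\\
\sin 30^\circ & 0 & 0 & 0 & 1 & 0 & 0\\
0 & -1 & -\cos 60^\circ & 0 & 0 & 0 & 0\\
0 & 0 & \sin 60^\circ & 0 & 0 & 1 & 0
\end{array}
$$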

This is the basis for your matrices and the equation

[A]{x}={c}

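Moving the 1000 kg load to the right-hand side gives:

$$
\begin{bmatrix}
-0.866 & 0 & 0.5 & 0 & 0 & 0\\
-0.5 & 0 & -0.866 & 0 & 0 & 0\\
0.866 & 1 & 0 & 1 & 0 & 0\\
0.5 & 0 & 0 & 0 & 1 & 0\\
0 & -1 & -0.5 & 0 & 0 & 0\\
0 & 0 & 0.866 & 0 & 0 & 1
\end{bmatrix}
\begin{Bmatrix} F_1\\ F_2\\ F_3\\ H_2\\ V_2\\ V_3 \end{Bmatrix}
=
\begin{Bmatrix} 0\\ 1000\\ 0\\ 0\\ 0\\ 0 \end{Bmatrix}
$$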
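The six equilibrium equations can be checked numerically. The sketch below is a minimal pure-Python version. It assumes member forces are positive in tension and orders the rows node 1 (H, V), node 2 (H, V), node 3 (H, V). The helper name `solve` is hypothetical. It uses Gauss elimination with partial pivoting, which is covered later in these notes.

```python
import math

def solve(a, c):
    """Solve [A]{x} = {c} by Gauss elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [ci] for row, ci in zip(a, c)]   # augmented matrix [A | c]
    for k in range(n):
        # Swap in the row with the largest pivot candidate in column k
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= f * m[k][j]
    # Back substitution
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

cos30, sin30 = math.cos(math.radians(30)), math.sin(math.radians(30))
cos60, sin60 = math.cos(math.radians(60)), math.sin(math.radians(60))

# Unknowns in order: F1, F2, F3, H2, V2, V3 (member forces positive in tension)
A = [
    [-cos30, 0, cos60, 0, 0, 0],    # node 1, horizontal
    [-sin30, 0, -sin60, 0, 0, 0],   # node 1, vertical
    [cos30, 1, 0, 1, 0, 0],         # node 2, horizontal
    [sin30, 0, 0, 0, 1, 0],         # node 2, vertical
    [0, -1, -cos60, 0, 0, 0],       # node 3, horizontal
    [0, 0, sin60, 0, 0, 1],         # node 3, vertical
]
c = [0, 1000, 0, 0, 0, 0]           # the 1000 load moved to the right-hand side

x = solve(A, c)
for name, value in zip(["F1", "F2", "F3", "H2", "V2", "V3"], x):
    print(f"{name} = {value:8.1f}")
```

Under this sign convention a negative member force means compression. The two inclined members come out at about −500 and −866 and the bottom member at about +433. The reactions are V2 = 250, V3 = 750 and H2 = 0.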

System of Linear Equations

• We have focused our last lectures on finding a value of x that satisfies a single equation, f(x) = 0

• Now we will deal with the case of determining the values of $x_1, x_2, \ldots, x_n$ that simultaneously satisfy a set of n equations

• Simultaneous equations

$$
\begin{aligned}
f_1(x_1, x_2, \ldots, x_n) &= 0\\
f_2(x_1, x_2, \ldots, x_n) &= 0\\
&\;\;\vdots\\
f_n(x_1, x_2, \ldots, x_n) &= 0
\end{aligned}
$$

• Methods will be for linear equations

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= c_1\\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= c_2\\
&\;\;\vdots\\
a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= c_n
\end{aligned}
$$

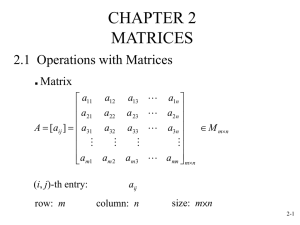

Mathematical Background

Matrix Notation

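$$
[A] = \begin{bmatrix}
a_{11} & a_{12} & a_{13} & \cdots & a_{1n}\\
a_{21} & a_{22} & a_{23} & \cdots & a_{2n}\\
\vdots & \vdots & \vdots & & \vdots\\
a_{m1} & a_{m2} & a_{m3} & \cdots & a_{mn}
\end{bmatrix}
$$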

• a horizontal set of elements is called a row

• a vertical set is called a column

• first subscript refers to the row number

• second subscript refers to column number

This matrix has m rows and n columns.

It has the dimensions m by n (m × n).


Note the consistent scheme with subscripts denoting row, column: for example, $a_{23}$ is the element in row 2, column 3.

Types of Matrices

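Row vector: m = 1

$$[B] = \begin{bmatrix} b_1 & b_2 & \cdots & b_n \end{bmatrix}$$

Column vector: n = 1

$$[C] = \begin{bmatrix} c_1\\ c_2\\ \vdots\\ c_m \end{bmatrix}$$

Square matrix: m = n

$$[A] = \begin{bmatrix} a_{11} & a_{12} & a_{13}\\ a_{21} & a_{22} & a_{23}\\ a_{31} & a_{32} & a_{33} \end{bmatrix}$$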

Definitions

• Symmetric matrix

• Diagonal matrix

• Identity matrix

• Inverse of a matrix

• Transpose of a matrix

• Upper triangular matrix

• Lower triangular matrix

• Banded matrix

Symmetric Matrix

$a_{ij} = a_{ji}$ for all i's and j's

$$[A] = \begin{bmatrix} 5 & 1 & 2\\ 1 & 3 & 7\\ 2 & 7 & 8 \end{bmatrix}$$

Does $a_{23} = a_{32}$?

Yes. Check the other elements on your own.

Diagonal Matrix

A square matrix where all elements off the main diagonal are zero

$$\begin{bmatrix} a_{11} & 0 & 0 & 0\\ 0 & a_{22} & 0 & 0\\ 0 & 0 & a_{33} & 0\\ 0 & 0 & 0 & a_{44} \end{bmatrix}$$

Identity Matrix

A diagonal matrix where all elements on the main diagonal are equal to 1

1 0 0 0

0 1 0 0

0 0 1 0

0 0 0 1

The symbol [I] is used to denote the identity matrix.

Inverse of [A]: the matrix $[A]^{-1}$ such that $[A][A]^{-1} = [A]^{-1}[A] = [I]$

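Transpose of [A]: $[A]^T$ (the rows of [A] become the columns of $[A]^T$)

$$[A]^T = \begin{bmatrix} a_{11} & a_{21} & \cdots & a_{m1}\\ a_{12} & a_{22} & \cdots & a_{m2}\\ \vdots & \vdots & & \vdots\\ a_{1n} & a_{2n} & \cdots & a_{mn} \end{bmatrix}$$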

Upper Triangular Matrix

Elements below the main diagonal are zero

$$\begin{bmatrix} a_{11} & a_{12} & a_{13}\\ 0 & a_{22} & a_{23}\\ 0 & 0 & a_{33} \end{bmatrix}$$

Lower Triangular Matrix

All elements above the main diagonal are zero

5 0 0

1 3 0

2 7 8

Banded Matrix

All elements are zero with the exception of a band centered on the main diagonal

$$\begin{bmatrix} a_{11} & a_{12} & 0 & 0\\ a_{21} & a_{22} & a_{23} & 0\\ 0 & a_{32} & a_{33} & a_{34}\\ 0 & 0 & a_{43} & a_{44} \end{bmatrix}$$
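The definitions above translate directly into tests on a matrix stored as a list of rows. The sketch below is a minimal plain-Python version, and the function names are hypothetical. `half_bandwidth` is one assumed way to measure the width of the band: it returns 1 for a tridiagonal banded matrix like the one shown. The 4 × 4 matrix `T` uses illustrative values.

```python
def is_square(a):
    return all(len(row) == len(a) for row in a)

def is_symmetric(a):
    # a_ij == a_ji for all i and j
    n = len(a)
    return is_square(a) and all(a[i][j] == a[j][i] for i in range(n) for j in range(n))

def is_upper_triangular(a):
    # every element below the main diagonal (j < i) is zero
    return is_square(a) and all(a[i][j] == 0 for i in range(len(a)) for j in range(i))

def is_lower_triangular(a):
    # every element above the main diagonal (j > i) is zero
    n = len(a)
    return is_square(a) and all(a[i][j] == 0 for i in range(n) for j in range(i + 1, n))

def is_diagonal(a):
    return is_upper_triangular(a) and is_lower_triangular(a)

def is_identity(a):
    return is_diagonal(a) and all(a[i][i] == 1 for i in range(len(a)))

def half_bandwidth(a):
    # largest |i - j| among the nonzero elements; 1 means tridiagonal
    return max((abs(i - j) for i, row in enumerate(a) for j, v in enumerate(row) if v != 0),
               default=0)

S = [[5, 1, 2], [1, 3, 7], [2, 7, 8]]      # symmetric example
L = [[5, 0, 0], [1, 3, 0], [2, 7, 8]]      # lower triangular example
T = [[2, 1, 0, 0], [1, 2, 1, 0], [0, 1, 2, 1], [0, 0, 1, 2]]  # banded (tridiagonal)

print(is_symmetric(S), is_lower_triangular(L), is_upper_triangular(L))  # True True False
print(half_bandwidth(T), is_identity([[1, 0], [0, 1]]))                  # 1 True
```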

Matrix Operating Rules

• Addition/subtraction

• add/subtract corresponding terms

• $a_{ij} + b_{ij} = c_{ij}$

• Addition is commutative

• [A] + [B] = [B] + [A]

• Addition is associative

• [A] + ([B] + [C]) = ([A] + [B]) + [C]

Matrix Operating Rules

• Multiplication of a matrix [A] by a scalar g is obtained by multiplying every element of [A] by g

$$g[A] = \begin{bmatrix} ga_{11} & ga_{12} & \cdots & ga_{1n}\\ ga_{21} & ga_{22} & \cdots & ga_{2n}\\ \vdots & \vdots & & \vdots\\ ga_{m1} & ga_{m2} & \cdots & ga_{mn} \end{bmatrix}$$

Matrix Operating Rules

• The product of two matrices is represented as

[C] = [A][B]

• n = column dimension of [A]

• n = row dimension of [B]

$$c_{ij} = \sum_{k=1}^{n} a_{ik}\,b_{kj}$$

Simple way to check whether matrix multiplication is possible:

$$[A]_{m\times n}\,[B]_{n\times k} = [C]_{m\times k}$$

The interior dimensions (n and n) must be equal; the exterior dimensions (m and k) give the dimensions of the resulting matrix.
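The summation formula and the dimension check fit in a few lines. The sketch below is a minimal plain-Python version. The function name `matmul` and the sample matrices are illustrative and do not come from the notes.

```python
def matmul(a, b):
    """Return [C] = [A][B] with c_ij = sum over k of a_ik * b_kj."""
    m, n = len(a), len(a[0])
    if len(b) != n:                      # interior dimensions must be equal
        raise ValueError(f"cannot multiply {m}x{n} by {len(b)}x{len(b[0])}")
    k = len(b[0])                        # result is m x k (exterior dimensions)
    return [[sum(a[i][p] * b[p][j] for p in range(n)) for j in range(k)]
            for i in range(m)]

A = [[3, 1], [8, 6], [0, 4]]   # 3 x 2
B = [[5, 9], [7, 2]]           # 2 x 2
print(matmul(A, B))            # [[22, 29], [82, 84], [28, 8]]
```

Calling `matmul(B, A)` raises `ValueError`. A 2 × 2 matrix times a 3 × 2 matrix has unequal interior dimensions (2 and 3), which also shows that the order of multiplication matters.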


Example

Determine [C] given [A][B] = [C]

[The entries of [A] and [B] for this example did not survive extraction.]

Matrix multiplication

• If the dimensions are suitable, matrix multiplication is associative

• ([A][B])[C] = [A]([B][C])

• If the dimensions are suitable, matrix multiplication is distributive

• ([A] + [B])[C] = [A][C] + [B][C]

• Multiplication is generally not commutative

• [A][B] is not equal to [B][A]

Determinants

Denoted as det A or |A|. For a 2 × 2 matrix:

$$\det A = \begin{vmatrix} a & b\\ c & d \end{vmatrix} = ad - bc$$

Determinants

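For a 3 × 3 matrix, expand along the first row with alternating signs (+, −, +):

$$
\begin{vmatrix} a_{11} & a_{12} & a_{13}\\ a_{21} & a_{22} & a_{23}\\ a_{31} & a_{32} & a_{33} \end{vmatrix}
= a_{11}\begin{vmatrix} a_{22} & a_{23}\\ a_{32} & a_{33} \end{vmatrix}
- a_{12}\begin{vmatrix} a_{21} & a_{23}\\ a_{31} & a_{33} \end{vmatrix}
+ a_{13}\begin{vmatrix} a_{21} & a_{22}\\ a_{31} & a_{32} \end{vmatrix}
$$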
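Cofactor expansion generalizes recursively. Each term is a first-row element times the determinant of the minor left after deleting that element's row and column, with alternating signs. The sketch below is minimal, and the function name is hypothetical. Its cost grows roughly like n!, so it is only practical for small matrices. The sample matrix is the coefficient matrix of the $0.3x_1 + 0.52x_2 + x_3$ example system from the start of these notes.

```python
def det(a):
    """Determinant by cofactor expansion along the first row."""
    n = len(a)
    if n == 1:
        return a[0][0]
    if n == 2:
        return a[0][0] * a[1][1] - a[0][1] * a[1][0]       # ad - bc
    total = 0.0
    for j in range(n):
        minor = [row[:j] + row[j + 1:] for row in a[1:]]   # delete row 1 and column j
        total += (-1) ** j * a[0][j] * det(minor)          # signs alternate +, -, +, ...
    return total

A = [[0.3, 0.52, 1.0],
     [0.5, 1.0, 1.9],
     [0.1, 0.3, 0.5]]
print(det(A))   # approximately -0.0022
```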

Problem

$$\begin{bmatrix} 1 & 4 & 6\\ 7 & 3 & 1\\ 9 & 2 & 5 \end{bmatrix}$$

Determine the determinant of the matrix.

Properties of Determinants

• det A = det $A^T$

• If all entries of any row or column are zero, then det A = 0

• If two rows or two columns are identical, then det A = 0

• Note: determinants can be calculated using the MDETERM function in Excel

Excel Demonstration

• Excel treats matrices as arrays

• To obtain the results of multiplication, addition, and inverse operations, press Ctrl+Shift+Enter instead of Enter.

• The resulting matrix cannot be altered (Excel will not let you change part of an array). Let's see an example using Excel in class: matrix.xls

Matrix Methods

• Cramer’s Rule

• Gauss elimination

• Matrix inversion

• Gauss Seidel/Jacobi

$$\begin{bmatrix} a_{11} & a_{12} & a_{13}\\ a_{21} & a_{22} & a_{23}\\ a_{31} & a_{32} & a_{33} \end{bmatrix}$$

Graphical Method

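2 equations, 2 unknowns:

$$a_{11}x_1 + a_{12}x_2 = c_1 \qquad a_{21}x_1 + a_{22}x_2 = c_2$$

Solve both for $x_2$; each becomes the equation of a straight line:

$$x_2 = -\left(\frac{a_{11}}{a_{12}}\right)x_1 + \frac{c_1}{a_{12}} \qquad x_2 = -\left(\frac{a_{21}}{a_{22}}\right)x_1 + \frac{c_2}{a_{22}}$$

The solution $(x_1, x_2)$ is the point where the two lines intersect. For example:

$$3x_1 + 2x_2 = 18 \;\Rightarrow\; x_2 = -\frac{3}{2}x_1 + 9 \qquad\qquad -x_1 + 2x_2 = 2 \;\Rightarrow\; x_2 = \frac{1}{2}x_1 + 1$$

[Figure: the two lines plotted in the $x_1$, $x_2$ plane intersect at (4, 3).]

Check: 3(4) + 2(3) = 12 + 6 = 18 and −(4) + 2(3) = −4 + 6 = 2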

Special Cases

• No solution

• Infinite solutions

• Ill-conditioned

[Figure: three panels plotting $x_2$ against $x_1$. (a) No solution: the lines have the same slope and never meet. (b) Infinite solutions: both equations describe the same line, e.g. $-\tfrac{1}{2}x_1 + x_2 = 1$ and $-x_1 + 2x_2 = 2$. (c) Ill-conditioned: the slopes are so close that the point of intersection is difficult to detect visually.]

Ill Conditioned Systems

• If the determinant is zero, the slopes are identical

$$a_{11}x_1 + a_{12}x_2 = c_1 \qquad a_{21}x_1 + a_{22}x_2 = c_2$$

Rearrange these equations so that we have an alternative version in the form of a straight line, i.e. $x_2 = (\text{slope})\,x_1 + \text{intercept}$

Ill Conditioned Systems

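$$x_2 = -\frac{a_{11}}{a_{12}}x_1 + \frac{c_1}{a_{12}} \qquad x_2 = -\frac{a_{21}}{a_{22}}x_1 + \frac{c_2}{a_{22}}$$

If the slopes are nearly equal (ill-conditioned):

$$\frac{a_{11}}{a_{12}} \cong \frac{a_{21}}{a_{22}} \;\Rightarrow\; a_{11}a_{22} \cong a_{21}a_{12} \;\Rightarrow\; a_{11}a_{22} - a_{21}a_{12} \cong 0$$

Isn't this the determinant?

$$\begin{vmatrix} a_{11} & a_{12}\\ a_{21} & a_{22} \end{vmatrix} = \det A$$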

Ill Conditioned Systems

If the determinant is zero the slopes are equal.

This can mean:

- no solution

- infinite number of solutions

If the determinant is close to zero, the system is ill conditioned.

So it seems that we should check the determinant of a system before any further calculations are done.

Let’s try an example.

Example

Determine whether the following system is ill-conditioned.

$$\begin{bmatrix} 37.2 & 4.7\\ 19.2 & 2.5 \end{bmatrix}\begin{Bmatrix} x_1\\ x_2 \end{Bmatrix} = \begin{Bmatrix} 22\\ 12 \end{Bmatrix}$$

Solution

$$\begin{vmatrix} 37.2 & 4.7\\ 19.2 & 2.5 \end{vmatrix} = 37.2(2.5) - 4.7(19.2) = 93 - 90.24 = 2.76$$

What does this tell us? Is this close to zero? Hard to say.

If we scale the matrix first, i.e. divide each row by its largest a value, we can get a better sense of things.

Solution

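After scaling, the largest element in each row is 1:

$$\begin{vmatrix} 1 & 0.1263\\ 1 & 0.1302 \end{vmatrix} = (1)(0.1302) - (0.1263)(1) = 0.0039$$

This is much closer to zero. This is further justified when we consider a graph of the two functions.

[Figure: the two lines plotted in the $x_1$, $x_2$ plane are nearly parallel.]

Clearly the slopes are nearly equal: $-37.2/4.7 = -7.91$ and $-19.2/2.5 = -7.68$.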

Another Check

• Scale the matrix of coefficients, [A], so that the largest element in each row is 1. If there are elements of $[A]^{-1}$ that are several orders of magnitude greater than one, it is likely that the system is ill-conditioned.

• Multiply the inverse by the original coefficient matrix. If the result is not close to the identity matrix, the system is ill-conditioned.

• Invert the inverted matrix. If it is not close to the original coefficient matrix, the system is ill-conditioned.

We will consider how to obtain an inverted matrix later.
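For the 2 × 2 example (37.2, 4.7; 19.2, 2.5), the sketch below computes three quantities directly: the determinant before and after scaling, and the size of the elements of the scaled matrix's inverse. It is minimal plain Python, and the helper names are hypothetical. The 2 × 2 inverse uses the standard closed-form formula, not the Gauss-Jordan approach introduced later.

```python
def det2(a):
    """Determinant of a 2 x 2 matrix: ad - bc."""
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]

def scale_rows(a):
    """Divide each row by the magnitude of its largest element."""
    return [[v / max(abs(e) for e in row) for v in row] for row in a]

def inv2(a):
    """Inverse of a 2 x 2 matrix (swap diagonal, negate off-diagonal, divide by det)."""
    d = det2(a)
    return [[a[1][1] / d, -a[0][1] / d],
            [-a[1][0] / d, a[0][0] / d]]

A = [[37.2, 4.7], [19.2, 2.5]]
S = scale_rows(A)
print(round(det2(A), 4))      # 2.76: is this close to zero? hard to say
print(round(det2(S), 4))      # 0.0039: clearly close to zero after scaling
S_inv = inv2(S)
print(max(abs(v) for row in S_inv for v in row))   # about 259: far larger than 1
```

The scaled inverse has elements two orders of magnitude larger than one. This points toward ill-conditioning, consistent with the determinant test.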

Cramer’s Rule

• Not efficient for solving large numbers of linear equations

• Useful for explaining some inherent problems associated with solving linear equations.

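$$\begin{bmatrix} a_{11} & a_{12} & a_{13}\\ a_{21} & a_{22} & a_{23}\\ a_{31} & a_{32} & a_{33} \end{bmatrix}\begin{Bmatrix} x_1\\ x_2\\ x_3 \end{Bmatrix} = \begin{Bmatrix} b_1\\ b_2\\ b_3 \end{Bmatrix}$$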


Cramer’s Rule

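$$
x_1 = \frac{1}{|A|}\begin{vmatrix} b_1 & a_{12} & a_{13}\\ b_2 & a_{22} & a_{23}\\ b_3 & a_{32} & a_{33} \end{vmatrix}
\qquad
x_2 = \frac{1}{|A|}\begin{vmatrix} a_{11} & b_1 & a_{13}\\ a_{21} & b_2 & a_{23}\\ a_{31} & b_3 & a_{33} \end{vmatrix}
\qquad
x_3 = \frac{1}{|A|}\begin{vmatrix} a_{11} & a_{12} & b_1\\ a_{21} & a_{22} & b_2\\ a_{31} & a_{32} & b_3 \end{vmatrix}
$$

To solve for $x_i$, place {b} in the ith column.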

EXAMPLE

Use of Cramer’s Rule

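$$\begin{aligned} 2x_1 - 3x_2 &= 5\\ x_1 + x_2 &= 5 \end{aligned} \qquad\Longleftrightarrow\qquad \begin{bmatrix} 2 & -3\\ 1 & 1 \end{bmatrix}\begin{Bmatrix} x_1\\ x_2 \end{Bmatrix} = \begin{Bmatrix} 5\\ 5 \end{Bmatrix}$$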

Solution

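$$|A| = \begin{vmatrix} 2 & -3\\ 1 & 1 \end{vmatrix} = 2(1) - (-3)(1) = 5$$

$$x_1 = \frac{1}{5}\begin{vmatrix} 5 & -3\\ 5 & 1 \end{vmatrix} = \frac{5(1) - (-3)(5)}{5} = \frac{20}{5} = 4$$

$$x_2 = \frac{1}{5}\begin{vmatrix} 2 & 5\\ 1 & 5 \end{vmatrix} = \frac{2(5) - 5(1)}{5} = \frac{5}{5} = 1$$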
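Cramer's rule can be sketched by building, for each unknown, a copy of [A] with {b} placed in that column. The sketch below is minimal plain Python, and the function names are hypothetical. The determinant is evaluated by recursive cofactor expansion, which is why Cramer's rule is not efficient for large systems.

```python
def det(a):
    """Determinant by cofactor expansion along the first row."""
    if len(a) == 1:
        return a[0][0]
    return sum((-1) ** j * a[0][j] * det([row[:j] + row[j + 1:] for row in a[1:]])
               for j in range(len(a)))

def cramer(a, b):
    """Cramer's rule: x_i = det(A with {b} placed in column i) / det(A)."""
    d = det(a)
    if d == 0:
        raise ValueError("det A = 0: no unique solution")
    x = []
    for i in range(len(a)):
        a_i = [row[:i] + [b_k] + row[i + 1:] for row, b_k in zip(a, b)]
        x.append(det(a_i) / d)
    return x

print(cramer([[2, -3], [1, 1]], [5, 5]))   # [4.0, 1.0]
```

The same function handles the 3 × 3 system $0.3x_1 + 0.52x_2 + x_3 = -0.01$, etc. Its determinant is only about −0.0022, which hints at the sensitivity discussed under ill-conditioned systems.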

Elimination of Unknowns (algebraic approach)

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 &= c_1\\ a_{21}x_1 + a_{22}x_2 &= c_2 \end{aligned}$$

Multiply the first equation by $a_{21}$ and the second by $a_{11}$:

$$\begin{aligned} a_{21}a_{11}x_1 + a_{21}a_{12}x_2 &= a_{21}c_1\\ a_{11}a_{21}x_1 + a_{11}a_{22}x_2 &= a_{11}c_2 \end{aligned}$$

SUBTRACT the first from the second:

$$a_{11}a_{22}x_2 - a_{21}a_{12}x_2 = a_{11}c_2 - a_{21}c_1$$

$$x_2 = \frac{a_{11}c_2 - a_{21}c_1}{a_{11}a_{22} - a_{12}a_{21}} \qquad x_1 = \frac{a_{22}c_1 - a_{12}c_2}{a_{11}a_{22} - a_{12}a_{21}}$$

NOTE: same result as Cramer's Rule

Gauss Elimination

• One of the earliest methods developed for solving simultaneous equations

• Important algorithm in use today

• Involves combining equations in order to eliminate unknowns and create an upper triangular matrix

• Progressively back substitute to find each unknown

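Two Phases of Gauss Elimination

$$
\left[\begin{array}{ccc|c} a_{11} & a_{12} & a_{13} & c_1\\ a_{21} & a_{22} & a_{23} & c_2\\ a_{31} & a_{32} & a_{33} & c_3 \end{array}\right]
\xrightarrow{\text{Forward Elimination}}
\left[\begin{array}{ccc|c} a_{11} & a_{12} & a_{13} & c_1\\ 0 & a'_{22} & a'_{23} & c'_2\\ 0 & 0 & a''_{33} & c''_3 \end{array}\right]
$$

Note: the prime indicates the number of times the element has changed from the original value.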

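Two Phases of Gauss Elimination

$$
\left[\begin{array}{ccc|c} a_{11} & a_{12} & a_{13} & c_1\\ 0 & a'_{22} & a'_{23} & c'_2\\ 0 & 0 & a''_{33} & c''_3 \end{array}\right]
\xrightarrow{\text{Back substitution}}
\begin{aligned}
x_3 &= c''_3/a''_{33}\\
x_2 &= (c'_2 - a'_{23}x_3)/a'_{22}\\
x_1 &= (c_1 - a_{12}x_2 - a_{13}x_3)/a_{11}
\end{aligned}
$$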

Rules

• Any equation can be multiplied (or divided) by a nonzero scalar

• Any equation can be added to (or subtracted from) another equation

• The positions of any two equations in the set can be interchanged.

EXAMPLE

Perform Gauss elimination on the following system of equations.

$$\begin{aligned} 2x_1 + x_2 + 3x_3 &= 1\\ 4x_1 + 4x_2 + 7x_3 &= 1\\ 2x_1 + 5x_2 + 9x_3 &= 3 \end{aligned}$$

Solution

Multiply the first equation by $a_{21}/a_{11} = 4/2 = 2$. Note: $a_{11}$ is called the pivot element.

$$4x_1 + 2x_2 + 6x_3 = 2$$

Subtract the revised first equation from the second equation:

$$(4 - 4)x_1 + (4 - 2)x_2 + (7 - 6)x_3 = 1 - 2 \quad\Rightarrow\quad 0x_1 + 2x_2 + x_3 = -1$$

NEW MATRIX

$$\begin{aligned} 2x_1 + x_2 + 3x_3 &= 1\\ 0x_1 + 2x_2 + x_3 &= -1\\ 2x_1 + 5x_2 + 9x_3 &= 3 \end{aligned}$$

NOW LET'S GET A ZERO HERE: the $x_1$ coefficient of the third equation.

Solution

Multiply equation 1 by $a_{31}/a_{11} = 2/2 = 1$ and subtract from equation 3:

$$(2 - 2)x_1 + (5 - 1)x_2 + (9 - 3)x_3 = 3 - 1 \quad\Rightarrow\quad 0x_1 + 4x_2 + 6x_3 = 2$$

Solution

Following the same rationale, subtract the first equation from the third equation. The new matrix is:

$$\begin{aligned} 2x_1 + x_2 + 3x_3 &= 1\\ 2x_2 + x_3 &= -1\\ 4x_2 + 6x_3 &= 2 \end{aligned}$$

Continue the computation by multiplying the second equation by $a'_{32}/a'_{22} = 4/2 = 2$.

Subtract the result from the third equation of the new matrix.

Solution

$$\begin{aligned} 2x_1 + x_2 + 3x_3 &= 1\\ 2x_2 + x_3 &= -1\\ 4x_3 &= 4 \end{aligned}$$

THIS DERIVATION OF AN UPPER TRIANGULAR MATRIX IS CALLED THE FORWARD ELIMINATION PROCESS

Solution

From the system we immediately calculate:

$$x_3 = \frac{4}{4} = 1$$

Continue to back substitute:

$$x_2 = \frac{-1 - x_3}{2} = \frac{-1 - 1}{2} = -1$$

$$x_1 = \frac{1 - x_2 - 3x_3}{2} = \frac{1 - (-1) - 3(1)}{2} = -\frac{1}{2}$$

THIS SERIES OF STEPS IS THE BACK SUBSTITUTION
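The forward-elimination and back-substitution steps of this example can be sketched as a naive Gauss elimination in plain Python. The function name is hypothetical. The sketch does no pivoting, so a zero pivot causes a division by zero, which motivates the pitfalls that follow.

```python
def gauss_naive(a, c):
    """Naive Gauss elimination (no pivoting) followed by back substitution."""
    n = len(a)
    a = [row[:] for row in a]    # work on copies so the inputs are not modified
    c = c[:]
    # Forward elimination: create zeros below each pivot a[k][k]
    for k in range(n - 1):
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]        # e.g. a21/a11 = 4/2 = 2
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            c[i] -= factor * c[k]
    # Back substitution: solve for the last unknown first
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (c[i] - s) / a[i][i]
    return x

A = [[2, 1, 3], [4, 4, 7], [2, 5, 9]]
c = [1, 1, 3]
print(gauss_naive(A, c))   # [-0.5, -1.0, 1.0]
```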

Pitfalls of the Elimination Method

• Division by zero

• Round off errors

• magnitude of the pivot element is small compared to other elements

• Ill conditioned systems

Pivoting

• Partial pivoting

• rows are switched so that the pivot element is not zero

• rows are switched so that the largest element is the pivot element

• Complete pivoting

• columns as well as rows are searched for the largest element and switched

• rarely used because switching columns changes the order of the x’s adding unjustified complexity to the computer program

For example

Pivoting is used here to avoid division by zero

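$$
\begin{aligned} 2x_2 + 3x_3 &= 8\\ 4x_1 + 6x_2 + 7x_3 &= -3\\ 2x_1 + x_2 + 6x_3 &= 5 \end{aligned}
\qquad\Longrightarrow\qquad
\begin{aligned} 4x_1 + 6x_2 + 7x_3 &= -3\\ 2x_2 + 3x_3 &= 8\\ 2x_1 + x_2 + 6x_3 &= 5 \end{aligned}
$$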

Another Improvement: Scaling

• Minimizes round-off errors for cases where some of the equations in a system have much larger coefficients than others

• In engineering practice, this is often due to the widely different units used in the development of the simultaneous equations

• As long as each equation is consistent, the system will be technically correct and solvable

Example

(solution in notes)

Use Gauss Elimination to solve the following set of linear equations. Employ partial pivoting when necessary.

$$\begin{aligned} 3x_2 + 13x_3 &= 50\\ 2x_1 + 6x_2 + x_3 &= 45\\ 4x_1 + 8x_3 &= 4 \end{aligned}$$

Solution

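$$\begin{aligned} 3x_2 + 13x_3 &= 50\\ 2x_1 + 6x_2 + x_3 &= 45\\ 4x_1 + 8x_3 &= 4 \end{aligned}$$

First write in matrix form, employing the shorthand presented in class.

$$\left[\begin{array}{ccc|c} 0 & 3 & 13 & 50\\ 2 & 6 & 1 & 45\\ 4 & 0 & 8 & 4 \end{array}\right]$$

We will clearly run into problems of division by zero.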

Use partial pivoting

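$$\left[\begin{array}{ccc|c} 0 & 3 & 13 & 50\\ 2 & 6 & 1 & 45\\ 4 & 0 & 8 & 4 \end{array}\right]$$

Pivot with the equation that has the largest $|a_{i1}|$ (swap the first and third rows):

$$\left[\begin{array}{ccc|c} 4 & 0 & 8 & 4\\ 2 & 6 & 1 & 45\\ 0 & 3 & 13 & 50 \end{array}\right]$$

Subtract 0.5 × (row 1) from row 2:

$$\left[\begin{array}{ccc|c} 4 & 0 & 8 & 4\\ 0 & 6 & -3 & 43\\ 0 & 3 & 13 & 50 \end{array}\right]$$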

Begin developing upper triangular matrix

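$$
\left[\begin{array}{ccc|c} 4 & 0 & 8 & 4\\ 0 & 6 & -3 & 43\\ 0 & 3 & 13 & 50 \end{array}\right]
\xrightarrow{\text{row 3} \,-\, 0.5\,\times\,\text{row 2}}
\left[\begin{array}{ccc|c} 4 & 0 & 8 & 4\\ 0 & 6 & -3 & 43\\ 0 & 0 & 14.5 & 28.5 \end{array}\right]
$$

$$x_3 = \frac{28.5}{14.5} = 1.966 \qquad x_2 = \frac{43 + 3x_3}{6} = 8.149 \qquad x_1 = \frac{4 - 8x_3}{4} = -2.931$$

CHECK: $3x_2 + 13x_3 = 3(8.149) + 13(1.966) \approx 50$ okay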

...end of problem
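Partial pivoting adds one step to the elimination loop. Before eliminating column k, swap in the row (at or below k) with the largest magnitude in that column. The sketch below is minimal plain Python, and the function name is hypothetical. It does not include scaling. It is applied here to this example's system.

```python
def gauss_pivot(a, c):
    """Gauss elimination with partial pivoting, then back substitution."""
    n = len(a)
    a = [row[:] for row in a]    # work on copies
    c = c[:]
    for k in range(n - 1):
        # Partial pivoting: bring the row with the largest |a[i][k]| (i >= k) to row k
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0:
            raise ValueError("matrix is singular")
        if p != k:
            a[k], a[p] = a[p], a[k]
            c[k], c[p] = c[p], c[k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            c[i] -= factor * c[k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (c[i] - s) / a[i][i]
    return x

A = [[0, 3, 13], [2, 6, 1], [4, 0, 8]]
c = [50, 45, 4]
print([round(v, 3) for v in gauss_pivot(A, c)])   # [-2.931, 8.149, 1.966]
```

The same function also handles the earlier pivoting example ($2x_2 + 3x_3 = 8$, ...). The naive version divides by zero on that system because its first pivot is zero.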

Gauss-Jordan

• Variation of Gauss elimination

• Primary motive for introducing this method is that it provides a simple and convenient method for computing the matrix inverse .

• When an unknown is eliminated, it is eliminated from all other equations, rather than just the subsequent one

Gauss-Jordan

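$$
\underbrace{\begin{bmatrix} a_{11} & a_{12} & a_{13}\\ 0 & a_{22} & a_{23}\\ 0 & 0 & a_{33} \end{bmatrix}}_{\text{Gauss elimination}}
\qquad
\underbrace{\begin{bmatrix} 1 & 0 & 0 & 0\\ 0 & 1 & 0 & 0\\ 0 & 0 & 1 & 0\\ 0 & 0 & 0 & 1 \end{bmatrix}}_{\text{Gauss-Jordan}}
$$

• All rows are normalized by dividing them by their pivot elements

• Elimination step results in an identity matrix rather than an upper triangular matrix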

Graphical depiction of Gauss-Jordan

$$
\left[\begin{array}{ccc|c} a_{11} & a_{12} & a_{13} & c_1\\ a_{21} & a_{22} & a_{23} & c_2\\ a_{31} & a_{32} & a_{33} & c_3 \end{array}\right]
\longrightarrow
\left[\begin{array}{ccc|c} 1 & 0 & 0 & c_1^{(n)}\\ 0 & 1 & 0 & c_2^{(n)}\\ 0 & 0 & 1 & c_3^{(n)} \end{array}\right]
\longrightarrow
\begin{aligned} x_1 &= c_1^{(n)}\\ x_2 &= c_2^{(n)}\\ x_3 &= c_3^{(n)} \end{aligned}
$$

Note: the superscript (n) denotes the final, fully transformed right-hand-side values.
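The Gauss-Jordan steps can be sketched in plain Python. For each column, normalize the pivot row so that the pivot becomes 1. Then eliminate that column from every other row, above and below, so that [A] turns into [I] and the right-hand side turns into the solution. The function name is hypothetical. The row swap is an added safeguard against zero pivots and is not part of the method as described above. Each row of `c` may hold several right-hand-side values, so several {c} vectors can be solved at once.

```python
def gauss_jordan(a, c):
    """Reduce the augmented matrix [A | C] to [I | X] and return X (one row per unknown)."""
    n = len(a)
    m = [[float(v) for v in row] + [float(v) for v in rhs] for row, rhs in zip(a, c)]
    for k in range(n):
        # partial pivoting: a safeguard against zero pivots
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        pivot = m[k][k]
        m[k] = [v / pivot for v in m[k]]             # normalize: pivot becomes 1
        for i in range(n):
            if i != k:                               # eliminate in ALL other rows
                f = m[i][k]
                m[i] = [vi - f * vk for vi, vk in zip(m[i], m[k])]
    return [row[n:] for row in m]

A = [[2, 1, 3], [4, 4, 7], [2, 5, 9]]
x = gauss_jordan(A, [[1], [1], [3]])
print([round(row[0], 6) for row in x])    # [-0.5, -1.0, 1.0]
```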

Matrix Inversion

• $[A][A]^{-1} = [A]^{-1}[A] = [I]$

• One application of the inverse is to solve several systems differing only by {c}

• $[A]\{x\} = \{c\}$

• $[A]^{-1}[A]\{x\} = [A]^{-1}\{c\}$

• $[I]\{x\} = \{x\} = [A]^{-1}\{c\}$

• One quick method to compute the inverse is to augment [A] with [I] instead of {c}

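Graphical Depiction of the Gauss-Jordan Method with Matrix Inversion

$$
\left[\begin{array}{ccc|ccc} a_{11} & a_{12} & a_{13} & 1 & 0 & 0\\ a_{21} & a_{22} & a_{23} & 0 & 1 & 0\\ a_{31} & a_{32} & a_{33} & 0 & 0 & 1 \end{array}\right]
\longrightarrow
\left[\begin{array}{ccc|ccc} 1 & 0 & 0 & a_{11}^{-1} & a_{12}^{-1} & a_{13}^{-1}\\ 0 & 1 & 0 & a_{21}^{-1} & a_{22}^{-1} & a_{23}^{-1}\\ 0 & 0 & 1 & a_{31}^{-1} & a_{32}^{-1} & a_{33}^{-1} \end{array}\right]
$$

Note: the superscript "−1" denotes that the original values have been converted to the matrix inverse, not $1/a_{ij}$.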
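Augmenting [A] with [I] and running Gauss-Jordan leaves $[A]^{-1}$ in the right half. The sketch below is minimal plain Python. The function name is hypothetical, and the row swap is an added safeguard against zero pivots. The two closing checks mirror the ill-conditioning checks from earlier: $[A][A]^{-1}$ should be close to [I], and inverting the inverse should give back [A].

```python
def inverse(a):
    """Invert [A] by Gauss-Jordan elimination on the augmented matrix [A | I]."""
    n = len(a)
    m = [[float(v) for v in row] + [1.0 if j == i else 0.0 for j in range(n)]
         for i, row in enumerate(a)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))   # partial pivoting (safeguard)
        if m[p][k] == 0:
            raise ValueError("matrix is singular")
        m[k], m[p] = m[p], m[k]
        pivot = m[k][k]
        m[k] = [v / pivot for v in m[k]]
        for i in range(n):
            if i != k:
                f = m[i][k]
                m[i] = [vi - f * vk for vi, vk in zip(m[i], m[k])]
    return [row[n:] for row in m]       # the right half now holds [A]^-1

A = [[2, 1, 3], [4, 4, 7], [2, 5, 9]]
A_inv = inverse(A)
# [A][A]^-1 should be the identity matrix (to rounding error)
product = [[sum(A[i][k] * A_inv[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
print([[round(v, 10) for v in row] for row in product])
```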

WHEN IS THE INVERSE MATRIX USEFUL?

CONSIDER STIMULUS-RESPONSE CALCULATIONS THAT ARE SO COMMON IN ENGINEERING.

Stimulus-Response Computations

• Conservation laws: mass, force, heat, momentum

• We considered the conservation of force in the earlier example of a truss

Stimulus-Response Computations

• [A]{x}={c}

• [interactions]{response}={stimuli}

• Superposition

• if a system is subject to several different stimuli, the responses can be computed individually and the results summed to obtain a total response

• Proportionality

• multiplying the stimuli by a quantity results in the response to those stimuli being multiplied by the same quantity

• These concepts are inherent in the scaling of terms during the inversion of the matrix

Example

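Given the following, determine {x} for the two different loads {c}:

$$[A]\{x\} = \{c\} \qquad [A]^{-1} = \begin{bmatrix} 2 & -1 & 1\\ -2 & 6 & 3\\ -3 & 1 & -4 \end{bmatrix}$$

$$\{c\}^T = \{1 \;\; 2 \;\; 3\} \qquad \{c\}^T = \{4 \;\; -7 \;\; 1\}$$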

Solution

$$\{x\} = [A]^{-1}\{c\}$$

$\{c\}^T = \{1 \;\; 2 \;\; 3\}$:

$$
\begin{aligned}
x_1 &= (2)(1) + (-1)(2) + (1)(3) = 3\\
x_2 &= (-2)(1) + (6)(2) + (3)(3) = 19\\
x_3 &= (-3)(1) + (1)(2) + (-4)(3) = -13
\end{aligned}
$$

$\{c\}^T = \{4 \;\; -7 \;\; 1\}$:

$$
\begin{aligned}
x_1 &= (2)(4) + (-1)(-7) + (1)(1) = 16\\
x_2 &= (-2)(4) + (6)(-7) + (3)(1) = -47\\
x_3 &= (-3)(4) + (1)(-7) + (-4)(1) = -23
\end{aligned}
$$
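Once $[A]^{-1}$ is known, each new load vector costs only one matrix-vector product. The superposition and proportionality properties can then be checked directly. The sketch below is minimal plain Python. It uses the $[A]^{-1}$ and the two {c} vectors of this example, and the helper name `matvec` is hypothetical.

```python
A_inv = [[2, -1, 1], [-2, 6, 3], [-3, 1, -4]]

def matvec(m, v):
    """Matrix times column vector."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

c1, c2 = [1, 2, 3], [4, -7, 1]
x1, x2 = matvec(A_inv, c1), matvec(A_inv, c2)
print(x1)   # [3, 19, -13]
print(x2)   # [16, -47, -23]

# Superposition: the response to c1 + c2 equals the sum of the individual responses
c_sum = [a + b for a, b in zip(c1, c2)]
print(matvec(A_inv, c_sum) == [a + b for a, b in zip(x1, x2)])      # True
# Proportionality: doubling the stimulus doubles the response
print(matvec(A_inv, [2 * v for v in c1]) == [2 * v for v in x1])    # True
```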

Gauss Seidel Method

• An iterative approach

• Continue until we converge within some prespecified tolerance of error

• Round off is no longer an issue, since you control the level of error that is acceptable

• Fundamentally different from Gauss elimination.

This is an approximate, iterative method particularly good for a large number of equations

Gauss-Seidel Method

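• If the diagonal elements are all nonzero, the first equation can be solved for $x_1$:

$$x_1 = \frac{c_1 - a_{12}x_2 - a_{13}x_3 - \cdots - a_{1n}x_n}{a_{11}}$$

• Solve the second equation for $x_2$, etc.

To assure that you understand this, write the equation for $x_2$:

$$
\begin{aligned}
x_1 &= \frac{c_1 - a_{12}x_2 - a_{13}x_3 - \cdots - a_{1n}x_n}{a_{11}}\\
x_2 &= \frac{c_2 - a_{21}x_1 - a_{23}x_3 - \cdots - a_{2n}x_n}{a_{22}}\\
x_3 &= \frac{c_3 - a_{31}x_1 - a_{32}x_2 - \cdots - a_{3n}x_n}{a_{33}}\\
&\;\;\vdots\\
x_n &= \frac{c_n - a_{n1}x_1 - a_{n2}x_2 - \cdots - a_{n,n-1}x_{n-1}}{a_{nn}}
\end{aligned}
$$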

Gauss-Seidel Method

• Start the solution process by guessing values of x

• A simple way to obtain initial guesses is to assume that they are all zero

• Calculate new values of $x_i$, starting with $x_1 = c_1/a_{11}$

• Progressively substitute through the equations

• Repeat until tolerance is reached
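The procedure can be sketched as follows. Start from zero initial guesses and make one sweep through the equations per iteration, using each new value immediately. Stop when every approximate relative error falls below a tolerance `es` (in percent). This is a minimal plain-Python sketch; the function name and defaults are assumptions. The test system is illustrative and does not come from these notes: $3x_1 - 0.1x_2 - 0.2x_3 = 7.85$, $0.1x_1 + 7x_2 - 0.3x_3 = -19.3$, $0.3x_1 - 0.2x_2 + 10x_3 = 71.4$. It is diagonally dominant, and its exact solution is (3, −2.5, 7).

```python
def gauss_seidel(a, c, es=1e-6, max_iter=100):
    """Gauss-Seidel iteration starting from x = 0.

    Stops when every approximate relative error |(x_new - x_old) / x_new| * 100
    is below es (percent).
    """
    n = len(a)
    x = [0.0] * n                                   # initial guesses: all zero
    for iteration in range(1, max_iter + 1):
        max_error = 0.0
        for i in range(n):
            old = x[i]
            s = sum(a[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (c[i] - s) / a[i][i]             # the new value is used right away
            if x[i] != 0:
                max_error = max(max_error, abs((x[i] - old) / x[i]) * 100)
        if max_error < es:
            return x, iteration
    raise RuntimeError("no convergence within max_iter iterations")

A = [[3, -0.1, -0.2], [0.1, 7, -0.3], [0.3, -0.2, 10]]
c = [7.85, -19.3, 71.4]
x, iterations = gauss_seidel(A, c)
print([round(v, 6) for v in x], iterations)
```

Only the current estimate vector `x` is stored and updated in place. This is part of why the method suits large sparse systems: no elimination fills in the zero coefficients.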

Gauss-Seidel Method

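$$x_1 = \frac{c_1 - a_{12}x_2 - a_{13}x_3}{a_{11}} \qquad x_2 = \frac{c_2 - a_{21}x_1 - a_{23}x_3}{a_{22}} \qquad x_3 = \frac{c_3 - a_{31}x_1 - a_{32}x_2}{a_{33}}$$

First iteration, starting from $x_2 = x_3 = 0$ (primes denote the new values):

$$x'_1 = \frac{c_1 - a_{12}(0) - a_{13}(0)}{a_{11}} = \frac{c_1}{a_{11}} \qquad x'_2 = \frac{c_2 - a_{21}x'_1 - a_{23}(0)}{a_{22}} \qquad x'_3 = \frac{c_3 - a_{31}x'_1 - a_{32}x'_2}{a_{33}}$$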

Example

Given the following augmented matrix, complete one iteration of the Gauss-Seidel method.

[The entries of the augmented matrix and the numerical results of this iteration did not survive extraction.]

GAUSS-SEIDEL, with $x_2 = x_3 = 0$ as initial guesses:

$$x'_1 = \frac{c_1 - a_{12}(0) - a_{13}(0)}{a_{11}} = \frac{c_1}{a_{11}} \qquad x'_2 = \frac{c_2 - a_{21}x'_1 - a_{23}(0)}{a_{22}} \qquad x'_3 = \frac{c_3 - a_{31}x'_1 - a_{32}x'_2}{a_{33}}$$

Jacobi Iteration

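• Iterative like Gauss-Seidel

• Gauss-Seidel immediately uses the new value of $x_i$ in the next equation to predict $x_{i+1}$

• Jacobi calculates a complete set of new $x_i$ values using only the values from the previous iteration, and then uses this whole set to calculate the next set of new $x_i$ values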

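Graphical depiction of difference between Gauss-Seidel and Jacobi

Gauss-Seidel (superscript j = iteration number; each new value is used as soon as it is available):

$$
\begin{aligned}
x_1^{j} &= \left(c_1 - a_{12}x_2^{j-1} - a_{13}x_3^{j-1}\right)/a_{11}\\
x_2^{j} &= \left(c_2 - a_{21}x_1^{j} - a_{23}x_3^{j-1}\right)/a_{22}\\
x_3^{j} &= \left(c_3 - a_{31}x_1^{j} - a_{32}x_2^{j}\right)/a_{33}
\end{aligned}
$$

Jacobi (every value on the right-hand side comes from the previous iteration):

$$
\begin{aligned}
x_1^{j} &= \left(c_1 - a_{12}x_2^{j-1} - a_{13}x_3^{j-1}\right)/a_{11}\\
x_2^{j} &= \left(c_2 - a_{21}x_1^{j-1} - a_{23}x_3^{j-1}\right)/a_{22}\\
x_3^{j} &= \left(c_3 - a_{31}x_1^{j-1} - a_{32}x_2^{j-1}\right)/a_{33}
\end{aligned}
$$

[Figure: the first and second iterations of each method, with arrows showing which values feed each equation.]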
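The only difference between the two methods is where the other $x_j$ values are read from during a sweep. The sketch below is minimal plain Python and makes that a single flag. The function names and the illustrative system (the same one used for the Gauss-Seidel sketch, not from these notes) are assumptions.

```python
def sweep(a, c, x, seidel):
    """One iteration over all equations.

    seidel=True : Gauss-Seidel, each new x_i is used immediately.
    seidel=False: Jacobi, only values from the previous iteration are used.
    """
    n = len(a)
    new = list(x)
    source = new if seidel else x          # where the other x_j values are read from
    for i in range(n):
        s = sum(a[i][j] * source[j] for j in range(n) if j != i)
        new[i] = (c[i] - s) / a[i][i]
    return new

def iterate(a, c, seidel, es=1e-6, max_iter=200):
    """Repeat sweeps from x = 0 until every approximate relative error (%) is below es."""
    x = [0.0] * len(a)
    for iteration in range(1, max_iter + 1):
        new = sweep(a, c, x, seidel)
        error = max((abs((xn - xo) / xn) * 100 for xn, xo in zip(new, x) if xn != 0),
                    default=0.0)
        x = new
        if error < es:
            return x, iteration
    raise RuntimeError("no convergence within max_iter iterations")

A = [[3, -0.1, -0.2], [0.1, 7, -0.3], [0.3, -0.2, 10]]
c = [7.85, -19.3, 71.4]
print(sweep(A, c, [0.0, 0.0, 0.0], seidel=True))    # first Gauss-Seidel iteration
print(sweep(A, c, [0.0, 0.0, 0.0], seidel=False))   # first Jacobi iteration
for seidel in (True, False):
    x, iterations = iterate(A, c, seidel)
    print("Gauss-Seidel" if seidel else "Jacobi", [round(v, 6) for v in x], iterations)
```

After the first sweep the two methods already differ in $x_2$ and $x_3$. Gauss-Seidel used the new $x_1$ to compute them, while Jacobi still used the zero guesses.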

Example

Given the following augmented matrix, complete one iteration of the Gauss-Seidel method and the Jacobi method.

[Augmented matrix: the same as in the earlier Gauss-Seidel example; its entries did not survive extraction.]

Note: We worked the Gauss Seidel method earlier

Gauss-Seidel Method convergence criterion

$$\varepsilon_{a,i} = \left|\frac{x_i^{j} - x_i^{j-1}}{x_i^{j}}\right| \times 100\% < \varepsilon_s$$

As in previous iterative procedures for finding roots, we consider the present and previous estimates.

As with the open methods we studied previously with one-point iteration:

1. The method can diverge

2. May converge very slowly

Convergence criteria for two linear equations

$$u(x_1, x_2) = \frac{c_1}{a_{11}} - \frac{a_{12}}{a_{11}}x_2 \qquad v(x_1, x_2) = \frac{c_2}{a_{22}} - \frac{a_{21}}{a_{22}}x_1$$

Class question: where do these formulas come from?

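Consider the partial derivatives of u and v:

$$\frac{\partial u}{\partial x_1} = 0 \qquad \frac{\partial u}{\partial x_2} = -\frac{a_{12}}{a_{11}} \qquad \frac{\partial v}{\partial x_1} = -\frac{a_{21}}{a_{22}} \qquad \frac{\partial v}{\partial x_2} = 0$$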

Convergence criteria for two linear equations cont.

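$$\left|\frac{\partial u}{\partial x}\right| + \left|\frac{\partial u}{\partial y}\right| < 1 \qquad \left|\frac{\partial v}{\partial x}\right| + \left|\frac{\partial v}{\partial y}\right| < 1$$

Criteria for convergence were presented earlier in the class material for nonlinear equations.

Noting that $x = x_1$ and $y = x_2$, and substituting the previous equations: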

Convergence criteria for two linear equations cont.

$$\left|\frac{a_{21}}{a_{22}}\right| < 1 \qquad \left|\frac{a_{12}}{a_{11}}\right| < 1$$

This is stating that the absolute values of the slopes must be less than unity to ensure convergence.

Extended to n equations:

$$|a_{ii}| > \sum_{\substack{j=1\\ j\neq i}}^{n} |a_{ij}|$$

This condition is sufficient but not necessary for convergence.

When met, the matrix is said to be diagonally dominant.

Diagonal Dominance

[Example system $[A]\{x\} = \{c\}$ with three unknowns $x_1, x_2, x_3$; the layout of its coefficients did not survive extraction.]

To determine whether a matrix is diagonally dominant, you need to compare the magnitude of each value on the diagonal with the magnitudes of the other values in the same row.


Now, check to see if these numbers satisfy the following rule for each row (note: each row represents a unique equation):

$$|a_{ii}| > \sum_{\substack{j=1\\ j\neq i}}^{n} |a_{ij}|$$
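The row-by-row rule can be checked mechanically. The sketch below is minimal plain Python, and the function name is hypothetical. It is applied to an illustrative 3 × 3 system (not from these notes) and to the two equations u and v used in the graphical illustration that follows, written in both orders.

```python
def is_diagonally_dominant(a):
    """True if |a_ii| > sum of |a_ij| over j != i, for every row i."""
    return all(abs(row[i]) > sum(abs(v) for j, v in enumerate(row) if j != i)
               for i, row in enumerate(a))

A = [[3, -0.1, -0.2], [0.1, 7, -0.3], [0.3, -0.2, 10]]
print(is_diagonally_dominant(A))                      # True

# u: 11 x1 + 13 x2 = 286 and v: 11 x1 - 9 x2 = 99
print(is_diagonally_dominant([[11, 13], [11, -9]]))   # False (u first, then v)
print(is_diagonally_dominant([[11, -9], [11, 13]]))   # True  (v first, then u)
```

Because the condition is sufficient but not necessary, a `False` result does not prove that Gauss-Seidel will diverge. It only means convergence is not guaranteed.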

Review the concepts of divergence and convergence by graphically illustrating Gauss-Seidel for two linear equations:

$$u:\; 11x_1 + 13x_2 = 286 \qquad v:\; 11x_1 - 9x_2 = 99$$

CONVERGENCE

[Figure: lines v and u in the $x_1$, $x_2$ plane, with v solved for $x_1$ and u solved for $x_2$; the iterates zigzag toward the intersection.]

Note: we are converging on the solution

DIVERGENCE

Change the order of the equations, i.e. change the direction of the initial estimates.

[Figure: the same lines with u solved for $x_1$ and v solved for $x_2$; the iterates move away from the intersection.]
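The two pictures can be reproduced numerically. The sketch below is minimal plain Python, and the function name is hypothetical. It runs Gauss-Seidel on u and v in both orders, starting from $x_2 = 0$, and records the estimate after each iteration.

```python
def gauss_seidel_two(first, second, iterations, x2=0.0):
    """Gauss-Seidel for two equations given as (a, b, c) meaning a*x1 + b*x2 = c.

    The first equation is solved for x1 and the second for x2.
    Returns the (x1, x2) estimate after each iteration.
    """
    a1, b1, c1 = first
    a2, b2, c2 = second
    history = []
    for _ in range(iterations):
        x1 = (c1 - b1 * x2) / a1
        x2 = (c2 - a2 * x1) / b2
        history.append((x1, x2))
    return history

u = (11, 13, 286)    # u: 11 x1 + 13 x2 = 286
v = (11, -9, 99)     # v: 11 x1 -  9 x2 = 99

converging = gauss_seidel_two(v, u, iterations=50)   # v solved for x1, u for x2
diverging = gauss_seidel_two(u, v, iterations=6)     # order changed
print(converging[-1])   # close to the intersection (15.9545..., 8.5)
for x1, x2 in diverging:
    print(round(x1, 2), round(x2, 2))                # estimates move farther away
```

In the converging order, each sweep multiplies the error in $x_2$ by $-9/13$, whose magnitude is less than 1. In the diverging order the factor is $-13/9$, whose magnitude exceeds 1, which matches the slope criterion above.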

Improvement of Convergence Using Relaxation

This is a modification that will enhance slow convergence.

After each new value of x is computed, calculate a new value based on a weighted average of the present and previous iteration:

$$x_i^{\text{new}} = \lambda\, x_i^{\text{new}} + (1 - \lambda)\, x_i^{\text{old}}$$

• if λ = 1: unmodified

• if 0 < λ < 1: underrelaxation

• nonconvergent systems may converge

• hasten convergence by dampening out oscillations

• if 1 < λ < 2: overrelaxation

• extra weight is placed on the present value

• assumption that the new value is moving toward the correct solution, but too slowly
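Relaxation changes only one line of the Gauss-Seidel update. The value from the equation is blended with the previous value using the weight λ (`lam` below). The sketch below is minimal plain Python. The function name, defaults and illustrative system (not from these notes; exact solution (3, −2.5, 7)) are assumptions.

```python
def gauss_seidel_relaxed(a, c, lam=1.0, es=1e-6, max_iter=500):
    """Gauss-Seidel with relaxation: x_new = lam * x_new + (1 - lam) * x_old."""
    n = len(a)
    x = [0.0] * n
    for iteration in range(1, max_iter + 1):
        max_error = 0.0
        for i in range(n):
            old = x[i]
            s = sum(a[i][j] * x[j] for j in range(n) if j != i)
            gs_value = (c[i] - s) / a[i][i]              # plain Gauss-Seidel value
            x[i] = lam * gs_value + (1 - lam) * old      # weighted average
            if x[i] != 0:
                max_error = max(max_error, abs((x[i] - old) / x[i]) * 100)
        if max_error < es:
            return x, iteration
    raise RuntimeError("no convergence within max_iter iterations")

A = [[3, -0.1, -0.2], [0.1, 7, -0.3], [0.3, -0.2, 10]]
c = [7.85, -19.3, 71.4]
for lam in (0.8, 1.0, 1.2):      # underrelaxation, unmodified, overrelaxation
    x, iterations = gauss_seidel_relaxed(A, c, lam)
    print(lam, [round(v, 4) for v in x], iterations)
```

Gauss-Seidel already converges quickly on this system, so the run only shows that all three weights reach the same answer. The best λ is problem-specific and is usually found by experiment.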