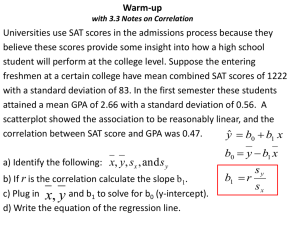

Chapter 3 Linear Regression and Correlation

advertisement

Chapter 3 Linear Regression and Correlation Descriptive Analysis & Presentation of Two Quantitative Data Chapter Objectives • To be able to present two-variables data in tabular and graphic form • Display the relationship between two quantitative variables graphically using a scatter diagram. • Calculate and interpret the linear correlation coefficient. • Discuss basic idea of fitting the scatter diagram with a best-fitted line called a linear regression line. • Create and interpret the linear regression line. Terminology • Data for a single variable is univariate data • Many or most real world models have more than one variable … multivariate data • In this chapter we will study the relations between two variables … bivariate data Bivariate Data • In many studies, we measure more than one variable for each individual • Some examples are – Rainfall amounts and plant growth – Exercise and cholesterol levels for a group of people – Height and weight for a group of people Types of Relations When we have two variables, they could be related in one of several different ways – They could be unrelated – One variable (the input or explanatory or predictor variable) could be used to explain the other (the output or response or dependent variable) – One variable could be thought of as causing the other variable to change Note: When two variables are related to each other, one variable may not cause the change of the other variable. Relation does not always mean causation. Lurking Variable • Sometimes it is not clear which variable is the explanatory variable and which is the response variable • Sometimes the two variables are related without either one being an explanatory variable • Sometimes the two variables are both affected by a third variable, a lurking variable, that had not been included in the study Example 1 • An example of a lurking variable • A researcher studies a group of elementary school children – Y = the student’s height – X = the student’s shoe size • It is not reasonable to claim that shoe size causes height to change • The lurking variable of age affects both of these two variables More Examples • Some other examples • Rainfall amounts and plant growth – Explanatory variable – rainfall – Response variable – plant growth – Possible lurking variable – amount of sunlight • Exercise and cholesterol levels – Explanatory variable – amount of exercise – Response variable – cholesterol level – Possible lurking variable – diet Types of Bivariate Data Three combinations of variable types: 1. Both variables are qualitative (attribute) 2. One variable is qualitative (attribute) and the other is quantitative (numerical) 3. Both variables are quantitative (both numerical) Two Qualitative Variables • When bivariate data results from two qualitative (attribute or categorical) variables, the data is often arranged on a cross-tabulation or contingency table Example: A survey was conducted to investigate the relationship between preferences for television, radio, or newspaper for national news, and gender. The results are given in the table below: Male Female TV 280 115 Radio 175 275 NP 305 170 Marginal Totals • This table, may be extended to display the marginal totals (or marginals). The total of the marginal totals is the grand total: Male Female Col. Totals TV 280 115 395 Radio 175 275 450 NP Row Totals 305 760 170 560 475 1320 Note: Contingency tables often show percentages (relative frequencies). These percentages are based on the entire sample or on the subsample (row or column) classifications. Percentages Based on the Grand Total (Entire Sample) • The previous contingency table may be converted to percentages of the grand total by dividing each frequency by the grand total and multiplying by 100 – For example, 175 becomes 13.3% 175 = 100 13.3 1320 Male Female Col. Totals TV 21.2 8.7 29.9 Radio 13.3 20.8 34.1 NP Row Totals 23.1 57.6 12.9 42.4 36.0 100.0 Illustration • These same statistics (numerical values describing sample results) can be shown in a (side-by-side) bar graph: Percentages Based on Grand Total 25 Male 20 Female 15 Percent 10 5 0 TV Radio Media NP Percentages Based on Row (Column) Totals • The entries in a contingency table may also be expressed as percentages of the row (column) totals by dividing each row (column) entry by that row’s (column’s) total and multiplying by 100. The entries in the contingency table below are expressed as percentages of the column totals: Male Male Female Female Col. Col.Totals Totals TV TV Radio Radio NP NP Row RowTotals Totals 70.9 70.9 38.9 38.9 64.2 64.2 57.6 57.6 29.1 29.1 61.1 61.1 35.8 35.8 42.4 42.4 100.0 100.0 100.0 100.0 100.0 100.0 100.0 100.0 Note:These statistics may also be displayed in a side-by-side bar graph One Qualitative & One Quantitative Variable 1. When bivariate data results from one qualitative and one quantitative variable, the quantitative values are viewed as separate samples 2. Each set is identified by levels of the qualitative variable 3. Each sample is described using summary statistics, and the results are displayed for side-by-side comparison 4. Statistics for comparison: measures of central tendency, measures of variation, 5-number summary 5. Graphs for comparison: side-by-side stemplot and boxplot Example Example: A random sample of households from three different parts of the country was obtained and their electric bill for June was recorded. The data is given in the table below: Northeast Northeast 23.75 23.75 40.50 40.50 33.65 33.65 31.25 31.25 42.55 42.55 50.60 50.60 37.70 37.70 31.55 31.55 38.85 38.85 21.25 21.25 Midwest Midwest 34.38 34.38 34.35 34.35 39.15 39.15 37.12 37.12 36.71 36.71 34.39 34.39 35.12 35.12 35.80 35.80 37.24 37.24 40.01 40.01 West West 54.54 54.54 65.60 65.60 59.78 59.78 45.12 45.12 60.35 60.35 61.53 61.53 52.79 52.79 47.37 47.37 59.64 59.64 37.40 37.40 • The part of the country is a qualitative variable with three levels of response. The electric bill is a quantitative variable. The electric bills may be compared with numerical and graphical techniques. Comparison Using Box-and-Whisker Plots 70 The Monthly Electric Bill 60 50 Electric Bill 40 30 20 Northeast Midwest West The electric bills in the Northeast tend to be more spread out than those in the Midwest. The bills in the West tend to be higher than both those in the Northeast and Midwest. Descriptive Statistics for Two Quantitative Variables Scatter Diagrams and correlation coefficient Two Quantitative Variables • The most useful graph to show the relationship between two quantitative variables is the scatter diagram • Each individual is represented by a point in the diagram – The explanatory (X) variable is plotted on the horizontal scale – The response (Y) variable is plotted on the vertical scale Example Example: In a study involving children’s fear related to being hospitalized, the age and the score each child made on the Child Medical Fear Scale (CMFS) are given in the table below: Age (x ) CMFS (y ) 8 9 9 10 11 9 8 9 8 11 31 25 40 27 35 29 25 34 44 19 Age (x ) CMFS (y ) 7 6 6 8 9 12 15 13 10 10 28 47 42 37 35 16 12 23 26 36 Construct a scatter diagram for this data Solution • age = input variable, CMFS = output variable Child Medical Fear Scale 50 40 CMFS 30 20 10 6 7 8 9 10 11 Age 12 13 14 15 Another Example • An example of a scatter diagram Note: the vertical scale is truncated to illustrate the detail relation! Types of Relations • There are several different types of relations between two variables – A relationship is linear when, plotted on a scatter diagram, the points follow the general pattern of a line – A relationship is nonlinear when, plotted on a scatter diagram, the points follow a general pattern, but it is not a line – A relationship has no correlation when, plotted on a scatter diagram, the points do not show any pattern Linear Correlations • Linear relations or linear correlations have points that cluster around a line • Linear relations can be either positive (the points slants upwards to the right) or negative (the points slant downwards to the right) Positive Correlations • For positive (linear) correlation – Above average values of one variable are associated with above average values of the other (above/above, the points trend right and upwards) – Below average values of one variable are associated with below average values of the other (below/below, the points trend left and downwards) Example: Positive Correlation • As x increases, y also increases: 60 50 Output 40 30 20 10 15 20 25 30 35 Input 40 45 50 55 Negative Correlations • For negative (linear) correlation – Above average values of one variable are associated with below average values of the other (above/below, the points trend right and downwards) – Below average values of one variable are associated with above average values of the other (below/above, the points trend left and upwards) Example: Negative Correlation • As x increases, y decreases: 95 85 Output 75 65 55 10 15 20 25 30 35 Input 40 45 50 55 Nonlinear Correlations • Nonlinear relations have points that have a trend, but not around a line • The trend has some bend in it No Correlations • When two variables are not related – There is no linear trend – There is no nonlinear trend • Changes in values for one variable do not seem to have any relation with changes in the other Example: No Correlation • As x increases, there is no definite shift in y: 55 Output 45 35 10 20 Input 30 Distinction between Nonlinear & No Correlation Nonlinear relations and no relations are very different – Nonlinear relations are definitely patterns … just not patterns that look like lines – No relations are when no patterns appear at all Example • Examples of nonlinear relations – “Age” and “Height” for people (including both children and adults) – “Temperature” and “Comfort level” for people • Examples of no relations – “Temperature” and “Closing price of the Dow Jones Industrials Index” (probably) – “Age” and “Last digit of telephone number” for adults Please Note Perfect positive correlation: all the points lie along a line with positive slope Perfect negative correlation: all the points lie along a line with negative slope If the points lie along a horizontal or vertical line: no correlation If the points exhibit some other nonlinear pattern: nonlinear relationship Need some way to measure the strength of correlation Linear Correlation Coefficient Measure of Linear Correlation • The linear correlation coefficient is a measure of the strength of linear relation between two quantitative variables • The sample correlation coefficient “r” is r = ( xi x ) ( y i y ) sx sy n 1 X , Y , Sx , S y are the sample means and sample variances Note: of the two variables X and Y. Properties of Linear Correlation Coefficients Some properties of the linear correlation coefficient – r is a unitless measure (so that r would be the same for a data set whether x and y are measured in feet, inches, meters etc.) – r is always between –1 and +1. r = -1 : perfect negative correlation r = +1: perfect positive correlation – Positive values of r correspond to positive relations – Negative values of r correspond to negative relations Various Expressions for r There are other equivalent expressions for the linear correlation r as shown below: (x r= x )( y y ) (n 1) S x S y r= ( x x )( y y ) ( x x ) ( y y) 2 2 However, it is much easier to compute r using the short-cut formula shown on the next slide. Short-Cut Formula for r SS( xy) r= SS( x)SS( y) SS( x ) = “sum of squ ares for x”= x 2 SS( y ) = “sum of squ ares for y”= y 2 ( x) 2 n ( y) 2 n SS( xy ) = “sum of squ ares for xy”= xy x y n Example The table below presents the weight (in thousands of Example: pounds) x and the gasoline mileage (miles per gallon) y for ten different automobiles. Find the linear correlation coefficient: x Sum Sum Sum y x 2 2.5 2.5 2.5 3.0 3.0 3.0 4.0 4.0 4.0 3.5 3.5 3.5 2.7 2.7 2.7 4.5 4.5 4.5 3.8 3.8 3.8 2.9 2.9 2.9 5.0 5.0 5.0 2.2 2.2 2.2 34.1 34.1 34.1 40 40 40 43 43 43 30 30 30 35 35 35 42 42 42 19 19 19 32 32 32 39 39 39 15 15 15 14 14 14 309 309 309 6.25 6.25 6.25 9.00 9.00 9.00 16.00 16.00 16.00 12.25 12.25 12.25 7.29 7.29 7.29 20.25 20.25 20.25 14.44 14.44 14.44 8.41 8.41 8.41 25.00 25.00 25.00 4.84 4.84 4.84 123.73 123.73 123.73 x y x2 y2 1600 1600 1600 1849 1849 1849 900 900 900 1225 1225 1225 1764 1764 1764 361 361 361 1024 1024 1024 1521 1521 1521 225 225 225 196 196 196 10665 10665 10665 y2 xy 100.0 100.0 100.0 129.0 129.0 129.0 120.0 120.0 120.0 122.5 122.5 122.5 113.4 113.4 113.4 85.5 85.5 85.5 121.6 121.6 121.6 113.1 113.1 113.1 75.0 75.0 75.0 30.8 30.8 30.8 1010.9 1010.9 1010.9 xy Completing the Calculation for r SS( x) = x) ( 2 x n SS( y ) = y SS( xy) = r= 2 2 y) ( n 2 (34.1) 2 = 123.73 = 7.449 10 (309) 2 = 10665 = 1116.9 10 x y (34.1)(309) xy = 1010.9 = 42.79 SS ( xy ) = SS ( x )SS ( y ) n 10 42.79 ( 7.449 )(1116 .9 ) = 0.47 Please Note r is usually rounded to the nearest hundredth r close to 0: little or no linear correlation As the magnitude of r increases, towards -1 or +1, there is an increasingly stronger linear correlation between the two variables We’ll also learn to obtain the linear correlation coefficient from the graphing calculator. Positive Correlation Coefficients • Examples of positive correlation Strong Positive r = .8 Moderate Positive r = .5 Very Weak r = .1 • In general, if the correlation is visible to the eye, then it is likely to be strong Negative Correlation Coefficients • Examples of negative correlation Strong Negative r = –.8 Moderate Negative r = –.5 Very Weak r = –.1 • In general, if the correlation is visible to the eye, then it is likely to be strong Nonlinear versus No Correlation • Nonlinear correlation and no correlation Nonlinear Relation No Relation • Both sets of variables have r = 0.1, but the difference is that the nonlinear relation shows a clear pattern Interpret the Linear Correlation Coefficients • Correlation is not causation! • Just because two variables are correlated does not mean that one causes the other to change • There is a strong correlation between shoe sizes and vocabulary sizes for grade school children – Clearly larger shoe sizes do not cause larger vocabularies – Clearly larger vocabularies do not cause larger shoe sizes • Often lurking variables result in confounding How to Determine a Linear Correlation? • How large does the correlation coefficient have to be before we can say that there is a relation? • We’re not quite ready to answer that question Summary • Correlation between two variables can be described with both visual and numeric methods • Visual methods – Scatter diagrams – Analogous to histograms for single variables • Numeric methods – Linear correlation coefficient – Analogous to mean and variance for single variables • Care should be taken in the interpretation of linear correlation (nonlinearity and causation) Linear Regression Line Learning Objectives • Find the regression line to fit the data and use the line to make predictions • Interpret the slope and the y-intercept of the regression line • Compute the sum of squared residuals Regression Analysis • Regression analysis finds the equation of the line that best describes the relationship between two variables • One use of this equation: to make predictions Best Fitted Line • If we have two variables X and Y which tend to be linearly correlated, we often would like to model the relation with a line that best fits to the data. • Draw a line through the scatter diagram • We want to find the line that “best” describes the linear relationship … the regression line Residuals • One difference between math and stat is that statistics assumes that the measurements are not exact, that there is an error or residual • The formula for the residual is always Residual = Observed – Predicted • This relationship is not just for this chapter … it is the general way of defining error in statistics What is a Residual? • Here shows a residual on the scatter The residual The regression line diagram The observed value y The predicted value y The x value of interest Example • For example, say that we want to predict a value of y for a specific value of x – Assume that we are using y = 10 x + 25 as our model – To predict the value of y when x = 3, the model gives us y = 10 3 + 25 = 55, or a predicted value of 55 – Assume the actual value of y for x = 3 is equal to 50 – The actual value is 50, the predicted value is 55, so the residual (or error) is 50 – 55 = –5 Method of Least Squares • • We want to minimize the prediction errors or residuals, but we need to define what this means We use the method of least-squares which involves the following 3 steps: 1. We consider a possible linear model to fit the data 2. We calculate the residual for each point 3. We add up the squares of the residuals ( We square all of the residuals to avoid the cancellation of positive residuals and negative residuals, since some observed values are under predicted, some of the observed valued are over predicted by the proposed linear model.) • The line that has the smallest overall residuals ( i.e. the sum of all the squares of the residuals) is called the least-squares regression line or simply the regression line which is the best-fitted line to the data. Method of Least Squares • Assume the equation of the best-fitting line: yˆ =b0 b1 x Where yˆ (called, y hat) denotes the predicted value of • Least squares method: Find the constants b0 and b1 such that the sum 2 2 ˆ ( y y ) = ( y ( b b x )) 0 1 of the overall prediction errors is as small as possible y Illustration • Observed and predicted values of y: y ^y = b0 b1 x ( x, y) y ^y ( x , ^y ) ^y y x Linear Regression Line • The equation for the regression line is given by yˆ =b0 b1 x – Yˆ denotes the predicted value for the response variable. – b1 is the slope of the least-squares regression line – b0 is the y-intercept of the least-squares regression line Note: Different textbooks may use different notations for the slope and the intercept. Find the Equation of a Linear Regression Line • The equation is determined by: b0: y-intercept b1: slope • Values that satisfy the least squares criterion: ( x x )( y y ) SS( xy ) b1 = = 2 SS( x ) ( x x) y (b1 x ) b0 = = y (b1 x) n Example Example: A recent article measured the job satisfaction of subjects with a 14-question survey. The data below represents the job satisfaction scores, y, and the salaries, x, for a sample of similar individuals: x y 31 17 33 20 22 13 24 15 35 18 29 17 23 12 37 21 1) Draw a scatter diagram for this data 2) Find the equation of the line of best fit (i.e., regression line) Finding b1 & b0 • Preliminary calculations needed to find b1 and b0: x 23 23 23 23 31 31 31 31 33 33 33 33 22 22 22 22 24 24 24 24 35 35 35 35 29 29 29 29 37 37 37 37 234 234 234 234 x y xy x2 12 12 12 12 529 529 529 529 276 276 276 276 17 17 17 17 961 961 961 961 527 527 527 527 20 20 20 20 1089 1089 1089 1089 660 660 660 660 13 13 13 13 484 484 484 484 286 286 286 286 15 15 15 15 576 576 576 576 360 360 360 360 18 18 18 18 1225 1225 1225 1225 630 630 630 630 17 17 17 17 841 841 841 841 493 493 493 493 21 21 21 21 1369 1369 1369 1369 777 777 777 777 133 133 133 133 7074 7074 7074 7074 4009 4009 4009 4009 y x2 xy Linear Regression Line x) 234 ( x = 7074 = 229.5 2 SS( x ) = 2 b1 = b0 8 n SS( xy ) = 2 x y (234)(133) xy = 4009 = 118.75 n 8 SS( xy ) = 118.75 = 0.5174 SS( x ) 229.5 y (b1 x ) 133 (0. 5174)(234) = = = 14902 . n 8 . 0. 517 x Solution 1) Equation of the line of best fit: ^y = 149 Scatter Diagram Solution 2) Job Satisfaction Survey 22 21 20 19 18 Job Satisfaction 17 16 15 14 13 12 21 23 25 27 29 Salary 31 33 35 37 Please Note Keep at least three extra decimal places while doing the calculations to ensure an accurate answer When rounding off the calculated values of b0 and b1, always keep at least two significant digits in the final answer The slope b1 represents the predicted change in y per unit increase in x The y-intercept is the value of y where the line of best fit intersects the y-axis. That is, it is the predicted value of y when x is zero. The line of best fit will always pass through the point ( x, y) Please Note • Finding the values of b1 and b0 is a very tedious process • We should also know to use Graphing calculator for this • Finding the coefficients b1 and b0 is only the first step of a regression analysis – We need to interpret the slope b1 – We need to interpret the y-intercept b0 Making Predictions 1. One of the main purposes for obtaining a regression equation is for making predictions 2. For a given value of x, we can predict a value of ^y 3. The regression equation should be used only to cover the sample domain on the input variable. You can estimate values outside the domain interval, but use caution and use values close to the domain interval. 4. Use current data. A sample taken in 1987 should not be used to make predictions in 1999. Interpret the Slope • Interpreting the slope b1 – The slope is sometimes defined as as Rise Run – The slope is also sometimes defined as as Change in y Change in x • The slope relates changes in y to changes in x Interpret the Slope • For example, if b1 = 4 – If x increases by 1, then y will increase by 4 – If x decreases by 1, then y will decrease by 4 – A positive linear relationship • For example, if b1 = –7 – If x increases by 1, then y will decrease by 7 – If x decreases by 1, then y will increase by 7 – A negative linear relationship Example • For example, say that a researcher studies the population in a town (which is the y or response variable) in each year (which is the x or predictor variable) – To simplify the calculations, years are measured from 1900 (i.e. x = 55 is the year 1955) • The model used is y = 300 x + 12,000 • A slope of 300 means that the model predicts that, on the average, the population increases by 300 per year. • An intercept of 12,000 means that the model predicts that the town had a population of 12,000 in the year 1900 (i.e. when x = 0) Interpret the y-intercept • Interpreting the y-intercept b0 • Sometimes b0 has an interpretation, and sometimes not – If 0 is a reasonable value for x, then b0 can be interpreted as the value of y when x is 0 – If 0 is not a reasonable value for x, then b0 does not have an interpretation • In general, we should not use the model for values of x that are much larger or much smaller than the observed values of x included (that is, it may be invalid to predict y for x values lying outside the range of the observed x.) Summary • Summarize two quantitative data – Scatter diagrams – Correlation coefficients • Linear models of correlation – Least-squares regression line – Prediction Obtain Linear Correlation Coefficient and Regression Line Equation from TI Calculator 1. Turn on the diagnostic tool: CATALOG[2nd 0] DiagnosticOn ENTER ENTER 2. Enter the data: STAT EDIT. Enter the x-variable data into L1 and the corresponding y-variable data into L2 3. Obtain regression line and the linear correlation r: STAT CALC 4:LinReg(ax+b) ENTER L1, L2, Y1 (Notice: to enter Y1, use VARS Y-VARS 1:Function 1:Y1 ENTER). (The screen will also show r2. Just ignore it.) 4. Display the scatter diagram and the fitted regression line: Zoom 9:ZoomStat TRACE (press up or down arrow keys to move the cursor to the regression line. Now, you can trace the points along the line by pressing the right or left arrow keys. While the cursor is on the regression line, you can also enter a number, the screen will show the predicted value of y for the x value you just entered.)