Semi-Supervised Learning in Gigantic Image Collections

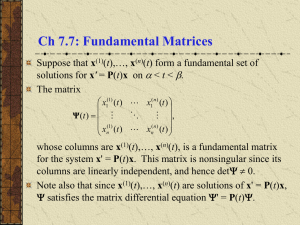


Rob Fergus (New York University), Yair Weiss (Hebrew University), Antonio Torralba (MIT)

What does the world look like?
• Gigantic image collections
• High-level image statistics
• Object recognition for large-scale image search

Spectrum of Label Information
Human annotations, noisy labels, unlabeled

Semi-Supervised Learning
[Figure panels: Data, Supervised, Semi-Supervised]
• Classification function should be smooth with respect to data density

Semi-Supervised Learning using Graph Laplacian [Zhu03, Zhou04]
• $W$ is the n x n affinity matrix (n = # of points):
  $$W_{ij} = \exp(-\|x_i - x_j\|^2 / 2\epsilon^2)$$
• Graph Laplacian:
  $$L = I - D^{-1/2} W D^{-1/2}, \qquad D_{ii} = \sum_j W_{ij}$$

SSL using Graph Laplacian
• Want to find label function $f$ that minimizes:
  $$f^T L f + (f - y)^T \Lambda (f - y)$$
  The first term measures smoothness; the second measures agreement with labels.
• $y$ = labels
• If point $i$ is labeled, $\Lambda_{ii} = \lambda$; otherwise $\Lambda_{ii} = 0$
• Solution: n x n system (n = # points), $(L + \Lambda) f = \Lambda y$

Eigenvectors of Laplacian
• Smooth vectors will be linear combinations of eigenvectors $U$ with small eigenvalues [Belkin & Niyogi 06, Schoelkopf & Smola 02, Zhu et al 03, 08]:
  $$f = U\alpha, \qquad U = [\phi_1, \ldots, \phi_k]$$

Rewrite System
• Let $f = U\alpha$
• $U$ = smallest k eigenvectors of $L$
• $\alpha$ = coefficients
• k is a user parameter (typically ~100)
• Optimal $\alpha$ is now the solution to a k x k system:
  $$(\Sigma + U^T \Lambda U)\alpha = U^T \Lambda y$$

Computational Bottleneck
• Consider a dataset of 80 million images
• Inverting $L$
  – Inverting an 80 million x 80 million matrix
• Finding eigenvectors of $L$
  – Diagonalizing an 80 million x 80 million matrix

Large Scale SSL - Related work
• Nystrom method: pick a small set of landmark points [see Zhu '08 survey]
  – Compute exact eigenvectors on these
  – Interpolate solution to the rest
  [Figure panels: Data, Landmarks]
• Other approaches include: Mixture models (Zhu and Lafferty '05), Sparse Grids (Garcke and Griebel '05), Sparse Graphs (Tsang and Kwok '06)

Our Approach

Overview of Our Approach
• Compute approximate eigenvectors
• Nystrom: Data → Landmarks (reduce n); polynomial in number of landmarks
• Ours: Data → Density (limit as n → ∞); linear in number of data points

Consider Limit as n → ∞
• Consider $x$ to be drawn from a 2D distribution $p(x)$
• Let $L_p(F)$ be a smoothness operator on $p(x)$, for a function $F(x)$
• Smoothness operator penalizes functions that vary in areas of high density
• Analyze eigenfunctions of $L_p(F)$

Eigenvectors & Eigenfunctions

Key Assumption: Separability of Input data
[Figure panels: $p(x_1)$, $p(x_2)$, $p(x_1, x_2)$]
• Claim: If $p$ is separable, then eigenfunctions of the marginals are also eigenfunctions of the joint density, with the same eigenvalue [Nadler et al. 06, Weiss et al. 08]

Numerical Approximations to Eigenfunctions in 1D
• 300,000 points drawn from distribution $p(x)$
• Consider $p(x_1)$
[Figure panels: Data $p(x)$, marginal $p(x_1)$, Histogram $h(x_1)$]

Numerical Approximations to Eigenfunctions in 1D
• Solve for values of eigenfunction at a set of discrete locations (histogram bin centers)
  – and associated eigenvalues
  – B x B system (B = # histogram bins, e.g.
50)

1D Approximate Eigenfunctions
[Figure panels: 1st, 2nd and 3rd eigenfunctions of $h(x_1)$]

Separability over Dimension
• Build histogram over dimension 2: $h(x_2)$
• Now solve for eigenfunctions of $h(x_2)$
[Figure panels: 1st, 2nd and 3rd eigenfunctions of $h(x_2)$]

From Eigenfunctions to Approximate Eigenvectors
• Take each data point
• Do 1-D interpolation in each eigenfunction
• Very fast operation
[Figure: eigenfunction value against histogram bin (1 to 50)]

Preprocessing
• Need to make data separable
• Rotate using PCA
[Figure panels: Not separable → PCA → Separable]

Overall Algorithm
1. Rotate data to maximize separability (currently use PCA)
2. For each of the d input dimensions:
   – Construct 1D histogram
   – Solve numerically for eigenfunctions/values
3. Order eigenfunctions from all dimensions by increasing eigenvalue & take first k
4. Interpolate data into k eigenfunctions
   – Yields approximate eigenvectors of Laplacian
5. Solve k x k least squares system to give label function

Experiments on Toy Data

Nystrom Comparison
• With Nystrom, too few landmark points result in highly unstable eigenvectors

Nystrom Comparison
• Eigenfunctions fail when data has significant dependencies between dimensions

Experiments on Real Data

Experiments
• Images from 126 classes downloaded from Internet search engines, total 63,000 images (e.g. Dump truck, Emu)
• Labels (correct/incorrect) provided by Alex Krizhevsky, Vinod Nair & Geoff Hinton (CIFAR & U. Toronto)

Input Image Representation
• Pixels not a convenient representation
• Use Gist descriptor (Oliva & Torralba, 2001)
• L2 distance between Gist vectors is a rough substitute for human perceptual distance
• Apply oriented Gabor filters over different scales
• Average filter energy in each bin

Are Dimensions Independent?
• Joint histogram for pairs of dimensions from raw 384-dimensional Gist
• Joint histogram for pairs of dimensions after PCA to 64 dimensions
• MI is mutual information score.
0 = Independent

Real 1-D Eigenfunctions of PCA'd Gist descriptors
[Figure: eigenfunction index against input dimension (1 to 64)]

Protocol
• Task is to re-rank images of each class (class/non-class)
• Use eigenfunctions computed on all 63,000 images
• Vary number of labeled examples
• Measure precision @ 15% recall
[Figure series, 4 panels: axis "Total number of images", ticks 4800, 5000, 6000, 8000]

80 Million Images

Running on 80 million images
• PCA to 32 dims, k = 48 eigenfunctions
• For each class, labels propagating through 80 million images
• Precompute approximate eigenvectors (~20 GB)
• Label propagation is fast: < 0.1 secs/keyword

Japanese Spaniel
• 3 positive, 3 negative
• Labels from CIFAR set

Airbus, Ostrich, Auto

Summary
• Semi-supervised scheme that can scale to really large problems: linear in # points
• Rather than sub-sampling the data, we take the limit of infinite unlabeled data
• Assumes input data distribution is separable
• Can propagate labels in graph with 80 million nodes in fractions of a second
• Related paper in this NIPS by Nadler, Srebro & Zhou
  – See spotlights on Wednesday

Future Work
• Can potentially use 2D or 3D histograms instead of 1D
  – Requires more data
• Consider diagonal eigenfunctions
• Sharing of labels between classes

Comparison of Approaches
[Figure panels: Data, Exact Eigenvectors, Approximate Eigenvectors (Eigenfunction)]
Eigenvalues (Exact : Approximate):
• 0.0531 : 0.0535
• 0.1920 : 0.1928
• 0.2049 : 0.2068
• 0.2480 : 0.5512
• 0.3580 : 0.7979

Are Dimensions Independent?
• Joint histogram for pairs of dimensions from raw 384-dimensional Gist
• Joint histogram for pairs of dimensions after PCA
• MI is mutual information score. 0 = Independent

Are Dimensions Independent?
• Joint histogram for pairs of dimensions from raw 384-dimensional Gist
• Joint histogram for pairs of dimensions after ICA
• MI is mutual information score.
0 = Independent

Varying # Eigenfunctions

Leveraging Noisy Labels
• Images in dataset have noisy labels
• Noisy label is the keyword used in the Internet search engine
• Can easily be incorporated into SSL scheme
• Give weight 1/10th of hand-labeled example

Effect of Noisy Labels

Complexity Comparison
Key:
• n = # data points (big, > 10^6)
• l = # labeled points (small, < 100)
• m = # landmark points
• d = # input dims (~100)
• k = # eigenvectors (~100)
• b = # histogram bins (~50)

Nystrom:
1. Select m landmark points
2. Get smallest k eigenvectors of m x m system
3. Interpolate n points into k eigenvectors
4. Solve k x k linear system
Polynomial in # landmarks

Eigenfunction:
1. Rotate n points
2. Form d 1-D histograms
3. Solve d linear systems, each b x b
4. k 1-D interpolations of n points
5. Solve k x k linear system
Linear in # data points

Semi-Supervised Learning using Graph Laplacian [Zhu03, Zhou04]
• $G = (V, E)$
• $V$ = data points (n in total)
• $E$ = n x n affinity matrix $W$:
  $$W_{ij} = \exp(-\|x_i - x_j\|^2 / 2\epsilon^2), \qquad D_{ii} = \sum_j W_{ij}$$
• Graph Laplacian:
  $$L = I - D^{-1/2} W D^{-1/2}$$

Rewrite System
• Let $f = U\alpha$
• $U$ = smallest k eigenvectors of $L$
• $\alpha$ = coefficients
• k is a user parameter (typically ~100)
  $$J(\alpha) = \alpha^T \Sigma \alpha + (U\alpha - y)^T \Lambda (U\alpha - y)$$
• Optimal $\alpha$ is now the solution to a k x k system:
  $$(\Sigma + U^T \Lambda U)\alpha = U^T \Lambda y$$

Consider Limit as n → ∞
• Consider $x$ to be drawn from a 2D distribution $p(x)$
• Let $L_p(F)$ be a smoothness operator on $p(x)$, for a function $F(x)$:
  $$L_p(F) = \frac{1}{2} \iint (F(x_1) - F(x_2))^2 \, W(x_1, x_2) \, p(x_1) p(x_2) \, dx_1 dx_2$$
  where $W(x_1, x_2) = \exp(-\|x_1 - x_2\|^2 / 2\epsilon^2)$
• Analyze eigenfunctions of $L_p(F)$

Numerical Approximations to Eigenfunctions in 1D
• Solve for values $g$ of eigenfunction at a set of discrete locations (histogram bin centers)
  – and associated eigenvalues $\sigma$
  – B x B system (# histogram bins = 50)
  $$(\tilde{D} - P\tilde{W}P)\, g = \sigma P \hat{D}\, g$$
• $P$ is $\mathrm{diag}(h(x_1))$
• $\tilde{W}$ = affinity between discrete locations
• $\tilde{D} = \mathrm{diag}(\sum_j P\tilde{W}P)$, $\hat{D} = \mathrm{diag}(\sum_j P\tilde{W})$

Real 1-D Eigenfunctions of PCA'd Gist descriptors
[Figure: eigenfunction index (1 to 256) against input dimension (1 to 64)]
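
The five steps of the Overall Algorithm can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`eigenfunctions_1d`, `approximate_eigenvectors`, `propagate_labels`, `toy_accuracy`), the add-one histogram smoothing, the values of `eps` and `lam`, and the choice to drop the constant eigenfunction (eigenvalue near 0) in each dimension are all choices made here for illustration. The generalized problem $(\tilde{D} - P\tilde{W}P) g = \sigma P\hat{D} g$ is solved by rescaling with the diagonal right-hand side so that a plain symmetric eigensolver can be used.

```python
import numpy as np

def eigenfunctions_1d(x, n_bins=50, eps=0.5, n_keep=3):
    """Approximate eigenfunctions of one 1-D marginal at histogram bin centers."""
    hist, edges = np.histogram(x, bins=n_bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist + 1.0                       # assumption: add-one smoothing keeps density > 0
    p = p / p.sum()
    W = np.exp(-(centers[:, None] - centers[None, :]) ** 2 / (2 * eps ** 2))
    PWP = p[:, None] * W * p[None, :]
    d_tilde = PWP.sum(axis=0)            # column sums of P W P
    d_hat = (p[:, None] * W).sum(axis=0) # column sums of P W
    A = np.diag(d_tilde) - PWP
    b = p * d_hat                        # diagonal of P D_hat
    # A g = sigma diag(b) g  <=>  M v = sigma v with M = b^-1/2 A b^-1/2, g = b^-1/2 v
    M = A / np.sqrt(np.outer(b, b))
    sigma, v = np.linalg.eigh(M)         # eigenvalues in ascending order
    g = v / np.sqrt(b)[:, None]
    # assumption: skip the first (constant) eigenfunction, whose eigenvalue is ~0
    return centers, sigma[1:n_keep + 1], g[:, 1:n_keep + 1]

def approximate_eigenvectors(X, k, n_bins=50, eps=0.5):
    """Steps 1-4: PCA rotation, per-dimension eigenfunctions, ordering, interpolation."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    Z = Xc @ Vt.T                        # data rotated onto principal axes
    candidates = []
    for d in range(Z.shape[1]):
        centers, sigma, g = eigenfunctions_1d(Z[:, d], n_bins, eps, n_keep=k)
        for j in range(len(sigma)):
            candidates.append((sigma[j], d, centers, g[:, j]))
    candidates.sort(key=lambda c: c[0])  # increasing eigenvalue across all dimensions
    chosen = candidates[:k]
    U = np.column_stack([np.interp(Z[:, d], c, g) for _, d, c, g in chosen])
    sigmas = np.array([c[0] for c in chosen])
    return U, sigmas

def propagate_labels(U, sigmas, labeled_idx, y_labeled, lam=100.0):
    """Step 5: solve (Sigma + U^T Lambda U) alpha = U^T Lambda y, return f = U alpha."""
    Ul = U[labeled_idx]                  # only labeled rows have nonzero Lambda_ii
    A = np.diag(sigmas) + lam * Ul.T @ Ul
    b = lam * Ul.T @ y_labeled
    alpha = np.linalg.solve(A, b)
    return U @ alpha

def toy_accuracy(seed=0):
    """Two Gaussian blobs, 3 positive and 3 negative labels; fraction classified correctly."""
    rng = np.random.default_rng(seed)
    n = 1000
    X = np.vstack([rng.normal([-2.0, 0.0], 0.5, size=(n, 2)),
                   rng.normal([2.0, 0.0], 0.5, size=(n, 2))])
    truth = np.concatenate([-np.ones(n), np.ones(n)])
    labeled = np.array([0, 1, 2, n, n + 1, n + 2])
    U, sigmas = approximate_eigenvectors(X, k=3)
    f = propagate_labels(U, sigmas, labeled, truth[labeled])
    return float(np.mean(np.sign(f) == truth))

if __name__ == "__main__":
    print("toy accuracy:", toy_accuracy())
```

Once `U` and `sigmas` are precomputed, each new set of labels only requires the k x k solve in `propagate_labels`, which is the step the slides describe as taking a fraction of a second per keyword.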