
Introduction to Network Mathematics (1)

- Optimization techniques

Yuedong Xu

10/08/2012

Purpose

• Many networking/system problems boil down to optimization problems

– Bandwidth allocation

– ISP traffic engineering

– Route selection

– Cache placement

– Server sleep/wakeup

– Wireless channel assignment

– … and so on (numerous)

Purpose

• Optimization as a tool

– shedding light on how well we can possibly do

– guiding the design of distributed algorithms (rather than pure heuristics)

– providing a bottom-up approach to reverse-engineer existing systems

Outline

• Toy Examples

• Overview

• Convex Optimization

• Linear Programming

• Linear Integer Programming

• Summary

Toys

• Toy 1: Find x to minimize $f(x) := x^2$

– $x^* = 0$ if there is no restriction on x

– $x^* = 2$ if $2 \le x \le 4$

Toys

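• Toy 2: Find the minimum

$$
\begin{aligned}
\text{minimize}\quad & -\sum_{i=1}^{10} \log(x_i + a_i) \\
\text{subject to}\quad & x \succeq 0, \quad \sum_{i=1}^{10} x_i = 1
\end{aligned}
$$

Optimal solution?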
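Toy 2 is the classic water-filling problem: minimize $-\sum_i \log(x_i + a_i)$ subject to $x \succeq 0$, $\sum_i x_i = 1$. Assuming every $a_i > 0$, the KKT conditions give $x_i = \max(0,\, w - a_i)$ for a single "water level" $w$ (the reciprocal of the equality multiplier), chosen so that the $x_i$ sum to 1. The sketch below finds $w$ by bisection; the function name `water_filling` and the values $a_i = 0.1, 0.2, \dots, 1.0$ are made up for illustration.

```python
def water_filling(a, total=1.0, iters=100):
    """Minimize -sum(log(x_i + a_i)) s.t. x >= 0, sum(x) = total (assumes all a_i > 0).

    KKT conditions give x_i = max(0, w - a_i) for a common water level w;
    sum_i max(0, w - a_i) is nondecreasing in w, so w can be found by bisection.
    """
    lo, hi = min(a), min(a) + total       # the sum is 0 at lo and >= total at hi
    for _ in range(iters):
        w = (lo + hi) / 2
        if sum(max(0.0, w - ai) for ai in a) > total:
            hi = w                        # too much water: lower the level
        else:
            lo = w                        # not enough water: raise the level
    w = (lo + hi) / 2
    return [max(0.0, w - ai) for ai in a], w


# Hypothetical data: a_i = 0.1, 0.2, ..., 1.0 (ten variables, as on the slide).
x, w = water_filling([0.1 * i for i in range(1, 11)])
# Only the four smallest a_i get a positive share; the level settles at w = 0.5.
```

Variables with large $a_i$ ("high ground") receive nothing, which is why the optimum is not simply the uniform allocation.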

Toys

• Toy 3: Find the global minimum

(Figure: a non-convex function with a local minimum and a global minimum)
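To see why Toy 3 is hard, here is a minimal sketch of plain gradient descent on a hypothetical non-convex function $f(x) = x^4 - 3x^2 + x$ (not from the slides). Started from different points, the same method settles in different minima, and nothing in the iteration tells you which one is global.

```python
def gradient_descent(df, x0, step=0.01, iters=5000):
    """Plain 1-D gradient descent: x <- x - step * f'(x)."""
    x = x0
    for _ in range(iters):
        x -= step * df(x)
    return x


f = lambda x: x**4 - 3 * x**2 + x       # hypothetical non-convex test function
df = lambda x: 4 * x**3 - 6 * x + 1     # its derivative

x_right = gradient_descent(df, x0=2.0)   # settles in the local minimum near x = 1.13
x_left = gradient_descent(df, x0=-2.0)   # settles in the global minimum near x = -1.30
print(x_right, f(x_right))
print(x_left, f(x_left))
```

Both points satisfy $f'(x) = 0$; only comparing objective values (or convexity, where it holds) separates local from global.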


Overview

Ingredients!

• Objective Function

– A function to be minimized or maximized

• Unknowns or Variables

– Affect value of objective function

• Constraints

– Restrict unknowns to take on certain values but exclude others

Overview

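• Formulation:

$$
\begin{aligned}
\text{minimize}\quad & f_0(x) && \text{(objective function)} \\
\text{subject to}\quad & f_i(x) \le 0, \; i = 1, \dots, m && \text{(inequality constraints)} \\
& h_i(x) = 0, \; i = 1, \dots, p && \text{(equality constraints)}
\end{aligned}
$$

x: decision variables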

Overview

• Optimization tree

Overview

• Our coverage (figure):

– Nonlinear programs

– Convex programs

– Linear programs (polynomial)

– Flow and matching

– Integer programs (NP-hard)


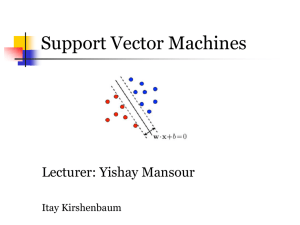

Convex Optimization

• Concepts

– Convex combination: for $x, y \in \mathbb{R}^n$ and $0 \le \lambda \le 1$,

$z = \lambda x + (1 - \lambda) y$

is a convex combination of x, y. It is a strict convex combination of x, y if $\lambda \ne 0, 1$.

Convex Optimization

• Concepts

– Convex set: $S \subseteq \mathbb{R}^n$ is convex if it contains all convex combinations of pairs $x, y \in S$.

(Figure: a convex set and a nonconvex set)

Convex Optimization

• Concepts

– Convex set: more complicated case

The intersection of any number of convex sets is convex.

Convex Optimization

• Concepts

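– Convex function (note: mainland Chinese textbooks use the opposite convention to textbooks abroad!):

Let $S \subseteq \mathbb{R}^n$ be a convex set. A function $c: S \to \mathbb{R}$ is a convex function if, for all $x, y \in S$,

$$c(\lambda x + (1 - \lambda) y) \le \lambda c(x) + (1 - \lambda) c(y), \quad 0 \le \lambda \le 1.$$

(Figure: the chord value $\lambda c(x) + (1 - \lambda) c(y)$ lies above the function value $c(\lambda x + (1 - \lambda) y)$ for every point between x and y.)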

Convex Optimization

• Concepts – convex functions

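• Exponential: $e^{ax}$ is convex on $\mathbb{R}$ for any $a \in \mathbb{R}$

• Powers: $x^a$ is convex on $\mathbb{R}_{++}$ when $a \ge 1$ or $a \le 0$, and concave when $0 \le a \le 1$

• Logarithm: $\log x$ is concave on $\mathbb{R}_{++}$

• Jensen’s inequality:

– if f is convex, then $f(\mathbb{E}[x]) \le \mathbb{E}[f(x)]$

– For twice-differentiable functions, you can also check convexity via the second derivative ($f'' \ge 0$)

Fortunately, many networking problems have convex objectives.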
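The convexity definition can also be probed numerically. The sketch below (the helper `chord_check` is hypothetical) samples random points and weights and tests the inequality $f(\lambda x + (1-\lambda)y) \le \lambda f(x) + (1-\lambda) f(y)$, which is Jensen's inequality for a two-point distribution. Sampling can only refute convexity, never prove it, so a `True` result is evidence rather than a proof.

```python
import math
import random


def chord_check(f, lo, hi, trials=10_000, seed=0):
    """Sample-based test of f(lam*x + (1-lam)*y) <= lam*f(x) + (1-lam)*f(y) on [lo, hi].

    False means a violating (x, y, lam) was found, so f is not convex there;
    True only means no violation turned up among the samples.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x, y, lam = rng.uniform(lo, hi), rng.uniform(lo, hi), rng.random()
        lhs = f(lam * x + (1 - lam) * y)
        rhs = lam * f(x) + (1 - lam) * f(y)
        if lhs > rhs + 1e-9 * (1 + abs(rhs)):  # small tolerance for rounding error
            return False
    return True


print(chord_check(lambda x: math.exp(2 * x), -3, 3))  # e^{ax}: convex on R
print(chord_check(lambda x: x ** 3, 0.1, 5))          # x^a, a >= 1: convex on R++
print(chord_check(lambda x: x ** 0.5, 0.1, 5))        # 0 < a < 1: concave, check fails
print(chord_check(math.log, 0.1, 5))                  # log x: concave, check fails
```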

Convex Optimization

• Concepts

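– Convex Optimization:

$$
\begin{aligned}
\text{minimize}\quad & f_0(x) && (f_0 \text{ convex}) \\
\text{subject to}\quad & f_i(x) \le 0, \; i = 1, \dots, m && (f_i \text{ convex}) \\
& h_i(x) = 0, \; i = 1, \dots, p && (h_i \text{ linear/affine})
\end{aligned}
$$

– A fundamental property: local optimum = global optimum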

Convex Optimization

• Method

– You may have used

• the gradient method

• Newton’s method

to find optima where the constraints are simple explicit ranges (e.g., 0 ≤ x ≤ 10, 0 ≤ y ≤ 20, −∞ < z < ∞, …).

– Today, we discuss more general constrained optimization.
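For constraints that are explicit ranges, one simple extension of the gradient method is projected gradient descent: take a gradient step, then clip each coordinate back into its interval. This is a minimal sketch under that assumption (the function name and the 2-D test problem are hypothetical); it also recovers the Toy 1 answer $x^* = 2$.

```python
def projected_gradient(grad, x0, lower, upper, step=0.1, iters=200):
    """Gradient step, then clip each coordinate back into its range [lower_k, upper_k]."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = [min(max(xk - step * gk, lo), hi)
             for xk, gk, lo, hi in zip(x, g, lower, upper)]
    return x


# Toy 1: minimize x^2 subject to 2 <= x <= 4 (the answer should be x* = 2).
toy1 = projected_gradient(lambda v: [2 * v[0]], x0=[3.0], lower=[2.0], upper=[4.0])

# Hypothetical 2-D example: minimize (x - 3)^2 + (y + 1)^2 over 0 <= x <= 10, 0 <= y <= 20.
grad = lambda v: [2 * (v[0] - 3), 2 * (v[1] + 1)]
x_opt = projected_gradient(grad, x0=[8.0, 5.0], lower=[0.0, 0.0], upper=[10.0, 20.0])
# x_opt is close to [3.0, 0.0]: the bound y >= 0 is active at the optimum.
```

Clipping is only this easy because each constraint involves a single variable; coupled constraints such as x + y = 1 need the Lagrangian machinery that follows.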

Convex Optimization

How to solve a constrained optimization problem?

• Enumeration? Maybe, for a small, finite feasible set

• Use the constraints to reduce the number of variables? Works occasionally

• Lagrange multiplier method: a general method (harder to understand)

Convex Optimization

Example:

Minimize $x^2 + y^2$

subject to $x + y = 1$

Lagrange multiplier method:

• Change the problem to an unconstrained problem:

$L(x, y, p) = x^2 + y^2 + p(1 - x - y)$

• Think of p as a “price” and (1 − x − y) as the amount of “violation” of the constraint

• Minimize L(x, y, p) over all x and y, keeping p fixed

• Obtain x*(p), y*(p)

• Then choose p to make sure the constraint is met

• Magically, x*(p*) and y*(p*) are the solution to the original problem!

Convex Optimization

Example:

Minimize $x^2 + y^2$ subject to $x + y = 1$

Lagrangian:

$L(x, y, p) = x^2 + y^2 + p(1 - x - y)$

• Setting ∂L/∂x and ∂L/∂y to 0, we get $x = y = p/2$

• Since x + y = 1, we get p = 1, so $x^* = y^* = 1/2$

• We get the same solution by substituting y = 1 − x
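The step "choose p to make sure the constraint is met" can also be done iteratively, which is the idea behind the distributed algorithms later in these slides. A minimal sketch, assuming a fixed step size `alpha` (a hypothetical parameter): for the current price, minimize the Lagrangian in closed form ($x = y = p/2$), then raise the price while $x + y < 1$ and lower it while $x + y > 1$.

```python
def lagrange_price_iteration(alpha=0.5, iters=100):
    """Toy problem: minimize x^2 + y^2 subject to x + y = 1.

    For a fixed price p, minimizing L(x, y, p) = x^2 + y^2 + p(1 - x - y)
    gives x = y = p/2; the price then moves in proportion to the violation.
    """
    p = 0.0
    for _ in range(iters):
        x = y = p / 2                   # x*(p), y*(p) from dL/dx = dL/dy = 0
        p += alpha * (1 - x - y)        # adjust the price by the constraint violation
    return x, y, p


x, y, p = lagrange_price_iteration()
print(x, y, p)   # approaches x = y = 1/2 and p = 1
```

With this step size the violation $1 - p$ halves at every iteration, so the price converges geometrically to $p^* = 1$.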

Convex Optimization

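• General case:

minimize $f_0(x)$

subject to $f_i(x) \le 0, \; i = 1, \dots, m$

(Ignore equality constraints for now.)

Optimal value denoted by $f^*$

Lagrangian:

$L(x, p) = f_0(x) + p_1 f_1(x) + \dots + p_m f_m(x)$

Define

$g(p) = \inf_x \big(f_0(x) + p_1 f_1(x) + \dots + p_m f_m(x)\big)$

If $p_i \ge 0$ for all i, then $g(p) \le f^*$: for any feasible x, every term $p_i f_i(x) \le 0$, so $g(p) \le L(x, p) \le f_0(x)$; taking the infimum over feasible x gives $g(p) \le f^*$.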

Convex Optimization

• Revisit the earlier example

$L(x, y, p) = x^2 + y^2 + p(1 - x - y)$

$x^* = y^* = p/2$

$g(p) = p(1 - p/2)$

This is a concave function, maximized at $p^* = 1$ with $g(p^*) = 1/2$.

• We know $f^* = 1/2$, and g(p) is a lower bound on $f^*$ for every value of p (the constraint here is an equality, so p is not restricted in sign); the tightest lower bound occurs at $p = p^*$.
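A quick numerical check of the claims above for the toy problem: evaluate $g(p)$ on a grid of prices (the grid range $[-3, 5]$ is an arbitrary choice), confirm that no value exceeds $f^* = 1/2$, and locate the best bound.

```python
def g(p):
    """Dual function of the toy problem: min over x, y of x^2 + y^2 + p(1 - x - y)."""
    x = y = p / 2                              # unconstrained minimizer for this p
    return x**2 + y**2 + p * (1 - x - y)       # equals p(1 - p/2)


f_star = 0.5                                   # primal optimum, at x = y = 1/2
grid = [k / 100 for k in range(-300, 501)]     # prices p in [-3, 5]
values = [g(p) for p in grid]

# Weak duality: every g(p) is a lower bound on f*.
assert all(v <= f_star + 1e-12 for v in values)

p_best = grid[values.index(max(values))]
print(p_best, max(values))                     # the tightest bound on the grid
```

The maximum sits at $p = 1$ with value $1/2 = f^*$, so for this convex problem the duality gap is zero.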

Convex Optimization

• Duality

• For each p, g(p) gives a lower bound on f*

• We want to find as tight a lower bound as possible:

maximize g(p)

subject to p ≥ 0

a) This is called the (Lagrange) “dual” problem; the original problem is called the “primal” problem

b) Let the optimal value of the dual problem be denoted d*. We always have d* ≤ f* (called “weak duality”)

c) If d* = f*, then we have “strong duality”. The difference (f* − d*) is called the “duality gap”

Convex Optimization

• Price Interpretation

• Solving the constrained problem is equivalent to obeying hard resource limits

• Imagine that the resource limits can be violated: you pay a marginal price per unit amount of violation (or receive a payment per unit if the limit is not reached)

• When the duality gap is nonzero, you benefit from the second scenario, even if the prices are set on the terms least favorable to you

Convex Optimization

• Duality in algorithms

An iterative algorithm produces at iteration j

• a primal feasible $x^{(j)}$

• a dual feasible $p^{(j)}$

with $f_0(x^{(j)}) - g(p^{(j)}) \to 0$ as $j \to \infty$.

The optimal value $f^*$ lies in the interval $[\,g(p^{(j)}),\ f_0(x^{(j)})\,]$.

Convex Optimization

• Complementary Slackness

For the duality gap to be zero (with x* primal optimal and p* dual optimal), we need

$p_i^* f_i(x^*) = 0$ for all i

This means

$p_i^* > 0 \Rightarrow f_i(x^*) = 0$

$f_i(x^*) < 0 \Rightarrow p_i^* = 0$

If the “price” is positive, then the corresponding constraint is limiting.

If a constraint is not active, then its “price” must be zero.

Convex Optimization

• KKT Optimality Conditions

• Satisfy primal and dual constraints

$f_i(x^*) \le 0, \quad p_i^* \ge 0$

• Satisfy complementary slackness

$p_i^* f_i(x^*) = 0$

• Stationarity at the optimum

$\nabla f_0(x^*) + \sum_i p_i^* \nabla f_i(x^*) = 0$

If the primal problem is convex and the KKT conditions are met, then x* and p* are primal and dual optimal, respectively, with zero duality gap.

KKT = Karush-Kuhn-Tucker
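To make the four conditions concrete, the sketch below computes their residuals for a small hypothetical convex problem, minimize $(x_1 - 2)^2 + (x_2 - 1)^2$ subject to $x_1 + x_2 - 2 \le 0$ (not from the slides). A candidate pair is a KKT point exactly when all four residuals are zero.

```python
def kkt_residuals(x, p):
    """KKT residuals for the hypothetical problem
        minimize (x1 - 2)^2 + (x2 - 1)^2   subject to   f1(x) = x1 + x2 - 2 <= 0.
    Returns (primal infeasibility, dual infeasibility,
             complementary-slackness violation, stationarity violation).
    """
    f1 = x[0] + x[1] - 2
    grad_f0 = [2 * (x[0] - 2), 2 * (x[1] - 1)]
    grad_f1 = [1.0, 1.0]
    stationarity = max(abs(g0 + p * g1) for g0, g1 in zip(grad_f0, grad_f1))
    return max(0.0, f1), max(0.0, -p), abs(p * f1), stationarity


# Candidate x* = (1.5, 0.5), p* = 1: every residual is zero, so this is a KKT point
# and, since the problem is convex, it is optimal with zero duality gap.
print(kkt_residuals([1.5, 0.5], 1.0))
# The unconstrained minimizer (2, 1) with p = 0 fails primal feasibility: x1 + x2 = 3 > 2.
print(kkt_residuals([2.0, 1.0], 0.0))
```

The positive price $p^* = 1$ goes with an active constraint ($x_1 + x_2 = 2$), as complementary slackness requires.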

Convex Optimization

• Method

– Take home messages

• Convexity is important (non-convex problems are generally very difficult)

• The primal problem is often not solved directly because of complicated constraints

• The Lagrangian dual approach yields the dual function

• For a convex problem, the KKT conditions guarantee optimality with strong duality (zero duality gap)

Convex Optimization

• Application: TCP Flow Control

– What comes to mind when you think of TCP?

• Socket programming?

• Sliding window?

• AIMD congestion control?

• Retransmission?

• Anything else?

Convex Optimization

• Application: TCP Flow Control

– Our questions:

• TCP seems to keep working as the Internet scales. Why?

• How does TCP allocate bandwidth to flows traversing the same bottlenecks?

• Is TCP optimal in terms of resource allocation?

Convex Optimization

• Application: TCP Flow Control

Network

Links l with capacities $c_l$

Sources i

L(i): links used by source i (routing)

$U_i(x_i)$: utility when the source rate is $x_i$

i) The larger the rate $x_i$, the more happiness ($U_i$ is increasing);

ii) The increment of happiness shrinks as $x_i$ increases ($U_i$ is concave).

Convex Optimization

• Application: TCP Flow Control

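– A simple network (figure): flow $x_1$ traverses both links, flow $x_2$ uses link 1 only, and flow $x_3$ uses link 2 only

$x_1 + x_2 \le c_1$

$x_1 + x_3 \le c_2$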

Convex Optimization

• Application: TCP Flow Control

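– Primal problem

$$\max_{x_i \ge 0} \sum_i U_i(x_i) \quad \text{subject to} \quad y_l \le c_l, \quad l \in L$$

where $y_l = \sum_{i:\, l \in L(i)} x_i$ is the aggregate rate on link l.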

Convex Optimization

• Application: TCP Flow Control

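– Lagrangian dual problem

Lagrangian:

$$L(x, p) = \sum_i U_i(x_i) + \sum_l p_l (c_l - y_l)$$

Dual function (since $\sum_l p_l y_l = \sum_i x_i q_i$ with $q_i = \sum_{l \in L(i)} p_l$, it separates by source):

$$D(p) = \max_{x \ge 0} L(x, p) = \sum_i \max_{x_i \ge 0} \big(U_i(x_i) - x_i q_i\big) + \sum_l p_l c_l$$

Dual problem:

$$\min_{p \ge 0} D(p)$$

You can solve it using the KKT conditions, but that needs the whole information (all utilities, routes, and capacities)!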

Convex Optimization

• Application: TCP Flow Control

– Question:

Is distributed flow control possible without knowledge of the network?

Convex Optimization

• Application: TCP Flow Control

– Primal-dual approach:

• Source updates its sending rate $x_i$

• Link generates a congestion signal

– Source’s action:

• If congestion is severe, then reduce $x_i$

• If there is no congestion, then increase $x_i$

– Congestion measures:

• Packet loss (TCP Reno)

• RTT (TCP Vegas)

Convex Optimization

• Application: TCP Flow Control

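– Gradient-based primal-dual algorithm:

Source updates its rate given the congestion signal:

$$\text{source:}\quad x_i(t+1) = (U_i')^{-1}\big(q_i(t)\big), \qquad q_i(t) = \sum_{l \in L(i)} p_l(t)$$

($q_i$: total price of a flow along its route)

Link updates its congestion signal:

$$\text{link:}\quad p_l(t+1) = \big[\,p_l(t) + \gamma_l \big(y_l(t) - c_l\big)\big]^+$$

($\gamma_l > 0$: step size; $[z]^+ = \max(z, 0)$)

Price of congestion at a link: packet drop probability, etc.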
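The source and link updates can be simulated directly on the three-flow network from earlier. This sketch assumes log utilities $U_i(x) = \log x$ (so $(U_i')^{-1}(q) = 1/q$), unit capacities, a common step size $\gamma = 0.1$, and a hypothetical rate cap `x_max`; none of these values come from the slides. Each source sees only its path price and each link sees only its own load.

```python
def primal_dual_flow_control(routes, capacity, gamma=0.1, iters=2000, x_max=10.0):
    """Price-based flow control with log utilities U_i(x) = log x.

    routes[i] lists the links used by source i; capacity[l] is c_l.
    """
    prices = [1.0] * len(capacity)          # p_l(0): initial link prices
    rates = [0.0] * len(routes)
    for _ in range(iters):
        # Source update: x_i = (U_i')^{-1}(q_i) = 1/q_i for log utility, capped at x_max.
        for i, links in enumerate(routes):
            q = sum(prices[l] for l in links)
            rates[i] = x_max if q <= 0 else min(x_max, 1.0 / q)
        # Link update: p_l <- [p_l + gamma * (y_l - c_l)]^+
        for l, c in enumerate(capacity):
            y = sum(rates[i] for i, links in enumerate(routes) if l in links)
            prices[l] = max(0.0, prices[l] + gamma * (y - c))
    return rates, prices


# x1 uses links 1 and 2, x2 uses link 1, x3 uses link 2 (links indexed from 0 here).
routes = [[0, 1], [0], [1]]
rates, prices = primal_dual_flow_control(routes, capacity=[1.0, 1.0])
print(rates, prices)
```

With these assumptions the rates should approach $x_1 = 1/3$, $x_2 = x_3 = 2/3$ with both link prices near $3/2$: the long flow pays two prices and gets less bandwidth. A larger $\gamma$ speeds things up but can make the prices oscillate.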

Convex Optimization

• Application: TCP Flow Control

– Relation to real TCP

Convex Optimization

• Application: Fairness concerns

– Many concepts regarding fairness

• Max-min, proportional fairness, etc.

• (add some examples to explain the concepts)

Convex Optimization

• Application: Fairness concerns

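– How does fairness relate to optimization?

Reflected by the utility function (α-fair utility):

$$
U_s(x) =
\begin{cases}
w_s \dfrac{x^{1-\alpha}}{1-\alpha}, & \text{if } \alpha \ne 1, \\[4pt]
w_s \log x, & \text{otherwise}
\end{cases}
$$

– In particular, α = 1 gives (weighted) proportional fairness, and max-min fairness is reached as α → ∞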
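As an example of how α shapes the allocation, take the three-flow network with $c_1 = c_2 = 1$ and equal weights. Both links are saturated at the optimum, so by symmetry $x_2 = x_3 = 1 - x_1$, and a short calculation of the first-order condition gives $x_1 = 1/(1 + 2^{1/\alpha})$. The sketch below (helper names are hypothetical) maximizes the α-fair sum numerically by ternary search and prints the split for a few α values.

```python
import math


def alpha_utility(x, alpha, w=1.0):
    """Weighted alpha-fair utility: w x^(1-alpha)/(1-alpha), or w log(x) if alpha == 1."""
    if alpha == 1:
        return w * math.log(x)
    return w * x ** (1 - alpha) / (1 - alpha)


def alpha_fair_toy(alpha, tol=1e-12):
    """Alpha-fair rates for the three-flow network with c1 = c2 = 1 and equal weights.

    Assumes both links are saturated, so x2 = x3 = 1 - x1, and maximizes the concave
    function U(x1) + 2 U(1 - x1) over x1 in (0, 1) by ternary search.
    """
    def total(x1):
        return alpha_utility(x1, alpha) + 2 * alpha_utility(1 - x1, alpha)

    lo, hi = 1e-9, 1 - 1e-9
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if total(m1) < total(m2):
            lo = m1            # the maximum lies to the right of m1
        else:
            hi = m2            # the maximum lies to the left of m2
    x1 = (lo + hi) / 2
    return x1, 1 - x1, 1 - x1


for alpha in (0.5, 1, 2, 10):
    x1, x2, x3 = alpha_fair_toy(alpha)
    print(f"alpha={alpha}: x1={x1:.4f}, x2=x3={x2:.4f}")
```

At α = 1 the long flow gets 1/3 (proportional fairness); as α grows, $x_1$ approaches 1/2, the max-min fair split; as α shrinks toward 0, the allocation drifts toward maximizing total throughput ($x_1 \to 0$). Very large α can overflow the power terms, so this sketch is only meant for moderate values.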

Convex Optimization

• Application: TCP Flow Control

Take home messages:

– TCP is solving a distributed optimization problem!

– The primal-dual algorithm converges (with suitably small step sizes)!

– Packet drops and RTT carry the “price of congestion”!

– Fairness is reflected by the utility function


Linear Programming

• A special case of convex programming

• Goal: maximize or minimize a linear function on a domain defined by linear inequalities and equations

• Special properties

Linear Programming

• Formulation

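– General form

$$
\begin{aligned}
\text{max or min}\quad & c_1 x_1 + c_2 x_2 + \dots + c_n x_n \\
\text{subject to}\quad & a_{11} x_1 + a_{12} x_2 + \dots + a_{1n} x_n \;(\le, =, \ge)\; b_1 \\
& \qquad \vdots \\
& a_{m1} x_1 + a_{m2} x_2 + \dots + a_{mn} x_n \;(\le, =, \ge)\; b_m
\end{aligned}
$$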

Linear Programming

• Formulation

– General form

• A linear objective function: $c_1 x_1 + c_2 x_2 + \dots + c_n x_n$, to be maximized or minimized

• A set of linear constraints $a_{i1} x_1 + a_{i2} x_2 + \dots + a_{in} x_n \;(\le, =, \ge)\; b_i$, $i = 1, \dots, m$: each is a linear inequality or a linear equation

Linear Programming

• Formulation

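– Canonical form (“greater than” constraints only, non-negative variables)

$$
\begin{aligned}
\text{min}\quad & c_1 x_1 + c_2 x_2 + \dots + c_n x_n \\
\text{subject to}\quad & a_{11} x_1 + a_{12} x_2 + \dots + a_{1n} x_n \ge b_1 \\
& a_{21} x_1 + a_{22} x_2 + \dots + a_{2n} x_n \ge b_2 \\
& \qquad \vdots \\
& a_{m1} x_1 + a_{m2} x_2 + \dots + a_{mn} x_n \ge b_m \\
& x_1, x_2, \dots, x_n \ge 0
\end{aligned}
$$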

Linear Programming

• Example

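$$
\begin{aligned}
\text{maximize}\quad & 3x + 2y \\
\text{subject to}\quad & -x + 3y \le 12 \\
& x + y \le 8 \\
& 2x - y \le 10 \\
& x \ge 0, \quad y \ge 0
\end{aligned}
$$

(Figure: the feasible region in the (x, y) plane, bounded by the axes and the three constraint lines)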

Linear Programming

• Example

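$$
\begin{aligned}
\text{maximize}\quad & 3x + 2y \\
\text{subject to}\quad & -x + 3y \le 12 \\
& x + y \le 8 \\
& 2x - y \le 10 \\
& x \ge 0, \quad y \ge 0
\end{aligned}
$$

(Figure: the same feasible region overlaid with the objective lines 3x + 2y = 6, 12, 15, 19 and 22; the line 3x + 2y = 22 touches the region only at the corner (6, 2))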

Linear Programming

• Example

– Observations:

• Optimum is at the corner!

– Question:

• When can the optimum be a point not at a corner?

• How do we find the optimum in a more general LP problem?

(Figure: the feasible region)
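The "optimum is at a corner" observation suggests a brute-force method for two variables: intersect every pair of constraint lines, keep the feasible intersection points (the corners), and pick the best. This sketch reads the example's constraints as $-x + 3y \le 12$, $x + y \le 8$, $2x - y \le 10$, $x, y \ge 0$; the signs are reconstructed from the slide figure, so treat them as an assumption. The helper `best_vertex` is hypothetical.

```python
from itertools import combinations


def best_vertex(c, A, b, eps=1e-9):
    """Maximize c.x over {x in R^2 : A x <= b} by enumerating corner points.

    Assumes the feasible region is nonempty and bounded, as in the example.
    """
    best = None
    for (a1, b1), (a2, b2) in combinations(zip(A, b), 2):
        det = a1[0] * a2[1] - a1[1] * a2[0]
        if abs(det) < eps:                  # parallel lines: no unique intersection
            continue
        x = (b1 * a2[1] - a1[1] * b2) / det  # Cramer's rule for the 2x2 system
        y = (a1[0] * b2 - b1 * a2[0]) / det
        if all(ai[0] * x + ai[1] * y <= bi + eps for ai, bi in zip(A, b)):
            value = c[0] * x + c[1] * y
            if best is None or value > best[0]:
                best = (value, (x, y))
    return best


# Rows of A x <= b; x >= 0 and y >= 0 are written as -x <= 0 and -y <= 0.
A = [(-1, 3), (1, 1), (2, -1), (-1, 0), (0, -1)]
b = [12, 8, 10, 0, 0]
value, point = best_vertex((3, 2), A, b)
print(value, point)   # the best corner under the assumed constraints
```

The feasible corners here are (0, 0), (5, 0), (6, 2), (3, 5) and (0, 4). Enumerating all pairs grows combinatorially with the number of constraints, which is why the simplex method instead walks from corner to adjacent corner.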

Linear Programming

(Dantzig 1951) Simplex method

• Developed for the US Air Force

• Very efficient in practice

• Exponential time in the worst case

(Khachiyan 1979) Ellipsoid method

• Not efficient in practice

• Polynomial time in the worst case

Linear Programming

• Simplex method

– Iterative method to find optimum

– Finds the optimum by moving from a corner of the convex polytope to an adjacent corner, never worsening the cost

– Each corner corresponds to a basic feasible solution

– Local optimum = global optimum (convexity)
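Below is a minimal tableau version of the simplex method, written for the case maximize $c^T x$ subject to $Ax \le b$, $x \ge 0$ with $b \ge 0$, so the origin is a feasible starting corner. It uses Bland's lowest-index rule to avoid cycling; production solvers use far more careful pivoting and numerics. The example data reuse the LP example with the reconstructed signs $-x + 3y \le 12$, $x + y \le 8$, $2x - y \le 10$, which are an assumption.

```python
def simplex_max(c, A, b, eps=1e-12):
    """Tableau simplex for: maximize c.x subject to A x <= b, x >= 0.

    Assumes b >= 0, so the slack basis (x = 0) is a feasible starting corner.
    Bland's rule (lowest index) picks entering/leaving variables to avoid cycling.
    """
    m, n = len(A), len(c)
    # Constraint rows: [A | I | b]; the last row holds reduced costs [-c | 0 | 0].
    T = [[float(v) for v in A[i]] + [1.0 if j == i else 0.0 for j in range(m)] + [float(b[i])]
         for i in range(m)]
    T.append([-float(cj) for cj in c] + [0.0] * m + [0.0])
    basis = [n + i for i in range(m)]           # slack variables start in the basis
    while True:
        # Entering variable: first column with a negative reduced cost.
        enter = next((j for j in range(n + m) if T[-1][j] < -eps), None)
        if enter is None:
            break                               # no improving edge: current corner is optimal
        # Ratio test chooses the leaving row; ties go to the smallest basis index.
        ratios = [(T[i][-1] / T[i][enter], basis[i], i)
                  for i in range(m) if T[i][enter] > eps]
        if not ratios:
            raise ValueError("LP is unbounded")
        _, _, row = min(ratios)
        # Pivot: move along an edge to the adjacent corner.
        piv = T[row][enter]
        T[row] = [v / piv for v in T[row]]
        for i in range(m + 1):
            if i != row and abs(T[i][enter]) > eps:
                factor = T[i][enter]
                T[i] = [vi - factor * vr for vi, vr in zip(T[i], T[row])]
        basis[row] = enter
    x = [0.0] * n
    for i, var in enumerate(basis):
        if var < n:
            x[var] = T[i][-1]
    return T[-1][-1], x


value, x = simplex_max([3, 2], [[-1, 3], [1, 1], [2, -1]], [12, 8, 10])
print(value, x)
```

On this data the method visits (0, 0), then (5, 0), then stops at (6, 2) with objective 22, each step moving along an edge of the polytope.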

Linear Programming

• Application

– Max flow problem
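One standard way to write max flow as an LP, for a directed graph $G = (V, E)$ with source $s$, sink $t$, link capacities $c_{uv}$ and flow variables $f_{uv}$ (this notation is assumed here, not taken from the slides): maximize the net flow out of $s$, subject to capacity limits and flow conservation at every other node.

$$
\begin{aligned}
\text{maximize}\quad & \sum_{(s,v) \in E} f_{sv} - \sum_{(v,s) \in E} f_{vs} \\
\text{subject to}\quad & 0 \le f_{uv} \le c_{uv}, && (u, v) \in E, \\
& \sum_{(u,v) \in E} f_{uv} = \sum_{(v,w) \in E} f_{vw}, && v \in V \setminus \{s, t\}.
\end{aligned}
$$

When all capacities are integers, this LP has an integral optimal solution, which is one reason flow problems sit on the tractable side of the coverage chart.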


Linear Integer Programming

• Linear programming is powerful in many areas

• But ………

Linear Integer Programming

• Decision variables are integers

– Building a link between two nodes, 0 or 1?

– Selecting a route, 0 or 1?

– Assigning servers in a location

– Caching a movie, 0 or 1?

– Assigning a wireless channel, 0 or 1?

– and so on……

No fractional solutions!

Linear Integer Programming

• Graphical interpretation

Only discrete values allowed (figure: the integer points inside the feasible region)

Linear Integer Programming

• Method

– Enumeration: tree search, dynamic programming, etc.

(Figure: an enumeration tree that branches on x1 ∈ {0, 1, 2} and, under each branch, on x2 ∈ {0, 1, 2})

• Guaranteed “feasible” solution

• Complexity grows exponentially

Linear Integer Programming

• Method

– Linear relaxation plus rounding

a) Let the variables be continuous

b) Solve the LP efficiently

c) Round the solution

(Figure: the LP solution and a nearby integer solution in the (x1, x2) plane, with objective direction $-c^T$)

No guarantee on the gap to optimality!

Linear Integer Programming

• Combined Method

– Branch and bound algorithm

Step 1: Solve LP relaxation problem

Step 2:

Linear Integer Programming

• Example – Knapsack problem

Which boxes should be chosen to maximize the amount of money while keeping the overall weight at most 15 kg?

Linear Integer Programming

• Example – Knapsack problem

Objective function

Maximize $4x_1 + 2x_2 + 10x_3 + 2x_4 + 1x_5$

Unknowns or variables

$x_i$'s, $i = 1, \dots, 5$

Constraints

Subject to $12x_1 + 1x_2 + 4x_3 + 2x_4 + 1x_5 \le 15$,

$x_i \in \{0, 1\}$, $i = 1, \dots, 5$

Linear Integer Programming

• Example – Knapsack problem

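$$
\begin{aligned}
\text{maximize}\quad & 4x_1 + 2x_2 + 10x_3 + 2x_4 + 1x_5 \\
\text{subject to}\quad & 12x_1 + 1x_2 + 4x_3 + 2x_4 + 1x_5 \le 15, \\
& x_i \in \{0, 1\}, \; i = 1, \dots, 5
\end{aligned}
$$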
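The knapsack instance is small enough to compare the methods from the previous slides. The sketch below enumerates all $2^5$ choices, computes the LP relaxation bound (for a single knapsack constraint, filling greedily by value/weight ratio solves the relaxation exactly), and runs one common branch-and-bound variant: branch on variables in index order and prune any node whose LP bound cannot beat the best integer solution so far. The branching order and all helper names are assumptions made for illustration.

```python
from itertools import product

values = [4, 2, 10, 2, 1]     # dollars, for x1..x5
weights = [12, 1, 4, 2, 1]    # kg, for x1..x5
capacity = 15


def brute_force():
    """Exhaustive enumeration of all 2^5 binary vectors (exponential in general)."""
    best = (-1, None)
    for x in product((0, 1), repeat=len(values)):
        w = sum(wi * xi for wi, xi in zip(weights, x))
        v = sum(vi * xi for vi, xi in zip(values, x))
        if w <= capacity and v > best[0]:
            best = (v, x)
    return best


def lp_bound(fixed):
    """LP relaxation value (0 <= x_i <= 1) with the variables in `fixed` pinned to 0/1."""
    room = capacity - sum(weights[i] for i, xi in fixed.items() if xi == 1)
    if room < 0:
        return float("-inf")                   # the fixed choices are already infeasible
    total = sum(values[i] for i, xi in fixed.items() if xi == 1)
    free = sorted((i for i in range(len(values)) if i not in fixed),
                  key=lambda i: values[i] / weights[i], reverse=True)
    for i in free:                             # greedy fill by value per kg
        take = min(1.0, room / weights[i])
        total += take * values[i]
        room -= take * weights[i]
        if room <= 0:
            break
    return total


def branch_and_bound():
    """Depth-first branch and bound over x_i in {1, 0}, pruned by the LP bound."""
    best = [-1, None]

    def visit(fixed):
        if lp_bound(fixed) <= best[0]:
            return                             # prune: cannot beat the incumbent
        if len(fixed) == len(values):          # leaf: all variables fixed and feasible
            best[0], best[1] = lp_bound(fixed), tuple(fixed[i] for i in range(len(values)))
            return
        i = len(fixed)                         # branch on the next unfixed variable
        for choice in (1, 0):
            visit({**fixed, i: choice})

    visit({})
    return best[0], best[1]


print(brute_force())          # exhaustive search
print(lp_bound({}))           # LP relaxation bound at the root
print(branch_and_bound())     # same optimum, fewer nodes explored
```

The root LP bound is 52/3 ≈ 17.33 with $x_1 = 7/12$ fractional; rounding that fractional variable down happens to give the optimum (boxes 2 to 5, $15) here, but as the relaxation slide warns, rounding carries no such guarantee in general.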


Summary

Our course covers

• a macroscopic view of optimization in networking

• ways of solving optimization problems

• applications to networking research

Summary

Keywords

• Convex optimization

– Lagrangian dual problem, KKT

• Linear programming

– Simplex, Interior-point

• Integer linear programming

– Dynamic programming, rounding, or branch and bound

Summary

Complexity (“solve” means solving optimum)

• Linear programming

– [P], fast to solve

• Nonlinear programming

– Convex: [P], easy to solve

– Non-convex: usually [NP-hard], difficult to solve

• (Mixed) Integer linear programming

– Usually [NP-hard], difficult to solve

Summary

Thanks!

Backup slides

Convex Optimization

• Concepts

– Convex function: more examples (see Boyd’s book)

Fortunately, many networking problems have convex objectives.

Convex Optimization

• Example – waterfilling problem

To Tom: you can write the Lagrangian dual function on the whiteboard.

Convex Optimization

• Method

– Roadmap

• Treat the original optimization problem as the primal problem

• Transform the primal problem into the dual problem

• Solve the dual problem and validate optimality

Convex Optimization

• Method

– Lagrangian dual function

Convex Optimization

• Method

– For given (λ, ν), solving the dual problem

Convex Optimization

• Method

– Comparison

What shall we do next?

Primal:

min $f_0(x)$

s.t. $f_i(x) \le 0, \; i = 1, \dots, m$

$h_i(x) = 0, \; i = 1, \dots, p$

Dual:

$\min_x L(x, \lambda, \nu)$

If λ ≥ 0, the dual minimum is a lower bound on the primal minimum

Convex Optimization

• Method

– The Lagrangian dual problem

Much easier after getting rid of ugly constraints!

Convex Optimization

• Method

– Question: are these two problems the same?

Convex Optimization

• Method

– Karush-Kuhn-Tucker (KKT) conditions

For the convex problem, satisfying KKT means strong duality!