Spatial Hearing


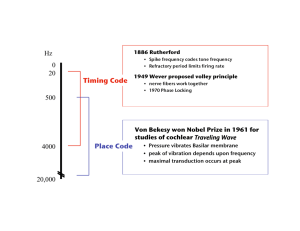

Localising Sounds in Space

MSc Neuroscience. Prof. Jan Schnupp, jan.schnupp@dpag.ox.ac.uk

Objectives of This Lecture

- Acoustic Cues to Sound Source Position
  - Cues to Direction: Interaural Level Cues, Interaural Time Cues, Spectral Cues
  - Cues to Distance
- Encoding of Spatial Cues in the Brainstem
  - Divisions of the Cochlear Nucleus
  - Properties of the Superior Olivary Nuclei
- Representation of Auditory Space in Midbrain and Cortex
  - The “auditory space map” in Superior Colliculus
  - The role of Primary Auditory Cortex (A1)
  - “Where” and “What” streams in auditory belt areas?
  - Distributed, “panoramic” spike pattern codes?

Part 1: Acoustic Cues

Interaural Time Difference (ITD) Cues

ITDs are powerful cues to sound source direction, but they are ambiguous: all source positions lying on a “cone of confusion” around the interaural axis produce the same ITD.

Interaural Level Cues (ILDs)

[Figure: ILD at 700 Hz; ILD at 11000 Hz.]

Unlike ITDs, ILDs are highly frequency dependent. At higher sound frequencies ILDs tend to become larger and more complex, and hence potentially more informative.

Binaural Cues in the Barn Owl

Barn owls have highly asymmetric outer ears, with one ear pointing up and the other down. Consequently, at high frequencies, barn owl ILDs vary with elevation rather than with azimuth (D). ITD and ILD cues together therefore form a grid specifying azimuth and elevation, respectively.

Spectral (Monaural) Cues

Spectral Cues in the Cat

For frontal sound source positions, the cat outer ear produces a “mid-frequency (first) notch” near 10 kHz (A). The precise notch frequency varies systematically with both azimuth and elevation. Thus, the first-notch isofrequency contours for both ears together form a fairly regular grid across the cat’s frontal hemifield (B). From Rice et al. (1992).

Adapting to Changes in Spectral Cues

Hofman et al. made human volunteers localize sounds in the dark, then fitted plastic molds into the concha to change its shape. This disrupted spectral cues and led to poor localization, particularly in elevation.
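A toy sketch may help show why reshaping the concha disrupts elevation judgements. For illustration only, it assumes that elevation is read out from the first-notch frequency through a learned calibration table, with piecewise-linear interpolation between calibrated points. The ear functions `natural_ear` and `molded_ear` and all of their numbers are hypothetical, not measurements from Rice et al. or Hofman et al. When the mold shifts the notch, the old table reports the wrong elevation. Rebuilding the table from the new ear restores accuracy.

```python
from bisect import bisect_left


def make_decoder(pairs):
    """Build an elevation decoder from (notch_khz, elevation_deg) calibration
    pairs, interpolating linearly between calibrated points and clamping
    outside the calibrated range."""
    pairs = sorted(pairs)
    freqs = [f for f, _ in pairs]
    elevs = [e for _, e in pairs]

    def decode(notch_khz):
        if notch_khz <= freqs[0]:
            return elevs[0]
        if notch_khz >= freqs[-1]:
            return elevs[-1]
        i = bisect_left(freqs, notch_khz)  # first calibrated frequency >= notch
        f0, f1 = freqs[i - 1], freqs[i]
        e0, e1 = elevs[i - 1], elevs[i]
        return e0 + (e1 - e0) * (notch_khz - f0) / (f1 - f0)

    return decode


# HYPOTHETICAL ears: first-notch frequency (kHz) as a function of elevation (deg).
def natural_ear(elev_deg):
    return 11.0 + elev_deg / 10.0  # 8 kHz at -30 deg, 14 kHz at +30 deg


def molded_ear(elev_deg):
    return 9.0 + elev_deg / 20.0  # mold lowers the notch and compresses its range


ELEVATIONS = range(-30, 31, 10)

# Calibration learned with the natural ear, and re-learned with the mold in place.
old_decoder = make_decoder([(natural_ear(e), e) for e in ELEVATIONS])
new_decoder = make_decoder([(molded_ear(e), e) for e in ELEVATIONS])

if __name__ == "__main__":
    for true_elev in (-30, 0, 30):
        notch = molded_ear(true_elev)
        print(true_elev, old_decoder(notch), new_decoder(notch))
```

With the old table, a source at +30 degrees is decoded at about -5 degrees once the mold is in place. This is the kind of elevation error the mold experiment describes.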
Over a prolonged period of wearing the molds (up to 3 weeks), localization accuracy improved.

Part 2: Brainstem Processing

Phase Locking

Auditory nerve fibers (ANFs) are most likely to fire action potentials “at the crest” of the sound wave. This temporal bias is known as “phase locking”. Phase locking is crucial to ITD processing, since it provides the necessary precise temporal information. Mammalian ANFs cannot phase lock to frequencies above about 3-4 kHz.

https://mustelid.physiol.ox.ac.uk/drupal/?q=ear/phase_locking

Evans (1975)

Preservation of Time Cues in AVCN

[Figure: VIII nerve fiber, endbulb of Held and spherical bushy cell.]

Auditory nerve fibers connect to spherical and globular bushy cells in the anteroventral cochlear nucleus (AVCN) via large, fast and secure synapses known as “endbulbs of Held”. Phase locking in bushy cells is even more precise than in the afferent nerve fibers. Bushy cells project to the superior olivary complex.

Extraction of Spectral Cues in DCN

[Figure: cochlear nucleus cell types: bushy, octopus, multipolar (stellate) and pyramidal.]

“Type IV” neurons in the dorsal cochlear nucleus often have inhibitory frequency response areas with excitatory sidebands. This makes them sensitive to “spectral notches” like those seen in spectral localisation cues.

Superior Olivary Nuclei: Binaural Convergence

- Medial superior olive: excitatory input from each side (EE)
- Lateral superior olive: excitatory input from the ipsilateral side, inhibitory input from the contralateral side (EI)

Processing of Interaural Level Differences

[Figure: lateral superior olive; sound on the ipsilateral side (I>C) versus the contralateral side (C>I); axis: interaural intensity difference.]

Processing of Interaural Time Differences

[Figure: medial superior olive; sound on the ipsilateral versus the contralateral side; axis: interaural time difference.]

How Does the MSO Detect Interaural Time Differences?

[Figure: inputs from the contralateral and ipsilateral AVCN converging on the MSO.]

Jeffress Delay Line and Coincidence Detector Model

MSO neurons are thought to fire maximally only if they receive simultaneous input from both ears.
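The coincidence-detection idea can be sketched in a few lines. This is a toy model, not a description of real MSO physiology.

- It converts azimuth into an ITD using Woodworth's spherical-head approximation, ITD = (a/c)(θ + sin θ). It assumes a head radius of 8.75 cm and a speed of sound of 343 m/s.
- It feeds the same irregular spike train to both ears, offset by that ITD.
- It lets an array of detectors count coincidences. Each detector adds a different internal delay to the right-ear input.

The detector whose internal delay cancels the ITD fires most. Because the spherical-head ITD is identical for azimuths θ and 180 - θ, the sketch also reproduces the front-back ambiguity of ITD cues.

```python
import bisect
import math
import random

HEAD_RADIUS_M = 0.0875      # assumed adult human head radius
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at about 20 degrees C


def woodworth_itd(azimuth_deg):
    """ITD in seconds (left-ear minus right-ear arrival time) for a spherical
    head; positive azimuth = source on the right. Rear azimuths are folded
    onto the front, so theta and 180 - theta give the same ITD (front-back
    confusion)."""
    theta = math.radians(azimuth_deg)
    if abs(azimuth_deg) > 90:
        theta = math.copysign(math.pi, theta) - theta
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def count_coincidences(left, right_sorted, delay, window):
    """Count left-ear spikes that coincide (within +/- window seconds) with a
    right-ear spike after the right-ear input is delayed by `delay` seconds."""
    count = 0
    for t in left:
        target = t - delay  # right-ear spike time that would coincide with t
        i = bisect.bisect_left(right_sorted, target - window)
        if i < len(right_sorted) and right_sorted[i] <= target + window:
            count += 1
    return count


def jeffress_estimate(left, right, delays, window):
    """Return the internal delay of the most active coincidence detector,
    together with the coincidence count of every detector."""
    right_sorted = sorted(right)
    counts = [count_coincidences(left, right_sorted, d, window) for d in delays]
    best = max(range(len(delays)), key=counts.__getitem__)
    return delays[best], counts


if __name__ == "__main__":
    rng = random.Random(0)
    itd = woodworth_itd(30.0)
    # Irregular (broadband-like) spike times at the right ear; the left ear
    # receives the same spikes, later by the ITD.
    right = sorted(rng.uniform(0.0, 1.0) for _ in range(300))
    left = [t + itd for t in right]
    delays = [k * 10e-6 for k in range(-70, 71)]  # -700 to +700 us, 10 us steps
    best, _ = jeffress_estimate(left, right, delays, window=4e-6)
    print(f"true ITD {itd * 1e6:.0f} us, best internal delay {best * 1e6:.0f} us")
```

With these assumed head dimensions, the ITD slope near the midline is 2a/c, about 9 µs per degree. Resolving ITDs of 10-30 µs therefore corresponds to distinguishing directions roughly 1 to 3.5 degrees apart near straight ahead. The ±4 µs coincidence window is an arbitrary choice. It is kept narrower than half the 10 µs delay spacing so that a single detector wins.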
If the input from one ear is delayed by some amount (e.g. because the afferent axons are longer or slower), then the MSO neuron will fire maximally only if an interaural difference in arrival time at the ears exactly compensates for the transmission delay. In this way MSO neurons become tuned to characteristic interaural delays. The delay tuning must be extremely sharp: ITDs of only 0.01-0.03 ms (10-30 µs) must be resolved to account for sound localisation performance.

https://mustelid.physiol.ox.ac.uk/drupal/?q=topics/jeffress-model-animation

The Calyx of Held

MNTB relay neurons receive their input via very large calyx of Held synapses. Such secure synapses would not be needed if the MNTB fed only into the “ILD pathway” in the LSO. The MNTB also provides precisely timed inhibition to the MSO.

Inhibition in the MSO

From Brand et al., Nature 2002.

For many MSO neurons, the best ITD lies outside the physiological range. The code for ITD set up in the MSO may be more like a rate code than a time code. Blocking glycinergic inhibition (from the MNTB) reduces the amount of spike rate modulation seen over physiological ITD ranges.

The Superior Olivary Nuclei: a Summary

Most neurons in the LSO receive inhibitory input from the contralateral ear and excitatory input from the ipsilateral ear (IE). Consequently they are sensitive to ILDs, responding best to sounds that are louder in the ipsilateral ear. Neurons in the MSO receive direct excitatory input from both ears and fire strongly only when the inputs are temporally coincident. This makes them sensitive to ITDs.

[Figure: circuit diagram (excitatory and inhibitory connections) linking the cochlear nuclei (CN), MNTB, LSO, MSO and inferior colliculi (IC) on either side of the midline.]

Part 3: Midbrain and Cortex

The “Auditory Space Map” in the Superior Colliculus

The SC is involved in directing orienting reflexes and gaze shifts. Acoustically responsive neurons in rostral SC tend to be tuned to frontal sound source directions, while caudal SC neurons prefer contralateral directions.
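A topographic map implies a place code, in which sound direction is signalled by the position of the activity peak within the SC. The toy sketch below illustrates this with a hypothetical row of neurons along the rostrocaudal axis. Their preferred azimuths run from 0 degrees (rostral, frontal) to 90 degrees (caudal, contralateral) in 10-degree steps, and each neuron has Gaussian tuning with an assumed 20-degree width. The readout simply reports the preferred azimuth of the most active neuron. None of these numbers comes from recordings.

```python
import math

# HYPOTHETICAL map: preferred contralateral azimuths (deg), ordered rostral -> caudal.
PREFERRED_AZIMUTHS = list(range(0, 91, 10))
TUNING_WIDTH_DEG = 20.0  # assumed Gaussian tuning width (standard deviation)


def map_activity(source_azimuth_deg):
    """Firing rate (arbitrary units, peak 1.0) of each neuron along the
    rostrocaudal axis for a sound at the given azimuth."""
    return [
        math.exp(-0.5 * ((source_azimuth_deg - pref) / TUNING_WIDTH_DEG) ** 2)
        for pref in PREFERRED_AZIMUTHS
    ]


def read_out(activity):
    """Place-code readout: the preferred azimuth at the activity peak."""
    peak = max(range(len(activity)), key=activity.__getitem__)
    return PREFERRED_AZIMUTHS[peak]


for azimuth in (0, 40, 80):
    rates = map_activity(azimuth)
    # decoded azimuth, and position of the peak along the rostrocaudal row
    print(azimuth, read_out(rates), rates.index(max(rates)))
```

Moving the sound from frontal to lateral positions moves the activity peak from the rostral to the caudal end of the row. This is the sense in which such a structure holds a map of auditory space.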
Along the mediolateral axis, lateral SC neurons prefer low and medial SC neurons prefer high sound source elevations.

Eye Position Effects in Monkey

Sparks, Physiol Rev 1986

Possible Explanations for Sparks’ Data

Underlying spatial receptive fields might shift left or right with changes in gaze direction, or they might shift up or down.

Creating Virtual Acoustic Space (VAS)

[Figure: probe microphones; VAS response field of a CNS neuron, plotted as elevation against azimuth in degrees.]

Microelectrode Recordings

Passive Eye Displacement Effects in Superior Colliculus

Zella, Brugge & Schnupp, Nat Neurosci 2001

SC auditory receptive fields were mapped with virtual acoustic space in barbiturate-anaesthetized cats. RF mapping was repeated after the eye had been displaced by pulling on a suture running through the sclera.

Lesion Studies Suggest Important Role for A1

Jenkins & Merzenich, J. Neurophysiol, 1984

A1 Virtual Acoustic Space (VAS) Receptive Fields

[Figure: VAS receptive fields of example A1 neurons (panels A-I), each labelled with unit number, binaural class (EO, EE, EI or OE), CF and sound level (15-35 dB); colour scale in spikes per presentation.]

Predicting Space from Spectrum

[Figure: left and right ear frequency-time response fields (frequency in kHz against time in ms) and virtual acoustic space stimuli used to predict spatial receptive fields (elevation against azimuth in degrees; response rate in Hz).]

Schnupp et al., Nature 2001

Examples of Predicted and Observed Spatial Receptive Fields

“Higher Order” Cortical Areas

In the macaque, primary auditory cortex (A1) is surrounded by rostral (R), lateral (L), caudomedial (CM) and medial “belt areas”. L can be further subdivided into anterior, medial and caudal subfields (AL, ML, CL).

Are There “What” and “Where” Streams in Auditory Cortex?

[Figure: anterolateral belt; caudolateral belt.]

Some reports suggest that anterior cortical belt areas may be more selective for sound identity and less selective for sound source location, while caudal belt areas are more location specific. It has been hypothesized that these may be the starting points for a ventral “what” stream heading for inferotemporal cortex and a dorsal “where” stream heading for posterior parietal cortex.

A “Panoramic” Code for Auditory Space?

Middlebrooks et al. found neural spike patterns to vary systematically with sound source direction in a number of cortical areas of the cat (AES, A1, A2, PAF). Artificial neural networks can be trained to estimate sound source azimuth from the neural spike pattern. Spike trains in PAF carry more spatial information than those in other areas, but in principle spatial information is available in all auditory cortical areas tested so far.

Azimuth, Pitch and Timbre Sensitivity in Ferret Auditory Cortex

Bizley, Walker, Silverman, King & Schnupp, J Neurosci 2009

Cortical Deactivation

Deactivating some cortical areas (A1, PAF) by cooling impairs sound localization, but deactivating others (AAF) does not. Lomber & Malhotra, J. Neurophys (2003)

Summary

A variety of acoustic cues give information about the direction and distance of a sound source. Virtually nothing is known about the neural processing of distance cues. The cues to direction include binaural cues and monaural spectral cues. These cues appear to be first encoded in the brainstem and then combined in midbrain and cortex. ITDs are encoded in the MSO, ILDs in the LSO. The superior colliculus is the only structure in the mammalian brain that contains a topographic map of auditory space. Lesion studies point to an important role of auditory cortex in many sound localisation behaviours.
The spatial tuning of many A1 neurons is easily predicted from their spectral tuning properties, suggesting that A1 represents spatial information only “implicitly”. Recent work suggests that caudal belt areas of auditory cortex may be specialized for aspects of spatial hearing. However, other researchers posit a distributed “panoramic” spike pattern code that operates across many cortical areas.

For a reading list, see http://www.physiol.ox.ac.uk/~jan/NeuroIIspatialHearing.htm

For demos and media, see http://auditoryneuroscience.com/spatial_hearing