
Towards Next Generation Integrative Mobile Semantic Health Information Assistants

Objectives

• Use semantic technologies to encode and integrate a wide range of health information to help people function at a level higher than their training.

• Leverage existing curated and uncurated sources, and build reusable integrated content sources and infrastructure.

Evan W. Patton (pattoe@rpi.edu), John Sheehan (sheehj4@rpi.edu), Yue Robin Liu (liuy30@rpi.edu), Bassem Makni (maknib@rpi.edu), and Deborah L. McGuinness (dlm@cs.rpi.edu)

Rensselaer Polytechnic Institute / 110 8th Street / Troy, NY, 12180 USA

http://tw.rpi.edu/web/project/MobileHealth

Introduction

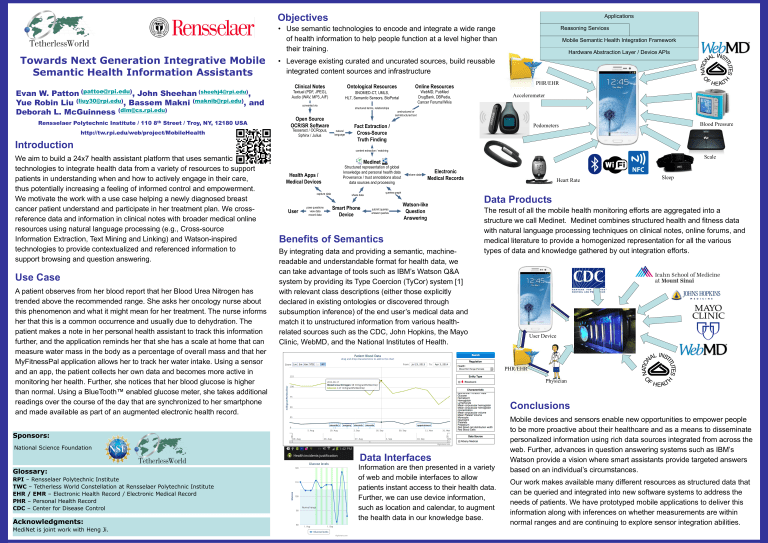

We aim to build a 24x7 health assistant platform that uses semantic technologies to integrate health data from a variety of resources to support patients in understanding when and how to actively engage in their care, thus potentially increasing a feeling of informed control and empowerment.

We motivate the work with a use case in which a newly diagnosed breast cancer patient seeks to understand and participate in her treatment plan. We cross-reference data and information in clinical notes with broader online medical resources using natural language processing (e.g., Cross-source Information Extraction, Text Mining and Linking) and Watson-inspired technologies to provide contextualized and referenced information that supports browsing and question answering.

Use Case

A patient observes from her blood report that her Blood Urea Nitrogen has trended above the recommended range. She asks her oncology nurse about this phenomenon and what it might mean for her treatment. The nurse informs her that this is a common occurrence and usually due to dehydration. The patient makes a note in her personal health assistant to track this information further, and the application reminds her that she has a scale at home that can measure water mass in the body as a percentage of overall mass and that her MyFitnessPal application allows her to track her water intake. Using a sensor and an app, the patient collects her own data and becomes more active in monitoring her health. Further, she notices that her blood glucose is higher than normal. Using a Bluetooth™-enabled glucose meter, she takes additional readings over the course of the day; these are synchronized to her smartphone and made available as part of an augmented electronic health record.

[Figure: Medinet architecture.
Components: Clinical Notes (textual: PDF, JPEG; audio: WAV, MP3, AIF), converted via open source OCR/SR software (Tesseract / OCRopus, Sphinx / Julius); Ontological Resources (SNOMED-CT, UMLS, HL7, Semantic Sensors, BioPortal); Online Resources (WebMD, PubMed, DrugBank, DBPedia, Cancer Forums/Wikis); Fact Extraction / Cross-Source Truth Finding; Health Apps / Medical Devices; User; Smart Phone Device; Watson-like Question Answering; Electronic Medical Records; Medinet (structured representation of global knowledge and personal health data, with provenance / trust annotations about data sources and processing).
Edge labels: natural language; structured terms, relationships; unstructured or semistructured text; content extraction / matching; capture data, pose questions, view data, record data; queries graph, share data; submit queries, answer queries, share data.]

Benefits of Semantics

By integrating health data and representing it in a semantic, machine-readable and understandable format, we can take advantage of tools such as IBM’s Watson Q&A system. We provide its Type Coercion (TyCor) system [1] with relevant class descriptions of the end user’s medical data (either those explicitly declared in existing ontologies or those discovered through subsumption inference) and match them to unstructured information from various health-related sources such as the CDC, Johns Hopkins, the Mayo Clinic, WebMD, and the National Institutes of Health.
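To make the role of subsumption inference concrete, the following is a minimal sketch of how a declared class can be matched against a broader expected type by walking up a class hierarchy. It is not the TyCor implementation; the class names, the `SUBCLASS_OF` table, and the scoring rule are all hypothetical and chosen only for illustration. In practice the hierarchy would come from ontologies such as SNOMED-CT or UMLS.

```python
# Hypothetical toy hierarchy (child -> parent); not taken from any real ontology.
SUBCLASS_OF = {
    "BloodUreaNitrogenTest": "KidneyFunctionTest",
    "KidneyFunctionTest": "LaboratoryTest",
    "BloodGlucoseTest": "LaboratoryTest",
    "LaboratoryTest": "ClinicalObservation",
}


def ancestors(cls):
    """Return cls followed by all of its superclasses, nearest first."""
    chain = [cls]
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        chain.append(cls)
    return chain


def is_subsumed_by(cls, candidate):
    """True if candidate is cls itself or one of its inferred superclasses."""
    return candidate in ancestors(cls)


def type_match_score(cls, expected):
    """Illustrative score: 1.0 for an exact match, smaller for more distant
    superclasses, and 0.0 when expected does not subsume cls."""
    chain = ancestors(cls)
    if expected not in chain:
        return 0.0
    return 1.0 / (1 + chain.index(expected))


if __name__ == "__main__":
    print(is_subsumed_by("BloodUreaNitrogenTest", "LaboratoryTest"))
    print(type_match_score("BloodUreaNitrogenTest", "KidneyFunctionTest"))
```

The point of the sketch is that a patient record typed only as a specific test can still be matched to text that discusses laboratory tests in general, because the broader class is inferred rather than declared.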

[Figure: Mobile Semantic Health Integration Framework. Components: User Device; Applications; Reasoning Services; Mobile Semantic Health Integration Framework; Hardware Abstraction Layer / Device APIs. Data sources: PHR/EHR, Physician, Accelerometer, Pedometers, Heart Rate, Sleep, Blood Pressure, Scale.]

Data Products

The results of all the mobile health monitoring efforts are aggregated into a structure we call Medinet. Medinet combines structured health and fitness data with the output of natural language processing techniques applied to clinical notes, online forums, and medical literature to provide a homogenized representation for all the various types of data and knowledge gathered by our integration efforts.
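As a rough illustration of what a homogenized representation with provenance might look like, the sketch below stores observations from different sources in one structure and attaches a provenance record to each. This is an assumption-laden toy, not the Medinet schema: the `Provenance`, `Observation`, and `HealthGraph` names, their fields, and the numeric trust score are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Provenance:
    source: str    # hypothetical label, e.g. "glucose-meter" or "clinical-note"
    process: str   # hypothetical label, e.g. "bluetooth-sync" or "ocr"
    trust: float   # hypothetical trust annotation in the range 0..1


@dataclass(frozen=True)
class Observation:
    measure: str
    value: float
    unit: str
    taken_at: datetime
    provenance: Provenance


class HealthGraph:
    """Toy in-memory store; a real system would likely use an RDF graph."""

    def __init__(self):
        self._observations = []

    def add(self, observation):
        self._observations.append(observation)

    def query(self, measure, min_trust=0.0):
        """Return observations of one measure, oldest first, whose source
        meets the requested trust threshold."""
        matches = [
            o for o in self._observations
            if o.measure == measure and o.provenance.trust >= min_trust
        ]
        return sorted(matches, key=lambda o: o.taken_at)
```

Keeping provenance on every observation lets a query filter or rank results by where the data came from and how it was processed, regardless of whether it originated from a device, an app, or an extracted clinical note.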

Sponsors:

National Science Foundation

Glossary:

RPI – Rensselaer Polytechnic Institute

TWC – Tetherless World Constellation at Rensselaer Polytechnic Institute

EHR / EMR – Electronic Health Record / Electronic Medical Record

PHR – Personal Health Record

CDC – Centers for Disease Control and Prevention

Acknowledgments:

Medinet is joint work with Heng Ji.

Data Interfaces

Information is then presented in a variety of web and mobile interfaces to give patients instant access to their health data.

Further, we can use device information, such as location and calendar, to augment the health data in our knowledge base.

Conclusions

Mobile devices and sensors create new opportunities to empower people to be more proactive about their healthcare and to disseminate personalized information using rich data sources integrated from across the web. Further, advances in question answering systems such as IBM’s Watson offer a vision in which smart assistants provide targeted answers based on an individual’s circumstances.

Our work makes available many different resources as structured data that can be queried and integrated into new software systems to address the needs of patients. We have prototyped mobile applications to deliver this information along with inferences on whether measurements are within normal ranges and are continuing to explore sensor integration abilities.
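The inference on whether a measurement is within a normal range can be sketched as a simple lookup and comparison. The code below is a minimal illustration, not the prototype's logic: the `REFERENCE_RANGES` table and the `classify` function are hypothetical, and the numeric bounds are placeholder values of the kind commonly quoted for adults, which vary between laboratories.

```python
# Illustrative placeholder ranges (low, high, unit); real ranges depend on
# the laboratory and the patient and would come from a curated source.
REFERENCE_RANGES = {
    "blood_urea_nitrogen": (7.0, 20.0, "mg/dL"),
    "fasting_blood_glucose": (70.0, 99.0, "mg/dL"),
}


def classify(measure, value, unit):
    """Return "below", "within", or "above" relative to the reference range.

    Raises KeyError for an unknown measure and ValueError when the unit does
    not match the unit of the stored range.
    """
    low, high, expected_unit = REFERENCE_RANGES[measure]
    if unit != expected_unit:
        raise ValueError(f"expected {expected_unit}, got {unit}")
    if value < low:
        return "below"
    if value > high:
        return "above"
    return "within"


if __name__ == "__main__":
    print(classify("blood_urea_nitrogen", 25.0, "mg/dL"))
```

Checking the unit before comparing avoids silently misclassifying readings that arrive from devices reporting in different units.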