Introduction to Time Series Analysis


Time Series Analysis

Definition

A time series $\{x_t : t \in T\}$ is a collection of random variables, usually parameterized by one of:

1) the real line $T = \mathbb{R} = (-\infty, \infty)$

2) the non-negative real line $T = \mathbb{R}^+ = [0, \infty)$

3) the integers $T = \mathbb{Z} = \{\ldots, -2, -1, 0, 1, 2, \ldots\}$

4) the non-negative integers $T = \mathbb{Z}^+ = \{0, 1, 2, \ldots\}$

If $x_t$ is a vector, the collection of random vectors $\{x_t : t \in T\}$ is a multivariate time series or multi-channel time series.

If $t$ is a vector, the collection of random variables $\{x_t : t \in T\}$ is a multidimensional "time" series or spatial series (with $T = \mathbb{R}^k$, $k$-dimensional Euclidean space, or a $k$-dimensional lattice).

Example of spatial time series

The project

• Buoys are located in a grid across the Pacific Ocean

• Measuring

– Surface temperature

– Wind speed (two components)

– Other measurements

The data are collected almost continuously.

The purpose is to study El Niño.

Technical Note:

The probability measure of a time series is defined by specifying, in a consistent manner, the joint distribution of all finite subsets of $\{x_t : t \in T\}$; i.e. the marginal distribution of a subset of random variables, computed from the joint density of a larger set of variables, should agree with the distribution assigned to that subset.

The time series is Normal if all finite subsets of $\{x_t : t \in T\}$ have a multivariate normal distribution.

Similar statements are true for multi-channel time series and multidimensional time series.

Definition:

$\mu(t)$ = mean value function of $\{x_t : t \in T\}$ = $E[x_t]$ for $t \in T$.

$\sigma(t,s)$ = covariance function of $\{x_t : t \in T\}$ = $E[(x_t - \mu(t))(x_s - \mu(s))]$ for $t, s \in T$.

For a multi-channel time series:

$\mu(t)$ = mean vector function of $\{x_t : t \in T\}$ = $E[x_t]$ for $t \in T$, and

$\Sigma(t,s)$ = covariance matrix function of $\{x_t : t \in T\}$ = $E[(x_t - \mu(t))(x_s - \mu(s))']$ for $t, s \in T$.

The $i$th element of the $k \times 1$ vector $\mu(t)$,

$$\mu_i(t) = E[x_{it}],$$

is the mean value function of the time series $\{x_{it} : t \in T\}$.

The $(i,j)$th element of the $k \times k$ matrix $\Sigma(t,s)$,

$$\sigma_{ij}(t,s) = E[(x_{it} - \mu_i(t))(x_{js} - \mu_j(s))],$$

is called the cross-covariance function of the two time series $\{x_{it} : t \in T\}$ and $\{x_{jt} : t \in T\}$.

Definition:

The time series $\{x_t : t \in T\}$ is stationary if the joint distribution of $x_{t_1}, x_{t_2}, \ldots, x_{t_k}$ is the same as the joint distribution of $x_{t_1+h}, x_{t_2+h}, \ldots, x_{t_k+h}$ for all finite subsets $t_1, t_2, \ldots, t_k$ of $T$ and all choices of $h$.

Definition:

The multi-channel time series $\{x_t : t \in T\}$ is stationary if the joint distribution of the random vectors $x_{t_1}, x_{t_2}, \ldots, x_{t_k}$ is the same as the joint distribution of $x_{t_1+h}, x_{t_2+h}, \ldots, x_{t_k+h}$ for all finite subsets $t_1, t_2, \ldots, t_k$ of $T$ and all choices of $h$.

Definition:

The multidimensional time series $\{x_t : t \in T\}$ is stationary if the joint distribution of $x_{t_1}, x_{t_2}, \ldots, x_{t_k}$ is the same as the joint distribution of $x_{t_1+h}, x_{t_2+h}, \ldots, x_{t_k+h}$ for all finite subsets $t_1, t_2, \ldots, t_k$ of $T$ and all choices of the (vector) shift $h$.

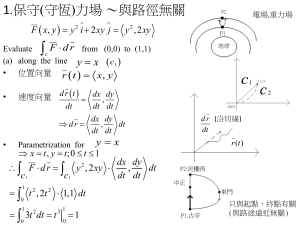

Figure: Stationarity. The distribution of observations at one set of points in time is the same as the distribution of observations at the same set of points shifted along the time axis.

Some Implications of Stationarity

If $\{x_t : t \in T\}$ is stationary then:

1. The distribution of $x_t$ is the same for all $t \in T$.

2. The joint distribution of $x_t, x_{t+h}$ is the same as the joint distribution of $x_s, x_{s+h}$.

Implication of stationarity for the mean value function and the covariance function

If $\{x_t : t \in T\}$ is stationary then for $t \in T$

$$\mu(t) = E[x_t] = \mu$$

and for $t, s \in T$

$$\begin{aligned}
\sigma(t,s) &= E[(x_t - \mu)(x_s - \mu)] \\
&= E[(x_{t+h} - \mu)(x_{s+h} - \mu)] \\
&= E[(x_{t-s} - \mu)(x_0 - \mu)] \quad \text{with } h = -s \\
&= \sigma(t-s).
\end{aligned}$$

If the multi-channel time series $\{x_t : t \in T\}$ is stationary then for $t \in T$

$$\mu(t) = E[x_t] = \mu$$

and for $t, s \in T$

$$\Sigma(t,s) = \Sigma(t-s).$$

Thus for a stationary time series the mean value function is constant and the covariance function is a function only of the distance in time, $t - s$.

If the multidimensional time series $\{x_t : t \in T\}$ is stationary then for $t \in T$

$$\mu(t) = E[x_t] = \mu$$

and for $t, s \in T$

$$\sigma(t,s) = E[(x_t - \mu)(x_s - \mu)] = \sigma(t-s) \quad \text{(called the covariogram)}.$$

Variogram

$$\begin{aligned}
V(t,s) = V(t-s) &= \mathrm{Var}[x_t - x_s] = E[(x_t - x_s)^2] \\
&= \mathrm{Var}[x_t] + \mathrm{Var}[x_s] - 2\,\mathrm{Cov}[x_t, x_s] \\
&= 2[\sigma(0) - \sigma(t-s)].
\end{aligned}$$

Definition:

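$\rho(t,s)$ = autocorrelation function of $\{x_t : t \in T\}$ = correlation between $x_t$ and $x_s$:

$$\rho(t,s) = \frac{\mathrm{cov}(x_t, x_s)}{\sqrt{\mathrm{var}(x_t)\,\mathrm{var}(x_s)}} = \frac{\sigma(t,s)}{\sqrt{\sigma(t,t)\,\sigma(s,s)}} \quad \text{for } t, s \in T.$$

If $\{x_t : t \in T\}$ is stationary then

$\rho(h)$ = autocorrelation function of $\{x_t : t \in T\}$ = correlation between $x_t$ and $x_{t+h}$:

$$\rho(h) = \frac{\mathrm{cov}(x_{t+h}, x_t)}{\sqrt{\mathrm{var}(x_{t+h})\,\mathrm{var}(x_t)}} = \frac{\sigma(h)}{\sqrt{\sigma(0)\,\sigma(0)}} = \frac{\sigma(h)}{\sigma(0)}.$$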

Definition:

The time series $\{x_t : t \in T\}$ is weakly stationary if:

$$\mu(t) = E[x_t] = \mu \quad \text{for all } t \in T$$

and

$$\sigma(t,s) = \sigma(t-s) \quad \text{for all } t, s \in T$$

or

$$\rho(t,s) = \rho(t-s) \quad \text{for all } t, s \in T.$$

Examples

Stationary time series

1. Let $X$ denote a single random variable with mean $\mu$ and standard deviation $\sigma$. In addition $X$ may also be Normal (this condition is not necessary).

Let $x_t = X$ for all $t \in T = \{\ldots, -2, -1, 0, 1, 2, \ldots\}$.

Then $E[x_t] = \mu = E[X]$ for $t \in T$ and

$$\sigma(h) = E[(x_{t+h} - \mu)(x_t - \mu)] = \mathrm{Cov}(x_{t+h}, x_t) = E[(X - \mu)(X - \mu)] = \mathrm{Var}(X) = \sigma^2 \quad \text{for all } h,$$

$$\rho(h) = \frac{\sigma(h)}{\sigma(0)} = 1 \quad \text{for all } h.$$


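2. Suppose $\{x_t : t \in T\}$ are identically distributed and uncorrelated (independent), with $T = \{\ldots, -2, -1, 0, 1, 2, \ldots\}$.

Then $E[x_t] = \mu$ for $t \in T$ and

$$\sigma(h) = E[(x_{t+h} - \mu)(x_t - \mu)] = \mathrm{Cov}(x_{t+h}, x_t) = \begin{cases} \mathrm{Var}(x_t) & h = 0 \\ 0 & h \neq 0 \end{cases} = \begin{cases} \sigma^2 & h = 0 \\ 0 & h \neq 0. \end{cases}$$

The autocorrelation function:

$$\rho(h) = \frac{\sigma(h)}{\sigma(0)} = \begin{cases} 1 & h = 0 \\ 0 & h \neq 0. \end{cases}$$

Comment:

If $\mu = 0$ then the time series $\{x_t : t \in T\}$ is called a white noise time series.

Thus a white noise time series consists of independent identically distributed random variables with mean 0 and common variance $\sigma^2$.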
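The white noise autocorrelation above can be checked numerically. The following is a minimal sketch, assuming Gaussian noise, an illustrative standard deviation of 2 and a series length of 5000; the helper name `sample_acf` is hypothetical and simply implements the usual sample autocorrelation.

```python
import random


def sample_acf(x, max_lag):
    """Sample autocorrelations r(0), ..., r(max_lag) of the sequence x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    c0 = sum(d * d for d in dev) / n          # sample variance (lag 0)
    acf = []
    for h in range(max_lag + 1):
        ch = sum(dev[t + h] * dev[t] for t in range(n - h)) / n
        acf.append(ch / c0)
    return acf


random.seed(0)                                # fixed seed so the run is repeatable
sigma = 2.0                                   # assumed common standard deviation
u = [random.gauss(0.0, sigma) for _ in range(5000)]   # Gaussian white noise
acf = sample_acf(u, 5)
print([round(r, 3) for r in acf])             # r(0) is 1; the other lags should be near 0
```

For a series of length n the sample autocorrelations of white noise at non-zero lags fluctuate around 0 with standard error of roughly 1/sqrt(n), so small non-zero values are expected.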


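3. Suppose $X_1, X_2, \ldots, X_k$ and $Y_1, Y_2, \ldots, Y_k$ are independent random variables with

$$E[X_i] = E[Y_i] = 0 \quad \text{and} \quad E[X_i^2] = E[Y_i^2] = \sigma_i^2.$$

Let $\lambda_1, \lambda_2, \ldots, \lambda_k$ denote $k$ values in $(0, \pi)$.

For any $t \in T = \{\ldots, -2, -1, 0, 1, 2, \ldots\}$ let

$$\begin{aligned}
x_t &= \sum_{i=1}^{k} \left[ X_i \cos(\lambda_i t) + Y_i \sin(\lambda_i t) \right] \\
&= \sum_{i=1}^{k} \left[ X_i \cos(2\pi \nu_i t) + Y_i \sin(2\pi \nu_i t) \right] \\
&= \sum_{i=1}^{k} \left[ X_i \cos\!\left(\frac{2\pi t}{P_i}\right) + Y_i \sin\!\left(\frac{2\pi t}{P_i}\right) \right],
\end{aligned}$$

where $\nu_i = \lambda_i / (2\pi)$ is the frequency and $P_i = 1/\nu_i$ the period of the $i$th component.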


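Then

$$E[x_t] = E\!\left[\sum_{i=1}^{k} \left( X_i \cos(\lambda_i t) + Y_i \sin(\lambda_i t) \right)\right] = \sum_{i=1}^{k} \left( E[X_i] \cos(\lambda_i t) + E[Y_i] \sin(\lambda_i t) \right) = 0$$

and

$$\sigma(h) = E[x_{t+h}\, x_t] = E\!\left[\left(\sum_{i=1}^{k} \left[X_i \cos \lambda_i (t+h) + Y_i \sin \lambda_i (t+h)\right]\right)\left(\sum_{j=1}^{k} \left[X_j \cos \lambda_j t + Y_j \sin \lambda_j t\right]\right)\right].$$

Hence

$$\begin{aligned}
\sigma(h) &= \sum_{i=1}^{k} \sum_{j=1}^{k} E\big[ X_i X_j \cos \lambda_i (t+h) \cos \lambda_j t + X_i Y_j \cos \lambda_i (t+h) \sin \lambda_j t \\
&\qquad\qquad + Y_i X_j \sin \lambda_i (t+h) \cos \lambda_j t + Y_i Y_j \sin \lambda_i (t+h) \sin \lambda_j t \big] \\
&= \sum_{i=1}^{k} \sigma_i^2 \left[ \cos \lambda_i (t+h) \cos \lambda_i t + \sin \lambda_i (t+h) \sin \lambda_i t \right],
\end{aligned}$$

since $E[X_i Y_j] = 0$ for all $i, j$, $E[X_i X_j] = E[Y_i Y_j] = 0$ if $i \neq j$, and $E[X_i^2] = E[Y_i^2] = \sigma_i^2$.

Hence, using $\cos(A - B) = \cos(A)\cos(B) + \sin(A)\sin(B)$,

$$\sigma(h) = \sum_{i=1}^{k} \sigma_i^2 \cos\big(\lambda_i (t+h) - \lambda_i t\big) = \sum_{i=1}^{k} \sigma_i^2 \cos(\lambda_i h)$$

and

$$\rho(h) = \frac{\sigma(h)}{\sigma(0)} = \frac{\sum_{i=1}^{k} \sigma_i^2 \cos(\lambda_i h)}{\sum_{j=1}^{k} \sigma_j^2} = \sum_{i=1}^{k} w_i \cos(\lambda_i h), \quad \text{where } w_i = \frac{\sigma_i^2}{\sum_{j=1}^{k} \sigma_j^2}.$$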
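The closing formula, an autocorrelation that is a weighted sum of cosines, is simple to evaluate directly. The sketch below assumes two illustrative frequencies and variances chosen only for demonstration; the function name `sinusoid_acf` is hypothetical.

```python
import math


def sinusoid_acf(lams, variances, h):
    """rho(h) = sum_i w_i cos(lambda_i h), with w_i = sigma_i^2 / sum_j sigma_j^2."""
    total = sum(variances)
    return sum(v / total * math.cos(lam * h) for lam, v in zip(lams, variances))


lams = [math.pi / 6, math.pi / 2]     # illustrative frequencies in (0, pi)
variances = [3.0, 1.0]                # illustrative sigma_i^2, giving weights 0.75 and 0.25
for h in range(5):
    print(h, round(sinusoid_acf(lams, variances, h), 4))
```

Because the weights sum to 1, the function returns 1 at lag 0, and the autocorrelation does not die out as the lag grows: it keeps oscillating with the periods of the components.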

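4. The Moving Average time series of order q, MA(q)

Let $\alpha_0 = 1, \alpha_1, \alpha_2, \ldots, \alpha_q$ denote $q + 1$ numbers.

Let $\{u_t : t \in T\}$ denote a white noise time series with variance $\sigma^2$ (independent, with mean 0 and variance $\sigma^2$).

Let $\{x_t : t \in T\}$ be defined by the equation

$$x_t = \mu + \alpha_0 u_t + \alpha_1 u_{t-1} + \alpha_2 u_{t-2} + \cdots + \alpha_q u_{t-q} = \mu + u_t + \alpha_1 u_{t-1} + \alpha_2 u_{t-2} + \cdots + \alpha_q u_{t-q}.$$

Then $\{x_t : t \in T\}$ is called a Moving Average time series of order q, MA(q).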


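The mean

$$E[x_t] = E[\mu + \alpha_0 u_t + \alpha_1 u_{t-1} + \cdots + \alpha_q u_{t-q}] = \mu + \alpha_0 E[u_t] + \alpha_1 E[u_{t-1}] + \cdots + \alpha_q E[u_{t-q}] = \mu.$$

The autocovariance function

$$\begin{aligned}
\sigma(h) &= E[(x_{t+h} - \mu)(x_t - \mu)] \\
&= E\big[(u_{t+h} + \alpha_1 u_{t+h-1} + \cdots + \alpha_q u_{t+h-q})(u_t + \alpha_1 u_{t-1} + \cdots + \alpha_q u_{t-q})\big] \\
&= E\!\left[\left(\sum_{i=0}^{q} \alpha_i u_{t+h-i}\right)\left(\sum_{j=0}^{q} \alpha_j u_{t-j}\right)\right] \\
&= \sum_{i=0}^{q} \sum_{j=0}^{q} \alpha_i \alpha_j E[u_{t+h-i}\, u_{t-j}] \\
&= \begin{cases} \sigma^2 \displaystyle\sum_{i=0}^{q-h} \alpha_i \alpha_{i+h} & \text{if } h \le q \\ 0 & \text{if } h > q, \end{cases}
\end{aligned}$$

since $E[u_i u_j] = 0$ if $i \neq j$ and $E[u_i^2] = \sigma^2$.

The autocorrelation function for an MA(q) time series

$$\rho(h) = \frac{\sigma(h)}{\sigma(0)} = \begin{cases} \dfrac{\sum_{i=0}^{q-h} \alpha_i \alpha_{i+h}}{\sum_{i=0}^{q} \alpha_i^2} & \text{if } h \le q \\ 0 & \text{if } h > q. \end{cases}$$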
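The MA(q) autocovariance and autocorrelation formulas translate directly into code. This is a minimal sketch under the convention used above (coefficients listed as alpha_0 = 1, alpha_1, ..., alpha_q); the function names and the MA(2) coefficients are illustrative assumptions.

```python
def ma_autocovariance(alpha, sigma2, h):
    """sigma(h) for an MA(q) series; alpha = [alpha_0, ..., alpha_q] with alpha_0 = 1."""
    q = len(alpha) - 1
    h = abs(h)                        # sigma(-h) = sigma(h)
    if h > q:
        return 0.0                    # the autocovariance cuts off after lag q
    return sigma2 * sum(alpha[i] * alpha[i + h] for i in range(q - h + 1))


def ma_autocorrelation(alpha, h):
    """rho(h) = sigma(h) / sigma(0); the noise variance cancels."""
    return ma_autocovariance(alpha, 1.0, h) / ma_autocovariance(alpha, 1.0, 0)


alpha = [1.0, 0.5, -0.3]              # illustrative MA(2) coefficients
for h in range(4):
    print(h, round(ma_autocorrelation(alpha, h), 4))
```

The printed values show the defining feature of a moving average series: the autocorrelation is exactly zero for every lag beyond q.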

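5. The Autoregressive time series of order p, AR(p)

Let $\beta_1, \beta_2, \ldots, \beta_p$ denote $p$ numbers and let $\delta$ denote a constant.

Let $\{u_t : t \in T\}$ denote a white noise time series with variance $\sigma^2$ (independent, with mean 0 and variance $\sigma^2$).

Let $\{x_t : t \in T\}$ be defined by the equation

$$x_t = \beta_1 x_{t-1} + \beta_2 x_{t-2} + \cdots + \beta_p x_{t-p} + \delta + u_t.$$

Then $\{x_t : t \in T\}$ is called an Autoregressive time series of order p, AR(p).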


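Comment:

An autoregressive time series is not necessarily stationary.

Suppose $\{x_t : t \in T\}$ is an AR(1) time series satisfying the equation

$$x_t = \beta_1 x_{t-1} + \delta + u_t = x_{t-1} + u_t,$$

where $\{u_t : t \in T\}$ is a white noise time series with variance $\sigma^2$, i.e. $\beta_1 = 1$ and $\delta = 0$. Then

$$x_t = x_{t-1} + u_t = x_{t-2} + u_{t-1} + u_t = \cdots = x_0 + u_1 + u_2 + \cdots + u_{t-1} + u_t,$$

$$E[x_t] = E[x_0] + E[u_1] + E[u_2] + \cdots + E[u_{t-1}] + E[u_t] = E[x_0],$$

but

$$\mathrm{Var}[x_t] = \mathrm{Var}[x_0] + \mathrm{Var}[u_1] + \cdots + \mathrm{Var}[u_t] = \mathrm{Var}[x_0] + t\sigma^2,$$

which is not constant.

A time series $\{x_t : t \in T\}$ satisfying the equation

$$x_t = x_{t-1} + u_t$$

is called a Random Walk.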
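The growth of the random walk variance with t can be seen in a small simulation. The following sketch assumes Gaussian increments, a fixed start x_0 = 0 (so Var[x_0] = 0) and 4000 simulated paths; all of these choices and the function names are illustrative assumptions.

```python
import random


def random_walk_end_values(n_paths, t, sigma, seed=0):
    """Simulate n_paths random walks started at x_0 = 0 and return x_t for each."""
    rng = random.Random(seed)
    ends = []
    for _ in range(n_paths):
        x = 0.0
        for _ in range(t):
            x += rng.gauss(0.0, sigma)        # x_t = x_{t-1} + u_t
        ends.append(x)
    return ends


def sample_variance(values):
    """Unbiased sample variance."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / (len(values) - 1)


sigma = 1.0
for t in (10, 40):
    v = sample_variance(random_walk_end_values(4000, t, sigma))
    print(t, round(v, 2), "theory:", t * sigma ** 2)
```

The sample variance across paths should track t times sigma squared, so it roughly quadruples between t = 10 and t = 40, which is why no single variance (and hence no stationary distribution) exists.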

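Derivation of the mean, autocovariance function and autocorrelation function of a stationary Autoregressive time series

We use extensively the rules of expectation.

Assume that the autoregressive time series $\{x_t : t \in T\}$ defined by the equation

$$x_t = \beta_1 x_{t-1} + \beta_2 x_{t-2} + \cdots + \beta_p x_{t-p} + \delta + u_t$$

is stationary.

Let $\mu = E(x_t)$. Then

$$E[x_t] = \beta_1 E[x_{t-1}] + \beta_2 E[x_{t-2}] + \cdots + \beta_p E[x_{t-p}] + \delta + E[u_t],$$

$$\mu = \beta_1 \mu + \beta_2 \mu + \cdots + \beta_p \mu + \delta,$$

$$(1 - \beta_1 - \beta_2 - \cdots - \beta_p)\,\mu = \delta,$$

or

$$E[x_t] = \mu = \frac{\delta}{1 - \beta_1 - \beta_2 - \cdots - \beta_p}.$$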

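The Autocovariance function, $\sigma(h)$

The autocovariance function, $\sigma(h)$, of a stationary autoregressive time series $\{x_t : t \in T\}$ can be determined by using the equation

$$x_t = \beta_1 x_{t-1} + \beta_2 x_{t-2} + \cdots + \beta_p x_{t-p} + \delta + u_t.$$

Now $\delta = (1 - \beta_1 - \beta_2 - \cdots - \beta_p)\,\mu$. Thus

$$x_t - \mu = \beta_1 (x_{t-1} - \mu) + \cdots + \beta_p (x_{t-p} - \mu) + u_t.$$

Hence

$$\begin{aligned}
\sigma(h) &= E[(x_{t+h} - \mu)(x_t - \mu)] \\
&= E\big[\{\beta_1 (x_{t+h-1} - \mu) + \cdots + \beta_p (x_{t+h-p} - \mu) + u_{t+h}\}(x_t - \mu)\big] \\
&= \beta_1 E[(x_{t+h-1} - \mu)(x_t - \mu)] + \cdots + \beta_p E[(x_{t+h-p} - \mu)(x_t - \mu)] + E[u_{t+h}(x_t - \mu)] \\
&= \beta_1 \sigma(h-1) + \cdots + \beta_p \sigma(h-p) + \sigma_{ux}(h),
\end{aligned}$$

where

$$\sigma_{ux}(h) = E[u_{t+h}(x_t - \mu)] = \begin{cases} 0 & h > 0 \\ E[u_t (x_t - \mu)] & h = 0. \end{cases}$$

Now

$$\begin{aligned}
\sigma_{ux}(0) &= E[u_t (x_t - \mu)] \\
&= E\big[u_t \{\beta_1 (x_{t-1} - \mu) + \cdots + \beta_p (x_{t-p} - \mu) + u_t\}\big] \\
&= \beta_1 E[u_t (x_{t-1} - \mu)] + \cdots + \beta_p E[u_t (x_{t-p} - \mu)] + E[u_t^2] \\
&= \sigma^2.
\end{aligned}$$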

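The equations for the autocovariance function of an AR(p) time series

$$\begin{aligned}
\sigma(0) &= \beta_1 \sigma(-1) + \cdots + \beta_p \sigma(-p) + \sigma^2 \\
\sigma(1) &= \beta_1 \sigma(0) + \cdots + \beta_p \sigma(1-p) \\
\sigma(2) &= \beta_1 \sigma(1) + \cdots + \beta_p \sigma(2-p) \\
\sigma(3) &= \beta_1 \sigma(2) + \cdots + \beta_p \sigma(3-p)
\end{aligned}$$

etc.

Or, using $\sigma(-h) = \sigma(h)$,

$$\begin{aligned}
\sigma(0) &= \beta_1 \sigma(1) + \cdots + \beta_p \sigma(p) + \sigma^2 \\
\sigma(1) &= \beta_1 \sigma(0) + \cdots + \beta_p \sigma(p-1) \\
\sigma(2) &= \beta_1 \sigma(1) + \cdots + \beta_p \sigma(p-2) \\
&\;\;\vdots \\
\sigma(p) &= \beta_1 \sigma(p-1) + \cdots + \beta_p \sigma(0)
\end{aligned}$$

and

$$\sigma(h) = \beta_1 \sigma(h-1) + \cdots + \beta_p \sigma(h-p) \quad \text{for } h > p.$$

Use the first $p + 1$ equations to find $\sigma(0), \sigma(1), \ldots, \sigma(p)$.

Then use

$$\sigma(h) = \beta_1 \sigma(h-1) + \cdots + \beta_p \sigma(h-p)$$

to compute $\sigma(h)$ for $h > p$.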

Summary: the Autoregressive time series of order p, AR(p)

If the autoregressive time series $\{x_t : t \in T\}$ defined by the equation

$$x_t = \beta_1 x_{t-1} + \beta_2 x_{t-2} + \cdots + \beta_p x_{t-p} + \delta + u_t,$$

with $\{u_t : t \in T\}$ a white noise time series with variance $\sigma^2$, is stationary, then its mean is

$$E[x_t] = \mu = \frac{\delta}{1 - \beta_1 - \beta_2 - \cdots - \beta_p}$$

and its autocovariance function $\sigma(h)$ satisfies the Yule-Walker equations

$$\begin{aligned}
\sigma(0) &= \beta_1 \sigma(1) + \cdots + \beta_p \sigma(p) + \sigma^2 \\
\sigma(1) &= \beta_1 \sigma(0) + \cdots + \beta_p \sigma(p-1) \\
\sigma(2) &= \beta_1 \sigma(1) + \cdots + \beta_p \sigma(p-2) \\
&\;\;\vdots \\
\sigma(p) &= \beta_1 \sigma(p-1) + \cdots + \beta_p \sigma(0)
\end{aligned}$$

and

$$\sigma(h) = \beta_1 \sigma(h-1) + \cdots + \beta_p \sigma(h-p) \quad \text{for } h > p.$$

Use the first $p + 1$ equations (the Yule-Walker equations) to find $\sigma(0), \sigma(1), \ldots, \sigma(p)$, then use the last equation to compute $\sigma(h)$ for $h > p$.

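The autocorrelation function, $\rho(h)$, of a stationary autoregressive time series $\{x_t : t \in T\}$ is

$$\rho(h) = \frac{\sigma(h)}{\sigma(0)}.$$

Dividing by $\sigma(0)$, the Yule-Walker equations become:

$$\begin{aligned}
1 &= \beta_1 \rho(1) + \cdots + \beta_p \rho(p) + \frac{\sigma^2}{\sigma(0)} \\
\rho(1) &= \beta_1 \cdot 1 + \cdots + \beta_p \rho(p-1) \\
\rho(2) &= \beta_1 \rho(1) + \cdots + \beta_p \rho(p-2) \\
&\;\;\vdots \\
\rho(p) &= \beta_1 \rho(p-1) + \cdots + \beta_p \cdot 1
\end{aligned}$$

and

$$\rho(h) = \beta_1 \rho(h-1) + \cdots + \beta_p \rho(h-p) \quad \text{for } h > p.$$

To find $\rho(h)$ and $\sigma(0)$: first solve the $p$ equations

$$\begin{aligned}
\rho(1) &= \beta_1 \cdot 1 + \cdots + \beta_p \rho(p-1) \\
\rho(2) &= \beta_1 \rho(1) + \cdots + \beta_p \rho(p-2) \\
&\;\;\vdots \\
\rho(p) &= \beta_1 \rho(p-1) + \cdots + \beta_p \cdot 1
\end{aligned}$$

for $\rho(1), \ldots, \rho(p)$. Then

$$\sigma(0) = \frac{\sigma^2}{1 - \beta_1 \rho(1) - \cdots - \beta_p \rho(p)}$$

and, for $h > p$,

$$\rho(h) = \beta_1 \rho(h-1) + \cdots + \beta_p \rho(h-p).$$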
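The procedure just described (solve p linear equations for the first p autocorrelations, compute the variance, then recurse) can be sketched in code. This is one possible implementation using plain Gaussian elimination from the standard library only; the function names and the AR(2) coefficients in the usage lines are illustrative assumptions, and a stationary model is assumed.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]      # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                      # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def ar_acf(beta, sigma2, max_lag):
    """Return (rho, sigma0), where rho[h] = rho(h) for h = 0..max_lag."""
    p = len(beta)
    # Equation h (h = 1..p): rho(h) - sum_j beta_j rho(|h - j|) = 0, with rho(0) = 1.
    a = [[0.0] * p for _ in range(p)]
    b = [0.0] * p
    for h in range(1, p + 1):
        a[h - 1][h - 1] += 1.0
        for j in range(1, p + 1):
            k = abs(h - j)
            if k == 0:
                b[h - 1] += beta[j - 1]                 # the beta_h * rho(0) term
            else:
                a[h - 1][k - 1] -= beta[j - 1]
    rho = [1.0] + solve_linear(a, b)
    sigma0 = sigma2 / (1.0 - sum(beta[j] * rho[j + 1] for j in range(p)))
    for h in range(p + 1, max_lag + 1):                 # recursion for h > p
        rho.append(sum(beta[j] * rho[h - 1 - j] for j in range(p)))
    return rho[: max_lag + 1], sigma0


rho, sigma0 = ar_acf([0.7, 0.2], 2.0 ** 2, 8)           # illustrative AR(2), sigma = 2
print([round(r, 4) for r in rho], round(sigma0, 3))
```

For small p the equations are easy to solve by hand; the elimination routine only matters for higher orders, where a library solver would normally be used instead.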

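Example

Consider the AR(2) time series

$$x_t = 0.7\, x_{t-1} + 0.2\, x_{t-2} + 4.1 + u_t,$$

where $\{u_t\}$ is a white noise time series with standard deviation $\sigma = 2.0$ (white noise ≡ independent, mean zero, normal).

Find $\mu$, $\sigma(h)$ and $\rho(h)$.

To find $\rho(h)$ solve the equations

$$\rho(1) = \beta_1 \cdot 1 + \beta_2\, \rho(1), \qquad \rho(2) = \beta_1\, \rho(1) + \beta_2 \cdot 1,$$

or

$$\rho(1) = 0.7 + 0.2\, \rho(1), \qquad \rho(2) = 0.7\, \rho(1) + 0.2,$$

thus

$$\rho(1) = \frac{0.7}{1 - 0.2} = \frac{0.7}{0.8} = 0.875, \qquad \rho(2) = 0.7\,(0.875) + 0.2 = 0.8125.$$

For $h > 2$

$$\rho(h) = \beta_1 \rho(h-1) + \beta_2 \rho(h-2) = 0.7\, \rho(h-1) + 0.2\, \rho(h-2).$$

This can be used in sequence to find $\rho(3), \rho(4), \rho(5)$, etc.

Results

| $h$ | $\rho(h)$ |
|---|---|
| 0 | 1.0000 |
| 1 | 0.8750 |
| 2 | 0.8125 |
| 3 | 0.7438 |
| 4 | 0.6831 |
| 5 | 0.6269 |
| 6 | 0.5755 |
| 7 | 0.5282 |
| 8 | 0.4849 |

To find $\sigma(0)$ use

$$\sigma(0) = \frac{\sigma^2}{1 - \beta_1 \rho(1) - \beta_2 \rho(2)} = \frac{2.0^2}{1 - 0.70\,(0.8750) - 0.20\,(0.8125)} = 17.778.$$

To find $\sigma(h)$ use

$$\sigma(h) = \sigma(0)\, \rho(h).$$

To find $\mu$ use

$$\mu = \frac{\delta}{1 - \beta_1 - \beta_2} = \frac{4.1}{1 - 0.70 - 0.20} = \frac{4.1}{0.1} = 41.$$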

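An explicit formula for $\rho(h)$

Autoregressive time series of order p.

Consider solving the difference equation

$$\rho(h) - \beta_1 \rho(h-1) - \cdots - \beta_p \rho(h-p) = 0.$$

This difference equation can be solved by setting up the polynomial

$$\beta(x) = 1 - \beta_1 x - \cdots - \beta_p x^p = \left(1 - \frac{x}{r_1}\right)\left(1 - \frac{x}{r_2}\right) \cdots \left(1 - \frac{x}{r_p}\right),$$

where $r_1, r_2, \ldots, r_p$ are the roots of the polynomial $\beta(x)$.

The difference equation

$$\rho(h) - \beta_1 \rho(h-1) - \cdots - \beta_p \rho(h-p) = 0$$

has the general solution (for distinct roots)

$$\rho(h) = c_1 \left(\frac{1}{r_1}\right)^h + c_2 \left(\frac{1}{r_2}\right)^h + \cdots + c_p \left(\frac{1}{r_p}\right)^h,$$

where $c_1, c_2, \ldots, c_p$ are determined by using the starting values of the sequence $\rho(h)$.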

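Example: An AR(1) time series

$$x_t = \beta_1 x_{t-1} + \delta + u_t$$

$$\rho(0) = 1, \qquad \rho(1) = \beta_1 \rho(0) = \beta_1,$$

and for $h > 1$

$$\rho(h) = \beta_1 \rho(h-1) = \beta_1^h,$$

and

$$\sigma(0) = \frac{\sigma^2}{1 - \beta_1 \rho(1)} = \frac{\sigma^2}{1 - \beta_1^2}.$$

The difference equation

$$\rho(h) - \beta_1 \rho(h-1) = 0$$

can also be solved by setting up the polynomial

$$\beta(x) = 1 - \beta_1 x = 1 - \frac{x}{r_1}, \quad \text{where } r_1 = \frac{1}{\beta_1}.$$

Then a general formula for $\rho(h)$ is

$$\rho(h) = c_1 \left(\frac{1}{r_1}\right)^h = c_1 \beta_1^h = \beta_1^h, \quad \text{since } \rho(0) = 1.$$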
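The AR(1) results can be compared with a simulated series. The sketch below assumes Gaussian white noise, illustrative parameter values (beta_1 = 0.6, delta = 2, sigma = 1) and a burn-in period so that the start value has little influence; the function names are hypothetical.

```python
import random


def simulate_ar1(beta1, delta, sigma, n, seed=0, burn_in=500):
    """Simulate x_t = beta1 * x_{t-1} + delta + u_t and drop a burn-in stretch."""
    rng = random.Random(seed)
    x = delta / (1.0 - beta1)                 # start at the stationary mean
    out = []
    for t in range(n + burn_in):
        x = beta1 * x + delta + rng.gauss(0.0, sigma)
        if t >= burn_in:
            out.append(x)
    return out


def sample_acf(x, max_lag):
    """Sample autocovariance at lag 0 and autocorrelations r(0..max_lag)."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    c0 = sum(d * d for d in dev) / n
    acf = [sum(dev[t + h] * dev[t] for t in range(n - h)) / n / c0
           for h in range(max_lag + 1)]
    return c0, acf


beta1, delta, sigma = 0.6, 2.0, 1.0           # illustrative values with |beta1| < 1
series = simulate_ar1(beta1, delta, sigma, 20000)
c0, acf = sample_acf(series, 4)
for h, r in enumerate(acf):
    print(h, round(r, 3), "theory:", round(beta1 ** h, 3))
print("variance", round(c0, 3), "theory:", round(sigma ** 2 / (1 - beta1 ** 2), 3))
```

The sample values agree with the formulas only up to sampling error, which shrinks as the series gets longer.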

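Example: An AR(2) time series

$$x_t = \beta_1 x_{t-1} + \beta_2 x_{t-2} + \delta + u_t$$

$\rho(0) = 1$ and $\rho(1) = \beta_1 + \beta_2\, \rho(1)$, or

$$\rho(1) = \frac{\beta_1}{1 - \beta_2},$$

and for $h > 1$

$$\rho(h) = \beta_1 \rho(h-1) + \beta_2 \rho(h-2).$$

Setting up the polynomial

$$\beta(x) = 1 - \beta_1 x - \beta_2 x^2 = \left(1 - \frac{x}{r_1}\right)\left(1 - \frac{x}{r_2}\right) = 1 - \left(\frac{1}{r_1} + \frac{1}{r_2}\right) x + \frac{1}{r_1 r_2}\, x^2,$$

where

$$r_1 = \frac{-\beta_1 + \sqrt{\beta_1^2 + 4\beta_2}}{2\beta_2} \quad \text{and} \quad r_2 = \frac{-\beta_1 - \sqrt{\beta_1^2 + 4\beta_2}}{2\beta_2}.$$

Note:

$$\beta_1 = \frac{1}{r_1} + \frac{1}{r_2} \quad \text{and} \quad \beta_2 = -\frac{1}{r_1 r_2}.$$

Then a general formula for $\rho(h)$ is

$$\rho(h) = c_1 \left(\frac{1}{r_1}\right)^h + c_2 \left(\frac{1}{r_2}\right)^h.$$

For $h = 0$ and $h = 1$:

$$1 = c_1 + c_2, \qquad \rho(1) = \frac{\beta_1}{1 - \beta_2} = \frac{c_1}{r_1} + \frac{c_2}{r_2}.$$

Solving for $c_1$ and $c_2$:

$$c_1 = \frac{r_1 (1 - r_2^2)}{(r_1 r_2 + 1)(r_1 - r_2)} \quad \text{and} \quad c_2 = \frac{r_2 (r_1^2 - 1)}{(r_1 r_2 + 1)(r_1 - r_2)}.$$

Then a general formula for $\rho(h)$ is

$$\rho(h) = \frac{r_1 (1 - r_2^2)}{(r_1 r_2 + 1)(r_1 - r_2)} \left(\frac{1}{r_1}\right)^h + \frac{r_2 (r_1^2 - 1)}{(r_1 r_2 + 1)(r_1 - r_2)} \left(\frac{1}{r_2}\right)^h.$$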
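The explicit AR(2) formula can be checked against the recursion. The sketch below uses complex arithmetic from the standard library so that the same code covers real and complex roots; it assumes distinct roots and a non-zero second coefficient, and the function names and test coefficients are illustrative.

```python
import cmath


def ar2_roots(b1, b2):
    """Roots of beta(x) = 1 - b1*x - b2*x**2 (requires b2 != 0)."""
    disc = cmath.sqrt(b1 * b1 + 4.0 * b2)
    return (-b1 + disc) / (2.0 * b2), (-b1 - disc) / (2.0 * b2)


def ar2_acf_explicit(b1, b2, h):
    """rho(h) = c1 (1/r1)^h + c2 (1/r2)^h for distinct roots r1, r2."""
    r1, r2 = ar2_roots(b1, b2)
    denom = (r1 * r2 + 1.0) * (r1 - r2)
    c1 = r1 * (1.0 - r2 * r2) / denom
    c2 = r2 * (r1 * r1 - 1.0) / denom
    value = c1 * (1.0 / r1) ** h + c2 * (1.0 / r2) ** h
    return value.real                 # imaginary parts cancel up to rounding


def ar2_acf_recursive(b1, b2, max_lag):
    """rho(0..max_lag) from rho(h) = b1 rho(h-1) + b2 rho(h-2)."""
    rho = [1.0, b1 / (1.0 - b2)]
    for h in range(2, max_lag + 1):
        rho.append(b1 * rho[h - 1] + b2 * rho[h - 2])
    return rho[: max_lag + 1]


for b1, b2 in [(0.7, 0.2), (0.2, -0.5)]:      # one real-root and one complex-root case
    rec = ar2_acf_recursive(b1, b2, 5)
    exp = [ar2_acf_explicit(b1, b2, h) for h in range(6)]
    print([round(v, 4) for v in rec], [round(v, 4) for v in exp])
```

Both lists printed for each pair of coefficients should coincide, which confirms that the constants c1 and c2 match the starting values of the recursion.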

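If $\beta_1^2 + 4\beta_2 \ge 0$, $r_1$ and $r_2$ are real and

$$\rho(h) = \frac{r_1 (1 - r_2^2)}{(r_1 r_2 + 1)(r_1 - r_2)} \left(\frac{1}{r_1}\right)^h + \frac{r_2 (r_1^2 - 1)}{(r_1 r_2 + 1)(r_1 - r_2)} \left(\frac{1}{r_2}\right)^h$$

is a mixture of two exponentials.

If $\beta_1^2 + 4\beta_2 < 0$, $r_1$ and $r_2$ are complex conjugates:

$$r_1 = x + iy = R\, e^{i\theta}, \qquad r_2 = x - iy = R\, e^{-i\theta},$$

where

$$R = \sqrt{x^2 + y^2} \quad \text{and} \quad \tan\theta = \frac{y}{x}, \quad \theta = \tan^{-1}\!\left(\frac{y}{x}\right).$$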

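Some important complex identities

$$e^{i\theta} = \cos\theta + i \sin\theta, \qquad e^{-i\theta} = \cos\theta - i \sin\theta,$$

$$\cos\theta = \frac{e^{i\theta} + e^{-i\theta}}{2}, \qquad \sin\theta = \frac{e^{i\theta} - e^{-i\theta}}{2i}.$$

The above identities can be shown using the power series expansions:

$$e^u = 1 + u + \frac{u^2}{2!} + \frac{u^3}{3!} + \frac{u^4}{4!} + \cdots$$

$$\cos u = 1 - \frac{u^2}{2!} + \frac{u^4}{4!} - \frac{u^6}{6!} + \cdots$$

$$\sin u = u - \frac{u^3}{3!} + \frac{u^5}{5!} - \frac{u^7}{7!} + \cdots$$

Some other trig identities:

1. $\cos(u + v) = \cos u \cos v - \sin u \sin v$

2. $\cos(u - v) = \cos u \cos v + \sin u \sin v$

3. $\sin(u + v) = \sin u \cos v + \cos u \sin v$

4. $\sin(u - v) = \sin u \cos v - \cos u \sin v$

5. $\cos 2u = \cos^2 u - \sin^2 u$

6. $\sin 2u = 2 \sin u \cos u$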

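With $r_1 = R\, e^{i\theta}$ and $r_2 = R\, e^{-i\theta}$ we have $r_1 r_2 = R^2$ and $r_1 - r_2 = R\,(e^{i\theta} - e^{-i\theta}) = 2iR \sin\theta$, so

$$c_1 = \frac{r_1 (1 - r_2^2)}{(r_1 r_2 + 1)(r_1 - r_2)} = \frac{R\, e^{i\theta}\left(1 - R^2 e^{-2i\theta}\right)}{(R^2 + 1)\, R\,(e^{i\theta} - e^{-i\theta})} = \frac{e^{i\theta} - R^2 e^{-i\theta}}{(R^2 + 1)\, 2i \sin\theta}$$

and

$$c_2 = \frac{r_2 (r_1^2 - 1)}{(r_1 r_2 + 1)(r_1 - r_2)} = \frac{R\, e^{-i\theta}\left(R^2 e^{2i\theta} - 1\right)}{(R^2 + 1)\, R\,(e^{i\theta} - e^{-i\theta})} = \frac{R^2 e^{i\theta} - e^{-i\theta}}{(R^2 + 1)\, 2i \sin\theta}.$$

Hence

$$\begin{aligned}
\rho(h) &= c_1 \left(\frac{1}{r_1}\right)^h + c_2 \left(\frac{1}{r_2}\right)^h \\
&= \frac{e^{i\theta} - R^2 e^{-i\theta}}{(R^2 + 1)\, 2i \sin\theta} \cdot \frac{e^{-i\theta h}}{R^h} + \frac{R^2 e^{i\theta} - e^{-i\theta}}{(R^2 + 1)\, 2i \sin\theta} \cdot \frac{e^{i\theta h}}{R^h} \\
&= \frac{R^2 \left(e^{i\theta(h+1)} - e^{-i\theta(h+1)}\right) - \left(e^{i\theta(h-1)} - e^{-i\theta(h-1)}\right)}{R^h (R^2 + 1)\, 2i \sin\theta} \\
&= \frac{R^2 \sin\theta(h+1) - \sin\theta(h-1)}{R^h (R^2 + 1) \sin\theta} \\
&= \frac{R^2 \left(\sin h\theta \cos\theta + \cos h\theta \sin\theta\right) - \left(\sin h\theta \cos\theta - \cos h\theta \sin\theta\right)}{R^h (R^2 + 1) \sin\theta} \\
&= \frac{(R^2 - 1) \sin h\theta \cos\theta + (R^2 + 1) \cos h\theta \sin\theta}{R^h (R^2 + 1) \sin\theta} \\
&= \frac{\cos h\theta + \dfrac{R^2 - 1}{R^2 + 1} \sin h\theta \cot\theta}{R^h} \\
&= \frac{\cos h\theta + \sin h\theta \tan\phi}{R^h} \quad \text{if } \tan\phi = \frac{R^2 - 1}{R^2 + 1} \cot\theta.
\end{aligned}$$

Hence

$$\rho(h) = \frac{\cos h\theta + \sin h\theta \tan\phi}{R^h} = \frac{1}{\cos\phi} \cdot \frac{\cos h\theta \cos\phi + \sin h\theta \sin\phi}{R^h} = \frac{D \cos(h\theta - \phi)}{R^h},$$

a damped cosine wave, where

$$D = \frac{1}{\cos\phi} = \frac{\sqrt{\cos^2\phi + \sin^2\phi}}{\cos\phi} = \sqrt{1 + \tan^2\phi}.$$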
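The damped cosine form can be verified numerically against the difference equation. In the sketch below the root with positive imaginary part is taken as r_1 = x + iy, so that theta = atan2(y, x); the coefficients (1.0, -0.5) are an illustrative choice with complex roots, and the function names are hypothetical.

```python
import cmath
import math


def damped_cosine_params(b1, b2):
    """Return (R, theta, phi, D) for an AR(2) with complex roots (b1^2 + 4 b2 < 0)."""
    if b1 * b1 + 4.0 * b2 >= 0:
        raise ValueError("roots are real; the damped-cosine form does not apply")
    root = (-b1 + cmath.sqrt(b1 * b1 + 4.0 * b2)) / (2.0 * b2)
    x, y = root.real, abs(root.imag)          # use the root with positive imaginary part
    R = math.hypot(x, y)
    theta = math.atan2(y, x)
    tan_phi = (R * R - 1.0) / (R * R + 1.0) * (x / y)   # (R^2-1)/(R^2+1) * cot(theta)
    phi = math.atan(tan_phi)
    D = math.sqrt(1.0 + tan_phi * tan_phi)    # D = 1 / cos(phi)
    return R, theta, phi, D


def damped_cosine_acf(b1, b2, h):
    """rho(h) = D cos(h*theta - phi) / R**h."""
    R, theta, phi, D = damped_cosine_params(b1, b2)
    return D * math.cos(h * theta - phi) / R ** h


b1, b2 = 1.0, -0.5                            # illustrative coefficients, roots 1 +/- i
print(damped_cosine_params(b1, b2))
print([round(damped_cosine_acf(b1, b2, h), 4) for h in range(6)])
```

For these coefficients R is the square root of 2 and theta is pi/4, so the autocorrelation oscillates with a period of 8 lags while its amplitude shrinks by a factor of about 0.71 per lag.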

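Example

Consider the AR(2) time series

$$x_t = 0.7\, x_{t-1} + 0.2\, x_{t-2} + 4.1 + u_t,$$

where $\{u_t\}$ is a white noise time series with standard deviation $\sigma = 2.0$.

The correlation function found before using the difference equation

$$\rho(h) = 0.7\, \rho(h-1) + 0.2\, \rho(h-2):$$

| $h$ | $\rho(h)$ |
|---|---|
| 0 | 1.0000 |
| 1 | 0.8750 |
| 2 | 0.8125 |
| 3 | 0.7438 |
| 4 | 0.6831 |
| 5 | 0.6269 |
| 6 | 0.5755 |
| 7 | 0.5282 |
| 8 | 0.4849 |

Alternatively, setting up the polynomial

$$\beta(x) = 1 - \beta_1 x - \beta_2 x^2 = 1 - 0.7x - 0.2x^2 = \left(1 - \frac{x}{r_1}\right)\left(1 - \frac{x}{r_2}\right),$$

where

$$r_1 = \frac{-\beta_1 + \sqrt{\beta_1^2 + 4\beta_2}}{2\beta_2} = \frac{-0.7 + \sqrt{0.7^2 + 4(0.2)}}{2(0.2)} = 1.089454$$

and

$$r_2 = \frac{-\beta_1 - \sqrt{\beta_1^2 + 4\beta_2}}{2\beta_2} = \frac{-0.7 - \sqrt{0.7^2 + 4(0.2)}}{2(0.2)} = -4.58945.$$

Thus

$$\rho(h) = \frac{r_1 (1 - r_2^2)}{(r_1 r_2 + 1)(r_1 - r_2)} \left(\frac{1}{r_1}\right)^h + \frac{r_2 (r_1^2 - 1)}{(r_1 r_2 + 1)(r_1 - r_2)} \left(\frac{1}{r_2}\right)^h,$$

with

$$(r_1 r_2 + 1)(r_1 - r_2) = -22.7156, \qquad r_1 (1 - r_2^2) = -21.8578, \qquad r_2 (r_1^2 - 1) = -0.85782,$$

so that

$$\frac{r_1 (1 - r_2^2)}{(r_1 r_2 + 1)(r_1 - r_2)} = 0.962237 \quad \text{and} \quad \frac{r_2 (r_1^2 - 1)}{(r_1 r_2 + 1)(r_1 - r_2)} = 0.037763,$$

and

$$\rho(h) = 0.962237 \left(\frac{1}{1.089454}\right)^h + 0.037763 \left(\frac{1}{-4.58945}\right)^h.$$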

Another Example

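Consider the AR(2) time series

$$x_t = 0.2\, x_{t-1} - 0.5\, x_{t-2} + 4.1 + u_t,$$

where $\{u_t\}$ is a white noise time series with standard deviation $\sigma = 2.0$.

The correlation function is found using the difference equation

$$\rho(h) = 0.2\, \rho(h-1) - 0.5\, \rho(h-2),$$

starting from $\rho(0) = 1$ and $\rho(1) = \beta_1 / (1 - \beta_2) = 0.2 / 1.5 = 0.1333$:

| $h$ | $\rho(h)$ |
|---|---|
| 0 | 1.0000 |
| 1 | 0.1333 |
| 2 | -0.4733 |
| 3 | -0.1613 |
| 4 | 0.2044 |
| 5 | 0.1215 |
| 6 | -0.0779 |
| 7 | -0.0764 |
| 8 | 0.0237 |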

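Alternatively, setting up the polynomial

$$\beta(x) = 1 - \beta_1 x - \beta_2 x^2 = 1 - 0.2x + 0.5x^2 = \left(1 - \frac{x}{r_1}\right)\left(1 - \frac{x}{r_2}\right),$$

the roots are

$$\frac{-\beta_1 \pm \sqrt{\beta_1^2 + 4\beta_2}}{2\beta_2} = \frac{-0.2 \pm \sqrt{0.2^2 + 4(-0.5)}}{2(-0.5)} = \frac{-0.2 \pm \sqrt{-1.96}}{-1} = 0.2 \mp \sqrt{1.96}\, i = 0.2 \mp 1.4\, i.$$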

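Thus, labelling the conjugate pair so that $r_1$ has positive imaginary part,

$$r_1 = 0.2 + 1.4\, i = R\, e^{i\theta}, \qquad r_2 = 0.2 - 1.4\, i = R\, e^{-i\theta},$$

where

$$R = \sqrt{x^2 + y^2} = \sqrt{0.2^2 + 1.96} = \sqrt{2}$$

and

$$\tan\theta = \frac{y}{x} = \frac{1.4}{0.2} = 7, \quad \text{thus } \theta = \tan^{-1}(7) = 1.428899.$$

Now

$$\tan\phi = \frac{R^2 - 1}{R^2 + 1} \cot\theta = \frac{2 - 1}{2 + 1} \cdot \frac{1}{7} = 0.047619.$$

Thus $\phi = \tan^{-1}(0.047619) = 0.047583$.

Also

$$D = \sqrt{1 + \tan^2\phi} = \sqrt{1 + 0.047619^2} = 1.001133.$$

Finally

$$\rho(h) = \frac{D \cos(h\theta - \phi)}{R^h} = \frac{1.001133 \cos(1.428899\, h - 0.047583)}{2^{h/2}},$$

a damped cosine wave. As a check, $h = 1$ gives $1.001133 \cos(1.381316) / \sqrt{2} = 0.1333 = \rho(1)$, in agreement with the difference equation.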

Conditions for stationarity

Autoregressive time series of order p, AR(p)

If $|\beta_1| > 1$ and $\delta = 0$, i.e.

$$x_t = \beta_1 x_{t-1} + u_t \quad \text{with } |\beta_1| > 1,$$

the value of $x_t$ increases in magnitude and $u_t$ eventually becomes negligible.

The time series $\{x_t : t \in T\}$ then approximately satisfies the equation

$$x_t = \beta_1 x_{t-1}$$

and exhibits deterministic behaviour.

Let $\{x_t : t \in T\}$ be an autoregressive time series of order p, AR(p), defined by the equation

$$x_t = \beta_1 x_{t-1} + \beta_2 x_{t-2} + \cdots + \beta_p x_{t-p} + \delta + u_t,$$

where $\{u_t : t \in T\}$ is a white noise time series (independent, with mean 0 and variance $\sigma^2$).

Consider the polynomial

$$\beta(x) = 1 - \beta_1 x - \cdots - \beta_p x^p = \left(1 - \frac{x}{r_1}\right)\left(1 - \frac{x}{r_2}\right) \cdots \left(1 - \frac{x}{r_p}\right)$$

with roots $r_1, r_2, \ldots, r_p$. Then:

$\{x_t : t \in T\}$ is stationary if $|r_i| > 1$ for all $i$.

If $|r_i| < 1$ for at least one $i$ then $\{x_t : t \in T\}$ exhibits deterministic behaviour.

If $|r_i| \ge 1$ for all $i$ and $|r_i| = 1$ for at least one $i$ then $\{x_t : t \in T\}$ exhibits non-stationary random behaviour.

Special Cases: The AR(1) time series

Let $\{x_t : t \in T\}$ be defined by the equation

$$x_t = \beta_1 x_{t-1} + \delta + u_t.$$

Consider the polynomial

$$\beta(x) = 1 - \beta_1 x = 1 - \frac{x}{r_1}$$

with root $r_1 = 1/\beta_1$.

1. $\{x_t : t \in T\}$ is stationary if $|r_1| > 1$, i.e. $|\beta_1| < 1$.

2. If $|r_1| < 1$, i.e. $|\beta_1| > 1$, then $\{x_t : t \in T\}$ exhibits deterministic behaviour.

3. If $|r_1| = 1$, i.e. $|\beta_1| = 1$, then $\{x_t : t \in T\}$ exhibits non-stationary random behaviour.

Special Cases: The AR(2) time series

Let $\{x_t : t \in T\}$ be defined by the equation

$$x_t = \beta_1 x_{t-1} + \beta_2 x_{t-2} + \delta + u_t.$$

Consider the polynomial

$$\beta(x) = 1 - \beta_1 x - \beta_2 x^2 = \left(1 - \frac{x}{r_1}\right)\left(1 - \frac{x}{r_2}\right),$$

where $r_1$ and $r_2$ are the roots of $\beta(x)$.

1. $\{x_t : t \in T\}$ is stationary if $|r_1| > 1$ and $|r_2| > 1$. This is true if $\beta_1 + \beta_2 < 1$, $\beta_2 - \beta_1 < 1$ and $\beta_2 > -1$. These inequalities define a triangular region for $\beta_1$ and $\beta_2$.

2. If $|r_i| < 1$ for at least one $i$ then $\{x_t : t \in T\}$ exhibits deterministic behaviour.

3. If $|r_i| \ge 1$ for $i = 1, 2$ and $|r_i| = 1$ for at least one $i$ then $\{x_t : t \in T\}$ exhibits non-stationary random behaviour.
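The two ways of stating the AR(2) stationarity condition, through the roots of the polynomial and through the triangle inequalities on the coefficients, can be compared in code. This is a minimal sketch; the function names and the coefficient pairs are illustrative, and points exactly on the boundary of the triangle are avoided because of floating-point rounding.

```python
import cmath


def ar2_is_stationary_by_roots(b1, b2):
    """True if every root of beta(x) = 1 - b1*x - b2*x**2 lies outside the unit circle."""
    if b2 == 0:
        return abs(b1) < 1            # reduces to AR(1) with root 1/b1 (no root if b1 == 0)
    disc = cmath.sqrt(b1 * b1 + 4.0 * b2)
    roots = [(-b1 + disc) / (2.0 * b2), (-b1 - disc) / (2.0 * b2)]
    return all(abs(r) > 1 for r in roots)


def ar2_is_stationary_by_triangle(b1, b2):
    """The equivalent triangle conditions on the coefficients."""
    return b1 + b2 < 1 and b2 - b1 < 1 and b2 > -1


for b1, b2 in [(0.7, 0.2), (0.2, -0.5), (0.5, 0.6), (0.0, -1.5)]:
    print((b1, b2), ar2_is_stationary_by_roots(b1, b2), ar2_is_stationary_by_triangle(b1, b2))
```

The first two pairs lie inside the triangle and the last two outside it, and the root-based check should agree with the triangle check in each case.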

Figure: Patterns of the ACF and PACF of AR(2) time series (axis label: $\beta_2$). In the shaded region the roots of the AR operator are complex.