Week4-1

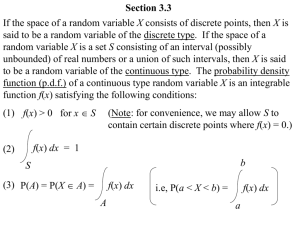

Moment generating function

The moment generating function of a random variable X is given by

$$\phi(t) = E[e^{tX}]$$

$$\phi(t) = \sum_{x} e^{tx}\,p(x) \quad \text{if } X \text{ is discrete}$$

$$\phi(t) = \int_{-\infty}^{\infty} e^{tx} f(x)\,dx \quad \text{if } X \text{ is continuous}$$
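As a small illustration of the discrete case, the sketch below evaluates the defining sum directly. The fair six-sided die and the helper name `mgf_discrete` are assumptions chosen for the example; they do not come from the slides.

```python
import math

def mgf_discrete(pmf, t):
    """Return E[e^{tX}] = sum over x of e^{tx} p(x), for a pmf given as {x: p(x)}."""
    return sum(math.exp(t * x) * p for x, p in pmf.items())

# Hypothetical example distribution: a fair six-sided die.
die = {x: 1 / 6 for x in range(1, 7)}

print(mgf_discrete(die, 0.0))  # every moment generating function equals 1 at t = 0
print(mgf_discrete(die, 0.5))
```

Evaluating at t = 0 is a quick sanity check, since E[e^{0}] = 1 for any distribution.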

$$\phi'(t) = \frac{d}{dt}E[e^{tX}] = E\left[\frac{d}{dt}e^{tX}\right] = E[Xe^{tX}]$$

$$\phi'(0) = E[X]$$

$$\phi^{(2)}(t) = \frac{d}{dt}E[Xe^{tX}] = E[X^{2}e^{tX}]$$

$$\phi^{(2)}(0) = E[X^{2}]$$

More generally,

$$\phi^{(k)}(t) = \frac{d}{dt}E[X^{k-1}e^{tX}] = E[X^{k}e^{tX}]$$

$$\phi^{(k)}(0) = E[X^{k}]$$
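The relation between derivatives at zero and moments can be checked numerically. The sketch below approximates the first two derivatives of a moment generating function at t = 0 with central finite differences and compares them with the moments computed directly. The fair die, the step size `h`, and the function names are assumptions made for illustration.

```python
import math

def mgf_die(t):
    """Moment generating function of a fair six-sided die (hypothetical example)."""
    return sum(math.exp(t * x) for x in range(1, 7)) / 6

def first_derivative_at_zero(phi, h=1e-4):
    """Central-difference approximation of the first derivative of phi at 0."""
    return (phi(h) - phi(-h)) / (2 * h)

def second_derivative_at_zero(phi, h=1e-4):
    """Central-difference approximation of the second derivative of phi at 0."""
    return (phi(h) - 2 * phi(0.0) + phi(-h)) / (h * h)

mean_exact = sum(range(1, 7)) / 6                          # E[X]
second_moment_exact = sum(x * x for x in range(1, 7)) / 6  # E[X^2]

print(first_derivative_at_zero(mgf_die), mean_exact)
print(second_derivative_at_zero(mgf_die), second_moment_exact)
```

The finite-difference values agree with the exact moments only up to a small discretization error, so the comparison is approximate rather than exact.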

Example: X has the Poisson distribution with parameter $\lambda$

$$\phi(t) = E[e^{tX}] = \sum_{x=0}^{\infty} e^{tx}\,\frac{\lambda^{x}e^{-\lambda}}{x!} = e^{-\lambda}\sum_{x=0}^{\infty}\frac{(\lambda e^{t})^{x}}{x!} = e^{-\lambda}e^{\lambda e^{t}} = e^{\lambda(e^{t}-1)}$$
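The closed form for the Poisson moment generating function can be compared against a truncated version of the defining sum. In this sketch the parameter values, the truncation at 100 terms, and the function names are assumptions chosen for the example.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** x / math.factorial(x)

def poisson_mgf_by_sum(t, lam, terms=100):
    """Truncated sum of e^{tx} p(x) over x = 0, 1, ..., terms - 1."""
    return sum(math.exp(t * x) * poisson_pmf(x, lam) for x in range(terms))

def poisson_mgf_closed_form(t, lam):
    """Closed form exp(lam * (e^t - 1))."""
    return math.exp(lam * (math.exp(t) - 1))

lam, t = 2.0, 0.5  # hypothetical values
print(poisson_mgf_by_sum(t, lam), poisson_mgf_closed_form(t, lam))
```

The truncation is harmless here because the neglected terms decay factorially; for much larger values of the parameter or of t, more terms would be needed.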

$$\phi'(t) = \frac{d}{dt}\left[e^{\lambda(e^{t}-1)}\right] = \lambda e^{t}e^{\lambda(e^{t}-1)}$$

Recall the exponential series, used above to evaluate the sum:

$$e^{a} = \sum_{x=0}^{\infty}\frac{a^{x}}{x!}$$

$$\phi'(0) = \left.\lambda e^{t}e^{\lambda(e^{t}-1)}\right|_{t=0} = \lambda$$

$$\phi''(0) = \left.\lambda e^{t}e^{\lambda(e^{t}-1)}\right|_{t=0} + \left.\lambda e^{t}\cdot\lambda e^{t}e^{\lambda(e^{t}-1)}\right|_{t=0} = \lambda + \lambda^{2}$$

$$\mathrm{Var}(X) = E[X^{2}] - (E[X])^{2} = (\lambda + \lambda^{2}) - \lambda^{2} = \lambda$$
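As an independent check on the Poisson mean and variance, the sketch below computes the first two moments directly from the probability mass function rather than from the moment generating function. The parameter value 3 and the truncation at 150 terms are assumptions made for the example.

```python
import math

lam = 3.0  # hypothetical parameter value

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** x / math.factorial(x)

xs = range(150)  # truncation point; the neglected tail is negligible for lam = 3
mean = sum(x * poisson_pmf(x, lam) for x in xs)
second_moment = sum(x * x * poisson_pmf(x, lam) for x in xs)
variance = second_moment - mean ** 2

# Compare with lam, lam + lam^2 and lam respectively.
print(mean, second_moment, variance)
```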

If X and Y are independent, then

$$\phi_{X+Y}(t) = E[e^{t(X+Y)}] = E[e^{tX}e^{tY}] = E[e^{tX}]\,E[e^{tY}] = \phi_{X}(t)\,\phi_{Y}(t)$$

The moment generating function of the sum of two independent random variables is the product of the individual moment generating functions.

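Let $Y = X_1 + X_2$, where $X_1 \sim \text{Poisson}(\lambda_1)$ and $X_2 \sim \text{Poisson}(\lambda_2)$, and $X_1$ and $X_2$ are independent. Then

$$E[e^{tY}] = E[e^{t(X_1+X_2)}] = E[e^{tX_1}]\,E[e^{tX_2}] = e^{\lambda_1(e^{t}-1)}e^{\lambda_2(e^{t}-1)} = e^{(e^{t}-1)(\lambda_1+\lambda_2)}$$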

This is the moment generating function of a Poisson random variable with parameter $\lambda_1 + \lambda_2$, so

$$Y \sim \text{Poisson}(\lambda_1 + \lambda_2)$$
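The conclusion that a sum of independent Poisson random variables is again Poisson can also be checked without moment generating functions, by convolving the two probability mass functions. The parameter values 1.5 and 2.5 and the function names below are assumptions chosen for illustration.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam ** x / math.factorial(x)

def pmf_of_sum(y, lam1, lam2):
    """P(X1 + X2 = y) by direct convolution, for independent Poisson X1 and X2."""
    return sum(poisson_pmf(k, lam1) * poisson_pmf(y - k, lam2) for k in range(y + 1))

lam1, lam2 = 1.5, 2.5  # hypothetical parameters
for y in range(6):
    # The two columns should agree up to floating-point rounding.
    print(y, pmf_of_sum(y, lam1, lam2), poisson_pmf(y, lam1 + lam2))
```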

Note: The moment generating function uniquely determines the distribution.

Markov’s inequality

If X is a random variable that takes only nonnegative values, then for any $a > 0$,

$$P(X \ge a) \le \frac{E[X]}{a}.$$

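Proof (in the case where X is continuous):

$$
\begin{aligned}
E[X] &= \int_{0}^{\infty} x f(x)\,dx \\
&= \int_{0}^{a} x f(x)\,dx + \int_{a}^{\infty} x f(x)\,dx \\
&\ge \int_{a}^{\infty} x f(x)\,dx \\
&\ge \int_{a}^{\infty} a f(x)\,dx = a\int_{a}^{\infty} f(x)\,dx = a\,P(X \ge a)
\end{aligned}
$$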
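To see how loose or tight the bound can be, the sketch below compares the exact tail probability of an exponential random variable with the Markov bound. The choice of an exponential distribution with mean 2 and the list of thresholds are assumptions made for the example.

```python
import math

mean = 2.0  # E[X] for an exponential random variable with rate 1 / mean (hypothetical)

def tail_probability(a):
    """Exact P(X >= a) for the exponential distribution with the given mean."""
    return math.exp(-a / mean)

for a in [0.5, 1.0, 2.0, 5.0, 10.0]:
    bound = mean / a  # Markov bound E[X] / a
    print(a, tail_probability(a), bound, tail_probability(a) <= bound)
```

For small thresholds the bound exceeds 1 and carries no information; for large thresholds it holds but is far from the exact exponential tail.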

Strong law of large numbers

Let $X_1, X_2, \ldots$ be a sequence of independent random variables having a common distribution, and let $E[X_i] = \mu$. Then, with probability 1,

$$\frac{X_1 + X_2 + \cdots + X_n}{n} \to \mu \quad \text{as } n \to \infty.$$
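A simulation can illustrate the convergence of the sample mean, although it cannot prove it. The sketch below tracks the running average of simulated die rolls; the die, the fixed seed, and the checkpoint sizes are assumptions chosen for the example.

```python
import random

random.seed(0)  # fixed seed so that repeated runs give the same sequence

mu = 3.5  # mean of a fair six-sided die
total = 0
checkpoints = {10, 100, 1_000, 10_000, 100_000}
sample_means = {}

for n in range(1, 100_001):
    total += random.randint(1, 6)
    if n in checkpoints:
        sample_means[n] = total / n
        print(n, sample_means[n])  # the running mean should drift toward mu
```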

Central Limit Theorem

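Let $X_1, X_2, \ldots$ be a sequence of independent random variables having a common distribution with mean $\mu$ and variance $\sigma^{2}$. Then the distribution of

$$\frac{X_1 + X_2 + \cdots + X_n - n\mu}{\sigma\sqrt{n}}$$

tends to the standard normal as $n \to \infty$. That is,

$$P\left(\frac{X_1 + X_2 + \cdots + X_n - n\mu}{\sigma\sqrt{n}} \le a\right) \to \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{a} e^{-x^{2}/2}\,dx$$

as $n \to \infty$.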
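The statement can be explored by simulation: standardize many sums of independent draws and compare the empirical distribution function with the standard normal one. In this sketch the Uniform(0, 1) summands, the values of `n` and `trials`, and the seed are assumptions made for illustration.

```python
import math
import random

random.seed(1)  # fixed seed for reproducibility

def standard_normal_cdf(a):
    """Standard normal distribution function, written in terms of math.erf."""
    return 0.5 * (1 + math.erf(a / math.sqrt(2)))

# Uniform(0, 1) summands have mean 1/2 and variance 1/12.
mu, sigma = 0.5, math.sqrt(1 / 12)
n, trials = 50, 20_000

def standardized_sum():
    """Draw n uniforms and return (sum - n * mu) / (sigma * sqrt(n))."""
    s = sum(random.random() for _ in range(n))
    return (s - n * mu) / (sigma * math.sqrt(n))

samples = [standardized_sum() for _ in range(trials)]
for a in [-1.0, 0.0, 1.0, 2.0]:
    empirical = sum(z <= a for z in samples) / trials
    print(a, empirical, standard_normal_cdf(a))
```

The empirical and normal values should be close but not identical, since both the finite `n` and the finite number of trials introduce error.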

Conditional probability and conditional expectations

Let X and Y be two discrete random variables. The conditional probability mass function of X given that Y = y is defined as

$$p_{X|Y}(x \mid y) = P\{X = x \mid Y = y\} = \frac{P\{X = x,\, Y = y\}}{P\{Y = y\}} = \frac{p(x, y)}{p_{Y}(y)}$$

for all values of y for which P(Y = y) > 0.

The conditional expectation of X given that Y = y is defined as

$$E[X \mid Y = y] = \sum_{x} x\,P\{X = x \mid Y = y\} = \sum_{x} x\,p_{X|Y}(x \mid y).$$
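The discrete definitions translate directly into a few lines of code. The sketch below uses a small joint probability mass function stored as a dictionary; the table of values and the function names are hypothetical, chosen only so that the arithmetic is easy to follow.

```python
from fractions import Fraction as F

# Hypothetical joint pmf p(x, y) on {0, 1} x {0, 1}; the four values sum to 1.
joint = {(0, 0): F(1, 10), (1, 0): F(3, 10), (0, 1): F(2, 10), (1, 1): F(4, 10)}

def marginal_y(y):
    """P(Y = y), obtained by summing the joint pmf over x."""
    return sum(p for (_, yy), p in joint.items() if yy == y)

def conditional_pmf(x, y):
    """P(X = x | Y = y) = p(x, y) / P(Y = y); undefined when P(Y = y) = 0."""
    p_y = marginal_y(y)
    if p_y == 0:
        raise ValueError("conditional pmf is undefined when P(Y = y) = 0")
    return joint.get((x, y), F(0)) / p_y

def conditional_expectation(y):
    """E[X | Y = y] = sum over x of x * P(X = x | Y = y)."""
    xs = {x for (x, _) in joint}
    return sum(x * conditional_pmf(x, y) for x in xs)

print(conditional_pmf(1, 0))       # (3/10) / (4/10) = 3/4
print(conditional_expectation(0))  # 0 * 1/4 + 1 * 3/4 = 3/4
print(conditional_expectation(1))  # 0 * 1/3 + 1 * 2/3 = 2/3
```

Exact fractions are used instead of floats so that the conditional probabilities can be compared with hand calculations without rounding.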

Let X and Y be two continuous random variables. The conditional probability density function of X given that Y = y is defined as

$$f_{X|Y}(x \mid y) = \frac{f(x, y)}{f_{Y}(y)}$$

for all values of y for which $f_{Y}(y) > 0$.

The conditional expectation of X given that Y = y is defined as

$$E[X \mid Y = y] = \int_{-\infty}^{\infty} x\,f_{X|Y}(x \mid y)\,dx.$$

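$$E[X] = E[E[X \mid Y]]$$

Here $E[X \mid Y]$ denotes the random variable whose value is $E[X \mid Y = y]$ when $Y = y$. Written out,

$$E[X] = \sum_{y} E[X \mid Y = y]\,P(Y = y) \quad \text{if } Y \text{ is discrete}$$

$$E[X] = \int_{-\infty}^{\infty} E[X \mid Y = y]\,f_{Y}(y)\,dy \quad \text{if } Y \text{ is continuous.}$$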
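Before the proof, a numerical check of the discrete case may help. The sketch below computes E[X] once directly from a joint probability mass function and once by conditioning on Y. The joint table and the function names are hypothetical, chosen for illustration.

```python
from fractions import Fraction as F

# Hypothetical joint pmf p(x, y) on {0, 1} x {0, 1}; the four values sum to 1.
joint = {(0, 0): F(1, 10), (1, 0): F(3, 10), (0, 1): F(2, 10), (1, 1): F(4, 10)}
ys = sorted({y for (_, y) in joint})

def p_y(y):
    """P(Y = y), obtained by summing the joint pmf over x."""
    return sum(p for (_, yy), p in joint.items() if yy == y)

def cond_exp(y):
    """E[X | Y = y] = sum over x of x * p(x, y) / P(Y = y)."""
    return sum(x * p for (x, yy), p in joint.items() if yy == y) / p_y(y)

direct = sum(x * p for (x, _), p in joint.items())          # E[X] from the joint pmf
via_conditioning = sum(cond_exp(y) * p_y(y) for y in ys)    # sum of E[X | Y = y] P(Y = y)

print(direct, via_conditioning)  # both equal 7/10 for this table
```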

Proof: