Fusion 2011 — Mutual Information Scheduling for Ranking

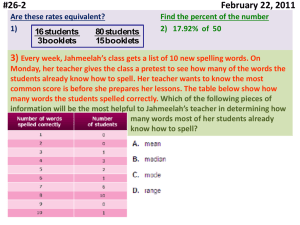

Hamza Aftab, Nevin Raj, Paul Cuff, Sanjeev Kulkarni, Adam Finkelstein

Applications of Ranking

Pair-wise Comparisons
- Query: A > B? Ask a voter whether candidate i is better than candidate j.
- Observe the outcome of a match.

Scheduling
- Design queries dynamically, based on past observations.

Example: Kitten Wars

Example: All Our Ideas (Matthew Salganik, Princeton)

Select Informative Matches
- Assume matches are expensive but computation is cheap.
- Previous work (Finkelstein):
  - Use a ranking algorithm to make better use of information.
  - Select matches by giving priority based on two criteria:
    - Lack of information: has a team been in a lot of matches already?
    - Comparability of the match: are the two teams roughly equal in strength?
- Our innovation: select matches based on Shannon's mutual information.

Related Work
- Sensor management (tracking), information-driven:
  - [Manyika, Durrant-Whyte 1994]
  - [Zhao et al. 2002]: Bayesian filtering
  - [Aoki et al. 2011]: this session
- Learning network topology [Hayek, Spuckler 2010]
- Noisy sort

Ranking Algorithms: Linear Model
- Each player has a skill level $\mu$.
- The probability that player i beats player j is a function of the difference $\mu_i - \mu_j$, so the model is transitive.
- Skill levels are estimated by maximum likelihood.
- Thurstone-Mosteller model: each player's performance has a Gaussian distribution about the mean $\mu$, so the win probability is given by the Q function.
- Bradley-Terry model: the win probability is a logistic function of the difference.

Examples
- Elo's chess ranking system (based on the Bradley-Terry model)
- Sagarin's sports rankings

Mutual Information
- Mutual information: $I(X;Y) = \sum_{x,y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)}$
- Conditional mutual information: $I(X;Y \mid Z) = \sum_{x,y,z} p(x,y,z) \log \frac{p(x,y \mid z)}{p(x \mid z)\,p(y \mid z)}$

Entropy
- Entropy: $H(X) = -\sum_{x} p(x) \log p(x)$
- Conditional entropy: $H(X \mid Y) = -\sum_{x,y} p(x,y) \log p(x \mid y)$

[Figure: example distributions labeled "High entropy" and "Low entropy"]

Mutual Information Scheduling
- Let R be the information we wish to learn (i.e., the ranking or the skill levels).
- Let $O_k$ be the outcome of the k-th match.
- At time k, the scheduler chooses the pair whose match outcome carries the most information about R, given the outcomes so far:
  $(i_{k+1}, j_{k+1}) = \arg\max_{(i,j)} I(R; O_{k+1} \mid O_1, \ldots, O_k)$, where $O_{k+1}$ is the outcome of a match between i and j.

Why use Mutual Information?
- Additive property: $I(R; O_1, \ldots, O_n) = \sum_{k=1}^{n} I(R; O_k \mid O_1, \ldots, O_{k-1})$.
- Fano's inequality relates entropy to the probability of error $P_e$: $H(R \mid O_1, \ldots, O_n) \le H_b(P_e) + P_e \log(|\mathcal{R}| - 1)$, where $H_b$ is the binary entropy function and $\mathcal{R}$ is the set of possible values of R. For small error, the remaining entropy $H(R \mid O_1, \ldots, O_n)$ must also be small.
- Continuous distributions: the MSE bounds the differential entropy; for a scalar R estimated from the outcomes, $h(R \mid O_1, \ldots, O_n) \le \frac{1}{2} \log(2 \pi e \, \mathrm{MSE})$.

Greedy Is Not Optimal
- Consider Huffman codes: greedy question selection is not optimal in general.

[Figures: Performance (MSE); Performance (gambling penalty); Identify correct ranking; Find strongest player]

Evaluating Goodness-of-Fit
- Ranking: inversions (for example, the ordering 1 3 2 4 has one inversion relative to 1 2 3 4).
- Skill level estimates:
  - Mean squared error (MSE)
  - Kullback-Leibler (KL) divergence (relative entropy): $D(p \,\|\, q) = \sum_{x \in \mathcal{X}} p(x) \log \frac{p(x)}{q(x)}$
- Others:
  - Betting risk
  - Sampling inconsistency

Numerical Techniques
- Calculate mutual information: importance sampling
- Convex optimization (tracking of the ML estimate)

Summary of Main Idea
- Get the most out of measurements for estimating a ranking.
- Schedule each match to maximize $I(S; O_{k+1} \mid O_1, \ldots, O_k)$ (greedy, to make the computation tractable).
- Flexible: S is any parameter of interest, discrete or continuous (skill levels, best candidate, etc.).
- Simple design that competes well with other heuristics.

Ranking Based on Pair-wise Comparisons
- Bradley-Terry model: $P(i \text{ beats } j) = \frac{e^{\mu_i}}{e^{\mu_i} + e^{\mu_j}} = \frac{1}{1 + e^{-(\mu_i - \mu_j)}}$
- Examples:
  - A hockey team scores a Poisson-distributed number of goals in a game.
  - Two cities compete to have the tallest person in the population.

Computing Mutual Information
- Importance sampling: the mutual information is a multidimensional integral over probability distributions of the skill levels.
- Why is it good for estimating skill levels?
  - Faster than convex optimization
  - Efficient memory use

[Figure: skill level estimates, plotted as skill level of player 1 against skill level of player 2]

Results (10-player tournament, 100 experiments)

[Figure: average number of inversions vs. number of games, over up to 500 games and, in a second panel, about 20 to 70 games, comparing ELO, TrueSkill, Random Scheduling, MinGames/ClosestSkill, Mutual Information, and Graph Based scheduling]

Visualizing the Algorithm

Outcomes

Player | A | B | C | D
--- | --- | --- | --- | ---
A | 0 | 2 | 3 | 3
B | 0 | 0 | 7 | 2
C | 0 | 2 | 0 | 5
D | 1 | 2 | 2 | 0

[Figure: graph of players A, B, C, D with one pairing marked "?"]
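Scheduling

Player | A | B | C | D
--- | --- | --- | --- | ---
A | 0 | 0.031 | 0.025 | 0.024
B | 0.031 | 0 | 0.023 | 0.033
C | 0.025 | 0.023 | 0 | 0.030
D | 0.024 | 0.033 | 0.030 | 0

The largest entry, 0.033, belongs to the pair B and D; if the entries are the mutual information scores of candidate matches, B vs. D is the match the greedy rule schedules next.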
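The linear model in the slides makes the win probability a function of the skill difference $\mu_i - \mu_j$ only. A minimal sketch of the two link functions follows. The unit logistic scale for Bradley-Terry and the per-player performance standard deviation `sigma` for Thurstone-Mosteller are illustrative assumptions, not values from the talk.

```python
import math


def bradley_terry_win_prob(mu_i, mu_j):
    """Bradley-Terry: P(i beats j) is a logistic function of mu_i - mu_j (unit scale assumed)."""
    return 1.0 / (1.0 + math.exp(-(mu_i - mu_j)))


def thurstone_mosteller_win_prob(mu_i, mu_j, sigma=1.0):
    """Thurstone-Mosteller: performances are independent N(mu, sigma^2) draws.

    The performance difference is N(mu_i - mu_j, 2 sigma^2), so
    P(i beats j) = Phi(d) = Q(-d) with d = (mu_i - mu_j) / (sqrt(2) sigma).
    `sigma` is an assumed, illustrative parameter.
    """
    d = (mu_i - mu_j) / (math.sqrt(2.0) * sigma)
    # Standard normal CDF written with the error function.
    return 0.5 * (1.0 + math.erf(d / math.sqrt(2.0)))
```

With a one-unit skill advantage, `bradley_terry_win_prob(1.0, 0.0)` is about 0.73 and `thurstone_mosteller_win_prob(1.0, 0.0)` is about 0.76. Both functions depend only on the difference of skills, which is what makes the resulting ranking transitive.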
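The slides say skill levels are fitted by maximum likelihood, and the "Numerical Techniques" slide mentions convex optimization for tracking the ML estimate. As one possible way to compute that estimate, the sketch below uses the MM (minorize-maximize) iteration for the Bradley-Terry likelihood, a different technique from whatever solver the authors used. It assumes that an outcomes matrix like the one under "Visualizing the Algorithm" counts wins of the row player over the column player; the slides do not state this. The function name `bradley_terry_mle` is hypothetical.

```python
import math


def bradley_terry_mle(wins, max_iters=10000, tol=1e-12):
    """Fit Bradley-Terry skill levels by maximum likelihood with the MM iteration.

    Assumes wins[i][j] counts how often player i beat player j, and that the
    "who beat whom" graph is strongly connected (otherwise the ML estimate
    does not exist). Returns mu_i = log(pi_i), centred so they sum to zero.
    """
    n = len(wins)
    total_wins = [sum(row) for row in wins]
    games = [[wins[i][j] + wins[j][i] for j in range(n)] for i in range(n)]
    pi = [1.0] * n
    for _ in range(max_iters):
        # MM update: pi_i <- W_i / sum_j n_ij / (pi_i + pi_j)
        new_pi = [
            total_wins[i] / sum(games[i][j] / (pi[i] + pi[j]) for j in range(n) if j != i)
            for i in range(n)
        ]
        # The likelihood depends only on ratios, so rescale to geometric mean 1.
        log_scale = sum(math.log(p) for p in new_pi) / n
        new_pi = [p / math.exp(log_scale) for p in new_pi]
        change = max(abs(a - b) for a, b in zip(new_pi, pi))
        pi = new_pi
        if change < tol:
            break
    return [math.log(p) for p in pi]


# Outcomes table from the slides, read (by assumption) as row-beats-column counts.
outcomes = [
    [0, 2, 3, 3],
    [0, 0, 7, 2],
    [0, 2, 0, 5],
    [1, 2, 2, 0],
]
mu_hat = bradley_terry_mle(outcomes)
```

At the fixed point, each player's expected number of wins under the fitted model equals the observed number, which is the maximum-likelihood condition for Bradley-Terry.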
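The entropy and mutual information definitions in the slides can be checked numerically on small discrete distributions. This sketch works in bits (log base 2), which is an assumed choice, since the slides do not fix a base. It computes conditional entropy through the identity $H(X \mid Y) = H(X) - I(X;Y)$.

```python
import math


def entropy(p):
    """H(X) = -sum p(x) log2 p(x), in bits; zero-probability terms contribute 0."""
    return -sum(px * math.log2(px) for px in p if px > 0)


def mutual_information(joint):
    """I(X;Y) = sum p(x,y) log2[p(x,y) / (p(x) p(y))] for a joint table joint[x][y]."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return sum(
        pxy * math.log2(pxy / (px[x] * py[y]))
        for x, row in enumerate(joint)
        for y, pxy in enumerate(row)
        if pxy > 0
    )


def conditional_entropy(joint):
    """H(X|Y) = H(X) - I(X;Y), with X indexing rows of the joint table."""
    px = [sum(row) for row in joint]
    return entropy(px) - mutual_information(joint)
```

For two perfectly correlated fair bits, `[[0.5, 0.0], [0.0, 0.5]]`, the mutual information is 1 bit and the conditional entropy is 0. For independent fair bits, the mutual information is 0.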
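The mutual information scheduling rule picks the pair maximizing $I(R; O_{k+1} \mid O_1, \ldots, O_k)$. For a binary match outcome, this equals $H_b(\bar p) - \mathbb{E}[H_b(p)]$, where $p$ is the win probability under each hypothesis and the expectation is over the current posterior. The sketch below is a simplified instance under stated assumptions: R is taken to be the skill vector itself, restricted to a small finite set of hypothetical candidate vectors, and outcomes follow the Bradley-Terry model. All function names are illustrative and do not come from the authors' code.

```python
import itertools
import math


def binary_entropy(p):
    """H_b(p) in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def win_prob(mu_i, mu_j):
    """Bradley-Terry (logistic) probability that i beats j."""
    return 1.0 / (1.0 + math.exp(-(mu_i - mu_j)))


def posterior(hypotheses, prior, outcomes):
    """Bayes update of weights over candidate skill vectors.

    outcomes: list of (winner, loser) index pairs observed so far.
    """
    w = list(prior)
    for winner, loser in outcomes:
        w = [wk * win_prob(mu[winner], mu[loser]) for wk, mu in zip(w, hypotheses)]
    z = sum(w)
    return [wk / z for wk in w]


def match_information(hypotheses, weights, i, j):
    """I(R; O | past) for a match i vs j: H_b(E[p]) - E[H_b(p)] under the posterior."""
    ps = [win_prob(mu[i], mu[j]) for mu in hypotheses]
    p_bar = sum(w * p for w, p in zip(weights, ps))
    return binary_entropy(p_bar) - sum(w * binary_entropy(p) for w, p in zip(weights, ps))


def schedule_next(hypotheses, prior, outcomes, n_players):
    """Greedy rule: return the pair with the largest conditional mutual information."""
    weights = posterior(hypotheses, prior, outcomes)
    return max(
        itertools.combinations(range(n_players), 2),
        key=lambda pair: match_information(hypotheses, weights, *pair),
    )
```

For example, with hypotheses `[(0.0, 3.0, 0.0), (0.0, 3.0, 3.0)]`, players 0 and 1 have the same skills under both, so their match carries zero information, and the scheduler picks a match involving player 2. A continuous prior over skills replaces the finite sum with an integral, which is where the importance sampling mentioned in the slides comes in.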
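The goodness-of-fit slide measures ranking error by inversions: the number of player pairs that an estimated ranking orders differently from the true one. A minimal quadratic-time sketch, assuming both rankings list player ids from best to worst; the function name is hypothetical.

```python
def count_inversions(estimated, true_order):
    """Count player pairs ordered differently in the two rankings.

    Both arguments list player ids from best to worst (assumed convention).
    """
    position = {player: k for k, player in enumerate(true_order)}
    seq = [position[p] for p in estimated]
    return sum(
        1
        for a in range(len(seq))
        for b in range(a + 1, len(seq))
        if seq[a] > seq[b]
    )
```

`count_inversions([1, 3, 2, 4], [1, 2, 3, 4])` returns 1, matching the example on the slide. A fully reversed ranking of four players has 6 inversions, the maximum $\binom{4}{2}$.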
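The KL divergence formula on the goodness-of-fit slide can be evaluated directly for discrete distributions. The slides do not say which distributions play the roles of p and q when evaluating skill estimates, so the sketch below is the generic definition only, in bits by assumption.

```python
import math


def kl_divergence(p, q):
    """D(p || q) = sum_x p(x) log2(p(x) / q(x)), in bits.

    Terms with p(x) = 0 contribute 0; if q(x) = 0 where p(x) > 0,
    the divergence is infinite.
    """
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue
        if qx == 0:
            return math.inf
        total += px * math.log2(px / qx)
    return total
```

The divergence is zero only when the two distributions match, and it is not symmetric. `kl_divergence([1.0, 0.0], [0.5, 0.5])` is 1 bit, while the reverse direction is infinite.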
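With continuous skill levels, the mutual information of a candidate match becomes a multidimensional integral, and the slides compute it with importance sampling. The sketch below is one way to do this under stated assumptions: a standard normal prior on each skill level, Bradley-Terry outcomes, and the prior used as the proposal distribution. With that proposal, the self-normalized importance weights are proportional to the likelihood of past outcomes. The function and parameter names (`estimate_match_information`, `n_samples`, `seed`) are hypothetical.

```python
import math
import random


def binary_entropy(p):
    """H_b(p) in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def estimate_match_information(outcomes, n_players, i, j, n_samples=20000, seed=0):
    """Importance-sampling estimate of I(mu; O | past) for a match i vs j.

    Assumptions: mu ~ N(0, I) prior, Bradley-Terry likelihood, prior as proposal.
    outcomes: list of (winner, loser) index pairs observed so far.
    """
    rng = random.Random(seed)
    samples = [[rng.gauss(0.0, 1.0) for _ in range(n_players)] for _ in range(n_samples)]
    # Log importance weights = log-likelihood of past outcomes (posterior / proposal).
    log_w = [
        sum(-math.log1p(math.exp(-(mu[winner] - mu[loser]))) for winner, loser in outcomes)
        for mu in samples
    ]
    m = max(log_w)  # subtract the max for numerical stability
    weights = [math.exp(lw - m) for lw in log_w]
    total = sum(weights)
    weights = [wt / total for wt in weights]
    # I = H_b(E[p]) - E[H_b(p)] under the weighted (posterior) samples.
    ps = [1.0 / (1.0 + math.exp(-(mu[i] - mu[j]))) for mu in samples]
    p_bar = sum(wt * p for wt, p in zip(weights, ps))
    return binary_entropy(p_bar) - sum(wt * binary_entropy(p) for wt, p in zip(weights, ps))
```

Because the weights are normalized and $H_b$ is concave, the estimate is never negative. The fixed seed makes repeated calls reproducible. A better proposal (for example, one centred on the current skill estimates) would reduce the variance when many outcomes have been observed.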