Topic 4


Embedded Computer Architecture

VLIW architectures:

Generating VLIW code

TU/e 5kk73

Henk Corporaal

VLIW lectures overview

• Enhance performance: architecture methods
• Instruction Level Parallelism
• VLIW
• Examples
– C6
– TM
– TTA
• Clustering and Reconfigurable components
• Code generation
– compiler basics
– mapping and scheduling
– TTA code generation
– Design space exploration
• Hands-on

4/13/2015

Embedded Computer Architecture

H. Corporaal, and B. Mesman

2

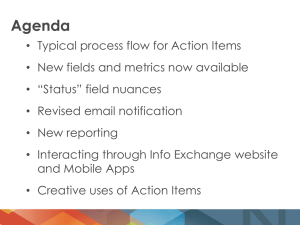

Compiler basics

• Overview

– Compiler trajectory / structure / passes
– Control Flow Graph (CFG)
– Mapping and Scheduling
– Basic block list scheduling
– Extended scheduling scope
– Loop scheduling
– Loop transformations

• separate lecture


Compiler basics: trajectory

Source program → Preprocessor → Compiler → Assembler → Loader/Linker → Object program

• The Compiler also emits error messages
• The Loader/Linker also takes library code as input


Compiler basics: structure / passes

Source code
→ Lexical analyzer: token generation
→ Parsing: check syntax, check semantics, parse tree generation
→ Intermediate code
→ Code optimization: data flow analysis, local optimizations, global optimizations
→ Code generation: code selection, peephole optimizations
→ Register allocation: making interference graph, graph coloring, spill code insertion, caller / callee save and restore code
→ Sequential code
→ Scheduling and allocation: exploiting ILP
→ Object code


Compiler basics: structure

Simple example: from HLL to (Sequential) Assembly code

position := initial + rate * 60

Lexical analyzer:
    id := id + id * 60

Syntax analyzer (parse tree):
    := ( id, + ( id, * ( id, 60 ) ) )

Intermediate code generator:
    temp1 := inttoreal(60)
    temp2 := id3 * temp1
    temp3 := id2 + temp2
    id1 := temp3

Code optimizer:
    temp1 := id3 * 60.0
    id1 := id2 + temp1

Code generator:
    movf id3, r2
    mulf #60, r2, r2
    movf id2, r1
    addf r2, r1
    movf r1, id1

Compiler basics:

Control flow graph (CFG)

CFG: shows the flow between basic blocks

C input code:
    if (a > b) { r = a % b; }
    else       { r = b % a; }

CFG (basic blocks 1 to 4):
    1:  sub t1, a, b
        bgz t1, 2, 3
    2:  rem r, a, b
        goto 4
    3:  rem r, b, a
        goto 4
    4:  …

A Program is a collection of
    Functions; each function is a collection of
        Basic Blocks; each BB contains a set of
            Instructions; each instruction consists of several
                Transports, ...


Compiler basics: Basic optimizations

• Machine independent optimizations

• Machine dependent optimizations


Compiler basics: Basic optimizations

• Machine independent optimizations

– Common subexpression elimination
– Constant folding
– Copy propagation
– Dead-code elimination
– Induction variable elimination
– Strength reduction
– Algebraic identities
• Commutative expressions
• Associativity: Tree height reduction
– Note: not always allowed (due to limited precision)
• For details check any good compiler book!
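The precision note on tree height reduction can be made concrete. A minimal Python sketch (Python is only an illustration language here; the values a, b, c are chosen for the demonstration) showing that re-associating a floating-point sum can change the result, while the same rewrite is exact for integers:

```python
# Re-associating a floating-point sum, as tree height reduction would do.
a, b, c = 1e16, -1e16, 1.0

left_to_right = (a + b) + c   # original evaluation order
reassociated = a + (b + c)    # order after re-association; b + c rounds back to b

def same_result(x: float, y: float) -> bool:
    """True when both evaluation orders give identical results."""
    return x == y

print(left_to_right, reassociated, same_result(left_to_right, reassociated))

# Integer addition is exact, so the same rewrite is always safe there.
print((1 + 2) + 3 == 1 + (2 + 3))
```

A compiler may therefore apply this identity freely to integer expressions, but for floating point only when the language rules or compiler options allow it.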


Compiler basics: Basic optimizations

• Machine dependent optimization example

– What's the optimal implementation of a*34 ?
– Use multiplier: mul Tb, Ta, 34
• Pro: No thinking required
• Con: May take many cycles
– Alternative (34 = 2 + 32):
    SHL Tb, Ta, 1
    SHL Tc, Ta, 5
    ADD Tb, Tb, Tc
• Pros: May take fewer cycles
• Cons:
    • Uses more registers
    • Additional instructions (I-cache load / code size)
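The shift-and-add alternative works because 34 = 2^1 + 2^5. A small Python sketch of that decomposition follows; the function names are illustrative, and a real compiler would also weigh the number of set bits against the multiplier latency:

```python
def shift_add_terms(constant: int) -> list[int]:
    """Return the bit positions set in `constant` (one shift per set bit)."""
    return [bit for bit in range(constant.bit_length()) if (constant >> bit) & 1]

def multiply_by_shifts(a: int, constant: int) -> int:
    """Multiply `a` by a non-negative constant using only shifts and adds."""
    result = 0
    for bit in shift_add_terms(constant):
        result += a << bit
    return result

print(shift_add_terms(34))         # shift amounts used for a*34
print(multiply_by_shifts(7, 34))   # same value as 7 * 34
```

For a*34 this gives the shift amounts 1 and 5, matching the SHL/SHL/ADD sequence on the slide.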

Compiler basics: Register allocation

• Register Organization

– Conventions needed for parameter passing

– and register usage across function calls

r21 – r31   Callee saved registers
r1 – r20    Caller saved registers
    r11 – r20   Other temporaries
    r1 – r10    Function Argument and Result transfer
r0          Hard-wired 0

Register allocation using graph coloring

Given a set of registers, what is the most efficient mapping of registers to program variables in terms of execution time of the program?

Some definitions:
• A variable is defined at a point in a program when a value is assigned to it.
• A variable is used at a point in a program when its value is referenced in an expression.
• The live range of a variable is the execution range between definitions and uses of a variable.


Register allocation using graph coloring

Program (define / use), with the live ranges shown as the variables live after each statement:

    a :=        a
    c :=        a c
    b :=        a b c
      := b      a c
    d :=        a c d
      := a      c d
      := c      d
      := d


Register allocation using graph coloring

Interference Graph: nodes a, b, c, d; edges a–b, a–c, a–d, b–c, c–d

Coloring:
    a = red
    b = green
    c = blue
    d = green

Graph needs 3 colors => program needs 3 registers

Question: map coloring requires (at most) 4 colors; what's the maximum number of colors (= registers) needed for register interference graph coloring?
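The step from live ranges to a colored interference graph can be sketched in a few lines of Python. This is a minimal illustration for the straight-line example program only (one definition per variable, no branches); the tuple encoding of statements and the greedy coloring order are assumptions of this sketch, and greedy coloring does not guarantee a minimal number of colors in general:

```python
# Each statement is (defined variable or None, list of used variables).
program = [("a", []), ("c", []), ("b", []), (None, ["b"]),
           ("d", []), (None, ["a"]), (None, ["c"]), (None, ["d"])]

def live_ranges(stmts):
    """Map each variable to (index of its definition, index of its last use)."""
    ranges = {}
    for i, (defined, used) in enumerate(stmts):
        if defined is not None:
            ranges[defined] = (i, i)
        for var in used:
            ranges[var] = (ranges[var][0], i)
    return ranges

def interference_graph(ranges):
    """Two variables interfere when their live ranges overlap."""
    graph = {v: set() for v in ranges}
    for u, (u_start, u_end) in ranges.items():
        for v, (v_start, v_end) in ranges.items():
            if u != v and u_start < v_end and v_start < u_end:
                graph[u].add(v)
    return graph

def greedy_coloring(graph):
    """Give every node the lowest color not used by an already colored neighbor."""
    colors = {}
    for node in sorted(graph):
        taken = {colors[n] for n in graph[node] if n in colors}
        colors[node] = next(c for c in range(len(graph)) if c not in taken)
    return colors

graph = interference_graph(live_ranges(program))
colors = greedy_coloring(graph)
print(graph)
print(colors)
```

For this program b and d never overlap, so they share a color and three colors (registers) suffice, as on the slide.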


Register allocation using graph coloring

Spill/Reload code

Spill/Reload code is needed when there are not enough colors (registers) to color the interference graph.

Example: only two registers available!

Program:
    a :=
    c :=
    store c
    b :=
      := b
    d :=
      := a
    load c
      := c
      := d

Register allocation for a monolithic RF

Scheme of the optimistic register allocator

Phases, in order: Renumber → Build → Spill costs → Simplify → Select → Spill code (then back to Renumber)

The Select phase selects a color (= machine register) for a variable that minimizes the heuristic h:

    h = fdep(col, var) + caller_callee(col, var)

where:
    fdep(col, var)          : a measure for the introduction of false dependencies
    caller_callee(col, var) : cost for mapping var on a caller or callee saved register


Some explanation of reg allocation phases

• [Renumber:] The first phase finds all live ranges in a procedure and numbers (renames) them uniquely.
• [Build:] This phase constructs the interference graph.
• [Spill Costs:] In preparation for coloring, a spill cost estimate is computed for every live range. The cost is simply the sum of the execution frequencies of the transports that define or use the variable of the live range.
• [Simplify:] This phase removes nodes with degree < k in an arbitrary order from the graph and pushes them on a stack. Whenever it discovers that all remaining nodes have degree >= k, it chooses a spill candidate. This node is also removed from the graph and optimistically pushed on the stack, hoping a color will be available in spite of its high degree.
• [Select:] Colors are selected for nodes. In turn, each node is popped from the stack, reinserted in the interference graph and given a color distinct from its neighbors. Whenever it discovers that it has no color available for some node, it leaves the node uncolored and continues with the next node.
• [Spill Code:] In the final phase spill code is inserted for the live ranges of all uncolored nodes.
• Some symbolic registers must be mapped on a specific machine register (like stack pointer). These registers get their color in the simplify stage instead of being pushed on the stack.
• The other machine registers are divided in caller-saved and callee-saved registers. The allocator computes the caller-saved and callee-saved cost.
• The caller-saved cost for the symbolic registers is computed when they have live-ranges across a procedure call. The cost per symbolic register is twice the execution frequency of its transport.
• The callee-saved cost of a symbolic register is twice the execution frequency of the procedure to which the transport of the symbolic register belongs. With these two costs in mind the allocator chooses a machine register.
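The Simplify and Select phases can be sketched as follows. This is a simplified Python illustration, not the allocator's actual code: pre-colored registers and the caller/callee cost heuristic are left out, the graph is the interference graph of the earlier a, b, c, d example, and the spill costs are made-up values:

```python
def optimistic_color(graph, k, spill_cost):
    """Simplify/Select phases; returns (colors, uncolored nodes to spill)."""
    remaining = {n: set(adj) for n, adj in graph.items()}
    stack = []
    # Simplify: remove nodes one by one and push them on the stack.
    while remaining:
        low = [n for n in remaining if len(remaining[n]) < k]
        # No low-degree node left: push the cheapest spill candidate optimistically.
        node = min(low) if low else min(remaining, key=lambda n: (spill_cost[n], n))
        stack.append(node)
        for neighbor in remaining.pop(node):
            remaining[neighbor].discard(node)
    # Select: pop nodes and give each a color distinct from its colored neighbors.
    colors, spilled = {}, []
    while stack:
        node = stack.pop()
        taken = {colors[n] for n in graph[node] if n in colors}
        free = [c for c in range(k) if c not in taken]
        if free:
            colors[node] = free[0]
        else:
            spilled.append(node)   # left uncolored; the Spill Code phase handles it
    return colors, spilled

# Interference graph of the example program (edges assumed from its live ranges).
graph = {"a": {"b", "c", "d"}, "b": {"a", "c"},
         "c": {"a", "b", "d"}, "d": {"a", "c"}}
spill_cost = {"a": 4, "b": 2, "c": 1, "d": 2}   # hypothetical cost estimates

print(optimistic_color(graph, 3, spill_cost))   # three registers: no spill
print(optimistic_color(graph, 2, spill_cost))   # two registers: one node stays uncolored
```

With k = 2 and these assumed costs, the sketch leaves c uncolored, which corresponds to the store c / load c pair in the spill example.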


Compiler basics: Code selection

• CISC era (before 1985)

– Code size important

– Determine shortest sequence of code

• Many options may exist

– Pattern matching

Example M68020:

D1 := D1 + M[ M[10+A1] + 16*D2 + 20 ]

ADD ([10,A1], D2*16, 20) D1

• RISC era

– Performance important

– Only few possible code sequences

– New implementations of old architectures optimize RISC part of instruction set only; e.g. i486 / Pentium / M68020


Overview

• Enhance performance: architecture methods
• Instruction Level Parallelism
• VLIW
• Examples
– C6
– TM
– TTA
• Clustering
• Code generation
– Compiler basics
– Mapping and Scheduling of Operations
    • What is scheduling
    • Basic Block Scheduling
    • Extended Basic Block Scheduling
    • Loop Scheduling
• Design Space Exploration: TTA framework


Mapping / Scheduling =

placing operations in space and time

d = a * b;
e = a + d;
f = 2 * b + d;
r = f - e;
x = z + y;

[Figure: Data Dependence Graph (DDG) of the code above. Inputs a, b, 2, z, y; operations *, *, +, +, -, +; results d, e, f, r, x.]


How to map these operations?

Architecture constraints:
• One Function Unit
• All operations single cycle latency

[Figure: the DDG (inputs a, b, 2, z, y; results d, e, f, r, x) mapped onto the single function unit, one operation per cycle in cycles 1 to 6.]

How to map these operations?

Architecture constraints:
• One Add-sub and one Mul unit
• All operations single cycle latency

[Figure: the same DDG mapped onto the two units; schedule table with columns Mul and Add-sub and one row per cycle.]

There are many mapping solutions

[Figure: Pareto graph (solution space); each x marks one mapping solution; horizontal axis: Cost.]

Point x is Pareto $\Leftrightarrow$ there is no point y for which $\forall i: y_i < x_i$
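The Pareto definition translates directly into a filter over the solution space. A minimal Python sketch, using made-up (cost, execution time) pairs where smaller is better in both dimensions; note that many texts use a slightly stricter definition (no worse in all dimensions and better in at least one):

```python
def dominates(y, x):
    """True when y is strictly better (smaller) than x in every dimension."""
    return all(yi < xi for yi, xi in zip(y, x))

def pareto_points(points):
    """Keep the points that no other point dominates."""
    return [x for x in points if not any(dominates(y, x) for y in points)]

# Hypothetical (cost, execution time) pairs for six mapping solutions.
solutions = [(1, 9), (2, 5), (3, 6), (4, 2), (5, 3), (6, 1)]
print(pareto_points(solutions))
```

Here (3, 6) is dominated by (2, 5) and (5, 3) by (4, 2); the remaining four points form the Pareto curve.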


Scheduling: Overview

Transforming a sequential program into a parallel program:

read sequential program
read machine description file
for each procedure do
    perform function inlining
for each procedure do
    transform an irreducible CFG into a reducible CFG
    perform control flow analysis
    perform loop unrolling
    perform data flow analysis
    perform memory reference disambiguation
    perform register allocation
    for each scheduling scope do
        perform instruction scheduling
write out the parallel program


Basic Block Scheduling

• Basic Block = piece of code which can only be entered from the top (first instruction) and left at the bottom (final instruction)
• Scheduling a basic block = assign resources and a cycle to every operation
• List Scheduling = heuristic scheduling approach, scheduling the operations one-by-one
– Time_complexity = O(N), where N is #operations
• Optimal scheduling has Time_complexity = O(exp(N))
• Question: what is a good scheduling heuristic?


Basic Block Scheduling

• Make a Data Dependence Graph (DDG)
• Determine minimal length of the DDG (for the given architecture)
– minimal number of cycles to schedule the graph (assuming sufficient resources)
• Determine:
– ASAP (As Soon As Possible) cycle = earliest cycle instruction can be scheduled
– ALAP (As Late As Possible) cycle = latest cycle instruction can be scheduled
– Slack of each operation = ALAP – ASAP
– Priority of operations = f (Slack, #descendants, #register impact, ...)
• Place each operation in first cycle with sufficient resources
• Notes:
– Basic Block = a (maximal) piece of consecutive instructions which can only be entered at the first instruction and left at the end
– Scheduling order sequential
– Scheduling Priority determined by used heuristic; e.g. slack + other contributions
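ASAP, ALAP and slack can be computed with two passes over the DDG. A minimal Python sketch for the earlier example code (d = a*b; e = a+d; f = 2*b+d; r = f-e; x = z+y), assuming single-cycle operations; the operation labels such as mul_d are names invented for this sketch:

```python
# DDG: operation -> operations that consume its result.
ddg = {
    "mul_d": ["add_e", "add_f"],   # d = a * b
    "mul_2b": ["add_f"],           # 2 * b
    "add_e": ["sub_r"],            # e = a + d
    "add_f": ["sub_r"],            # f = 2*b + d
    "sub_r": [],                   # r = f - e
    "add_x": [],                   # x = z + y
}

def asap_cycles(graph):
    """Earliest cycle per operation: one more than its latest predecessor."""
    asap = {}
    def visit(op):
        if op not in asap:
            preds = [p for p, succs in graph.items() if op in succs]
            asap[op] = 1 + max((visit(p) for p in preds), default=0)
        return asap[op]
    for op in graph:
        visit(op)
    return asap

def alap_cycles(graph, length):
    """Latest cycle per operation that still finishes within `length` cycles."""
    alap = {}
    def visit(op):
        if op not in alap:
            alap[op] = min((visit(s) for s in graph[op]), default=length + 1) - 1
        return alap[op]
    for op in graph:
        visit(op)
    return alap

asap = asap_cycles(ddg)
length = max(asap.values())            # minimal length of the DDG
alap = alap_cycles(ddg, length)
slack = {op: alap[op] - asap[op] for op in ddg}
print(asap, alap, slack)
```

Only x = z + y has slack in this example; all other operations lie on the critical path and would get the highest scheduling priority.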


Basic Block Scheduling:

determine ASAP and ALAP cycles

[Figure: example DDG with inputs A, B, C, X, y, z and operations LD, LD, NEG, MUL, ADD, ADD, ADD, SUB. Each operation is annotated <ASAP cycle, ALAP cycle>; the difference between the two is its slack.]

We assume all operations are single cycle!

Cycle based list scheduling

proc Schedule(DDG = (V,E))
beginproc
    ready = { v | ¬∃ (u,v) ∈ E }
    ready' = ready
    sched = ∅
    current_cycle = 0
    while sched ≠ V do
        for each v ∈ ready' (select in priority order) do
            if ¬ResourceConfl(v, current_cycle, sched) then
                cycle(v) = current_cycle
                sched = sched ∪ {v}
            endif
        endfor
        current_cycle = current_cycle + 1
        ready = { v | v ∉ sched ∧ ∀ (u,v) ∈ E, u ∈ sched }
        ready' = { v | v ∈ ready ∧ ∀ (u,v) ∈ E, cycle(u) + delay(u,v) ≤ current_cycle }
    endwhile
endproc
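The procedure above can be tried out on the small example from the mapping slides. The following Python sketch is a simplified rendering, not the original implementation: it assumes one instance of each function unit, a uniform delay of one cycle, and a priority order (`rank`) that is simply given; the operation labels are invented for this sketch:

```python
def list_schedule(preds, unit_of, rank, delay=1):
    """Cycle-based list scheduling; returns {operation: cycle}, cycles start at 0."""
    cycle_of = {}
    current_cycle = 0
    while len(cycle_of) < len(preds):
        # ready': every predecessor is scheduled and its result is available.
        ready = [v for v in preds if v not in cycle_of and all(
            u in cycle_of and cycle_of[u] + delay <= current_cycle
            for u in preds[v])]
        busy = set()                      # units claimed in the current cycle
        for v in sorted(ready, key=rank.get):
            if unit_of[v] not in busy:    # no resource conflict
                cycle_of[v] = current_cycle
                busy.add(unit_of[v])
        current_cycle += 1
    return cycle_of

# Operations of: d = a*b; e = a+d; f = 2*b+d; r = f-e; x = z+y
preds = {"mul_d": [], "mul_2b": [], "add_e": ["mul_d"],
         "add_f": ["mul_d", "mul_2b"], "sub_r": ["add_e", "add_f"], "add_x": []}
unit_of = {op: "Mul" if op.startswith("mul") else "Add-sub" for op in preds}
# Assumed priority order (lower is more urgent), e.g. derived from slack.
rank = {"mul_d": 0, "mul_2b": 1, "add_e": 2, "add_f": 3, "sub_r": 4, "add_x": 5}

schedule = list_schedule(preds, unit_of, rank)
print(schedule)
print("cycles used:", max(schedule.values()) + 1)
```

With one Mul and one Add-sub unit the sketch needs four cycles, which is the lower bound here because four operations compete for the Add-sub unit. A different priority order can give a longer schedule, which is why the choice of heuristic matters.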


Extended Scheduling Scope:

look at the CFG

Code:
    A;
    If cond Then B Else C;
    D;
    If cond Then E Else F;
    G;

CFG (Control Flow Graph):
    A → B, A → C
    B → D, C → D
    D → E, D → F
    E → G, F → G

Q: Why enlarge the scheduling scope?


Extended basic block scheduling:

Code Motion

Q: Why move code?

Block A:
    a) add r3, r4, 4
    b) beq . . .
Block B:
    c) add r1, r1, r2
Block C:
    d) sub r3, r3, r2
Block D:
    e) mul r1, r1, r3

• Downward code motions?
– a → B, a → C, a → D, c → D, d → D
• Upward code motions?
– c → A, d → A, e → B, e → C, e → A


Possible Scheduling Scopes

[Figure: four CFG examples, one per scheduling scope]
• Trace
• Superblock
• Decision tree
• Hyperblock/region

Create and Enlarge Scheduling Scope

[Figure: the CFG with blocks A to G, a Trace selected from it, and the Superblock obtained by tail duplication (duplicated blocks D', E', G').]

Create and Enlarge Scheduling Scope

[Figure: the CFG with blocks A to G, the Decision Tree obtained by tail duplication (duplicated blocks D', E', F', G', G''), and the Hyperblock/region.]

Comparing scheduling scopes

                           Trace   Sup.    Hyp.    Dec.    Region
                                   block   block   Tree
Multiple exec. paths       No      No      Yes     Yes     Yes
Side-entries allowed       Yes     No      No      No      No
Join points allowed        Yes     No      Yes     No      Yes
Code motion down joins     Yes     No      No      No      No
Must be if-convertible     No      No      Yes     No      No
Tail dup. before sched.    No      Yes     No      Yes     No

Code movement (upwards) within

regions: what to check?

[Figure: an operation I (add) moves upwards from the source block, through an intermediate block, to the destination block. Legend: copy needed; check for off-liveness; code movement.]

Extended basic block scheduling:

Code Motion

• A dominates B ⇔ A is always executed before B
– Consequently:
• A does not dominate B ⇒ code motion from B to A requires code duplication
• B post-dominates A ⇔ B is always executed after A
– Consequently:
• B does not post-dominate A ⇒ code motion from B to A is speculative

[Figure: CFG with blocks A to F]
Q1: does C dominate E?
Q2: does C dominate D?
Q3: does F post-dominate D?
Q4: does D post-dominate B?
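Dominance questions like these can be answered mechanically. A minimal Python sketch of the standard iterative dominator computation follows; since the CFG of the figure is not recoverable here, it uses the CFG of the earlier code example (A; if cond then B else C; D; if cond then E else F; G) instead, and it assumes every block is reachable from the entry:

```python
def dominators(succ, entry):
    """Iterative data-flow computation of the dominator set of every block."""
    nodes = set(succ)
    preds = {n: {p for p in succ if n in succ[p]} for n in nodes}
    dom = {n: set(nodes) for n in nodes}
    dom[entry] = {entry}
    changed = True
    while changed:
        changed = False
        for n in nodes - {entry}:
            # A block is dominated by itself and by whatever dominates all its predecessors.
            new = {n} | set.intersection(*(dom[p] for p in preds[n]))
            if new != dom[n]:
                dom[n], changed = new, True
    return dom

def post_dominators(succ, exit_block):
    """Post-dominators are the dominators of the reversed CFG."""
    reversed_cfg = {n: [p for p in succ if n in succ[p]] for n in succ}
    return dominators(reversed_cfg, exit_block)

# CFG of: A; if cond then B else C; D; if cond then E else F; G
cfg = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["E", "F"],
       "E": ["G"], "F": ["G"], "G": []}

dom = dominators(cfg, "A")
pdom = post_dominators(cfg, "G")
print("C dominates D:", "C" in dom["D"])
print("D post-dominates A:", "D" in pdom["A"])
```

In this CFG neither B nor C dominates D, so moving an operation from D up into B needs a copy in C, while D post-dominates A, so moving an operation from D up to A is not speculative.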

Scheduling: Loops

Loop Optimizations:

[Figure: a loop in the CFG (blocks A, B, C, D) and the same CFG after loop peeling and after loop unrolling, with copies C' and C'' of the loop body.]
• Loop peeling
• Loop unrolling

Scheduling: Loops

Problems with unrolling:

• Exploits only parallelism within sets of n iterations

• Iteration start-up latency

• Code expansion

[Figure: resource utilization over time for three cases: basic block scheduling, basic block scheduling and unrolling, and software pipelining.]

Software pipelining

• Software pipelining a loop is:

– Scheduling the loop such that iterations start before

preceding iterations have finished

Or:

– Moving operations across the backedge

Example: y = a.x

[Figure: schedules of the operations LD, ML and ST of successive iterations.]
• No overlap between iterations: 3 cycles/iteration
• Unrolling (3 times): 5/3 cycles/iteration
• Software pipelining: 1 cycle/iteration

Software pipelining (cont’d)

Basic loop scheduling techniques:

• Modulo scheduling (Rau, Lam)
– list scheduling with modulo resource constraints
– This algorithm most used in commercial compilers
• Kernel recognition techniques
– unroll the loop
– schedule the iterations
– identify a repeating pattern
– Examples:
    • Perfect pipelining (Aiken and Nicolau)
    • URPR (Su, Ding and Xia)
    • Petri net pipelining (Allan)
• Enhanced pipeline scheduling (Ebcioğlu)
– fill first cycle of iteration
– copy this instruction over the backedge


Software pipelining: Modulo scheduling

Example: Modulo scheduling a loop

(a) Example loop
    for (i = 0; i < n; i++)
        A[i+6] = 3 * A[i] - 1;

(b) Code (without loop control)
    ld  r1,(r2)
    mul r3,r1,3
    sub r4,r3,1
    st  r4,(r5)

(c) Software pipeline
[Figure: four copies of the code of (b), one per iteration, overlapped in time. The cycles are labelled Prologue, Kernel and Epilogue.]

• Prologue fills the SW pipeline with iterations
• Epilogue drains the SW pipeline
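How prologue, kernel and epilogue arise can be shown by overlapping the iterations explicitly. A minimal Python sketch, assuming single-cycle operations, enough function units, and a new iteration started every `ii` cycles; the function name and the `op_iteration` labels are inventions of this sketch:

```python
def software_pipeline(ops, iterations, ii=1):
    """Row t lists the operations issued in cycle t, labelled 'op_iteration'."""
    total = (iterations - 1) * ii + len(ops)
    rows = [[] for _ in range(total)]
    for it in range(iterations):
        for stage, op in enumerate(ops):
            rows[it * ii + stage].append(f"{op}_{it + 1}")
    return rows

rows = software_pipeline(["ld", "mul", "sub", "st"], iterations=4)
for cycle, row in enumerate(rows):
    print(cycle, " ".join(row))
```

The first three rows are the prologue (the pipeline fills), the row containing all four operations is the kernel, and the last three rows are the epilogue (the pipeline drains). With more iterations only the number of kernel rows grows.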

Software pipelining:

determine II, the Initiation Interval

For (i=0;.....)
    A[i+6]= 3*A[i]-1

Cyclic data dependences, each edge labelled (delay, iteration distance):

    ld r1, (r2)
        (1,0) and (0,1)
    mul r3, r1, 3
        (1,0) and (0,1)
    sub r4, r3, 1
        (1,0) and (0,1)
    st r4, (r5)
    st r4, (r5) back to ld r1, (r2): (1,6)

Initiation Interval constraint for every dependence edge (u,v):

    $cycle(v) \geq cycle(u) + delay(u,v) - II \cdot distance(u,v)$

[Figure: timeline with the loads ld_1 to ld_7 and the store st_1, annotated with -5.]

Modulo scheduling constraints

MII, minimum initiation interval, bounded by cyclic dependences and resources:

    $MII = \max\{ ResMinII, RecMinII \}$

Resources:

    $ResMinII = \max_{r \in resources} \left\lceil \frac{used(r)}{available(r)} \right\rceil$

Cycles:

    $cycle(v) \geq cycle(v) + \sum_{e \in c} \left( delay(e) - II \cdot distance(e) \right)$

Therefore:

    $RecMinII = \min\{ II \in \mathbb{N} \mid \forall c \in cycles: 0 \geq \sum_{e \in c} \left( delay(e) - II \cdot distance(e) \right) \}$

Or:

    $RecMinII = \max_{c \in cycles} \left\lceil \frac{\sum_{e \in c} delay(e)}{\sum_{e \in c} distance(e)} \right\rceil$
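The two bounds can be evaluated for the example loop A[i+6] = 3*A[i] - 1. In the Python sketch below the dependence cycles are read from the (delay, iteration distance) labels of the dependence graph; the resource counts (one or two load/store units, one ALU, one multiplier) are assumptions of this sketch, not data from the slides:

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for positive b."""
    return -(-a // b)

def res_min_ii(used, available):
    """ResMinII: the busiest resource bounds how often an iteration can start."""
    return max(ceil_div(used[r], available[r]) for r in used)

def rec_min_ii(cycles):
    """RecMinII: every cycle needs II >= sum(delay) / sum(distance).

    Each cycle must have a total distance > 0, which holds for any valid loop.
    """
    return max(ceil_div(sum(d for d, _ in c), sum(k for _, k in c)) for c in cycles)

# Dependence cycles, each edge given as (delay, iteration distance).
cycles = [
    [(1, 0), (1, 0), (1, 0), (1, 6)],   # ld -> mul -> sub -> st -> ld
    [(1, 0), (0, 1)],                   # ld <-> mul
    [(1, 0), (0, 1)],                   # mul <-> sub
    [(1, 0), (0, 1)],                   # sub <-> st
]
used = {"load/store": 2, "alu": 1, "mul": 1}        # operations per iteration
one_port = {"load/store": 1, "alu": 1, "mul": 1}    # hypothetical machine 1
two_ports = {"load/store": 2, "alu": 1, "mul": 1}   # hypothetical machine 2

mii_one_port = max(res_min_ii(used, one_port), rec_min_ii(cycles))
mii_two_ports = max(res_min_ii(used, two_ports), rec_min_ii(cycles))
print(mii_one_port, mii_two_ports)
```

The recurrences allow an initiation interval of 1; under these assumptions a machine with a single load/store unit is resource bound at II = 2, because each iteration issues both a load and a store.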

Let's go back to: The Role of the Compiler

9 steps required to translate an HLL program:

(see online bookchapter)

1. Front-end compilation
2. Determine dependencies
3. Graph partitioning: make multiple threads (or tasks)
4. Bind partitions to compute nodes
5. Bind operands to locations
6. Bind operations to time slots: Scheduling
7. Bind operations to functional units
8. Bind transports to buses
9. Execute operations and perform transports

Division of responsibilities between hardware and

compiler

Steps from Application to execution. The architecture class listed next to a step is the one for which that step is the last responsibility of the compiler; all later steps are the responsibility of the hardware.

    (1) Frontend                  Superscalar
    (2) Determine Dependencies    Dataflow
    (3) Binding of Operands       Multi-threaded
    (4) Scheduling                Indep. Arch
    (5) Binding of Operations     VLIW
    (6) Binding of Transports     TTA
    (7) Execute

Overview

• Enhance performance: architecture methods
• Instruction Level Parallelism
• VLIW
• Examples
– C6
– TM
– TTA
• Clustering
• Code generation
• Design Space Exploration: TTA framework


Mapping applications to processors

MOVE framework

[Figure: MOVE framework. Through user interaction an Optimizer selects architecture parameters and passes them to the Parametric compiler and the Hardware generator, which both return feedback. The explored solutions form a Pareto curve (solution space) plotted against cost. Outputs: parallel object code and a chip, together the TTA based system.]

TTA (MOVE) organization

[Figure: TTA (MOVE) organization. Units connected through sockets: two load/store units (to Data Memory), two integer ALUs, a float ALU, a boolean RF, an integer RF, a float RF, an immediate unit and an instruction unit (to Instruction Memory).]

Code generation trajectory for TTAs

• Frontend: GCC or SUIF (adapted)

[Figure: Application (C) → Compiler frontend → Sequential code → Compiler backend → Parallel code. Sequential simulation of the sequential code (with Input/Output) yields Profiling data; the Compiler backend also takes the Architecture description; the Parallel code goes to Parallel simulation (with Input/Output).]

Exploration: TTA resource reduction


Exploration: TTA connectivity reduction

[Figure: Execution time versus Number of connections removed. Annotation: FU stage constrains cycle time.]

Can we do better?

• Code Transformations
• SFUs: Special Function Units
• Vector processing
• Multiple Processors

[Figure: Execution time versus Cost, with the question "How?"]

Transforming the specification (1)

[Figure: an expression of three + operations before and after re-association.]

Based on associativity of + operation:
a + (b + c) = (a + b) + c

Transforming the specification (2)

Original:
    d = a * b;
    e = a + d;
    f = 2 * b + d;
    r = f - e;
    x = z + y;

Transformed:
    r = 2*b - a;
    x = z + y;

[Figure: DDG of the original code (operations *, *, +, +, -, +) next to the DDG of the transformed code (operations <<, -, +).]
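The transformation follows from r = f - e = (2*b + d) - (a + d) = 2*b - a, so d and both multiplications drop out of the computation of r. A small Python check of that equivalence for integer inputs (for floating point the cancellation of d is not exact in general); the function names are illustrative:

```python
def original(a, b, y, z):
    """The specification as written: five statements, two multiplications."""
    d = a * b
    e = a + d
    f = 2 * b + d
    r = f - e
    x = z + y
    return r, x

def transformed(a, b, y, z):
    """After simplification: d cancels out of f - e, and 2*b becomes a shift."""
    r = (b << 1) - a
    x = z + y
    return r, x

print(original(3, 5, 1, 2), transformed(3, 5, 1, 2))
```

Both versions return the same (r, x) pair, but the transformed one needs only a shift, a subtract and an add.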

Changing the architecture

adding SFUs: special function units

[Figure: several two-input + operations replaced by a single 4-input adder.]

why is this faster?

Changing the architecture

adding SFUs: special function units

In the extreme case put everything into one unit!

Spatial mapping

- no control flow

However: no flexibility / programmability !!

but could use FPGAs


SFUs: fine grain patterns

• Why use fine grain SFUs:
– Code size reduction
– Register file #ports reduction
– Could be cheaper and/or faster
– Transport reduction
– Power reduction (avoid charging non-local wires)
– Supports whole application domain!
    • coarse grain would only help certain specific applications

Which patterns need support?
• Detection of recurring operation patterns needed


SFUs: covering results

Adding only 20 'patterns' of 2 operations dramatically reduces the # of operations (by about 40%)!!


Exploration: resulting architecture

[Figure: resulting architecture, from stream input to stream output]
• 4 Addercmp FUs
• 2 Multiplier FUs
• 2 Diffadd FUs
• 4 RFs
• 9 buses

Architecture for image processing
• Several SFUs
• Note the reduced connectivity


Conclusions

• Billions of embedded processing systems / year
– how to design these systems quickly, cheap, correct, low power, ...?
– what will their processing platform look like?
• VLIWs are very powerful and flexible
– can be easily tuned to application domain
• TTAs even more flexible, scalable, and lower power


Conclusions

• Compilation for ILP architectures is mature

– used in commercial compilers

• However

– Great discrepancy between available and exploitable parallelism
• Advanced code scheduling techniques needed to exploit ILP


Bottom line:


Handson-1 (2014)

HOW FAR ARE YOU?

• VLIW processor of Silicon Hive (Intel)
• Map your algorithm
• Optimize the mapping
• Optimize the architecture
• Perform DSE (Design Space Exploration) trading off (=> Pareto curves)
– Performance,
– Energy and
– Area (= Cost)
