
Subspace Embeddings for the L1 Norm with Applications

Christian Sohler

TU Dortmund

David Woodruff

IBM Almaden

Subspace Embeddings for the L1 Norm with Applications to Robust Regression and Hyperplane Fitting

Outline

Massive data sets

Regression analysis

Our results

Our techniques

Concluding remarks


Massive data sets

Examples

Internet traffic logs

Financial data

etc.

Algorithms

Want nearly linear time or less

Usually at the cost of a randomized approximation


Regression analysis

Regression

Statistical method to study dependencies between variables in the presence of noise.


Regression analysis

Linear Regression

Statistical method to study linear dependencies between variables in the presence of noise.



Example

Ohm's law V = R ∙ I

[Figure: Example Regression (x-axis from 0 to 150, y-axis from 0 to 250)]

Find the linear function that best fits the data


Standard Setting

One measured variable b

A set of predictor variables $a_1, \dots, a_d$

Assumption:

$b = x_0 + a_1 x_1 + \dots + a_d x_d + e$

e is assumed to be noise, and the $x_i$ are model parameters we want to learn

Can assume $x_0 = 0$ (add a constant predictor that is always 1)

Now consider n observations of b and the predictors


Regression analysis

Matrix form

Input: n × d matrix A and a vector $b = (b_1, \dots, b_n)$

n is the number of observations; d is the number of predictor variables

Output: x* so that Ax* and b are close

Consider the over-constrained case, when $n \gg d$

Can assume that A has full column rank
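A minimal NumPy sketch of this setup, assuming synthetic data (sizes, coefficients, and noise level are made up for illustration). It absorbs the intercept $x_0$ by appending a constant-1 column, checks full column rank, and makes Ax* close to b with one standard choice, least squares via `np.linalg.lstsq`.

```python
import numpy as np

# Hypothetical synthetic data: n observations of d predictors, plus an intercept x0.
rng = np.random.default_rng(0)
n, d = 200, 3
preds = rng.normal(size=(n, d))
x_true = np.array([2.0, -1.0, 0.5])
x0_true = 4.0
b = x0_true + preds @ x_true + 0.01 * rng.normal(size=n)

# Absorb x0 by adding a predictor that is always 1, so the model becomes b ≈ A x.
A = np.hstack([preds, np.ones((n, 1))])
print(A.shape, np.linalg.matrix_rank(A))  # over-constrained, full column rank

# Least-squares fit: minimizes sum_i (b_i - <A_i*, x>)^2.
x_ls, *_ = np.linalg.lstsq(A, b, rcond=None)
print(np.round(x_ls, 2))
```

The last coefficient of `x_ls` plays the role of $x_0$.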


Regression analysis

Least Squares Method

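Find x* that minimizes $\sum_i (b_i - \langle A_{i*}, x^* \rangle)^2$

$A_{i*}$ is the i-th row of A

Has certain desirable statistical properties (e.g., it is the maximum-likelihood estimate when the noise is Gaussian)

Method of least absolute deviations (l1-regression)

Find x* that minimizes $\sum_i |b_i - \langle A_{i*}, x^* \rangle|$

The cost is less sensitive to outliers than least squares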
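To illustrate the outlier claim, here is a small sketch for one predictor and no intercept, using the Ohm's law example with made-up measurements and one corrupted reading. In one dimension both problems have closed forms: least squares gives $\langle a,b\rangle/\langle a,a\rangle$, and since $\sum_i |b_i - a_i x| = \sum_i |a_i|\,|b_i/a_i - x|$, the l1 cost is minimized by a weighted median of the ratios $b_i/a_i$ with weights $|a_i|$.

```python
import numpy as np

def least_squares_1d(a, b):
    # argmin_x sum_i (b_i - a_i x)^2 has the closed form <a, b> / <a, a>.
    return float(a @ b / (a @ a))

def l1_regression_1d(a, b):
    # sum_i |b_i - a_i x| = sum_i |a_i| * |b_i / a_i - x| is minimized by a
    # weighted median of the ratios b_i / a_i with weights |a_i|.
    mask = a != 0
    ratios = b[mask] / a[mask]
    weights = np.abs(a[mask])
    order = np.argsort(ratios)
    ratios, weights = ratios[order], weights[order]
    cum = np.cumsum(weights)
    # First index where the cumulative weight reaches half of the total weight.
    k = int(np.searchsorted(cum, 0.5 * cum[-1]))
    return float(ratios[k])

# Hypothetical measurements: current I (amperes) and voltage V = R * I with R = 20 ohms,
# except that the last reading is corrupted (say, a loose probe reads 0 volts).
I = np.arange(1, 11, dtype=float)
V = 20.0 * I
V[-1] = 0.0

print(round(least_squares_1d(I, V), 2))  # 14.81
print(l1_regression_1d(I, V))            # 20.0
```

A single bad reading drags the least-squares estimate of R from 20 down to about 14.81 ohms, while the l1 estimate is unaffected.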


Regression analysis

Geometry of regression

We want to find an x that minimizes $|Ax-b|_1$

The product Ax can be written as

$A_{*1}x_1 + A_{*2}x_2 + \dots + A_{*d}x_d$

where $A_{*i}$ is the i-th column of A

As x ranges over $\mathbb{R}^d$, these vectors form a linear d-dimensional subspace (the column space of A)

The problem is equivalent to computing the point of the column space of A nearest to b in l1-norm


Regression analysis

Solving l1 -regression via linear programming

Minimize $(1,\dots,1) \cdot (a^+ + a^-)$

Subject to:

$Ax + a^+ - a^- = b$

$a^+, a^- \ge 0$

Generic linear programming gives poly(nd) time

Best known algorithm takes $nd^5 \log n + \mathrm{poly}(d/\varepsilon)$ time [Clarkson]
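The LP above can be handed to an off-the-shelf solver. A minimal sketch, assuming SciPy's `scipy.optimize.linprog` with the HiGHS backend (our choice; the talk names no solver). The variables are stacked as $(x, a^+, a^-)$; dense matrices keep it readable but limit it to small n.

```python
import numpy as np
from scipy.optimize import linprog

def l1_regression_lp(A, b):
    """Return (x, cost) minimizing |Ax - b|_1 via the LP with slack vectors a+ and a-."""
    n, d = A.shape
    # Objective (0,...,0 | 1,...,1 | 1,...,1): x is free, the cost is sum(a+ + a-).
    c = np.concatenate([np.zeros(d), np.ones(2 * n)])
    # Equality constraints A x + a+ - a- = b.
    A_eq = np.hstack([A, np.eye(n), -np.eye(n)])
    bounds = [(None, None)] * d + [(0, None)] * (2 * n)
    res = linprog(c, A_eq=A_eq, b_eq=b, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:d], res.fun

# Usage on synthetic data with a few gross outliers (all values are made up).
rng = np.random.default_rng(0)
n, d = 100, 3
A = rng.normal(size=(n, d))
x_true = np.array([1.0, -2.0, 3.0])
b = A @ x_true
b[:10] += 100.0 * rng.normal(size=10)   # corrupt 10% of the observations

x_hat, cost = l1_regression_lp(A, b)
print(np.round(x_hat, 4), round(cost, 2))
```

On data like this, with a minority of large corruptions, the l1 fit typically recovers `x_true` exactly, which least squares would not.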


Our Results

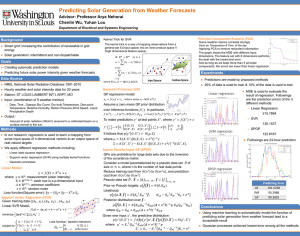

A (1+ε)-approximation algorithm for l1-regression problem

Time complexity is $nd^{1.376} + \mathrm{poly}(d/\varepsilon)$

(Clarkson's is $nd^5 \log n + \mathrm{poly}(d/\varepsilon)$)

First 1-pass streaming algorithm with small space ($\mathrm{poly}(d \log n / \varepsilon)$ bits)

Similar results for hyperplane fitting


Outline

Massive data sets

Regression analysis

Our results

Our techniques

Concluding remarks


Our Techniques

Notice that for any invertible d × d change-of-basis matrix U,

$\min_{x \in \mathbb{R}^d} |Ax-b|_1 = \min_{x \in \mathbb{R}^d} |AUx-b|_1$


Our Techniques

Notice that for any $y \in \mathbb{R}^d$,

$\min_{x \in \mathbb{R}^d} |Ax-b|_1 = \min_{x \in \mathbb{R}^d} |Ax-b+Ay|_1$

We call $b - Ay$ the “residual”, denoted $b'$, and so

$\min_{x \in \mathbb{R}^d} |Ax-b|_1 = \min_{x \in \mathbb{R}^d} |Ax-b'|_1$


Rough idea behind algorithm of Clarkson

$\min_{x \in \mathbb{R}^d} |Ax-b|_1 = \min_{x \in \mathbb{R}^d} |AUx - b'|_1$

1. Compute a poly(d)-approximation (takes $nd^5 \log n$ time): find y such that $|Ay-b|_1 \le \mathrm{poly}(d) \cdot \min_{x \in \mathbb{R}^d} |Ax-b|_1$, and let $b' = b - Ay$ be the residual of the poly(d)-approximation

2. Compute a well-conditioned basis (takes $nd^5 \log n$ time): find a basis U so that for all x in $\mathbb{R}^d$, $|x|_1/\mathrm{poly}(d) \le |AUx|_1 \le \mathrm{poly}(d)\,|x|_1$

3. Sample poly(d/ε) rows of $AU \circ b'$ proportional to their l1-norm (takes nd time)

4. Now generic linear programming is efficient (takes poly(d/ε) time): solve l1-regression on the sample, obtaining a vector x, and output x (in the original coordinates, Ux + y)
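The sampling step can be sketched as follows. This is a minimal NumPy sketch, assuming independent row sampling with probabilities $p_i = \min(1, s\,|M_{i*}|_1/\sum_j |M_{j*}|_1)$ and rescaling of kept rows by $1/p_i$; the budget `s`, the helper name `l1_row_sample`, and the random stand-ins for AU and b' are hypothetical. The rescaling makes $|SMv|_1$ an unbiased estimate of $|Mv|_1$ for any fixed v, and for $v = (x, -1)$ that is exactly the regression cost $|AUx - b'|_1$.

```python
import numpy as np

def l1_row_sample(M, s, rng):
    """Hypothetical helper: keep row i with prob p_i = min(1, s * |M_i|_1 / sum_j |M_j|_1),
    independently, and rescale each kept row by 1 / p_i."""
    row_norms = np.abs(M).sum(axis=1)
    p = np.minimum(1.0, s * row_norms / row_norms.sum())
    keep = rng.random(M.shape[0]) < p
    return M[keep] / p[keep, None], p

rng = np.random.default_rng(1)
n, d = 5000, 3
AU = rng.normal(size=(n, d))              # stand-in for a well-conditioned basis AU
b_res = rng.standard_cauchy(n)            # stand-in for the residual b' (heavy-tailed)
M = np.hstack([AU, b_res[:, None]])       # AU ∘ b': b' adjoined as an extra column

SM, p = l1_row_sample(M, s=1000, rng=rng)
v = np.array([0.5, -1.0, 2.0, -1.0])      # v = (x, -1), so M v = AU x - b'
estimate = np.abs(SM @ v).sum()
exact = np.abs(M @ v).sum()
print(SM.shape[0], "rows kept; estimate / exact =", round(estimate / exact, 3))
```

The expected number of kept rows is at most `s`, so the final LP only sees about `s` rows instead of n.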


Our Techniques

Suffices to show how to quickly compute

1. A poly(d)-approximation

2. A well-conditioned basis


Our main theorem

Theorem

There is a probability space over (d log d) × n matrices R such that for any n × d matrix A, with probability at least 99/100 we have for all x:

$|Ax|_1 \le |RAx|_1 \le d \log d \cdot |Ax|_1$

Embedding

is linear

is independent of A

preserves lengths of an infinite number of vectors
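A quick empirical look at the theorem, as a hedged sketch. It assumes R has $k = \lceil d \ln d \rceil$ rows of i.i.d. standard Cauchy entries scaled by $1/k$ (the scaling used in the proof), ignores the constants hidden in the theorem, and only samples random directions x, so it illustrates the distortion but cannot certify the "for all x" guarantee.

```python
import numpy as np

rng = np.random.default_rng(2)
n, d = 5000, 8
k = max(d, int(np.ceil(d * np.log(d))))   # assumed number of rows, about d log d
R = rng.standard_cauchy((k, n)) / k        # oblivious: drawn without looking at A

A = rng.normal(size=(n, d)) @ rng.normal(size=(d, d))  # an arbitrary n x d matrix
RA = R @ A                                 # the embedding is linear: x -> RAx

X = rng.normal(size=(d, 1000))             # 1000 random directions x
ratios = np.abs(RA @ X).sum(axis=0) / np.abs(A @ X).sum(axis=0)
print(RA.shape, ratios.min().round(3), ratios.max().round(3))
```

The ratio range printed is the observed distortion of $|RAx|_1$ relative to $|Ax|_1$ on these samples.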


Application of our main theorem

Computing a poly(d)-approximation

Compute RA and Rb

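Solve $x' = \arg\min_x |RAx - Rb|_1$

The main theorem applied to $A \circ b$ (A with b adjoined as a column) implies x' is a (d log d)-approximation: for an optimal x*, $|Ax'-b|_1 \le |R(Ax'-b)|_1 \le |R(Ax^*-b)|_1 \le d \log d \cdot |Ax^*-b|_1$

RA and Rb have only d log d rows, so l1-regression on them can be solved efficiently

Time is dominated by computing RA, a single matrix-matrix product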
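Putting the pieces together, a minimal sketch of the poly(d)-approximation under the same assumptions as before: $k = \lceil d \ln d \rceil$ scaled Cauchy rows, SciPy's `linprog` (HiGHS) as the LP solver, and synthetic data with Cauchy noise. The full LP is solved only to measure the approximation ratio.

```python
import numpy as np
from scipy.optimize import linprog

def l1_regression_lp(A, b):
    """min_x |Ax - b|_1 via the LP  min sum(a+ + a-)  s.t.  Ax + a+ - a- = b,  a+, a- >= 0."""
    n, d = A.shape
    c = np.concatenate([np.zeros(d), np.ones(2 * n)])
    A_eq = np.hstack([A, np.eye(n), -np.eye(n)])
    bounds = [(None, None)] * d + [(0, None)] * (2 * n)
    res = linprog(c, A_eq=A_eq, b_eq=b, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:d], res.fun

rng = np.random.default_rng(3)
n, d = 1000, 4
A = rng.normal(size=(n, d))
b = A @ rng.normal(size=d) + rng.standard_cauchy(n)   # heavy-tailed noise

k = int(np.ceil(d * np.log(d)))                       # assumed sketch size (about d log d)
R = rng.standard_cauchy((k, n)) / k
x_sketch, _ = l1_regression_lp(R @ A, R @ b)          # tiny LP: k equations, d unknowns

_, opt = l1_regression_lp(A, b)                       # full LP, only for comparison
ratio = np.abs(A @ x_sketch - b).sum() / opt
print(k, round(ratio, 3))
```

Only the sketched LP is part of the algorithm; its size depends on d, not n.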


Application of our main theorem

Computing a well-conditioned basis

1. Compute RA

2. Compute U so that RAU is orthonormal (in the l2-sense)

3. Output AU

Life is really simple! Time is dominated by computing RA and AU, two matrix-matrix products

AU is well-conditioned because

$|AUx|_1 \le |RAUx|_1 \le (d \log d)^{1/2} |RAUx|_2 = (d \log d)^{1/2} |x|_2 \le (d \log d)^{1/2} |x|_1$

and

$|AUx|_1 \ge |RAUx|_1/(d \log d) \ge |RAUx|_2/(d \log d) = |x|_2/(d \log d) \ge |x|_1/(d^{3/2} \log d)$
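The three steps translate almost line for line into NumPy. A minimal sketch, assuming a QR factorization $RA = QT$ to obtain $U = T^{-1}$ (any U making RAU orthonormal would do), the same $\lceil d \ln d \rceil$-row scaled Cauchy R as before, and a deliberately badly scaled A. It checks the deterministic links of the chain: $|RAUx|_2 = |x|_2$ and $|x|_2 \le |RAUx|_1 \le k^{1/2}|x|_2$ for the k rows of R.

```python
import numpy as np

rng = np.random.default_rng(4)
n, d = 5000, 6
A = rng.normal(size=(n, d)) * np.logspace(0, 3, d)   # columns on very different scales
k = int(np.ceil(d * np.log(d)))
R = rng.standard_cauchy((k, n)) / k

RA = R @ A                       # step 1: one matrix-matrix product
Q, T = np.linalg.qr(RA)          # RA = Q T with Q (k x d) having orthonormal columns
U = np.linalg.inv(T)             # step 2: RA U = Q is orthonormal in the l2 sense
AU = A @ U                       # step 3: the second matrix-matrix product

X = rng.normal(size=(d, 2000))   # random test directions x
RAUX = RA @ U @ X
l2 = np.linalg.norm(X, axis=0)
l1_over_l2 = np.abs(RAUX).sum(axis=0) / l2
print(k, l1_over_l2.min().round(3), l1_over_l2.max().round(3), np.sqrt(k).round(3))
```

Since AU spans the same column space as A (AU T = A), it can replace A in the regression without changing the optimum.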


Application of our main theorem

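It follows that we get an $nd^{1.376} + \mathrm{poly}(d/\varepsilon)$ time algorithm for (1+ε)-approximate l1-regression (the exponent comes from fast matrix multiplication: RA and AU can be computed as roughly n/d products of d × d blocks, each taking about $d^{2.376}$ time)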


What’s left?

We should prove our main theorem

Theorem:

There is a probability space over (d log d) × n matrices R such that for any n × d matrix A, with probability at least 99/100 we have for all x:

$|Ax|_1 \le |RAx|_1 \le d \log d \cdot |Ax|_1$

R is simple

The entries of R are i.i.d. Cauchy random variables


Cauchy random variables

pdf(z) = $1/(\pi(1+z^2))$ for z in $(-\infty, \infty)$

Undefined expectation ($E|z| = \infty$) and infinite variance

1-stable:

If $z_1, z_2, \dots, z_n$ are i.i.d. Cauchy, then for $a \in \mathbb{R}^n$,

$a_1 z_1 + a_2 z_2 + \dots + a_n z_n \sim |a|_1 z$, where z is Cauchy
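A quick Monte Carlo sanity check of 1-stability, as a sketch with an arbitrary vector a. Because the Cauchy mean does not exist, it compares medians: for standard Cauchy z, $\Pr[|z| \le t] = (2/\pi)\arctan t$, so the median of |z| is $\tan(\pi/4) = 1$, and the median of $|a_1 z_1 + \dots + a_n z_n|$ should be close to $|a|_1$.

```python
import numpy as np

rng = np.random.default_rng(5)
a = np.array([3.0, -1.0, 0.5, 2.0])          # |a|_1 = 6.5
trials = 200_000
Z = rng.standard_cauchy((trials, a.size))     # each row: i.i.d. standard Cauchy z_1..z_n
combo = Z @ a                                 # samples of a_1 z_1 + ... + a_n z_n

# 1-stability predicts median(|combo|) = |a|_1 * median(|z|) = |a|_1.
print(np.median(np.abs(combo)), np.abs(a).sum())
```

With this many trials the sampled median typically lands within about one percent of 6.5.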


Proof of main theorem

By 1-stability, for all rows r of R,

$\langle r, Ax \rangle \sim |Ax|_1 \cdot Z$, where Z is a Cauchy random variable

So $RAx \sim (|Ax|_1 Z_1, \dots, |Ax|_1 Z_{d \log d})$, where $Z_1, \dots, Z_{d \log d}$ are i.i.d. Cauchy

The $|Z_i|$ are half-Cauchy, and $\sum_i |Z_i| = \Omega(d \log d)$ with probability $1 - \exp(-d)$ by Chernoff

An ε-net argument on $\{Ax : |Ax|_1 = 1\}$ shows $|RAx|_1 = |Ax|_1 \cdot \Omega(d \log d)$ for all x

Scale R by $1/(d \log d)$:

$|RAx|_1 = |Ax|_1 \sum_i |Z_i| / (d \log d)$

But $\sum_i |Z_i|$ is heavy-tailed

Proof of main theorem

$\sum_i |Z_i|$ is heavy-tailed, so $|RAx|_1 = |Ax|_1 \sum_i |Z_i| / (d \log d)$ may be large

Each $|Z_i|$ has c.d.f. asymptotic to $1 - \Theta(1/z)$ for z in $[0, \infty)$
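The tail claim can be made explicit by integrating the Cauchy pdf. A worked derivation for a standard Cauchy Z, using $\arctan z + \arctan(1/z) = \pi/2$ for z > 0, $\arctan u \le u$, and $\arctan u \ge (\pi/4)u$ on [0, 1] (by concavity):

```latex
\Pr[|Z| \le z] = \tfrac{2}{\pi}\arctan z,
\qquad
\Pr[|Z| > z] = 1 - \tfrac{2}{\pi}\arctan z = \tfrac{2}{\pi}\arctan\tfrac{1}{z}
% For z >= 1:  (\pi/4)(1/z) <= \arctan(1/z) <= 1/z, hence
\frac{1}{2z} \;\le\; \Pr[|Z| > z] \;\le\; \frac{2}{\pi z},
\qquad\text{so}\qquad
\mathbb{E}|Z| = \int_0^\infty \Pr[|Z| > z]\,dz = \infty
```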

No problem!

We know there exists a well-conditioned basis of A

We can assume the basis vectors are $A_{*1}, \dots, A_{*d}$

$|RA_{*i}|_1 \sim |A_{*i}|_1 \cdot \sum_j |Z_j| / (d \log d)$

With constant probability, $\sum_i |RA_{*i}|_1 = O(\log d) \sum_i |A_{*i}|_1$


Proof of main theorem

Suppose $\sum_i |RA_{*i}|_1 = O(\log d) \sum_i |A_{*i}|_1$ for a well-conditioned basis $A_{*1}, \dots, A_{*d}$

We will use the Auerbach basis, which always exists:

For all x, $|x|_\infty \le |Ax|_1$

$\sum_i |A_{*i}|_1 = d$ (each column has $|A_{*i}|_1 = 1$)

I don’t know how to compute such a basis, but it doesn’t matter!

$\sum_i |RA_{*i}|_1 = O(d \log d)$

$|RAx|_1 \le \sum_i |RA_{*i} x_i|_1 \le |x|_\infty \sum_i |RA_{*i}|_1 = |x|_\infty \, O(d \log d) \le O(d \log d) |Ax|_1$

Q.E.D.


Main Theorem

Theorem

There is a probability space over (d log d) × n matrices R such that for any n × d matrix A, with probability at least 99/100 we have for all x:

$|Ax|_1 \le |RAx|_1 \le d \log d \cdot |Ax|_1$


Outline

Massive data sets

Regression analysis

Our results

Our techniques

Concluding remarks


Regression for data streams

Streaming algorithm given additive updates to entries of A and b

Pick random matrix R according to the distribution of main theorem

Maintain RA and Rb during the stream

Find x' that minimizes $|RAx' - Rb|_1$ using linear programming

Compute U so that RAU is orthonormal

Entries of R do not need to be independent

The hard thing is sampling rows from AU◦b’ proportional to their norm

Do not know U, b’ until end of stream

Surprisingly, there is still a way to do this in a single pass by treating U, x' as formal variables and plugging them in at the end

Uses a noisy sampling data structure

Omitted from talk
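Maintaining RA and Rb under additive updates only needs linearity: an update A[i, j] += δ changes column j of RA by δ times column i of R. A minimal sketch, assuming updates arrive as (i, j, δ) for A and (i, δ) for b, and storing R explicitly as a dense k × n matrix for clarity. That is not small space; a one-pass, small-space version would need to regenerate columns of R from a short random seed instead. The class name `SketchedStream` is hypothetical.

```python
import numpy as np

class SketchedStream:
    """Hypothetical helper: keeps RA and Rb current under additive updates to A and b."""

    def __init__(self, n, d, k, seed=0):
        rng = np.random.default_rng(seed)
        self.R = rng.standard_cauchy((k, n)) / k   # stored densely only for illustration
        self.RA = np.zeros((k, d))
        self.Rb = np.zeros(k)

    def update_A(self, i, j, delta):
        # A[i, j] += delta  changes  RA[:, j]  by  delta * R[:, i].
        self.RA[:, j] += delta * self.R[:, i]

    def update_b(self, i, delta):
        # b[i] += delta  changes  Rb  by  delta * R[:, i].
        self.Rb += delta * self.R[:, i]

# Usage: replay a random stream of updates and compare with sketching at the end.
n, d, k = 50, 3, 4
stream = SketchedStream(n, d, k, seed=7)
A = np.zeros((n, d))
b = np.zeros(n)
rng = np.random.default_rng(8)
for _ in range(500):
    i, j = rng.integers(n), rng.integers(d)
    delta_A, delta_b = rng.normal(), rng.normal()
    A[i, j] += delta_A
    stream.update_A(i, j, delta_A)
    b[i] += delta_b
    stream.update_b(i, delta_b)
print(np.allclose(stream.RA, stream.R @ A), np.allclose(stream.Rb, stream.R @ b))
```

At the end of the stream, RA and Rb feed directly into the LP for x' and the QR step for U.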


Hyperplane Fitting

Given n points in $\mathbb{R}^d$, find the hyperplane minimizing the sum of l1-distances of the points to the hyperplane

Reduces to d invocations of l1-regression
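One way to realize the reduction, as a hedged sketch rather than the talk's exact procedure. The l1-distance from a point p to $\{y : \langle w, y\rangle = c\}$ is $|\langle w, p\rangle - c|/|w|_\infty$. For each coordinate j, regress coordinate j on the others plus an intercept (one l1-regression), turn the fit into a hyperplane with $w_j = 1$, and keep the cheapest candidate. Normalizing an optimal hyperplane so its largest coefficient is $w_j = 1$ shows that regression j, which drops the constraint $|w_k| \le 1$ and whose true cost divides by $|w|_\infty \ge 1$, does at least as well. The LP helper assumes SciPy's `linprog`; the data are synthetic.

```python
import numpy as np
from scipy.optimize import linprog

def l1_regression_lp(A, b):
    """min_x |Ax - b|_1 via the LP  min sum(a+ + a-)  s.t.  Ax + a+ - a- = b,  a+, a- >= 0."""
    n, d = A.shape
    c = np.concatenate([np.zeros(d), np.ones(2 * n)])
    A_eq = np.hstack([A, np.eye(n), -np.eye(n)])
    bounds = [(None, None)] * d + [(0, None)] * (2 * n)
    res = linprog(c, A_eq=A_eq, b_eq=b, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:d], res.fun

def l1_distance_cost(P, w, c):
    # Sum over points p of the l1-distance |<w, p> - c| / |w|_inf to {y : <w, y> = c}.
    return np.abs(P @ w - c).sum() / np.abs(w).max()

def fit_hyperplane_l1(P):
    """Try each coordinate j as the response (d l1-regressions); keep the best hyperplane."""
    n, d = P.shape
    best = None
    for j in range(d):
        A = np.hstack([np.delete(P, j, axis=1), np.ones((n, 1))])
        beta, _ = l1_regression_lp(A, P[:, j])
        # y_j = <beta[:-1], y_{-j}> + beta[-1]  <=>  <w, y> = c  with  w_j = 1.
        w = np.insert(-beta[:-1], j, 1.0)
        c = beta[-1]
        cost = l1_distance_cost(P, w, c)
        if best is None or cost < best[0]:
            best = (cost, w, c)
    return best

# Usage: noisy points near the plane y3 = 2*y1 - y2 + 1 (made-up data).
rng = np.random.default_rng(9)
Y = rng.normal(size=(60, 2))
P = np.column_stack([Y, 2 * Y[:, 0] - Y[:, 1] + 1 + 0.05 * rng.laplace(size=60)])
cost, w, c = fit_hyperplane_l1(P)
print(np.round(w, 3), round(c, 3), round(cost, 3))
```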


Conclusion

Main results

Efficient algorithms for l1-regression and hyperplane fitting

$nd^{1.376}$ time improves the previous $nd^5 \log n$ running time for l1-regression

First oblivious subspace embedding for l1
