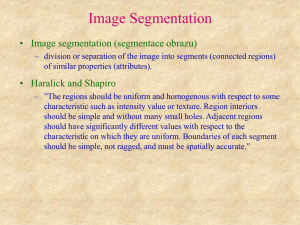

Image Segmentation

advertisement

主講者:陳建齊

Outline & Content

1. Introduction

2. Thresholding

3. Edge-based segmentation

4. Region-based segmentation

5. conclusion

2

1. Introduction

What is segmentation?

Three major ways to do.

Thresholding

Edge-based segmentation

Region-based segmentation

3

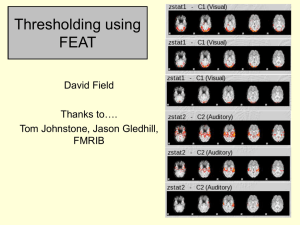

Thresholding

Finding histogram of gray level intensity.

Basic Global Thresholding

Otsu’s Method

Multiple Threshold

Variable Thresholding

4

Edge-based segmentation

Using mask to detect edge in image by convolution.

Basic Edge Detection

The Marr-Hildreth edge detector(LoG)

Short response Hilbert transform(SRHLT)

Watersheds

5

Region-based segmentation

Finding region, but not finding edge.

Region Growing

Data Clustering (Hierarchical clustering)

Partitional clustering

Cheng-Jin Kuo`s method

6

2. Thresholding

Basic Global Thresholding

1)

2)

Select an initial To

Segment image use:

g ( x, y ) 01

if f(x,y) T

if f(x,y) T

3)

Compute the average intensity m 1

and m 2 for the pixels in and .

4)

Compute a new threshold: T 1 (m 1 m 2 )

5)

Until the difference between values of T is smaller than a

predefined parameter.

2

7

Otsu’s Method

{0,1,2,…,L-1} , L means gray level intensity

MN n 0 n 1 n 2 ... n L1

M*N is the total number of pixel.

n i denote the number of pixels with intensity i

we select a threshold T (k ) k , 0 k L 1 , and use it to

classify C : intensity in the range [0, k ] and C : [k 1, L 1]

P (k ) p , P (k ) p 1 P (k )

P 1 m 1 P 2 m 2 m G , P 1 + P 2 =1

2

G2 = i m G p i

, it is global variance.

i 0

1

k

1

i 0

i

2

L 1

2

i k 1

i

1

L1

8

(k ) P 1 P 2 (m1 m 2 )

2

B

2

(m G P 1 (k ) m(k )) 2

P 1 (k )(1 P 1 (k ))

it is 2between-class variance

B (k )

=

, 0 (k *) 1

G2

it is a measure of separability between class.

2

2

(

k

)

max

B

B (k )

ok L 1

g ( x, y ) 10

if f(x,y) k*

if f(x,y) k*

For x = 0,1,2,…,M-1 and y = 0,1,2…,N-1.

Using image Smoothing/Edge to improve Global

Threshold

Smoothing

Edge

detection

What situation is Large object we Small object we

more suitable for the are interested.

are interested

method

9

Multiple Threshold

As Otsu’s method, it takes more area and k*

B2 P 1 (m 1 m G )2 P 2 (m 2 m G )2 P 3 (m 3 m G )2

P 1 m1 P 2 m 2 P 3 m 3 m G

P 1 + P 2 +P 3 =1

B2 (k1* , k2* ) max B2 (k 1 , k 2 )

o k 1 k 2 L 1

2

*

*

(

k

,

k

*

*

B 1

2)

(

k

,

k

)

1 2

G2

Disadvantage: it becomes too complicate when

number of area more than three.

10

Variable Thresholding

1) Image partitioning

.

It is work when the objects of interest and the

background occupy regions of reasonably comparable

size. If not , it will fail.

11

2) Variable thresholding based on local image

properties

Let xy and m xy denote the standard deviation and

mean value of the set of pixels contained in a

neighborhood, S xy .

Q(local parameters) is true

g ( x, y ) 10 ifif Q(local

parameters) is true

Q(

xy , m xy )

true if f ( x , y ) a

false otherwise

xy

ANDf ( x , y ) bm xy

12

3) Using moving average

It discussed is based on computing a moving average

along scan lines of an image.

1 k 1

1

m(k 1)

z

m

(

k

)

( z k 1 z k n )

i

n i k 2n

n

z k 1 denote the intensity of the point at step k+1.

n denote the number of point used in the average.

m(1) z 1 / n is the initial value.

T xy bm xy ,where b is constant and is the moving

average at point (x,y)

13

3. Edge-based segmentation

Basic Edge Detection

Why we can find edge by difference?

image

intensity

first-order deviation

second-order deviation

First-order

deviation

produce

thicker edges

Second-order

deviation

stronger

response to fine

detail

double-edge

response

Determine edge

is from light to

dark or dark to

light

14

1) Gradient

The image gradient is to find edge strength and direction at

location (x,y) of image.

f

g x x

f grad( f )

g y f

y

The magnitude (length) of vector , denoted as M(x,y):

mag(f ) g x g y

The direction of the gradient vector is given by the angle:

( x, y) tan

1

gy

g x

15

Roberts cross-gradient operators:

Prewitt operator:

Sobel operator:

G( x, y)

1

0

if R ( x , y ) T

otherwise

16

The Marr-Hildreth edge

detector(LoG)

This is second-order deviation, we call Laplacian.

2

g ( x, y) [ G( x, y)] f ( x, y)

g ( x, y) 2 [G( x, y) f ( x, y)]

Filter the input image with an n*n Gaussian lowpass

filter. 99.7% of the volume under a 2-D Gaussian

surface lies between about the mean. So n 6 .

17

Short response Hilbert

Transform(SRHLT)

1) Hilbert transform

1

g

(

)

h

(

x

)

*

g

(

x

),

where

h

(

x

)

H

x

GH ( f ) H ( f )G( f ) , H ( f ) j sgn( f )

18

2) SRHLT

gH hb x g x , where hb x b csch( bx)

GH ( f ) H b ( f )G ( f ) where GH ( f ) FT [ g H ( )],

G ( f ) FT [ g ( )],

H b ( f ) j tanh( f / b).

HLT

b0

Choose a suitable

value

differentiation

b

Lower b

Higher b

Impulse response

Longer

Shorter

Noise robustness

Good

Bad

Type of edge

Ramp

Step

Output

Thick

Sharp

19

Simulation result

20

Watersheds

Algorithm:

T [n] {( s, t ) | g ( s, t ) n} , g(s,t) is intensity.

n= min+1 to n = max +1. And let T[n]=0, others 1.

R

C[n] C n ( M i ) ,C n (M i ) is minimum point beneath n.

i 1

21

Markers

External markers:

Points along the watershed

line along highest points.

Internal markers:

(1) That is surrounded higher points .

(2) Points in region form a connected component

(3) All points in connected component have the same

intensity.

22

4. Region-based segmentation

Region Growing

Algorithm:

a. Choose a random pixels

b. Use 8-connected and threshold to determine

c. Repeat a and b until almost points are classified.

23

Simulation of region growing (90% pixels )

Threshold/second: 20/4.7 seconds.

24

Data Clustering

Using centroid to represent the huge numbers of

clusters

Hierarchical clustering, we can change the number

of cluster anytime during process if we want.

Partitional clustering , we have to decide the number

of clustering we need first before we begin the

process.

25

Hierarchical clustering

1) Algorithm of hierarchical agglomeration(built):

A. See every single data as a cluster Ci .

B. Find out Ci ,

Cj

for the distance is the shortest.

C. Repeat the steps until satisfies our demand.

•

d (a, b) as the distance between data a and b

26

2) Algorithm of hierarchical division (break up ):

Diameter of cluster

D(Ci ) max(d (a,b)) , for a Ci , b Ci

27

1) See the whole database as one cluster.

2) Find out the cluster having the biggest diameter

3) max (d ( x, c)) , for x C .

4) Split out x as a new cluster C1 , and see the rest data

points as Ci .

5) If d ( y, Ci ) > d ( y, C1 ) , for y Ci then split y out of Ci and

classify it to C1

6) Back to step2 and continue the algorithm until C1

and Ci is not change anymore.

28

Partitional clustering

Decide the numbers of the cluster(k-means)

Problem:

Initial problem

29

Number of regions are more than clusters you set.

Determine the number of clusters.

Vadility=

intra

inter

1 k

intra-cluster distance= x zi

N i 1 xCi

inter-cluster distance min( zi z j

2

2

)

, i 1, 2,3,......, K 1

; j i 1,......K

30

Simulation of k-means

Clustering/time: 9 clustering/ 0.1

31

Advantage and disadvantage of data clustering

Hierarchical

algorithm

Partitional

algorithms

advantage

1.Concept is simple 1.Computing speed

is fast.

2. Result is reliable. 2.Numbers of

cluster is fixed, so

the concept is also

simple.

disadvantage

It is consuming, so

is not suitable for

a large database.

1. Determine the

number of

clusters.

2. Initial problem

3. ….

32

Cheng-Jin Kuo`s method

Algorithm

33

1) Adaptive threshold decision with local variance

Variance of Lena: 1943

716

899

447

1293 2470

1579 1960 2238

1497 1822 1974 1273

2314 1129 1545 1646

Small variance cause small threshold.

34

2) Adaptive threshold decision with local variance

and frequency

Variance of baboon: 1503

716

899

447

1293 2470

1579 1960 2238

1497 1822 1974 1273

2314 1129 1545 1646

6.9451

8.8807

7.2965 7.0914

8.0413 10.0076 8.4951 7.6421

9.6709 10.3219 7.9815 6.1310

9.0464 10.4821 7.1513 6.4118

35

High frequency, high variance. Set highest threshold.

(4,1)

High frequency, low variance. Set second high

threshold.

(4,2)

Low frequency, high variance. Set third high

threshold.

(1,4)

Low frequency, low variance. Set the lowest threshold.

(1,1)

36

Comparison of all algorithm by

data compression

Region

growing

Speed

bad

K-means

Watershed Cheng-Jin

Kuo`s

method

Good(worse

bad

than C.J.K’s

method)

fragmentary oversegmen

tation

intact

Shape

connectivi

ty

Good(better Good(equal

Shape

than C.J.K’s C.J.K’s

match

method)

method)

bad

Good

Intact

Good

37

Conclusion

Speed

Connectivity

System reliability

38

Reference

R. C. Gonzalez, R. E. Woods, Digital Image

Processing third edition, Prentice Hall, 2010.

2. C. J. Kuo, J. J. Ding, Anew Compression-Oriented

Fast image Segmentation Technique, NTU,2009.

1.

39

Q&A

40