Case Studies: Bin Packing & The Traveling Salesman Problem

David S. Johnson

AT&T Labs – Research

© 2010 AT&T Intellectual Property. All rights reserved. AT&T and the AT&T logo are trademarks of AT&T Intellectual Property.

Outline

• Lecture 1: Today

– Introduction to Bin Packing (Problem 1)

– Introduction to TSP (Problem 2)

• Lecture 2: Today -- TSP

• Lecture 3: Friday -- TSP

• Lecture 4: Friday -- Bin Packing

Applications

• Packing commercials into station breaks

• Packing files onto floppy disks (CDs, DVDs, etc.)

• Packing MP3 songs onto CDs

• Packing IP packets into frames, SONET time slots, etc.

• Packing telemetry data into fixed size packets

Standard Drawback: Bin Packing is NP-complete

NP-Hardness

• Means that optimal packings cannot be constructed in

worst-case polynomial time unless P = NP.

• If P = NP, then all other NP-complete problems can also be solved in polynomial time:

– Satisfiability

– Clique

– Graph coloring

– Etc.

• See M.R. Garey & D.S. Johnson, Computers and Intractability: A Guide to the Theory of NP-Completeness, W.H. Freeman, 1979.

Standard Approach to Coping

with NP-Hardness:

• Approximation Algorithms

– Run quickly (polynomial-time for theory,

low-order polynomial time for practice)

– Obtain solutions that are guaranteed to be

close to optimal

First Fit (FF): Put each item in the first bin with enough space.

Best Fit (BF): Put each item in the bin that will hold it with the least space left over.

First Fit Decreasing, Best Fit Decreasing (FFD, BFD): Start by reordering the items by non-increasing size, then apply FF (respectively BF).
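A minimal sketch of the four rules, assuming the standard setting in which item sizes lie in (0, 1] and every bin has capacity 1 (the function names are illustrative, not from the source). Each function returns the number of bins used. This straightforward version scans all open bins for every item, so it takes O(N^2) time rather than the O(N log N) achievable with the tree structures described later; exact `Fraction` arithmetic avoids floating-point ties.

```python
from fractions import Fraction

def first_fit(items, capacity=1):
    """Put each item in the lowest-indexed bin with enough space."""
    gaps = []                      # gaps[j] = unused space in bin j
    for x in items:
        for j, g in enumerate(gaps):
            if x <= g:
                gaps[j] -= x
                break
        else:                      # no open bin fits: start a new one
            gaps.append(capacity - x)
    return len(gaps)

def best_fit(items, capacity=1):
    """Put each item in the bin that leaves the least space over."""
    gaps = []
    for x in items:
        fitting = [j for j, g in enumerate(gaps) if x <= g]
        if fitting:
            j = min(fitting, key=lambda j: gaps[j])   # smallest adequate gap
            gaps[j] -= x
        else:
            gaps.append(capacity - x)
    return len(gaps)

def first_fit_decreasing(items, capacity=1):
    return first_fit(sorted(items, reverse=True), capacity)

def best_fit_decreasing(items, capacity=1):
    return best_fit(sorted(items, reverse=True), capacity)

items = [Fraction(1, 2), Fraction(3, 5), Fraction(2, 5), Fraction(1, 2)]
print(first_fit(items), best_fit(items))   # 3 2
```

On this list FF puts the 2/5 item into the first bin (gap 1/2), leaving no room for the final 1/2, while BF puts it into the tighter second bin (gap 2/5).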

Worst-Case Bounds

• Theorem [Ullman, 1971][Johnson et al., 1974]. For all lists L,

BF(L), FF(L) ≤ (17/10)OPT(L) + 3.

• Theorem [Johnson, 1973]. For all lists L,

BFD(L), FFD(L) ≤ (11/9)OPT(L) + 4.

(Note 1: 11/9 = 1.222222…)

(Note 2: These bounds are asymptotically tight.)

Lower Bounds: FF and BF

Items: N of size ½-ε, followed by N of size ½+ε.

OPT: N bins, each holding one ½-ε and one ½+ε.

FF, BF: N/2 bins (two ½-ε each) + N bins (one ½+ε each) = 1.5 OPT

Lower Bounds: FF and BF

Items: N of size 1/6-2ε, then N of size 1/3+ε, then N of size 1/2+ε.

OPT: N bins, each holding one item of each size.

FF, BF: N/6 bins (six 1/6-2ε each) + N/2 bins (two 1/3+ε each) + N bins (one 1/2+ε each) = 5/3 OPT

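Lower Bounds: FF and BF

Items, presented smallest first: N each of sizes 1/2+ε, 1/3+ε, 1/7+ε, 1/43+ε, 1/1807+ε, etc. (the denominators follow Sylvester's sequence, t_(i+1) = t_i(t_i - 1) + 1).

OPT: N bins, each holding one item of each size.

FF, BF = N(1 + 1/2 + 1/6 + 1/42 + 1/1806 + … ) ≈ (1.691..) OPT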
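The coefficients can be checked with exact arithmetic. This sketch assumes the item sizes are 1/t_i + ε for Sylvester's sequence t_i, so that FF and BF fit t_i - 1 items of each size per bin; `sylvester` is an illustrative helper name. It also confirms that one item of each of the first five sizes fits in a single bin when ε is small, since their sizes sum to 1 - 1/(1807·1806) before the ε terms are added.

```python
from fractions import Fraction

def sylvester(k):
    """First k terms of Sylvester's sequence: t_1 = 2, t_(i+1) = t_i(t_i - 1) + 1."""
    t = [2]
    while len(t) < k:
        t.append(t[-1] * (t[-1] - 1) + 1)
    return t

t = sylvester(5)                                   # [2, 3, 7, 43, 1807]
per_bin = [ti - 1 for ti in t]                     # items of size 1/t_i + eps per FF/BF bin
ratio = sum(Fraction(1, m) for m in per_bin)       # FF/BF bins per OPT bin
opt_fill = sum(Fraction(1, ti) for ti in t)        # one OPT bin's contents, ignoring eps
print(round(float(ratio), 5), opt_fill == 1 - Fraction(1, t[-1] * (t[-1] - 1)))   # 1.69103 True
```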

Jeff Ullman’s Idea

Items: N of size 1/6+ε, then N of size 1/3+ε, then N of size 1/2+ε.

OPT: N bins

FF, BF = N (1 + 1/2 + 1/5) = (17/10) OPT

Key modification: Replace some 1/3+ε’s & 1/6+ε’s with 1/3-δi & 1/6+δi, and some with 1/3+δi & 1/6-δi, with δi increasing from bin to bin.

(Actually yields FF, BF ≥ (17/10)OPT - 2. See References for details.)

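Lower Bounds: FFD and BFD

OPT = 9n:

– 6n bins, each holding {1/2+ε, 1/4+ε, 1/4-2ε}

– 3n bins, each holding {1/4+2ε, 1/4+2ε, 1/4-2ε, 1/4-2ε}

FFD, BFD = 11n:

– 6n bins, each holding {1/2+ε, 1/4+2ε}

– 2n bins, each holding {1/4+ε, 1/4+ε, 1/4+ε}

– 3n bins, each holding {1/4-2ε, 1/4-2ε, 1/4-2ε, 1/4-2ε}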
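The FFD example can be checked directly. The sketch below rebuilds the item list above for a few values of n, using exact fractions and an illustrative ε = 1/1000 (any sufficiently small positive ε, e.g. below 1/12, should behave the same), and confirms that the sizes total exactly 9n while FFD uses 11n bins. The helper names are hypothetical.

```python
from fractions import Fraction as F

def ffd_bins(items, capacity=1):
    """First Fit Decreasing: sort by non-increasing size, then apply First Fit."""
    gaps = []
    for x in sorted(items, reverse=True):
        for j, g in enumerate(gaps):
            if x <= g:
                gaps[j] -= x
                break
        else:                          # nothing fits: open a new bin
            gaps.append(capacity - x)
    return len(gaps)

def ffd_bad_instance(n, eps=F(1, 1000)):
    """Items of the lower-bound example whose optimal packing uses 9n full bins."""
    q = F(1, 4)
    return ([F(1, 2) + eps] * (6 * n) + [q + 2 * eps] * (6 * n)
            + [q + eps] * (6 * n) + [q - 2 * eps] * (12 * n))

for n in (1, 2, 5):
    items = ffd_bad_instance(n)
    # Sizes sum to exactly 9n; FFD uses 11n bins.
    print(n, sum(items), ffd_bins(items))
```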

Asymptotic Worst-Case Ratios

• RN(A) = max{A(I)/OPT(I): OPT(I) = N}

• R(A) = max{RN(A): N > 0}

– absolute worst-case ratio

• R∞(A) = limsup_{N→∞} RN(A)

– asymptotic worst-case ratio

• Theorem: R∞(FF) = R∞(BF) = 17/10.

• Theorem: R∞(FFD) = R∞(BFD) = 11/9.

Why Asymptotics?

• Partition Problem: Given a set A of numbers ai, can they be partitioned into two subsets, each with sum (sum of all a ∈ A)/2?

• This problem is NP-hard, and is equivalent to the special case of bin packing (after scaling the numbers so that they sum to 2) in which we ask if OPT = 2.

• Hence, assuming P ≠ NP, no polynomial-time bin packing algorithm can have R(A) < 3/2, since such an algorithm would have to distinguish OPT = 2 from OPT ≥ 3.

Implementing Best Fit

• Put the unfilled bins in a binary search tree,

ordered by the size of their unused space

(choosing a data structure that implements

inserts and deletes in time O(logN)).

(Each tree node stores a bin index and that bin’s gap size; bins with smaller gaps are in its left subtree, bins with larger gaps in its right subtree.)

Implementing Best Fit

• When an item x arrives, the initial “current node” is the root of the tree, and there is no “candidate” yet.

• While the current node exists,

– If x fits in the current node’s gap, make that node the candidate and let its left child (possibly empty) become the current node.

– Otherwise, let its right child (possibly empty) become the current node.

• If there is a candidate (the bin with the smallest gap that can hold x),

– delete the candidate’s node.

– pack x in the candidate’s bin.

– reinsert that bin into the tree with its new gap.

• Otherwise, pack x in a new bin, and add that to the tree.
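Python's standard library has no balanced search tree, so this sketch substitutes a sorted list of (gap, bin index) pairs searched with `bisect`. The lookup of the smallest gap that can hold x takes O(log N) comparisons, but list insertion and deletion cost O(N), so it illustrates the logic of the tree-based method rather than its O(N log N) bound. The function name is illustrative, and bins whose gap drops to 0 are simply dropped from the structure, assuming all item sizes are positive.

```python
import bisect
from fractions import Fraction

def best_fit_sorted(items, capacity=1):
    """Best Fit via a sorted list of (gap, bin index); returns (bins, assignment)."""
    open_bins = []                 # sorted (gap, bin index) of bins with room left
    num_bins = 0
    assignment = []
    for x in items:
        # (x, -1) sorts before every (x, j) with j >= 0, so pos is the first
        # entry whose gap is >= x: the tightest bin that can hold x.
        pos = bisect.bisect_left(open_bins, (x, -1))
        if pos < len(open_bins):
            gap, j = open_bins.pop(pos)          # delete the node ...
            new_gap = gap - x                    # ... and pack x in its bin
        else:
            j, new_gap = num_bins, capacity - x  # no bin fits: open a new one
            num_bins += 1
        if new_gap > 0:
            bisect.insort(open_bins, (new_gap, j))   # reinsert with the new gap
        assignment.append(j)
    return num_bins, assignment

print(best_fit_sorted([Fraction(1, 2), Fraction(3, 5), Fraction(2, 5), Fraction(1, 2)]))
# (2, [0, 1, 1, 0])
```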

Implementing First Fit

• New tree data structure:

– The bins of the packing are the leaves, in their original order.

– Each node contains the maximum gap in bins under it.

[Figure: example tree in which each leaf holds one bin’s gap and each internal node holds the maximum gap among the leaves below it.]
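The max-gap tree can be sketched as an array-based complete binary tree (a segment tree). This sketch assumes at most N bins are ever needed, so it preallocates N leaves at full capacity that stand for bins not yet opened; descending to the left child whenever that subtree's maximum gap suffices finds the first bin that can hold the item in O(log N) time, and the maxima on the path back to the root are then recomputed. The function name is illustrative.

```python
from fractions import Fraction

def first_fit_tree(items, capacity=1):
    """First Fit in O(N log N) with a max-gap tree; returns (bins, assignment)."""
    n = max(1, len(items))
    size = 1
    while size < n:                  # number of leaves: a power of two >= N
        size *= 2
    tree = [capacity] * (2 * size)   # tree[size + j] = gap of bin j (unused bins are
                                     # full); tree[k] = max gap among leaves below k
    assignment = []
    for x in items:
        assert 0 < x <= capacity, "item sizes must lie in (0, capacity]"
        k = 1                        # start at the root
        while k < size:              # prefer the left subtree if it can hold x
            k = 2 * k if tree[2 * k] >= x else 2 * k + 1
        assignment.append(k - size)  # leaf position = bin index
        tree[k] -= x
        k //= 2
        while k >= 1:                # restore the max-gap values up to the root
            tree[k] = max(tree[2 * k], tree[2 * k + 1])
            k //= 2
    return max(assignment, default=-1) + 1, assignment

print(first_fit_tree([Fraction(1, 2), Fraction(3, 5), Fraction(2, 5), Fraction(1, 2)]))
# (3, [0, 1, 0, 2])
```

Because First Fit opens bins in index order, the number of bins used is one more than the largest leaf index ever chosen.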

Online Algorithms (+ Problem 1)

• We have no control over the order in which items

arrive.

• Each time an item arrives, we must assign it to a bin

before knowing anything about what comes later.

• Examples: FF and BF

• Simplest example: Next Fit (NF)

– Start with an empty bin

– If the next item to be packed does not fit in the current bin,

put it in a new bin, which now becomes the “current” bin.

Problem 1: Prove R∞(NF) = 2.
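Next Fit needs only the gap of the current bin, so it runs in linear time. A minimal sketch, assuming item sizes in (0, capacity]; the function name is illustrative, and the first item is what opens the first bin.

```python
from fractions import Fraction

def next_fit(items, capacity=1):
    """Next Fit: one current bin; once an item does not fit, that bin is closed."""
    bins = 0
    gap = 0                  # no bin open yet, so the first item opens one
    for x in items:
        if x > gap:          # does not fit: a new bin becomes the current bin
            bins += 1
            gap = capacity
        gap -= x
    return bins

print(next_fit([Fraction(1, 2), Fraction(3, 5), Fraction(2, 5), Fraction(1, 2)]))   # 3
```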

Best Possible Online Algorithms

• Theorem [van Vliet, 1996]. For any online bin packing

algorithm A, R∞(A) ≥ 1.54.

• Theorem [Richey, 1991]. There exist polynomial-time

online algorithms A with R∞(A) ≤ 1.59.

• Drawback: Improving the worst-case behavior of

online algorithms tends to guarantee that their

average-case behavior will not be much better.

• FF and BF are much better on average than they are

in the worst case.

Best Possible Offline Algorithm?

• Theorem [Karmarkar & Karp, 1982]. There is a

polynomial-time (offline) bin packing algorithm KK

that guarantees

KK(L) ≤ OPT(L) + log²(OPT(L)).

• Corollary. R∞(KK) = 1.

• Drawback: Whereas FFD and BFD can be implemented to run in time O(N log N), the best bound we have on the running time of KK is O(N^8 log^3 N).

• Still open: Is there a polynomial-time bin packing

algorithm A that guarantees A(L) ≤ OPT(L) + c for

any fixed constant c?

To Be Continued…

• Next time: Average-Case Behavior

• For now: On to the TSP!

The Traveling Salesman Problem

Given:

Set of cities {c1,c2,…,cN }.

For each pair of cities {ci,cj}, a distance d(ci,cj).

Find:

Permutation π : {1,2,...,N} → {1,2,...,N} that minimizes

$$\sum_{i=1}^{N-1} d\left(c_{\pi(i)}, c_{\pi(i+1)}\right) + d\left(c_{\pi(N)}, c_{\pi(1)}\right)$$

[Figure: example instance and tour, N = 10]
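The objective translates directly into code. This sketch assumes cities are points in the plane with Euclidean distances (the definition above allows any d) and uses 0-based indices, so the closing term d(cπ(N), cπ(1)) comes from the `(i + 1) % n` index; `tour_length` is an illustrative name.

```python
import math

def tour_length(cities, perm):
    """Sum of d(c_perm[i], c_perm[i+1]) plus the closing edge back to c_perm[0]."""
    n = len(perm)
    return sum(math.dist(cities[perm[i]], cities[perm[(i + 1) % n]])
               for i in range(n))

square = [(0, 0), (1, 0), (1, 1), (0, 1)]
print(tour_length(square, [0, 1, 2, 3]))   # 4.0
print(tour_length(square, [0, 2, 1, 3]))   # crossing tour: 2 + 2*sqrt(2)
```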

Other Types of Instances

• X-ray crystallography

– Cities: orientations of a crystal

– Distances: time for motors to rotate the crystal

from one orientation to the other

• High-definition video compression

– Cities: binary vectors of length 64 identifying the

summands for a particular function

– Distances: Hamming distance (the number of terms

that need to be added/subtracted to get the next

sum)

• No-Wait Flowshop Scheduling

– Cities: Length-4 vectors <c1,c2,c3,c4> of integer task lengths for a given job that consists of tasks that require 4 processors that must be used in order, where the task on processor i+1 must start as soon as the task on processor i is done.

– Distances: d(c,c’) = Increase in the finish time of the 4th processor if c’ is run immediately after c.

– Note: Not necessarily symmetric: may have d(c,c’) ≠ d(c’,c).
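One way to compute this distance, sketched under the assumption that a job occupies each processor for one contiguous interval that starts the moment it finishes on the previous processor (the no-wait rule above), and that only the immediately preceding job can constrain the next one. `no_wait_distance` is an illustrative name and the two example jobs are made up; they show the asymmetry directly.

```python
from itertools import accumulate

def no_wait_distance(c, c2):
    """Increase in the last processor's finish time when job c2 runs right after c.

    c and c2 list one task length per processor, in processor order.
    """
    done = list(accumulate(c))           # c starts at 0 and leaves processor k at done[k]
    start = [0] + list(accumulate(c2))   # c2 reaches processor k start[k] after it begins
    # Smallest delay that keeps c2 off every processor until c has left it.
    delay = max(done[k] - start[k] for k in range(len(c)))
    return delay + start[-1] - done[-1]  # c2's finish time minus c's finish time

a, b = (1, 2, 3, 4), (4, 3, 2, 1)
print(no_wait_distance(a, b), no_wait_distance(b, a))   # 1 6
```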

How Hard?

• NP-Hard for all the above applications

and many more

– [Karp, 1972]

– [Papadimitriou & Steiglitz, 1976]

– [Garey, Graham, & Johnson, 1976]

– [Papadimitriou & Kanellakis, 1978]

– …

How Hard?

Number of possible tours:

N! = 1·2·3···(N-1)·N = 2^Θ(N log N)

10! = 3,628,800

20! ≈ 2.43·10^18 (2.43 quintillion)

Dynamic Programming Solution:

O(N^2·2^N) = o(2^(N log N))

Dynamic Programming Algorithm

• For each subset C’ of the cities containing c1, and each city c ∈ C’, let f(C’,c) = Length of shortest path that is a permutation of C’, starting at c1 and ending at c.

• f({c1}, c1) = 0

• For x ∉ C’, f(C’ ∪ {x}, x) = min_{c ∈ C’} [f(C’,c) + d(c,x)].

• Optimal tour length = min_{c ∈ C} [f(C,c) + d(c, c1)].

• Running time: ~(N-1)·2^(N-1) values to be computed, at time O(N) each, for a total of O(N^2·2^N).
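The recurrence can be sketched with bitmasks. In this sketch city 0 plays the role of c1, a subset C’ is encoded as a bitmask over the remaining cities (c1 is implicit), and the table starts from f({c1, c}, c) = d(c1, c), which is what the recurrence gives from f({c1}, c1) = 0. The function name and encoding are illustrative choices.

```python
import itertools

def held_karp(d):
    """Optimal tour length for an N x N distance matrix d, in O(N^2 2^N) time."""
    n = len(d)
    if n == 1:
        return 0
    # f[(mask, c)]: shortest path that starts at city 0, visits exactly the
    # cities in mask (a subset of 1..N-1), and ends at c (a member of mask).
    f = {(1 << c, c): d[0][c] for c in range(1, n)}
    for size in range(2, n):
        for subset in itertools.combinations(range(1, n), size):
            mask = sum(1 << c for c in subset)
            for x in subset:
                rest = mask & ~(1 << x)          # C' = subset without x
                f[(mask, x)] = min(f[(rest, c)] + d[c][x]
                                   for c in subset if c != x)
    full = (1 << n) - 2                          # all cities except city 0
    return min(f[(full, c)] + d[c][0] for c in range(1, n))

# Four corners of a unit square: the optimal tour is the perimeter.
r2 = 2 ** 0.5
square = [[0, 1, r2, 1], [1, 0, 1, r2], [r2, 1, 0, 1], [1, r2, 1, 0]]
print(held_karp(square))   # 4
```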

How Hard?

Dynamic Programming Solution: O(N^2·2^N)

10^2·2^10 = 102,400

20^2·2^20 = 419,430,400

[Figures: example instances with N = 10, 100, 1000, and 10,000]

Planar Euclidean Application #1

• Cities:

– Holes to be drilled in printed circuit boards

N = 2392

Planar Euclidean Application #2

• Cities:

– Wires to be cut in a “Laser Logic”

programmable circuit

N = 7397

N = 33,810

N = 85,900

Standard Approach to Coping

with NP-Hardness:

• Approximation Algorithms

– Run quickly (polynomial-time for theory,

low-order polynomial time for practice)

– Obtain solutions that are guaranteed to be

close to optimal

– For the TSP, the latter guarantee requires the triangle inequality to hold:

d(a,c) ≤ d(a,b) + d(b,c)

Danger when No Δ-Inequality

• Theorem [Karp, 1972]: Given a graph G = (V,E), it is NP-hard to determine whether G contains a Hamiltonian circuit (a collection of edges that makes up a tour).

• Given a graph, construct a TSP instance in which

d(c,c’) = 1 if {c,c’} ∈ E, and N·2^N if {c,c’} ∉ E.

• If a Hamiltonian circuit exists, OPT = N; if not, OPT > N·2^N.

• A polynomial-time approximation algorithm that guaranteed a tour of length no more than 2^N·OPT would therefore distinguish the two cases, and so would imply P = NP.

Nearest Neighbor (NN):

1. Start with some city.

2. Repeatedly go next to the nearest unvisited neighbor of the last city added.

3. When all cities have been added, go from the last back to the first.
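A direct O(N^2) sketch of the three steps, assuming Euclidean points in the plane; the function name and the tie-breaking rule (smaller index wins) are illustrative choices.

```python
import math

def nearest_neighbor_tour(cities, start=0):
    """Nearest Neighbor in O(N^2) time; cities are (x, y) points.

    Returns the visiting order; the tour closes from the last city back to start.
    """
    unvisited = set(range(len(cities))) - {start}
    tour = [start]
    while unvisited:
        last = cities[tour[-1]]
        # Nearest unvisited city to the last city added (ties: smaller index).
        nxt = min(unvisited, key=lambda j: (math.dist(last, cities[j]), j))
        unvisited.remove(nxt)
        tour.append(nxt)
    return tour

line = [(0, 0), (1, 0), (3, 0), (6, 0), (10, 0)]
print(nearest_neighbor_tour(line, start=2))   # [2, 1, 0, 3, 4]
```

Starting in the middle of this row of cities, NN walks left first and then has to jump all the way to the right.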

Note: By the Δ-inequality, an optimal tour need not contain any crossed edges. If tour edges {A,C} and {B,D} cross at point E, then

d(A,D) ≤ d(A,E) + d(E,D) and d(B,C) ≤ d(B,E) + d(E,C), so

d(A,D) + d(B,C) ≤ (d(A,E) + d(E,D)) + (d(B,E) + d(E,C))

= (d(A,E) + d(E,C)) + (d(B,E) + d(E,D))

= d(A,C) + d(B,D)

For the Euclidean metric, the inequalities are strict (unless all relevant cities are co-linear).

• Theorem [Rosenkrantz, Stearns, & Lewis, 1977]:

– There exists a constant c, such that if instance I obeys the triangle inequality, then we have NN(I) ≤ c·log(N)·OPT(I).

– There exists a constant c’, such that for all N > 3, there are N-city instances I obeying the triangle inequality for which we have NN(I) > c’·log(N)·OPT(I).

– For any algorithm A, let RN(A) = max{A(I)/OPT(I): I is an N-city instance obeying the triangle inequality}

– Then RN(NN) = Θ(log(N)) (worst-case ratio)

Lower Bound Examples

[Figure: F1, with edge lengths 1 and 1+ε (NN starts at left, ends in middle), and Fi+1, built from two copies of Fi with edges of length 1+ε and Li/2 + 1.]

Let Li be the number of edges encountered if we travel step-by-step from the leftmost vertex in Fi to the rightmost.

L1 = 2, Li+1 = 2Li + 2

Set the distance from the rightmost vertex of Fk to the leftmost to 1+ε, and set all non-specified distances to their Δ-inequality minimum.

$$\frac{OPT(F_k)}{1+\varepsilon} \le N = L_k + 1 = 2(L_{k-1}+1)+1 = 2(2(L_{k-2}+1)+1)+1 = \cdots = 2^{k-1}L_1 + \sum_{i=0}^{k-1} 2^i = 2^k + 2^k - 1 < 2^{k+1}$$

Hence log(N) < k+1.

$$NN(F_k) \ge L_k + 1 + \sum_{i=1}^{k-1} 2^{k-1-i} L_i > 2^k + \sum_{i=1}^{k-1} 2^{k-1-i}\,2^i = 2^k + (k-1)2^{k-1} > k\,2^{k-1} > \frac{k}{4}\cdot\frac{OPT(F_k)}{1+\varepsilon} = \Omega(\log(N)\cdot OPT(F_k))$$

Upper Bound Proof (Sketch)

• Observation 1: For symmetric instances with the Δ-inequality, d(c,c’) ≤ OPT(I)/2 for all pairs of cities {c,c’}.

[Figure: the optimal tour splits into two paths P1 and P2 between c and c’.]

By the Δ-inequality, d(c,c’) ≤ length(P1) and d(c,c’) ≤ length(P2), so 2d(c,c’) ≤ length(P1) + length(P2) = OPT(I).

• Observation 2: For cities c, let L(c) be the length of the edge added when c was the last city in the tour. For all pairs {c,c’}, d(c,c’) ≥ min(L(c),L(c’)).

• Proof: Suppose without loss of generality that c is the first of c,c’ to occur in the NN tour. When c was the last city, the next city chosen, say c*, satisfied d(c,c*) ≤ d(c,c’’) for all unvisited cities c’’. Since c’ was as yet unvisited, this implies d(c,c’) ≥ d(c,c*) = L(c).

• Overall Proof Idea: We will partition C into O(log N) disjoint sets Ci such that the sum of L(c) over c ∈ Ci is at most OPT(I), implying that NN(I) = (sum of L(c) over c ∈ C) = O(log N)·OPT(I).

• Set X0 = C, i = 0 and repeat while |Xi| > 3.

• Let Ti be the set of edges in an optimal tour for Xi -- note that |Ti| = |Xi|. By the Δ-inequality, the total length of these edges is at most OPT(I).

• Let Ti’ be the set of edges in Ti with length greater than 2·OPT(I)/|Ti| -- note that |Ti’| < |Ti|/2.

• For each edge e = {c,c’} in Ti − Ti’, let f(e) be a city c’’ ∈ {c,c’} such that L(c’’) ≤ d(c,c’) (one exists by Observation 2).

• Set Ci = {f(e): e ∈ Ti − Ti’} -- note that

– the sum of L(c) over c ∈ Ci is at most the sum of length(e) over e ∈ Ti, which is at most OPT(I).

– |Ci| ≥ |Ti − Ti’|/2 ≥ |Ti|/4 = |Xi|/4 (each city is an endpoint of at most two edges of Ti).

• Set Xi+1 = Xi − Ci -- note that |Xi+1| ≤ (3/4)|Xi|.

• Given that |Xi+1| ≤ (3/4)|Xi|, i ≥ 0, this process can only continue while (3/4)^i·N > 3, i.e., while log(3/4)·i + log(N) > log(3), or equivalently while log(N) − log(3) > log(4/3)·i.

• Hence the process halts at iteration i*, where

$$i^* \le \frac{\log(N) - \log(3)}{\log(4/3)} = O(\log N)$$

• At this point there are 3 or fewer cities in Xi*+1. Put

each of them in a separate set Ci*+j, 1 ≤ j ≤ |Xi*+1|, for

which, by Observation 1, we will have L(c) ≤ OPT(I)/2.

Greedy (Multi-Fragment) (GR):

1. Sort the edges, shortest first, and treat them in that

order. Start with an empty graph.

2. While the current graph is not a TSP tour, attempt to add the next shortest edge. If it yields a vertex of degree exceeding 2 or a cycle of length less than N, discard it.

• Theorem [Ong & Moore, 1984]: For all instances I obeying the Δ-inequality, GR(I) = O(log N)·OPT(I).

• Theorem [Frieze, 1979]: There are N-city instances IN for arbitrarily large N that obey the Δ-inequality and have GR(IN) = Ω(log N / log log N)·OPT(IN).
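A sketch of Greedy that uses a union-find structure to detect premature cycles, assuming Euclidean points. Since every fragment is a path, an edge joining two different fragments between endpoints of degree at most 1 is always legal, and an edge inside one fragment is legal only as the very last edge. Sorting all N(N-1)/2 edges gives the O(N^2 log N) bound listed later; the names are illustrative.

```python
import math

def greedy_tour(cities):
    """Greedy (multi-fragment) heuristic; returns the N tour edges as (i, j) pairs."""
    n = len(cities)
    if n < 3:
        raise ValueError("need at least 3 cities")
    # Step 1: all edges, shortest first (the sort dominates: O(N^2 log N)).
    edges = sorted((math.dist(cities[i], cities[j]), i, j)
                   for i in range(n) for j in range(i + 1, n))
    degree = [0] * n
    parent = list(range(n))            # union-find: the fragment each city is in

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]   # path halving
            v = parent[v]
        return v

    chosen = []
    for _, i, j in edges:              # Step 2
        if degree[i] == 2 or degree[j] == 2:
            continue                   # would create a vertex of degree 3
        ri, rj = find(i), find(j)
        if ri == rj and len(chosen) < n - 1:
            continue                   # would close a cycle of length less than N
        chosen.append((i, j))
        degree[i] += 1
        degree[j] += 1
        parent[ri] = rj
        if len(chosen) == n:
            break                      # the fragments now form one tour
    return chosen

print(sorted(greedy_tour([(0, 0), (1, 0), (1, 1), (0, 1)])))
# [(0, 1), (0, 3), (1, 2), (2, 3)]
```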

Nearest Insertion (NI):

1. Start with a 2-city tour consisting of some city and its nearest neighbor.

2. Repeatedly insert the non-tour city that is

closest to a tour city (in the location that

yields the smallest increase in tour length).

• Theorem [Rosenkrantz, Stearns, & Lewis, 1977]:

If instance I obeys the triangle

inequality, then R(NI) = 2.

• Key Ideas of Upper Bound Proof:

– The minimum spanning tree (MST) for I is no longer

than the optimal tour, since deleting an edge from

the tour yields a spanning tree.

– The length of the NI tour is at most twice the

length of an MST.

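Suppose C is the non-tour city closest to tour city A, and B is a neighbor of A in the tour. Inserting C between A and B increases the tour length by d(A,C) + d(B,C) – d(B,A), and d(B,C) ≤ d(B,A) + d(A,C) by the Δ-inequality, so

d(A,C) + d(B,C) – d(B,A) ≤ 2d(A,C).

(A,C) would be the next edge added by Prim’s minimum spanning tree algorithm.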
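An O(N^2) sketch of Nearest Insertion, assuming Euclidean points. It keeps, for every non-tour city, its distance to the closest tour city (the quantity Prim’s algorithm maintains), so each step finds the next city in O(N) and then scans the O(N) insertion positions. Starting from city 0 and the index-based tie-breaking are illustrative choices.

```python
import math

def nearest_insertion_tour(cities):
    """Nearest Insertion in O(N^2) time; cities are (x, y) points."""
    n = len(cities)

    def d(i, j):
        return math.dist(cities[i], cities[j])

    if n < 3:
        return list(range(n))
    first = min(range(1, n), key=lambda j: d(0, j))   # city 0 and its nearest neighbor
    tour = [0, first]
    outside = set(range(n)) - {0, first}
    near = {v: min(d(v, 0), d(v, first)) for v in outside}   # distance to the tour
    while outside:
        c = min(outside, key=lambda v: (near[v], v))  # non-tour city closest to the tour
        m = len(tour)
        # Insert c into the tour edge whose replacement costs the least.
        k = min(range(m), key=lambda e: d(tour[e], c) + d(c, tour[(e + 1) % m])
                - d(tour[e], tour[(e + 1) % m]))
        tour.insert(k + 1, c)
        outside.remove(c)
        for v in outside:                             # only c can bring v closer
            near[v] = min(near[v], d(v, c))
    return tour
```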

Lower Bound Examples

[Figure: lower-bound instance whose labeled distances are 2]

NI(I) = 2N-2

OPT(I) = N+1

Another Approach

• Observation 1: Any connected graph in which

every vertex has even degree contains an

“Euler Tour” – a cycle that traverses each

edge exactly once, which can be found in

linear time.

[Problem 2: Prove this!]

• Observation 2: If the Δ-inequality holds, then traversing an Euler tour but skipping past previously-visited vertices yields a Traveling Salesman tour of no greater length.

Obtaining the Initial Graph

• Double MST algorithm (DMST):

– Combine two copies of an MST.

– Theorem [Folklore]: DMST(I) ≤ 2·OPT(I).

• Christofides algorithm (CH):

– Combine one copy of an MST with a minimum-length matching on its odd-degree vertices (there must be an even number of them since the total sum of degrees for any graph is even).

– Theorem [Christofides, 1976]: CH(I) ≤ 1.5·OPT(I).
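A sketch of the Double MST route through Observations 1 and 2, assuming Euclidean points and at least one city: Prim’s algorithm in O(N^2), both copies of each tree edge added to a multigraph (so every degree is even), Hierholzer’s algorithm for the Euler tour, then shortcutting past repeated cities. For brevity this version removes the matching edge copy with a list scan, so its Euler-tour phase is not strictly linear-time; the function name is illustrative.

```python
import math
from collections import defaultdict

def double_mst_tour(cities):
    """Double-MST heuristic: shortcut an Euler tour of two copies of an MST."""
    n = len(cities)

    def d(i, j):
        return math.dist(cities[i], cities[j])

    # Prim's algorithm in O(N^2): best[v] is the cheapest edge from v to the tree.
    in_tree = [False] * n
    best = [math.inf] * n
    link = [-1] * n
    best[0] = 0.0
    adj = defaultdict(list)        # multigraph holding each MST edge twice
    for _ in range(n):
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: best[v])
        in_tree[u] = True
        if link[u] >= 0:
            for _copy in range(2):
                adj[u].append(link[u])
                adj[link[u]].append(u)
        for v in range(n):
            if not in_tree[v] and d(u, v) < best[v]:
                best[v], link[v] = d(u, v), u
    # Hierholzer's algorithm: all degrees are even, so an Euler tour exists.
    stack, euler = [0], []
    while stack:
        u = stack[-1]
        if adj[u]:
            v = adj[u].pop()
            adj[v].remove(u)       # use up the matching copy of this edge
            stack.append(v)
        else:
            euler.append(stack.pop())
    # Shortcut: skip cities that were already visited.
    seen, tour = set(), []
    for v in euler:
        if v not in seen:
            seen.add(v)
            tour.append(v)
    return tour

print(double_mst_tour([(0, 0), (1, 0), (2, 0), (3, 0)]))   # [0, 1, 2, 3]
```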

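Optimal Tour on Odd-Degree Vertices (No longer than overall Optimal Tour, by the Δ-inequality)

Its edges alternate between two perfect matchings on the odd-degree vertices: Matching M1 + Matching M2 = Optimal Tour on Odd-Degree Vertices ≤ OPT(I)

Hence Optimal Matching ≤ min(M1,M2) ≤ OPT(I)/2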

Can we do better?

• No polynomial-time algorithm is known that has a worst-case ratio less than 3/2 for arbitrary instances satisfying the Δ-inequality.

• Assuming P ≠ NP, no polynomial-time algorithm can do better than 220/219 = 1.004566… [Papadimitriou & Vempala, 2006].

• For Euclidean and related metrics, there exists a polynomial-time approximation scheme (PTAS): For each ε > 0, a polynomial-time algorithm with worst-case ratio ≤ 1+ε. [Arora, 1998][Mitchell, 1999].

PTAS Running Times

• [Arora, STOC 1996]: O(N^(100/ε))

• [Arora, JACM 1998]: O(N(log N)^O(1/ε))

• [Rao & Smith, STOC 1998]: O(2^((1/ε)^O(1))·N + N log N)

• If ε = ½ and O(1) = 10, then 2^((1/ε)^O(1)) = 2^1024 > 2^1000.

Performance “In Practice”

• Testbed: Random Euclidean Instances

(Results appear to be reasonably well-correlated

with those for our real-world instances.)

[Figures: tours produced by Nearest Neighbor, Greedy, Smart Shortcuts, Smart-Shortcut Christofides, 2-Opt, and 3-Opt; an original tour with the result of a 2-Opt move and the results of 3-Opt moves]

2-Opt [Johnson-McGeoch Implementation]

4.2% off optimal

10,000,000 cities in 101 seconds at 2.6 GHz

3-Opt [Johnson-McGeoch Implementation]

2.4% off optimal

10,000,000 cities in 4.3 minutes at 2.6 GHz

Lin-Kernighan [Johnson-McGeoch Implementation]

1.4% off optimal

10,000,000 cities in 46 minutes at 2.6 GHz

Iterated Lin-Kernighan [J-M Implementation]

0.4% off optimal

100,000 cities in 35 minutes at 2.6 GHz

Worst-Case Running Times

• Nearest Neighbor: O(N^2)

• Greedy: O(N^2 log N)

• Nearest Insertion: O(N^2)

• Double MST: O(N^2)

• Christofides: O(N^3)

• 2-Opt: O(N^2·(number of improving moves))

• 3-Opt: O(N^3·(number of improving moves))

K-d trees [Bentley, 1975, 1990]

Data Elements

Array T: Permutation of Point indices

Array H: H[i] = index of tree leaf containing Point i

Tree Vertex k:

– Index L in Array T of First Point

– Index U in Array T of Last Point

– Widest Dimension

– Median Value in Widest Dimension

– Leaf?

– Empty?

Implicit: Index of Parent = ⌊k/2⌋, Index of Lower Child = 2k, Index of Higher Child = 2k+1

Operations

• Construct kd-tree (recursively): O(NlogN)

• Delete or temporarily delete points (without

rebalancing): O(1)

• Find nearest neighbor to one of points:

“typically” O(logN)

• Given point p and radius r, find all points p’ with

d(p,p’) ≤ r (“ball search”):

“typically” O(logN + number of points returned).

Finding Nearest Neighbor to Point p

• Initialize M = ∞. Call rnn(H[p],p,M).

• rnn(k,p,M):

– If Vertex k is a leaf,

• For each point x in vertex k’s list,

– If dist(p,x) < M, set M = dist(p,x) and Champion = x.

– Otherwise,

• Let d be vertex k’s widest dimension, m be its median value for

that dimension, and pd the value of p’s coordinate in dimension d.

• If pd > m, let NearChild = 2k+1 and FarChild = 2k.

• Otherwise, NearChild = 2k and FarChild = 2k+1.

• If NearChild has not been explored, rnn(NearChild,p,M).

• If |pd – m| < M, rnn(FarChild,p,M).

– Mark Vertex k as “explored”
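A compact 2-D sketch of these operations, assuming bucket leaves of up to 4 points, the widest dimension measured as the coordinate range, and deletion by a flag (no rebalancing). One deliberate departure: instead of the bottom-up search that starts at leaf H[p], this sketch uses the common top-down recursion from the root, with the same near-child-first order and the same |pd – m| < M pruning test. The class and method names are illustrative.

```python
import math

class KDTree:
    """2-D k-d tree: median splits on the widest dimension, bucket leaves."""

    def __init__(self, points, bucket=4):
        self.pts = points
        self.deleted = [False] * len(points)
        self.bucket = bucket
        self.root = self._build(list(range(len(points))))

    def _build(self, idx):
        if len(idx) <= self.bucket:
            return ("leaf", idx)
        # Split on the dimension in which these points are most spread out.
        dim = max((0, 1), key=lambda k: max(self.pts[i][k] for i in idx)
                  - min(self.pts[i][k] for i in idx))
        idx.sort(key=lambda i: self.pts[i][dim])
        mid = len(idx) // 2
        median = self.pts[idx[mid]][dim]
        return ("node", dim, median, self._build(idx[:mid]), self._build(idx[mid:]))

    def delete(self, i):
        self.deleted[i] = True           # O(1): no rebalancing

    def nearest(self, p, exclude=None):
        """Index of the nearest undeleted point to p, skipping index `exclude`."""
        best = [math.inf, None]          # [M, Champion]

        def rnn(node):
            if node[0] == "leaf":
                for i in node[1]:
                    if not self.deleted[i] and i != exclude:
                        dist = math.dist(p, self.pts[i])
                        if dist < best[0]:
                            best[0], best[1] = dist, i
                return
            _, dim, median, low, high = node
            near, far = (high, low) if p[dim] > median else (low, high)
            rnn(near)
            if abs(p[dim] - median) < best[0]:   # ball of radius M crosses the split
                rnn(far)

        rnn(self.root)
        return best[1]
```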

Nearest Neighbor Algorithm in

“O(NlogN)”

• Construct kd-Tree on cities.

• Pick a starting city cπ(1).

• While there remains an unvisited city, let the

current last city be cπ(i).

– Use the kd-Tree to find the nearest unvisited city to cπ(i), and make it cπ(i+1).

– Delete cπ(i) from the kd-Tree.

Greedy (Lazily) in “O(NlogN)”

Initialization:

• Construct a kd-tree on the cities. Let G = (C,∅). We will maintain

the property that the kd-tree contains only vertices with degree

0 or 1 in G.

• For each city c, let n(c) be its nearest “legal” neighbor. A

neighbor is legal if adding edge {c,n(c)} to the current graph does

not create

– A vertex of degree 3 or

– A cycle of length less than N.

• Put triples (c,n(c),dist(c,n(c))) in a priority queue, sorted in order

of increasing distance.

• For each city c, define otherend(c) = c.

Greedy (Lazily) in “O(NlogN)”

While not yet a tour:

• Extract the minimum (c, n(c), d(c,n(c))) triple from the priority queue.

• If {c,n(c)} is a legal edge, add it to G, and if either c or n(c) now has

degree 2, delete it from the kd-tree.

• If c has degree 2, continue.

• Otherwise n(c) = otherend(c) and so completes a short cycle.

– Temporarily delete otherend(c) from the kd-Tree.

– Find the new nearest neighbor n’(c) of c.

– Add (c, n’(c), dist(c,n’(c))) to the priority queue.

– Put otherend(c) back in the kd-Tree.

“Nearest Insertion” in “O(NlogN)”

• Straightforward if we instead implement the variant in

which each new city is inserted next to its nearest

neighbor in the tour (“Nearest Addition”) – upper bound

proof still goes through, but performance suffers.

• Seemingly no way to avoid Ω(N^2) if we need to find the

best place to insert.

• Essentially as good performance can be obtained using

Jon Bentley’s “Nearest Addition+” variant:

– If c is to be inserted and x is the nearest city in the tour to c,

choose the best insertion that places x next to a city x’ with

d(c,x’) ≤ 2d(c,x).

– Idea: Use ball search to find candidates for x’.

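2-Opt: Organizing the Search

• There are N(N-3)/2 possible 2-Opt moves.

• Try each city A in turn around the current tour until an improving move is found -- take the first one found. If none is found after all cities have been tried for A, halt.

• For a given A, with tour neighbor B, try all C not adjacent to A or B in the current tour (N-3 possibilities).

[Figure: the 2-Opt move that deletes tour edges {A,B} and {C,D}; reconnecting them the wrong way “does not work”.]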

If this is an improving move, we cannot have both d(A,B) ≤ d(A,C) and d(D,C) ≤ d(D,B), since this would imply d(A,C) + d(D,B) ≥ d(A,B) + d(D,C).

Consequently, for a given choice of A and B, we only need to consider those C with d(A,C) < d(A,B) -- possibly a much smaller number than N. (A move that fails this test from A passes it when D plays the role of A.)
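A sketch of this search organization, assuming Euclidean points and an array tour with a position index. Each city’s other cities are presorted by distance (Θ(N^2 log N) preprocessing), and for a given A and B the candidate loop stops at the first C with d(A,C) ≥ d(A,B). Each A is tried with B as its successor and then as its predecessor, so a move missed from one endpoint is found from the other, and a move is applied by reversing the array segment between the two deleted edges. Names and the 1e-12 improvement threshold are illustrative choices.

```python
import math

def two_opt(cities, tour):
    """First-improvement 2-Opt using the d(A,C) < d(A,B) pruning rule."""
    n = len(tour)

    def d(i, j):
        return math.dist(cities[i], cities[j])

    # For each city A, all other cities sorted by d(A, C): Theta(N^2 log N).
    neighbors = [sorted((c for c in range(n) if c != a), key=lambda c: d(a, c))
                 for a in range(n)]
    tour = list(tour)
    pos = [0] * n                       # pos[c] = index of city c in tour
    for k, c in enumerate(tour):
        pos[c] = k

    def reverse(i, j):                  # reverse tour[i..j], keeping pos in sync
        while i < j:
            tour[i], tour[j] = tour[j], tour[i]
            pos[tour[i]], pos[tour[j]] = i, j
            i, j = i + 1, j - 1

    def try_city(a):
        """Apply the first improving move found from A; report whether one was."""
        for step in (1, -1):            # B = successor of A, then predecessor
            b = tour[(pos[a] + step) % n]
            for c in neighbors[a]:
                if d(a, c) >= d(a, b):
                    break               # every later C is at least as far from A
                dd = tour[(pos[c] + step) % n]   # D: C's neighbor on the same side
                if d(a, b) + d(c, dd) - d(a, c) - d(b, dd) > 1e-12:
                    # Edges {A,B} and {C,D} start at indices i and j; reversing
                    # the path between them replaces them with {A,C} and {B,D}.
                    i = pos[a] if step == 1 else pos[b]
                    j = pos[c] if step == 1 else pos[dd]
                    reverse(min(i, j) + 1, max(i, j))
                    return True
        return False

    while any(try_city(a) for a in range(n)):
        pass                            # restart the scan after every move
    return tour

print(two_opt([(0, 0), (1, 0), (1, 1), (0, 1)], [0, 2, 1, 3]))   # [0, 1, 2, 3]
```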

Taking Advantage

• First Idea (trading preprocessing time for later speed):

– Preprocess by constructing for each city A, a sorted list of the

other cities C in increasing order by d(A,C).

– Preprocessing Time = Θ(N^2 log N)

• Second Idea (trading quality for even more speed):

– Preprocess by constructing for each city A a list of its K

nearest neighbors C in increasing order by d(A,C), and only

consider those cities as candidates for C when processing A

(and store distances to save later re-computations).

– Preprocessing Time = “Θ(KN log N)” [using kd-trees]

– Typically, neighbor lists with K = 20 or 40 are fine.

One More Tradeoff: Don’t Look Bits

• Suppose that there was no improving move from city A the

last time we checked, and it still has the same tour neighbors as

before.

• Then it is unlikely that we will find an improving move this time

either.

• So, don’t even look for one.

• Can be implemented by storing a don’t-look-bit for each city A,

initially set to 0.

– Set to 1 whenever the search from A for an improving move fails.

– Set to 0 whenever a move is made that changes one of A’s neighbors

in the tour.

• Rotate through values of A as before, but we now only need

constant time to process any city whose don’t-look-bit equals 1.

• Alternatively (and potentially faster): Store cities A with don’t-look-bit[A] = 0 in a queue, and process them in queue order.
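The neighbor-list and don’t-look-bit ideas can be combined in one sketch, again assuming Euclidean points and an array tour. Here a queue holds exactly the cities whose don’t-look bit is 0: a city leaves the queue when it is processed (its bit becomes 1 unless a move is found), and the four endpoints of the edges changed by a move are re-queued. For brevity the K-nearest-neighbor lists are built by brute-force sorting rather than with kd-trees; the default `K = 8` and all names are illustrative.

```python
import math
from collections import deque

def two_opt_dlb(cities, tour, K=8):
    """2-Opt with K-nearest-neighbor candidate lists and a don't-look-bit queue."""
    n = len(tour)

    def d(i, j):
        return math.dist(cities[i], cities[j])

    # K nearest neighbors of each city, with distances stored for reuse.
    cand = [sorted((d(a, c), c) for c in range(n) if c != a)[:K] for a in range(n)]
    tour = list(tour)
    pos = [0] * n
    for k, c in enumerate(tour):
        pos[c] = k

    def reverse(i, j):                  # reverse tour[i..j], keeping pos in sync
        while i < j:
            tour[i], tour[j] = tour[j], tour[i]
            pos[tour[i]], pos[tour[j]] = i, j
            i, j = i + 1, j - 1

    queue = deque(tour)                 # cities whose don't-look bit is 0
    queued = [True] * n
    while queue:
        a = queue.popleft()
        queued[a] = False               # bit becomes 1 unless a move is found
        for step in (1, -1):            # B = successor of A, then predecessor
            b = tour[(pos[a] + step) % n]
            dab = d(a, b)
            move = None
            for dac, c in cand[a]:
                if dac >= dab:
                    break
                dd = tour[(pos[c] + step) % n]
                if dab + d(c, dd) - dac - d(b, dd) > 1e-12:
                    move = (c, dd)
                    break
            if move:
                c, dd = move
                i = pos[a] if step == 1 else pos[b]
                j = pos[c] if step == 1 else pos[dd]
                reverse(min(i, j) + 1, max(i, j))
                for v in (a, b, c, dd):  # their tour neighbors changed: bit -> 0
                    if not queued[v]:
                        queue.append(v)
                        queued[v] = True
                break
    return tour
```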

Remaining Major Bottleneck:

The Tour Representation

• Standard Representation: Doubly-Linked List.

[Figure: doubly-linked list nodes, each with fields Prev, Name, Next]

• Need double-linking (both Next and Prev) in order to

identify legal 2-Opt moves in constant time:

– If the first deleted edge is Next[A], then the second must

be Prev[C], and vice versa.

• Worst-Case cost of performing a move: Θ(N).

Can flip either half, but, empirically, even the length of the shorter one still grows as Θ(N^0.7) [Bentley, 1992]

Tour Data Structure

• Operations

– Initialize -- Build initial tour representation.

– Flip(a,b) -- Reverse segment from a to b.

• Queries

– Prev(c) -- Returns city before c in tour.

– Next(c) -- Returns city after c in tour.

– Between(a,b,c) – “Yes” if and only if c is

encountered before b when traversing tour

forward from a.

Why Between(a,b,c)?

[Figure: a 3-Opt move involving cities A, B, C, and D, annotated “Can’t break next”, “Can’t break prev”, and “Doesn’t work!”.]

• Whether a given choice of D yields a valid 3-Opt move depends on whether Between(A,C,D) = Yes: only D with Between(A,C,D) = Yes are viable.

Tour Data Structure

• Doubly-Linked List

– Initialize, Flip: O(N)

– Next, Prev: O(1)

– Between: O(N)

• O(1) if we add an index to each city node

• Array (A[i] is ith city in tour)

– Initialize, Flip: O(N)

– Next, Prev: O(1)

– Between: O(1)
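A sketch of the array representation with an added position index, assuming cities are numbered 0..N−1. `between` follows the definition given earlier with strict order (c must come strictly before b, walking forward from a), and `flip` reverses the forward segment from a to b, wrapping around the end of the array when needed, in time proportional to the segment length. Class and method names are illustrative.

```python
class ArrayTour:
    """Array tour: A[i] is the i-th city of the tour, pos[c] is the index of city c."""

    def __init__(self, order):
        self.A = list(order)
        self.pos = [0] * len(self.A)
        for i, c in enumerate(self.A):
            self.pos[c] = i

    def next(self, c):                       # O(1)
        return self.A[(self.pos[c] + 1) % len(self.A)]

    def prev(self, c):                       # O(1)
        return self.A[(self.pos[c] - 1) % len(self.A)]

    def between(self, a, b, c):              # O(1)
        """True iff c is met strictly before b when walking forward from a."""
        n = len(self.A)
        return (self.pos[c] - self.pos[a]) % n < (self.pos[b] - self.pos[a]) % n

    def flip(self, a, b):                    # O(length of the segment)
        """Reverse the segment running forward from a to b (it may wrap around)."""
        n = len(self.A)
        i = self.pos[a]
        j = i + (self.pos[b] - i) % n        # indices past the end wrap via % n
        while i < j:
            x, y = self.A[i % n], self.A[j % n]
            self.A[i % n], self.A[j % n] = y, x
            self.pos[y], self.pos[x] = i % n, j % n
            i, j = i + 1, j - 1

t = ArrayTour(range(6))
t.flip(4, 1)          # segment 4, 5, 0, 1 wraps around the end of the array
print(t.A)            # [5, 4, 2, 3, 1, 0]
```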

2-Level Tree

• Divide tour into sqrt(N) segments, each

of length sqrt(N).

• For each segment, store

– A bit indicating whether that segment

should be traversed in forward or reversed

direction

– Pointers to the next and previous segments

in the tour.

2-Level Tree

Parent Structure: Prev Segment, Next Segment, Size, Seq. #, Start of Segment, End of Segment

Segment Element Structure: Parent, Prev, Next, Seq. #, City

2-Level Tree

• Initialize: O(N)

• Flip: O(sqrt(N))

• Next, Prev: O(1)

• Between: O(1)

In practice, beats arrays once N > 1000

Lower Bounds

[Fredman, Johnson, McGeoch, & Ostheimer, 1995]

• In the cell probe model of computation, any data structure must take worst-case amortized time per operation of

Ω(log N / log log N)

Splay Tree representation

• Initialize: O(NlogN)

• Flip, Next, Prev, Between:

O(log N)

In practice, beats 2-Level Trees only

when N > 1,000,000

Improving on 3-Opt

• K-Opt (k > 3)

– Small tour improvement for major running time

increase.

• Lin-Kernighan [1973]

– Drastically pruned k-Opt, but for values of k up to N.

• Iterated Lin-Kernighan

[Martin, Otto, & Felten, 1991], [Johnson, 1990]

– Run Lin-Kernighan

– Apply a random 3-Opt move to the resulting tour

– Repeat above loop f(N) times (f(N) = N/10, N, 10N, ...)

For more on TSP algorithm performance, see the website for the

DIMACS TSP Challenge:

http://www2.research.att.com/~dsj/chtsp/index.html/

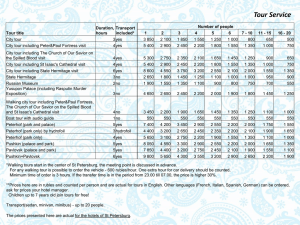

Comparison: Smart-Shortcut Christofides versus 2-Opt

[Figures: tour length and normalized running time for N = 1000 and N = 10,000 “Random Clustered Instances”]

Estimating Running-Time Growth Rate for 2-Opt

[Figures: running time plotted as Microseconds/N, Microseconds/(N log N), and Microseconds/N^1.25]