AP Statistics


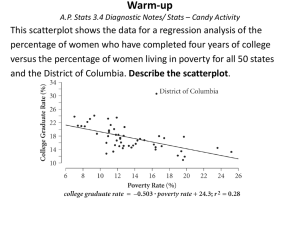

AP Statistics Topic 3: Summary of Bivariate Data

Overview of this Topic
In Topic 2 we studied the analysis of univariate data. Now we will study bivariate data:
• Observe 2 characteristics on each observational unit
Graphically display the data:
• Scatterplot
• Form, strength and direction
Numerical summary of the data:
• Pearson’s correlation coefficient, r
• Coefficient of determination, $r^2$
• Least squares regression line
Least squares regression lines from data and from Minitab output

Scatterplots
A scatterplot is a visual display of bivariate data. Describe the scatterplot:
• Form: linear or non-linear
• Direction: positive, negative, or no clear direction
• Strength: strong, moderate, or weak
• Look for clustering of data
• Look for data points that fall far from the body of data
• Context
We can incorporate qualitative information into our scatterplots.

Pearson’s Correlation Coefficient
We’ve collected bivariate data, created a scatterplot, and described it. Now we’re ready to perform some numerical summaries. The first is Pearson’s correlation coefficient, r.

Pearson’s Correlation Coefficient
Pearson’s correlation coefficient, more often referred to simply as the correlation coefficient, r, is a statistic. The population correlation coefficient, ρ, is a parameter. r is a numerical measure of the strength and direction of the linear association between the two variables we are studying.

Properties of r
• The value of r does not depend on the unit of measurement for either variable.
• The value of r does not depend on which of the two variables is considered the independent variable.
• The value of r is between −1 and +1.
• r = +1 (or −1) only when the data points lie exactly on a straight line that slopes upward (or downward).
• The value of r is a measure of the extent to which the variables are linearly related.
• Correlation does not imply causation.
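The properties of r above can be checked numerically. Below is a minimal Python sketch (Python is our choice here; these notes themselves use the TI-83/84 and Minitab). It computes r as the sum of z-score products divided by n − 1. The helper name `pearson_r` and the height/weight values are hypothetical, made up only for illustration; the unit-conversion factors are approximate, but any positive rescaling leaves r unchanged.

```python
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson's r: sum of z_x * z_y divided by n - 1 (sample standard deviations)."""
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    s_x, s_y = stdev(x), stdev(y)
    return sum(((xi - x_bar) / s_x) * ((yi - y_bar) / s_y) for xi, yi in zip(x, y)) / (n - 1)


# Hypothetical illustrative data (not from these notes): heights (in) and weights (lb)
heights_in = [60, 62, 64, 66, 68, 70]
weights_lb = [115, 120, 130, 135, 150, 155]

r = pearson_r(heights_in, weights_lb)

# Changing units (inches -> cm, pounds -> kg, approximate factors) leaves r unchanged
heights_cm = [2.54 * h for h in heights_in]
weights_kg = [0.4536 * w for w in weights_lb]
r_metric = pearson_r(heights_cm, weights_kg)

# Swapping which variable plays the role of x leaves r unchanged
r_swapped = pearson_r(weights_lb, heights_in)

# Points exactly on an upward / downward line give r = +1 / -1
r_up = pearson_r(heights_in, [3 * h + 2 for h in heights_in])
r_down = pearson_r(heights_in, [-2 * h + 7 for h in heights_in])

print(f"r = {r:.4f}, metric units r = {r_metric:.4f}, swapped r = {r_swapped:.4f}")
print(f"exact upward line r = {r_up:.4f}, exact downward line r = {r_down:.4f}")
```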
Calculating r
$$r = \frac{\sum z_x z_y}{n-1}, \qquad z_x = \frac{x_i - \bar{x}}{s_x}, \qquad z_y = \frac{y_i - \bar{y}}{s_y}$$
[Figure: output of running the LinReg function on the TI-83/84; Corr program]

Fitting a line
Up to now we’ve:
• Collected data
• Created a scatterplot
• Described the scatterplot
• Summarized the linear association with r
Now we’d like to summarize our linear scatterplot with a straight line. Since we’ll be using this line for predictions, it now becomes important to identify an explanatory variable and a response variable.

How do we fit a ‘best’ line?
Our line should be a good summary of our data and will be used as a prediction tool. We’d like a line that goes through all our data points; if that’s not possible, at least a line that is close to all the points.

Sketch a Scatterplot
Sketch a scatterplot for the following data, then fit the line that you think fits best.
M   730  600  740  570  620  720  620  650  760  550
V   790  590  700  530  540  780  640  620  700  610
M   710  640  590  680  770  540  540  520  710  740
V   670  470  490  720  650  590  600  540  560  650
There are many lines that could be chosen as a reasonable fit. A standardized approach is essential so that different analysts working with the same set of data will produce the same fit.

Least Squares Regression Line
Least squares regression (LSR) is the most common method for finding the best-fit line. As the name implies, the LSR line is the line whose sum of squared vertical deviations is the smallest among all possible lines.

Least Squares Regression Line Terminology
LSRL: $\hat{y} = a + bx$
Characteristics:
• $\sum e_i = 0$, where $e_i = y_i - \hat{y}_i$ is the residual
• $\sum e_i^2$ is minimized
• Passes through $(\bar{x}, \bar{y})$
• Close connection between correlation and slope: $b = r\,\dfrac{s_y}{s_x}$
So what’s important here?
• Identify the explanatory and response variables
• The form of your model
• Determine the coefficients
• Interpret the coefficients
So how do we calculate the coefficients? By hand? What, are you nuts?
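The formula for r and the LSRL characteristics can be checked with a short Python sketch on the M/V data from the ‘Sketch a Scatterplot’ slide. The notes do not say which variable is explanatory, so treating M as x and V as y is an assumption for illustration. The sketch computes r from z-scores, then $b = r\,s_y/s_x$ and $a = \bar{y} - b\bar{x}$ (the intercept follows from the line passing through $(\bar{x}, \bar{y})$); `sse` is a hypothetical helper name.

```python
from statistics import mean, stdev

# M/V data from the "Sketch a Scatterplot" slide; M as x and V as y is an assumption
M = [730, 600, 740, 570, 620, 720, 620, 650, 760, 550,
     710, 640, 590, 680, 770, 540, 540, 520, 710, 740]
V = [790, 590, 700, 530, 540, 780, 640, 620, 700, 610,
     670, 470, 490, 720, 650, 590, 600, 540, 560, 650]

n = len(M)
x_bar, y_bar = mean(M), mean(V)
s_x, s_y = stdev(M), stdev(V)

# r = (sum of z_x * z_y) / (n - 1)
r = sum(((x - x_bar) / s_x) * ((y - y_bar) / s_y) for x, y in zip(M, V)) / (n - 1)

# LSRL y-hat = a + b x: slope from r, intercept from passing through (x-bar, y-bar)
b = r * s_y / s_x
a = y_bar - b * x_bar

# Residuals e_i = y_i - y-hat_i
residuals = [y - (a + b * x) for x, y in zip(M, V)]


def sse(intercept, slope):
    """Sum of squared vertical deviations of the data from any candidate line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(M, V))


print(f"r = {r:.4f}; LSRL: y-hat = {a:.2f} + {b:.4f} x")
print(f"sum of residuals = {sum(residuals):.2e}")  # zero up to rounding error
print(f"LSRL at x-bar = {a + b * x_bar:.2f}; y-bar = {y_bar}")
# Tilting the line (still through the means) only increases the sum of squares
print(sse(a, b) < sse(y_bar - (b + 0.05) * x_bar, b + 0.05))
```

Tilting the slope in either direction while keeping the line through $(\bar{x}, \bar{y})$ increases the sum of squared deviations, which is the "least squares" property in action.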
We’ll always use the graphing calculator (or Minitab output). So let’s do a regression on the graphing calculator; you’ll do an LSRL activity for homework.

LSR on TI-83
• Identify the explanatory and response variables and enter the data into lists
• Create your scatterplot
• Now calculate your LSRL: STAT – CALC – LinReg(a+bx) – ENTER, then X-list – comma – Y-list – comma – VARS – Y-VARS – Function – Y1 – ENTER – ENTER
• Now display your scatterplot; the LSRL will also be graphed

Interpretation of Coefficients
Form of your LSRL:
• $\hat{y} = a + bx$; define your variables
• Predicted response variable = a + b(explanatory variable)
Interpretation of b:
• b is the slope of your LSRL
• Basic Algebra I interpretation: for each one-unit increase in the explanatory variable, the predicted response changes by b units
Interpretation of a:
• a is the y-intercept
• Basic Algebra I interpretation: a is the predicted response when the explanatory variable equals 0
• Put your interpretation into the context of the problem
• Sometimes the value of a ‘makes sense’; other times it has no practical interpretation (for example, when x = 0 lies far outside the range of the data)

Assessing the fit of a line
So far we’ve:
• Collected bivariate data
• Displayed the data in a scatterplot
• Calculated the correlation coefficient
• Found a best-fit line using the method of least squares regression
Now we want to assess the fit of our best-fit line:
• Are there any unusual aspects of the data that we want to address before we use our line to predict?
• How accurate can we expect predictions based on the regression to be?

Residuals
We’re going to use the residuals created by the LSR to assess the fit and to give us a sense of how accurate our predictions will be.
• What are the residuals? A residual is the observed y minus the predicted y: $e_i = y_i - \hat{y}_i$.
• Where are they stored in our calculator? After LinReg runs, the TI-83/84 stores them in the RESID list.

Assessing the fit of a line
We create and inspect the residual plot for our regression. The residual plot is a scatterplot of the (x, residual) pairs. What are we looking for?
• Isolated points or patterns in the residual plot indicate potential problems

Why the residual plot?
Why do we inspect the residual plot and not just the scatterplot of our original data?
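To see why the residual plot matters, here is a minimal Python sketch that fits a straight line to the ‘Rice Paddy’ data (listed later in these notes), computes each residual as $y - \hat{y}$, and prints a crude text residual plot. For these data the largest negative residuals sit at both ends and the residuals in the middle are mostly positive: a curved pattern suggesting a line is not an appropriate summary. The text-bar scaling (one character per 50 units) is an arbitrary choice.

```python
from statistics import mean

# 'Rice Paddy' data: time between flowering and harvesting (x) and yield (y)
time = list(range(16, 47, 2))  # 16, 18, ..., 46
paddy_yield = [2508, 2518, 3304, 3423, 3057, 3190, 3500, 3883,
               3823, 3646, 3708, 3333, 3517, 3214, 3103, 2776]

# Least squares line y-hat = a + b x
x_bar, y_bar = mean(time), mean(paddy_yield)
b = (sum((x - x_bar) * (y - y_bar) for x, y in zip(time, paddy_yield))
     / sum((x - x_bar) ** 2 for x in time))
a = y_bar - b * x_bar

# Residual = observed y minus predicted y-hat
residuals = [y - (a + b * x) for x, y in zip(time, paddy_yield)]

# Crude text residual plot: '+' bars lie above the line, '-' bars below
for x, e in zip(time, residuals):
    bar = ("+" if e > 0 else "-") * round(abs(e) / 50)
    print(f"x = {x:2d}  residual = {e:8.1f}  {bar}")
```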
Sometimes it’s easier to see curvature or problem points in a residual plot.
[Example: Heights and Weights of American Women]

To summarize …
We determine the appropriateness of our least squares regression line by inspecting the residual plot. We look for patterns and unusual values:
• RANDOMNESS = GOOD
• PATTERNS/UNUSUAL VALUES = BAD

Assess accuracy of line
Once we determine that our LSR line is an appropriate summary of our data, we want to assess the accuracy of its predictions:
• Are predictions made with the additional variable better than predictions made without knowledge of the second variable?

Two numerical measures
The two numerical measures we use in this part of our assessment are:
• $r^2$: the coefficient of determination
• $s_e$: the standard deviation about the regression line

Coefficient of Determination
The coefficient of determination is the proportion of the variability in y that is explained by the LSRL, that is, the proportion of variation attributed to the linear association between the two variables. It is one of the outputs when we run our regression.
$$0 \le r^2 \le 1$$

Let’s digress …
Let’s use our post-knee-surgery range of motion data. Let’s say we wanted to predict range of motion but only had the range of motion data, not the ages of the patients. How?

Now let’s say you also have additional information: the ages of the patients. We’ve already seen that there is a linear association between age and range of motion. When we fit a line to this bivariate data, how do the residuals compare?

SSR vs SST
Here SSR is the sum of squared residuals about the LSRL, $\sum (y_i - \hat{y}_i)^2$, and SST is the total sum of squared deviations about the mean, $\sum (y_i - \bar{y})^2$.

Coefficient of Determination
If we look at the ratio SSR/SST, can you interpret it? Now subtract this value from 1 and give an interpretation of the result. It’s equal to R-sq, the coefficient of determination:
$$r^2 = 1 - \frac{SSR}{SST}$$

Standard Deviation about the LSRL
The coefficient of determination compares the variation about the best-fit line to the overall variation in y. A high R-sq value does not guarantee that the deviations from the line are small in an absolute sense.
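The SSR vs SST reasoning can be made concrete with a short sketch. The knee-surgery data are not reproduced in these notes, so this Python example uses the M/V data from the ‘Sketch a Scatterplot’ slide instead, again assuming M is the explanatory variable. SST totals the squared errors when every y is predicted by $\bar{y}$ (no knowledge of x); SSR totals them when the LSRL is used. The sketch also computes the second measure, $s_e = \sqrt{SSR/(n-2)}$.

```python
import math
from statistics import mean

# M/V data from the "Sketch a Scatterplot" slide (M as x, V as y: an assumption)
M = [730, 600, 740, 570, 620, 720, 620, 650, 760, 550,
     710, 640, 590, 680, 770, 540, 540, 520, 710, 740]
V = [790, 590, 700, 530, 540, 780, 640, 620, 700, 610,
     670, 470, 490, 720, 650, 590, 600, 540, 560, 650]

n = len(M)
x_bar, y_bar = mean(M), mean(V)
s_xx = sum((x - x_bar) ** 2 for x in M)
s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(M, V))

# LSRL coefficients
b = s_xy / s_xx
a = y_bar - b * x_bar

# SST: squared errors when every y is predicted by y-bar (ignoring x)
sst = sum((y - y_bar) ** 2 for y in V)
# SSR: squared residuals when y is predicted by the LSRL (using x)
ssr = sum((y - (a + b * x)) ** 2 for x, y in zip(M, V))

r = s_xy / math.sqrt(s_xx * sst)
r_sq = 1 - ssr / sst            # coefficient of determination
s_e = math.sqrt(ssr / (n - 2))  # standard deviation about the LSRL

print(f"SSR/SST = {ssr / sst:.4f} (proportion of variation NOT explained by the line)")
print(f"R-sq = 1 - SSR/SST = {r_sq:.4f}; r squared = {r ** 2:.4f}")
print(f"s_e = {s_e:.2f}")
```

The two printed R-sq values agree, which is the link between the correlation coefficient and the coefficient of determination.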
The standard deviation about the LSRL, $s_e$, is the typical amount by which an observation deviates from the LSRL.

Standard Deviation about the LSRL
This value is analogous to the standard deviation:
$$s = \sqrt{\frac{\sum (x - \bar{x})^2}{n-1}}, \qquad s_e = \sqrt{\frac{SSR}{n-2}}$$

Minitab Output
Minitab is a desktop statistics software package. You are responsible for reading and using Minitab output. Things you can be expected to perform:
• Determine the LSR equation
• Determine $s_e$
• Determine R-sq
• Determine R-sq from the ANOVA table

Non-linear Relationships and Transformations
If our data have a linear pattern in a scatterplot, our approach is to summarize the data with a line using the method of least squares. What if our data do not have a linear pattern, as revealed by the scatterplot or the residual plot? Look at the ‘Rice Paddy’ data (L1, L2).

Rice Paddy
Is the time between flowering and harvesting related to the yield of a paddy?
Time Btwn   Yield     Time Btwn   Yield
   16       2508         32       3823
   18       2518         34       3646
   20       3304         36       3708
   22       3423         38       3333
   24       3057         40       3517
   26       3190         42       3214
   28       3500         44       3103
   30       3883         46       2776

Nonlinear Relationships
We have 2 choices:
• Fit a nonlinear regression
• Transform the data
Ex: Fit a nonlinear regression to the ‘Rice Paddy’ data, then inspect the residuals and $R^2$.

Transforming the Data
An alternative is to transform the data so that the scatterplot of the transformed data is linear, and then perform a linear regression. We then ‘back-transform’ when we use the LSRL for predictions. Let’s look at an example.

Ex: River Velocity and Distance from Shore
Is it reasonable to fit a line to this data? If we wanted to transform our data:
• Which variable should we transform?
• What type of transformation should we use?

River Water Velocity
As fans of white-water rafting know, a river flows more slowly close to its banks. Let’s look at the relationship between water velocity (cm/sec) and distance from shore (m).
Distance (m)        0.5    1.5    2.5    3.5    4.5    5.5    6.5    7.5    8.5    9.5
Velocity (cm/sec)   22     23.18  25.48  25.25  27.15  27.83  28.49  28.18  28.50  28.63

Transformations
Which variable to transform?
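Fitting a nonlinear (here quadratic) regression to the ‘Rice Paddy’ data can be sketched in plain Python by solving the least squares normal equations. This follows the same least-squares idea as the calculator's quadratic regression (QuadReg), but it is not a reproduction of the calculator's internals; `solve`, `poly_fit` and `r_squared` are hypothetical helper names. Centering time at its mean keeps the arithmetic well conditioned; it changes how the intercept and linear coefficient are expressed but not the fitted curve, the $u^2$ coefficient, or R-sq.

```python
from statistics import mean

# 'Rice Paddy' data: time between flowering and harvesting (x) and yield (y)
time = list(range(16, 47, 2))  # 16, 18, ..., 46
paddy_yield = [2508, 2518, 3304, 3423, 3057, 3190, 3500, 3883,
               3823, 3646, 3708, 3333, 3517, 3214, 3103, 2776]


def solve(A, rhs):
    """Solve the small linear system A c = rhs by Gaussian elimination with partial pivoting."""
    m = len(rhs)
    aug = [row[:] + [v] for row, v in zip(A, rhs)]  # augmented matrix
    for col in range(m):
        pivot = max(range(col, m), key=lambda i: abs(aug[i][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for i in range(col + 1, m):
            factor = aug[i][col] / aug[col][col]
            for j in range(col, m + 1):
                aug[i][j] -= factor * aug[col][j]
    c = [0.0] * m
    for i in reversed(range(m)):  # back-substitution
        c[i] = (aug[i][m] - sum(aug[i][j] * c[j] for j in range(i + 1, m))) / aug[i][i]
    return c


def poly_fit(x, y, degree):
    """Least squares polynomial fit via the normal equations; returns [c0, c1, ...]."""
    powers = [[xi ** k for k in range(degree + 1)] for xi in x]
    A = [[sum(p[j] * p[k] for p in powers) for k in range(degree + 1)]
         for j in range(degree + 1)]
    rhs = [sum(p[j] * yi for p, yi in zip(powers, y)) for j in range(degree + 1)]
    return solve(A, rhs)


def r_squared(x, y, coeffs):
    """R-sq = 1 - SSR/SST for a fitted polynomial."""
    y_bar = mean(y)
    predicted = [sum(c * xi ** k for k, c in enumerate(coeffs)) for xi in x]
    ssr = sum((yi - pi) ** 2 for yi, pi in zip(y, predicted))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ssr / sst


# Center time at its mean: u = x - x-bar
x_bar = mean(time)
u = [x - x_bar for x in time]

linear = poly_fit(u, paddy_yield, 1)
quadratic = poly_fit(u, paddy_yield, 2)

print(f"linear:    R-sq = {r_squared(u, paddy_yield, linear):.3f}")
print(f"quadratic: R-sq = {r_squared(u, paddy_yield, quadratic):.3f}, "
      f"coefficient of u^2 = {quadratic[2]:.3f}")
```

A negative $u^2$ coefficient means the fitted curve bends downward, matching the rise-then-fall in yield; the quadratic R-sq is higher than the linear one, and inspecting the quadratic fit's residuals is still the next step.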
• Explanatory and/or response variable
• Transform, then redraw the scatterplot
Which transformation to use?
• Use the transformation ladder and the shape of your scatterplot
Transform the data, inspect the scatterplot, and follow the steps for LSR. Then use your LSRL to make a prediction:
• What is the velocity of the river 9 m from the bank?

Let’s do another example
In the previous problem, we only transformed the explanatory variable. Consequently the ‘back-transformation’ was easy. Let’s do another example where we transform both variables, run a regression, and then make a prediction.

Tortilla Chips: Relationship between Frying Time and Moisture Content
No tortilla chip lover likes soggy chips, so it’s important to find characteristics of the production process that produce chips with an appealing texture. Data on frying time (sec) and moisture content (%) are given below.
Frying Time (sec)      5     10    15    20    25    30    45    60
Moisture Content (%)   16.3  9.7   8.1   4.2   3.4   2.9   1.9   1.3
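Both transformation examples can be sketched in Python. The notes do not say which rung of the transformation ladder to use, so the choices here are assumptions: $\log_{10}$(distance) for the river data (explanatory variable only) and natural logs of both variables for the tortilla data. The 40-second frying time is a hypothetical value, chosen only to show the back-transformation with `exp()`; `lsrl` and `corr` are hypothetical helper names.

```python
import math
from statistics import mean


def lsrl(x, y):
    """Least squares line y-hat = a + b x; returns (a, b)."""
    x_bar, y_bar = mean(x), mean(y)
    b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
    return y_bar - b * x_bar, b


def corr(x, y):
    """Pearson's r, used to compare linearity before and after transforming."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


# River data: distance from shore (m) and velocity (cm/sec)
distance = [0.5, 1.5, 2.5, 3.5, 4.5, 5.5, 6.5, 7.5, 8.5, 9.5]
velocity = [22, 23.18, 25.48, 25.25, 27.15, 27.83, 28.49, 28.18, 28.50, 28.63]

# Assumed transformation: log10 of the explanatory variable only
log_dist = [math.log10(d) for d in distance]
a, b = lsrl(log_dist, velocity)
# Only x was transformed, so the predicted velocity needs no back-transformation
v_at_9 = a + b * math.log10(9)
print(f"r before = {corr(distance, velocity):.3f}, after = {corr(log_dist, velocity):.3f}")
print(f"predicted velocity 9 m from the bank = {v_at_9:.2f} cm/sec")

# Tortilla chip data: frying time (sec) and moisture content (%)
fry_time = [5, 10, 15, 20, 25, 30, 45, 60]
moisture = [16.3, 9.7, 8.1, 4.2, 3.4, 2.9, 1.9, 1.3]

# Assumed transformation: natural log of both variables
ln_t = [math.log(t) for t in fry_time]
ln_m = [math.log(m) for m in moisture]
a2, b2 = lsrl(ln_t, ln_m)

# Hypothetical frying time of 40 sec: predict ln(moisture), then back-transform with exp()
ln_pred = a2 + b2 * math.log(40)
m_at_40 = math.exp(ln_pred)
print(f"ln(moisture) = {a2:.3f} + ({b2:.3f}) ln(time)")
print(f"predicted moisture at 40 sec = {m_at_40:.2f}%")
```

Because the tortilla regression predicts ln(moisture), the prediction must be exponentiated before it is reported in percent; the river prediction is already in cm/sec.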