Chapter 4

Informed Search and Exploration

Outline

Best-First Search

Greedy Best-First Search

A* Search

Heuristics

Variants of A* Search

Tree Search (Reviewed, Fig. 3.9)

A search strategy is defined by picking the order of

node expansion

function TREE-SEARCH ( problem, strategy ) returns a solution, or failure

initialize the search tree using the initial state of problem

loop do

if there are no candidates for expansion then return failure

choose a leaf node for expansion according to strategy

if the node contains a goal state then

return the corresponding solution

else expand the node and add the resulting nodes to the search tree

Search Strategies

Uninformed Search Strategies

by systematically generating new states and testing against

the goal

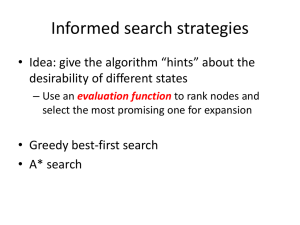

Informed Search Strategies

by using problem-specific knowledge beyond the definition

of the problem to find solutions more efficiently

Best-First Search

An instance of general Tree-Search or Graph-Search

A node is selected for expansion based on an evaluation function, f(n); the node with the lowest evaluation is chosen

f(n) is an estimate of desirability: expand the most desirable unexpanded node

Implementation

a priority queue that maintains the fringe in ascending order of f-values

The name "best-first search" is venerable but inaccurate: the chosen node only appears to be best according to f(n)
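The priority-queue implementation described above can be sketched in a few lines of Python. This is a minimal illustration, not the textbook's code: the function name `best_first_search`, the toy graph `GRAPH`, and the heuristic table `H` are all hypothetical. The evaluation function is passed in as `f(state, g)`, so the same loop gives greedy search with f = h and A* with f = g + h.

```python
import heapq
from itertools import count

def best_first_search(start, is_goal, successors, f):
    """Generic best-first graph search.

    successors(state) yields (next_state, step_cost) pairs.
    f(state, g) is the evaluation function; lower values are expanded first.
    Returns (path, cost), or None on failure.
    """
    tie = count()  # tie-breaker so the heap never has to compare states
    fringe = [(f(start, 0), next(tie), start, 0, [start])]
    best_g = {}    # cheapest path cost at which each state was expanded
    while fringe:
        _, _, state, g, path = heapq.heappop(fringe)
        if is_goal(state):
            return path, g
        if state in best_g and best_g[state] <= g:
            continue  # already expanded via a path that is at least as cheap
        best_g[state] = g
        for nxt, cost in successors(state):
            g2 = g + cost
            heapq.heappush(fringe, (f(nxt, g2), next(tie), nxt, g2, path + [nxt]))
    return None

# Hypothetical toy graph and heuristic values, for illustration only.
GRAPH = {"S": [("A", 1), ("B", 4)], "A": [("G", 2)], "B": [("G", 1)], "G": []}
H = {"S": 3, "A": 2, "B": 1, "G": 0}

def at_goal(state):
    return state == "G"

def expand(state):
    return GRAPH[state]

greedy = best_first_search("S", at_goal, expand, lambda s, g: H[s])      # f = h
astar = best_first_search("S", at_goal, expand, lambda s, g: g + H[s])   # f = g + h
print(greedy)  # (['S', 'B', 'G'], 5)
print(astar)   # (['S', 'A', 'G'], 3)
```

On this toy graph the greedy ordering is drawn to B because it looks closest to the goal, while the g + h ordering finds the cheaper route through A.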

Best-First Search (cont.-1)

Heuristic search uses problem-specific knowledge:

evaluation function

Choose the seemingly-best node based on the cost

of the corresponding solution

Need estimate of the cost to a goal

e.g., depth of the current node

sum of distances so far

Euclidean distance to goal, etc.

Heuristics: rules of thumb

Goal: to find solutions more efficiently

Best-First Search (cont.-2)

Heuristic Function

h(n) = estimated cost of the cheapest path

from node n to a goal

(h(n) = 0, for a goal node)

Special cases

Greedy Best-First Search (or Greedy Search)

minimizing estimated cost from the node to reach a goal

A* Search

minimizing the total estimated solution cost

Heuristic

Heuristic is derived from heuriskein in Greek,

meaning “to find” or “to discover”

The term heuristic is often used to describe rules of thumb or pieces of advice that are generally effective, but not guaranteed to work in every case

In the context of search, a heuristic is a function that

takes a state as an argument and returns a number

that is an estimate of the merit of the state with

respect to the goal

Heuristic (cont.)

A heuristic algorithm improves the average-case

performance, but does not necessarily improve the

worst-case performance

Not all heuristic functions are beneficial

The time spent evaluating the heuristic function in order to

select a node for expansion must be recovered by a

corresponding reduction in the size of search space

explored

Useful heuristics should be computationally inexpensive!

Romania with Step Costs in km

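Straight-line distances to Bucharest (km)

| City | Distance |
| --- | --- |
| Arad | 366 |
| Bucharest | 0 |
| Craiova | 160 |
| Drobeta | 242 |
| Eforie | 161 |
| Fagaras | 176 |
| Giurgiu | 77 |
| Hirsova | 151 |
| Iasi | 226 |
| Lugoj | 244 |
| Mehadia | 241 |
| Neamt | 234 |
| Oradea | 380 |
| Pitesti | 100 |
| Rimnicu Vilcea | 193 |
| Sibiu | 253 |
| Timisoara | 329 |
| Urziceni | 80 |
| Vaslui | 199 |
| Zerind | 374 |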

Greedy Best-First Search

Greedy best-first search expands the node that appears to be closest to the goal

Evaluation function = Heuristic function

f(n) = h(n) = estimated best cost to goal from n

h(n) = 0 if n is a goal

e.g., hSLD(n) = straight-line distance for route-finding

function GREEDY-SEARCH ( problem ) returns a solution, or failure

return BEST-FIRST-SEARCH ( problem, h )

Greedy Best-First Search Example

Analysis of Greedy Search

Complete?

Yes (in finite space with repeated-state checking)

No in general (it can start down an infinite path and never return to try other possibilities)

Susceptible to false starts (e.g., from Iasi to Fagaras)

Iasi → Neamt (dead end)

Without repeated-state checking

Iasi → Neamt → Iasi → Neamt → … (oscillation)

Analysis of Greedy Search (cont.)

Optimal? No (e.g., from Arad to Bucharest)

Greedy search finds Arad → Sibiu → Fagaras → Bucharest (450 = 140+99+211), which is not the shortest route

Shorter: Arad → Sibiu → Rimnicu Vilcea → Pitesti → Bucharest (418 = 140+80+97+101)

Time? best: $O(d)$, worst: $O(b^m)$

m: the maximum depth

like depth-first search

a good heuristic can give dramatic improvement

Space? $O(b^m)$: keeps all nodes in memory
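The Arad to Bucharest example can be reproduced with a short sketch. It assumes a fragment of the map containing only the six road segments whose lengths are quoted above, together with the straight-line distances from the table; the names `ROADS`, `H_SLD`, `neighbors`, and `greedy_best_first` are illustrative, not from the slides.

```python
import heapq

# Road segments (km) quoted in the slides; only a fragment of the full map.
ROADS = {
    ("Arad", "Sibiu"): 140,
    ("Sibiu", "Fagaras"): 99,
    ("Sibiu", "Rimnicu Vilcea"): 80,
    ("Fagaras", "Bucharest"): 211,
    ("Rimnicu Vilcea", "Pitesti"): 97,
    ("Pitesti", "Bucharest"): 101,
}
# Straight-line distances to Bucharest, from the table.
H_SLD = {"Arad": 366, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def neighbors(city):
    """Yield (neighbor, km) for every road touching city (roads are two-way)."""
    for (a, b), km in ROADS.items():
        if a == city:
            yield b, km
        elif b == city:
            yield a, km

def greedy_best_first(start, goal):
    """Expand the city with the smallest h(n), with repeated-state checking."""
    fringe = [(H_SLD[start], start, [start], 0)]  # (h, city, path, km so far)
    visited = set()
    while fringe:
        _, city, path, km = heapq.heappop(fringe)
        if city == goal:
            return path, km
        if city in visited:
            continue
        visited.add(city)
        for nxt, step in neighbors(city):
            if nxt not in visited:
                heapq.heappush(fringe, (H_SLD[nxt], nxt, path + [nxt], km + step))
    return None

print(greedy_best_first("Arad", "Bucharest"))
# (['Arad', 'Sibiu', 'Fagaras', 'Bucharest'], 450)
```

From Sibiu, Fagaras (176) looks closer to Bucharest than Rimnicu Vilcea (193), so the greedy ordering commits to the 450 km route and never considers the 418 km one.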

A* Search

Avoid expanding paths that are already expensive

Minimize the total estimated solution cost

Evaluation function f(n) = g(n) + h(n)

f(n) = estimated cost of the cheapest solution through n

g(n) = path cost so far to reach n

h(n) = estimated cost of the cheapest path from n to goal

function A*-SEARCH ( problem ) returns a solution, or failure

return BEST-FIRST-SEARCH ( problem, g+h )
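A minimal A* sketch with f(n) = g(n) + h(n) follows. It is an illustration under assumptions: the graph is only the fragment of the Romania map whose road lengths appear in the greedy analysis (140, 99, 80, 211, 97, 101), and the names `ROADS`, `H_SLD`, `neighbors`, and `a_star` are hypothetical.

```python
import heapq

# Road segments (km) quoted in the slides; only a fragment of the full map.
ROADS = {
    ("Arad", "Sibiu"): 140,
    ("Sibiu", "Fagaras"): 99,
    ("Sibiu", "Rimnicu Vilcea"): 80,
    ("Fagaras", "Bucharest"): 211,
    ("Rimnicu Vilcea", "Pitesti"): 97,
    ("Pitesti", "Bucharest"): 101,
}
# Straight-line distances to Bucharest, from the table.
H_SLD = {"Arad": 366, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def neighbors(city):
    """Yield (neighbor, km) for every road touching city (roads are two-way)."""
    for (a, b), km in ROADS.items():
        if a == city:
            yield b, km
        elif b == city:
            yield a, km

def a_star(start, goal):
    """Expand the node with the smallest f = g + h."""
    fringe = [(H_SLD[start], 0, start, [start])]  # (f, g, city, path)
    best_g = {}  # cheapest g at which each city has been expanded
    while fringe:
        f, g, city, path = heapq.heappop(fringe)
        if city == goal:
            return path, g
        if best_g.get(city, float("inf")) <= g:
            continue  # already expanded at least as cheaply
        best_g[city] = g
        for nxt, step in neighbors(city):
            g2 = g + step
            heapq.heappush(fringe, (g2 + H_SLD[nxt], g2, nxt, path + [nxt]))
    return None

print(a_star("Arad", "Bucharest"))
# (['Arad', 'Sibiu', 'Rimnicu Vilcea', 'Pitesti', 'Bucharest'], 418)
```

Here Bucharest is first generated via Fagaras with f = 450, but Pitesti (f = 417) is expanded before it, which produces the cheaper goal node with f = 418.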

A* Search Example

Admissible Heuristic

A* search uses an admissible heuristic h(n)

h(n) never overestimates the cost to the goal from n

$\forall n,\ h(n) \le h^*(n)$, where h*(n) is the true cost to reach the goal from n (also, $h(n) \ge 0$, so h(G) = 0 for any goal G)

e.g., straight-line distance hSLD(n) never overestimates the actual road distance

e.g., when h(n) is not admissible: with g(X) + h(X) = 102 and g(Y) + h(Y) = 74, the optimal path is not found!
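Admissibility can be checked mechanically on a small graph by computing the true cost h*(n) for every node and comparing it with h(n). The sketch below does this with Dijkstra's algorithm run backwards from the goal. It assumes only the fragment of the Romania map whose road lengths are quoted in these slides, so the h* values are true costs within that fragment; `ROADS`, `H_SLD`, `neighbors`, `true_costs`, and `is_admissible` are illustrative names.

```python
import heapq

# Road segments (km) quoted in the slides; only a fragment of the full map.
ROADS = {
    ("Arad", "Sibiu"): 140,
    ("Sibiu", "Fagaras"): 99,
    ("Sibiu", "Rimnicu Vilcea"): 80,
    ("Fagaras", "Bucharest"): 211,
    ("Rimnicu Vilcea", "Pitesti"): 97,
    ("Pitesti", "Bucharest"): 101,
}
# Straight-line distances to Bucharest, from the table.
H_SLD = {"Arad": 366, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def neighbors(city):
    """Yield (neighbor, km) for every road touching city (roads are two-way)."""
    for (a, b), km in ROADS.items():
        if a == city:
            yield b, km
        elif b == city:
            yield a, km

def true_costs(goal):
    """Dijkstra from the goal: returns h*(n) for every city in the fragment."""
    dist = {goal: 0}
    heap = [(0, goal)]
    while heap:
        d, city = heapq.heappop(heap)
        if d > dist[city]:
            continue  # stale queue entry
        for nxt, km in neighbors(city):
            if d + km < dist.get(nxt, float("inf")):
                dist[nxt] = d + km
                heapq.heappush(heap, (d + km, nxt))
    return dist

def is_admissible(h, goal):
    """True if h(n) <= h*(n) for every city reachable from the goal."""
    h_star = true_costs(goal)
    return all(h[n] <= h_star[n] for n in h_star)

print(true_costs("Bucharest")["Arad"])     # 418
print(is_admissible(H_SLD, "Bucharest"))   # True
```

Raising any single estimate above its true cost, for example setting Pitesti to 150 when the road distance is 101, makes the check fail.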

Admissible Heuristic (cont.)

e.g., 8-puzzle

h1(n) = number of misplaced tiles

h2(n) = total Manhattan distance

i.e., no. of squares from desired location of each tile

h1(S) = 8

h2(S) = 3+1+2+2+2+3+3+2 = 18

A* is complete and optimal if h(n) is admissible
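The two 8-puzzle heuristics are easy to compute directly. The slide's board image is not available here, so the sketch below assumes the standard textbook instance (start 7 2 4 / 5 _ 6 / 8 3 1, goal _ 1 2 / 3 4 5 / 6 7 8), which reproduces the per-tile sum 3+1+2+2+2+3+3+2 given above; `START`, `GOAL`, `h1`, and `h2` are illustrative names.

```python
# Boards are tuples in row-major order; 0 stands for the blank.
# Both boards are assumptions (the slide's figure is not reproduced here).
START = (7, 2, 4,
         5, 0, 6,
         8, 3, 1)
GOAL = (0, 1, 2,
        3, 4, 5,
        6, 7, 8)

def h1(state, goal=GOAL):
    """Number of misplaced tiles (the blank is not counted)."""
    return sum(1 for s, g in zip(state, goal) if s != 0 and s != g)

def h2(state, goal=GOAL):
    """Total Manhattan distance of every tile from its goal square."""
    total = 0
    for idx, tile in enumerate(state):
        if tile == 0:
            continue
        goal_idx = goal.index(tile)
        total += abs(idx // 3 - goal_idx // 3) + abs(idx % 3 - goal_idx % 3)
    return total

print(h1(START))  # 8
print(h2(START))  # 18
```

Every misplaced tile is at least one move from its goal square, so h2(n) is never smaller than h1(n) on any board.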

Optimality of A* (proof)

Suppose some suboptimal goal G2 has been

generated and is in the queue

Let n be an unexpanded node on a shortest path to

an optimal goal G

Optimality of A* (cont.-1)

C*: cost of the optimal solution path

A* may expand some nodes before selecting a goal node

Assume: G is an optimal goal and G2 is a suboptimal goal

$f(G_2) = g(G_2) + h(G_2) = g(G_2) > C^*$, since $h(G_2) = 0$ (1)

For some n on an optimal path to G, if h is admissible, then

$f(n) = g(n) + h(n) \le C^*$ (2)

From (1) and (2), we have

$f(n) \le C^* < f(G_2)$

So, A* will never select G2 for expansion

Monotonicity (Consistency) of Heuristic

A heuristic is consistent if

$h(n) \le c(n, a, n') + h(n')$

the estimated cost of reaching the goal from n is no greater than the step cost of getting to successor n’ plus the estimated cost of reaching the goal from n’

If h is consistent, we have

$f(n') = g(n') + h(n') = g(n) + c(n, a, n') + h(n') \ge g(n) + h(n) = f(n)$

so $f(n') \ge f(n)$, i.e., f(n) is non-decreasing along any path

Theorem: If h(n) is consistent, A* is optimal
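The consistency condition and the resulting non-decreasing f-values can be checked numerically. This sketch assumes the fragment of the Romania map whose road lengths are quoted in these slides and the straight-line distances from the table; `ROADS`, `H_SLD`, `is_consistent`, and `f_values` are illustrative names.

```python
# Road segments (km) quoted in the slides; only a fragment of the full map.
ROADS = {
    ("Arad", "Sibiu"): 140,
    ("Sibiu", "Fagaras"): 99,
    ("Sibiu", "Rimnicu Vilcea"): 80,
    ("Fagaras", "Bucharest"): 211,
    ("Rimnicu Vilcea", "Pitesti"): 97,
    ("Pitesti", "Bucharest"): 101,
}
# Straight-line distances to Bucharest, from the table.
H_SLD = {"Arad": 366, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def is_consistent(h):
    """Check h(n) <= c(n, n') + h(n') for every road, in both directions."""
    return all(h[a] <= km + h[b] and h[b] <= km + h[a]
               for (a, b), km in ROADS.items())

def f_values(path, h):
    """Return f = g + h at each city along path."""
    g, values = 0, [h[path[0]]]
    for a, b in zip(path, path[1:]):
        # Roads are two-way, so look the segment up in either direction.
        g += ROADS[(a, b)] if (a, b) in ROADS else ROADS[(b, a)]
        values.append(g + h[b])
    return values

best_path = ["Arad", "Sibiu", "Rimnicu Vilcea", "Pitesti", "Bucharest"]
print(is_consistent(H_SLD))          # True
print(f_values(best_path, H_SLD))    # [366, 393, 413, 417, 418]
```

The f-values never decrease along the path, as the derivation above predicts for a consistent heuristic.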

[Figure: triangle formed by n, n’, and goal G, with sides c(n,a,n’), h(n), and h(n’)]

Optimality of A* (cont.-2)

A* expands nodes in order of increasing f value

Gradually adds f-contours of nodes

Contour i has all nodes with $f = f_i$, where $f_i < f_{i+1}$

Why Use Estimate of Goal Distance?

Assume states are points in the Euclidean plane

[Figure: two panels, each showing start, goal, and points A and B]

Uniform-cost search: the order in which uniform-cost search looks at nodes. A and B are the same distance from start, so both will be looked at before any longer paths. No “bias” toward the goal.

With an estimate of goal distance: the order of examination using distance from start + estimate of distance to goal. Note the “bias” toward the goal; points away from the goal look worse.

Analysis of A* Search

Complete? Yes

unless there are infinitely many nodes with $f \le f(G)$

Optimal? Yes, if the heuristic is admissible

Time? Exponential in [relative error in h × length of solution]

Space? $O(b^d)$, keeps all nodes in memory

Optimally Efficient? Yes

i.e., no other optimal algorithm is guaranteed to expand fewer nodes than A*

A* is not practical for many large-scale problems

since A* usually runs out of space long before it runs out of time

Heuristic Functions

Example

for 8-puzzle

branching factor ≈ 3

depth = 22

number of states = $3^{22} \approx 3.1 \times 10^{10}$

9! / 2 = 181,440 (reachable distinct states)

for 15-puzzle

number of states ≈ $10^{13}$

[Figure: Start State and Goal State of an 8-puzzle instance]

Two commonly used candidates

h1(n) = number of misplaced tiles = 8

h2(n) = total Manhattan distance (i.e., no. of squares from desired location of each tile) = 3+1+2+2+2+3+3+2 = 18

Effect of Heuristic Accuracy on Performance

h2 dominates (is more informed than) h1

Effect of Heuristic Accuracy on Performance (cont.)

Effective Branching Factor b* is defined by

$N + 1 = 1 + b^* + (b^*)^2 + \cdots + (b^*)^d$

N : total number of nodes generated by A*

d : solution depth

b* : branching factor that a uniform tree of depth d would have to have in order to contain N+1 nodes

e.g., if A* finds a solution at depth 5 using 52 nodes, then b* = 1.92

A well designed heuristic would have a value of b* close to 1, allowing fairly large problems to be solved

A heuristic function h2 is said to be more informed than h1 (or h2 dominates h1) if both are admissible and

$\forall n,\ h_2(n) \ge h_1(n)$

A* using h2 will never expand more nodes than A* using h1
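The defining equation for the effective branching factor has no closed-form solution for b*, but the left-hand side is increasing in b*, so it can be solved numerically. The sketch below uses bisection; the function name `effective_branching_factor` and the tolerance are illustrative choices, and it assumes N ≥ d so that the root lies between 1 and N.

```python
def effective_branching_factor(n_nodes, depth, tol=1e-6):
    """Solve N + 1 = 1 + b + b^2 + ... + b^d for b by bisection."""
    target = n_nodes + 1

    def total(b):
        # Number of nodes in a uniform tree of the given depth and branching b.
        return sum(b ** i for i in range(depth + 1))

    lo, hi = 1.0, float(n_nodes)  # total(1) = d + 1 <= N + 1 <= total(N)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if total(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

b_star = effective_branching_factor(52, 5)
print(round(b_star, 2))  # 1.92
```

This reproduces the example above: 52 nodes at solution depth 5 gives b* of about 1.92.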

Inventing Admissible Heuristic Functions

Relaxed problems

problems with fewer restrictions on the actions

The cost of an optimal solution to a relaxed problem is an

admissible heuristic for the original problem

e.g., 8-puzzle

A tile can move from square A to square B if

A is horizontally or vertically adjacent to B and B is blank.

(P if R and S)

3 relaxed problems

A tile can move from square A to square B if A is horizontally

or vertically adjacent to B. (P if R) --- derive h2

A tile can move from square A to square B if B is blank. (P if S)

A tile can move from square A to square B. (P) --- derive h1

Inventing Admissible Heuristic Functions (cont.-1)

Composite heuristics

Given h1, h2, … , hm; none dominates any others

h(n) = max { h1(n), h2(n), … , hm(n) }

Subproblem

The cost of the optimal solution of the subproblem is a

lower bound on the cost of the complete problem

To get tiles 1, 2, 3 and 4 into their correct positions, without

worrying about what happens to other tiles.

[Figure: Start State and Goal State of the subproblem]

Inventing Admissible Heuristic Functions (cont.-2)

Weighted evaluation functions

fw(n) = (1-w)g(n) + wh(n)

Learn the coefficients for features of a state

$h(n) = w_1 f_1(n) + \cdots + w_k f_k(n)$

Search cost

Good heuristics should be efficiently computable

Memory-Bounded Heuristic Search

Overcome the space problem of A*, without

sacrificing optimality or completeness

IDA* (iterative deepening A*)

a logical extension of iterative deepening search to use heuristic information

the cutoff used is the f-cost (g+h) rather than depth

RBFS (recursive best-first search)

MA* (memory-bounded A*)

SMA* (simplified MA*)

is similar to A*, but restricts the queue size to fit into the

available memory

Iterative Deepening A*

Iterative deepening is useful for reducing memory requirement

At each iteration, perform DFS with an f-cost limit

IDA* is complete and optimal (with the same caveats as A* search)

Space complexity: $O(b f^* / \delta) \approx O(bd)$

f* : the optimal solution cost

δ : the smallest operator cost

Time complexity: $O(\alpha b^d)$

α : the number of different f values

In the worst case, if A* expands N nodes,

IDA* will expand 1+2+…+N = $O(N^2)$ nodes

IDA* uses too little memory

Iterative Deepening A* (cont.-1)

first, each iteration expands all nodes inside the contour for the current f-cost, peeking over the contour to find out where the next contour line is

once the search inside a given contour has been completed, a new iteration is started using a new f-cost for the next contour

Iterative Deepening A* (cont.-2)

function IDA* ( problem ) returns a solution sequence

inputs: problem, a problem

local variables: f-limit, the current f-COST limit

root, a node

root ← MAKE-NODE( INITIAL-STATE[ problem ])

f-limit ← f-COST( root )

loop do

solution, f-limit ← DFS-CONTOUR( root , f-limit )

if solution is non-null then return solution

if f-limit = ∞ then return failure

end

Iterative Deepening A* (cont.-3)

function DFS-CONTOUR ( node, f-limit )

returns a solution sequence and a new f-COST limit

inputs: node, a node

f-limit, the current f-COST limit

local variables: next-f, the f-COST limit for the next contour, initially ∞

if f-COST[ node ] > f-limit then return null, f-COST[ node ]

if GOAL-TEST[ problem ]( STATE[ node ]) then return node, f-limit

for each node s in SUCCESSORS( node ) do

solution, new-f ← DFS-CONTOUR( s, f-limit )

if solution is non-null then return solution, f-limit

next-f ← MIN( next-f, new-f )

end

return null, next-f
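The IDA* and DFS-CONTOUR pseudocode translates fairly directly into Python. The sketch below is one possible rendering, run on the fragment of the Romania map whose road lengths are quoted in these slides. Two things are assumptions rather than part of the pseudocode: successors already on the current path are skipped to avoid cycles, and the list of f-limits tried is returned so the contours are visible. `ROADS`, `H_SLD`, `neighbors`, and `ida_star` are illustrative names.

```python
import math

# Road segments (km) quoted in the slides; only a fragment of the full map.
ROADS = {
    ("Arad", "Sibiu"): 140,
    ("Sibiu", "Fagaras"): 99,
    ("Sibiu", "Rimnicu Vilcea"): 80,
    ("Fagaras", "Bucharest"): 211,
    ("Rimnicu Vilcea", "Pitesti"): 97,
    ("Pitesti", "Bucharest"): 101,
}
# Straight-line distances to Bucharest, from the table.
H_SLD = {"Arad": 366, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def neighbors(city):
    """Yield (neighbor, km) for every road touching city (roads are two-way)."""
    for (a, b), km in ROADS.items():
        if a == city:
            yield b, km
        elif b == city:
            yield a, km

def ida_star(start, goal):
    """Return ((path, cost) or None, list of f-limits tried)."""

    def dfs_contour(path, g, f_limit):
        city = path[-1]
        f = g + H_SLD[city]
        if f > f_limit:
            return None, f              # outside the contour: report its f
        if city == goal:
            return (list(path), g), f_limit
        next_f = math.inf
        for nxt, km in neighbors(city):
            if nxt in path:             # assumption: skip cycles on this path
                continue
            path.append(nxt)
            solution, new_f = dfs_contour(path, g + km, f_limit)
            path.pop()
            if solution is not None:
                return solution, f_limit
            next_f = min(next_f, new_f)
        return None, next_f

    f_limit, limits = H_SLD[start], []
    while True:
        limits.append(f_limit)
        solution, f_limit = dfs_contour([start], 0, f_limit)
        if solution is not None:
            return solution, limits
        if f_limit == math.inf:
            return None, limits

solution, limits = ida_star("Arad", "Bucharest")
print(solution)  # (['Arad', 'Sibiu', 'Rimnicu Vilcea', 'Pitesti', 'Bucharest'], 418)
print(limits)    # [366, 393, 413, 415, 417, 418]
```

Each iteration raises the limit to the smallest f-value that exceeded the previous one, so this small example needs six passes, one per distinct f-value up to the optimal cost.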

Recursive Best-First Search (RBFS)

Keeps track of the f-value of the best-alternative

path available

if the current f-value exceeds this alternative f-value, then backtrack to the alternative path

upon backtracking, replace the node's f-value with the best f-value of its children

re-expansion of the forgotten subtree is thus still possible

Recursive Best-First Search (cont.-1)

[Figure: stages of an RBFS search on the Romania map from Arad toward Bucharest, showing the f-limit of each recursive call and the f-cost of each node]

Recursive Best-First Search (cont.-2)

function RECURSIVE-BEST-FIRST-SEARCH ( problem ) returns a solution, or failure

RBFS( problem, MAKE-NODE( INITIAL-STATE[ problem ] ), ∞ )

function RBFS ( problem, node, f_limit )

returns a solution, or failure and a new f-COST limit

if GOAL-TEST[ problem ]( STATE[ node ]) then return node

successors ← EXPAND( node, problem )

if successors is empty then return failure, ∞

for each node s in successors do

f [ s ] ← max( g( s ) + h ( s ), f [ node ])

repeat

best ← the lowest f-value node in successors

if f [ best ] > f_limit then return failure, f [ best ]

alternative ← the second lowest f-value among successors

result, f [ best ] ← RBFS( problem, best, MIN( f_limit, alternative ))

if result ≠ failure then return result
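A compact Python rendering of the RBFS pseudocode is sketched below, again on the fragment of the Romania map whose road lengths are quoted in these slides. It is a simplification under assumptions: successors already on the current path are skipped, so the tree differs from a trace that re-generates Arad under Sibiu, and `ROADS`, `H_SLD`, `neighbors`, `rbfs`, and `recursive_best_first_search` are illustrative names.

```python
import math

# Road segments (km) quoted in the slides; only a fragment of the full map.
ROADS = {
    ("Arad", "Sibiu"): 140,
    ("Sibiu", "Fagaras"): 99,
    ("Sibiu", "Rimnicu Vilcea"): 80,
    ("Fagaras", "Bucharest"): 211,
    ("Rimnicu Vilcea", "Pitesti"): 97,
    ("Pitesti", "Bucharest"): 101,
}
# Straight-line distances to Bucharest, from the table.
H_SLD = {"Arad": 366, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def neighbors(city):
    """Yield (neighbor, km) for every road touching city (roads are two-way)."""
    for (a, b), km in ROADS.items():
        if a == city:
            yield b, km
        elif b == city:
            yield a, km

def rbfs(path, g, f_node, f_limit, goal):
    """Return (solution or None, backed-up f-value for this node)."""
    city = path[-1]
    if city == goal:
        return (path, g), f_node
    # Each entry is [f, g, city]; f inherits the parent's backed-up value.
    successors = [[max(g + km + H_SLD[c], f_node), g + km, c]
                  for c, km in neighbors(city) if c not in path]
    if not successors:
        return None, math.inf
    while True:
        successors.sort()                 # lowest f-value first
        best = successors[0]
        if best[0] > f_limit:
            return None, best[0]          # back up the best f-value
        alternative = successors[1][0] if len(successors) > 1 else math.inf
        result, best[0] = rbfs(path + [best[2]], best[1], best[0],
                               min(f_limit, alternative), goal)
        if result is not None:
            return result, best[0]

def recursive_best_first_search(start, goal):
    result, _ = rbfs([start], 0, H_SLD[start], math.inf, goal)
    return result

print(recursive_best_first_search("Arad", "Bucharest"))
# (['Arad', 'Sibiu', 'Rimnicu Vilcea', 'Pitesti', 'Bucharest'], 418)
```

In this run the search first dives into Rimnicu Vilcea (413), backs up 417 when Pitesti exceeds the alternative 415, tries Fagaras and backs up 450, then returns to Rimnicu Vilcea and reaches Bucharest at cost 418. This is the "mind change" behaviour that leads to re-expansion.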

Analysis of RBFS

RBFS is a bit more efficient than IDA*

Complete? Yes

Optimal? Yes, if the heuristic is admissible

Time? Exponential

still excessive node generation (mind changes)

difficult to characterize; depends on the accuracy of h(n) and how often the best path changes

Space? O(bd)

IDA* and RBFS suffer from too little memory

Simplified Memory Bounded A* (SMA*)

SMA* expands the (newest) best leaf and deletes

the (oldest) worst leaf

Aim: find the lowest-cost goal

node with enough memory

Max Nodes = 3

A – root node

D,F,I,J – goal nodes

Label: current f-Cost

Simplified Memory Bounded A* (cont.-1)

3. Memory is full: update the f-cost of A to that of its minimum child, expand G, and drop the higher f-cost leaf (B)

5. Drop H and add I: G memorizes H; update the f-cost of G to that of its minimum child, then update the f-cost of A

6. I is a goal node, but it may not be the best solution: the path through G is not so great, so B is generated for the second time

7. Drop G and add C: A memorizes G; C is a non-goal node, so C is marked as infinite

8. Drop C and add D: B memorizes C; D is a goal node and has the lowest f-cost, so the search terminates

What if J had a cost of 19 instead of 24?

Simplified Memory Bounded A* (cont.-2)

[Figure: example search tree rooted at A with nodes A to K (goal nodes D, F, I, J), edge costs of 8, 10, and 16, and each node labeled with its f-cost as g + h; the root A has 0+12=12, and the other labels are 10+5=15, 8+5=13, 20+5=25, 20+0=20, 16+2=18, 24+0=24, 30+5=35, 30+0=30, 24+0=24, 24+5=29]

Simplified Memory Bounded A* (cont.-3)

[Figure: step-by-step SMA* trace on the example tree (Max Nodes = 3), repeating the steps listed in (cont.-1) with the current f-cost shown on each node]

Simplified Memory Bounded A* (cont.-4)

function SMA* ( problem ) returns a solution sequence

inputs: problem, a problem

local variables: Queue, a queue of nodes ordered by f-COST

Queue ← MAKE-QUEUE({ MAKE-NODE( INITIAL-STATE[ problem ])})

loop do

if Queue is empty then return failure

n ← deepest least f-COST node in Queue

if GOAL-TEST( n ) then return success

s ← NEXT-SUCCESSOR( n )

if s is not a goal and is at maximum depth then

f( s ) ← ∞

else f( s ) ← MAX( f( n ), g( s ) + h( s ))

if all of n's successors have been generated then

update n's f-COST and those of its ancestors if necessary

if SUCCESSORS( n ) all in memory then remove n from Queue

if memory is full then

delete shallowest, highest f-COST node in Queue

remove it from its parent's successor list

insert its parent on Queue if necessary

insert s on Queue

end

SMA* Algorithm

SMA* makes use of all available memory M to carry

out the search

It avoids repeated states as far as memory allows

Forgotten nodes: shallow nodes with high f-cost will

be dropped from the fringe

A forgotten node will only be regenerated if all other

child nodes from its ancestor node have been shown

to look worse

Memory limitations can make a problem intractable

Analysis of SMA*

Complete? Yes, if $M \ge d$

i.e., if there is any reachable solution

i.e., the depth of the shallowest goal node is less than the memory size

Optimal? Yes, if $M \ge d^*$

i.e., if any optimal solution is reachable;

otherwise, it returns the best reachable solution

Optimally efficient? Yes, if $M \ge b^m$

Time?

SMA* is often forced to switch back and forth continually between a set of candidate solution paths

Thus, extra time is required for repeated generation of the same nodes

Memory limitations can make a problem intractable from the point of view of computation time

Space? Limited

HW2, 4/11 deadline

Write an A* program to solve the path-finding problem.