Probability in AI - Temple University

Made by: Maor Levy, Temple University 2012

1

Probability expresses uncertainty.

Pervasive in all of Artificial Intelligence

Machine learning

Information Retrieval (e.g., Web)

Computer Vision

Robotics

Based on mathematical calculus .

Probability of a fair coin:

P ( COIN

tail )

1

2

P ( tail )

1

2

2

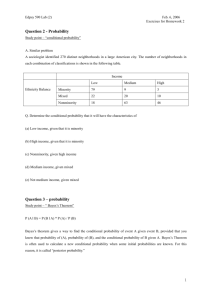

Example: Probability of cancer

P(has cancer) = 0.02

P(

¬ has cancer) = 0.98

Multiple events: cancer, test result

P(has cancer, test positive)

The problem with joint distributions: it takes

2 𝐷 − 1 numbers to specify them!

3

Conditional Probability describes the cancer test:

P(test positive | has cancer) = 0.9

P(test positive |

¬ has cancer) = 0.2

Put this together with: Prior probability

P(has cancer) = 0.02

P(test negative | has cancer) = 0.1

Total probability is a fundamental rule relating marginal probabilities to conditional probabilities.

𝑃 𝑌 = 𝑖

𝑃 𝑌 𝑋 = 𝑖 𝑃(𝑋 = 𝑖)

4

In summary:

P(has cancer) = 0.02

P(¬has cancer) = 0.98

P(test positive | has cancer) = 0.9

P(test positive |

¬ has cancer) = 0.2

P(test negative | has cancer) = 0.1

P(test negative |

¬ has cancer) = 0.8

P(cancer) and P(Test positive | cancer) is called the model .

Calculating P(Test positive) is called prediction .

Calculating P(Cancer | test positive) is called diagnostic reasoning.

5

A belief network consists of:

◦ A directed acyclic graph with nodes labeled with random variables

◦ a domain for each random variable

◦ a set of conditional probability tables for each variable

◦ given its parents (including prior probabilities for nodes w ith no parents).

A belief network is a graph: the nodes are random variables; there is an arc from the parents of each node into that node.

◦ A belief network is automatically acyclic by construction.

◦ A belief network is a directed acyclic graph (DAG) where nodes are random variables.

◦ The parents of a node n are those variables on which n directly depends.

◦ A belief network is a graphical representation of dependence and independence:

A variable is independent of its non-descendants given its parents.

6

Whether l1 is lit (L1_lit) depends only on the status of the light (L1_st) and whether there is power in wire w0. Thus, L1_lit is independent of the other variables given L1_st and W0.

In a belief network , W0 and L1_st are parents of L1_lit.

Similarly, W0 depends only on whether there is power in w1, whether there is power in w2, the position of switch s2 (S2_pos), and the status of switch s2 (S2_st).

7

To represent a domain in a belief network, you need to consider:

What are the relevant variables?

What will you observe?

What would you like to find out (query)?

What other features make the model simpler?

What values should these variables take?

What is the relationship between them? This should be expressed in terms of local influence.

How does the value of each variable depend on its parents? This is expressed in terms of the conditional probabilities.

8

The power network can be used in a number of ways:

◦ Conditioning on the status of the switches and circuit

◦ breakers, whether there is outside power and the position of the switches, you can simulate the lighting.

◦ Given values for the switches, the outside power, and whether the lights are lit, you can determine the posterior probability that each switch or circuit breaker is ok or not.

◦ Given some switch positions and some outputs and some intermediate values, you can determine the probability of any other variable in the network.

9

A Bayes network is a form of probabilistic graphical model. Specifically, a Bayes network is a directed acyclic graph of nodes representing variables and arcs representing dependence relations among the variables.

A representation of the joint distribution over all the variables represented by nodes in the graph. Let the variables be X(1), ..., X(n).

Let parents(A) be the parents of the node A.

Then the joint distribution for X(1) through X(n) is represented as the product of the probability distributions P(Xi | Parents(Xi)) for i = 1 to n:

P ( X

1

x

1

,..., X n

x n

)

i n

1

P ( X i

x i

| Parents ( X i

))

If X has no parents, its probability distribution is said to be unconditional, otherwise it is conditional.

10

Examples of Bayes network:

11

True Bayesians actually consider conditional probabilities as more basic than joint probabilities.

It is easy to define P(A|B) without reference to the joint probability P(A,B).

Bayes’ Rule:

◦ Posterior =

𝐿𝑖𝑘𝑒𝑙𝑖ℎ𝑜𝑜𝑑 ∗ 𝑃𝑟𝑖𝑜𝑟

→ 𝑃 𝐴 𝐵 =

𝑀𝑎𝑟𝑔𝑖𝑛𝑎𝑙 𝐿𝑖𝑘𝑒𝑙𝑖ℎ𝑜𝑜𝑑

Back to the cancer example:

𝑃

𝐵 𝐴

𝑃 𝐴

𝑃 𝐵

◦

P has cancer test positive) =

𝑃 𝑡𝑒𝑠𝑡 𝑝𝑜𝑠𝑖𝑡𝑖𝑣𝑒 ℎ𝑎𝑠 𝑐𝑎𝑛𝑐𝑒𝑟)∗𝑃(ℎ𝑎𝑠 𝑐𝑎𝑛𝑐𝑒𝑟)

𝑃(𝑡𝑒𝑠𝑡 𝑝𝑜𝑠𝑖𝑡𝑖𝑣𝑒)

◦ =

0.9∗0.02

0.02∗0.9+0.98∗0.2

=

0.018

0.214

≈ 0.0841

12

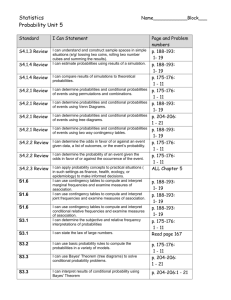

Two variables are independent if:

∀𝑥, 𝑦 ∶ 𝑃 𝑥 𝑃 𝑦 = 𝑃(𝑥, 𝑦)

It means that the occurrence of one event makes it neither more nor less probable that the other occurs.

This says that their joint distribution factors into a product two simpler distributions

This implies:

∀𝑥, 𝑦 ∶ 𝑃 𝑥|𝑦 = 𝑃(𝑥)

We write

Independence is a simplifying modeling assumption

Empirical joint distributions: at best “close” to independent

For example:

The event of getting a 6 the first time a die is rolled and the event of getting a 6 the second time are independent.

By contrast, the event of getting a 6 the first time a die is rolled and the event that the sum of the numbers seen on the first and second trials is 8 are not independent.

13

Two events are dependent if the outcome or occurrence of the first affects the outcome or occurrence of the second so that the probability is changed.

Example : A card is chosen at random from a standard deck of 52 playing cards. Without replacing it, a second card is chosen. What is the probability that the first card chosen is a queen and the second card chosen is a jack?

Probabilities:

P(queen on first pick) = 4

52

P(jack on 2nd pick given queen on 1st pick) = 4

51

P(queen and jack) = 4

52

∗

4

51

=

4

663

14

X and Y are conditionally independent given a third event Z precisely if the occurrence or non-occurrence of X and the occurrence or non-occurrence of Y are independent events in their conditional probability distribution given Z. We write:

15

Why Bayes Networks?

P(A)

P(B)

P(C|A,B)

P(D|E)

P(E|C)

A

C

B

D E

Joint Distribution of any five variables is:

2 5 − 1 = 31

In Bayes network:

◦ P(A,B,C,D,E)=P(A)*P(B)*P(C|A,B)*P(D|E)*P(E|C)

◦ Parameters: 1 1 4 2 2 Total of 10

16

The Naïve network has

2 16 − 1 = 65535 𝑝𝑎𝑟𝑎𝑚𝑒𝑡𝑒𝑟𝑠

Bayes Network needs only 47 numerical probabilities to specify the joint.

17

Are X and Y conditionally independent given evidence vars {Z}?

Yes, if X and Y “separated” by Z

Look for active paths from X to Y

No active paths = independence!

A path is active if each triple is active:

Causal chain A B C where B is unobserved (either direction)

Common cause A B C where B is unobserved

Common effect (aka v-structure)

A B C where B or one of its descendants is observed

All it takes to block a path is a single inactive segment

Active Triples Inactive Triples

18

Examples:

R B

L

R

T

T

’

Yes

D T

T

’

B

Yes

Yes

Yes

19

Overview:

Bayes network:

Graphical representation of joint distributions

Efficiently encode conditional independencies

Reduce number of parameters from exponential to linear (in many cases)

20