Backing off from infinity


fundamental communication limits in non-asymptotic regimes

Andrea Goldsmith

Thanks to collaborators Chen, Eldar, Grover, Mirghaderi, Weissman

Information Theory and Asymptopia

Capacity with asymptotically small error achieved by asymptotically long codes.

Defining capacity in terms of asymptotically small error and infinite delay is brilliant!

It has also been limiting:

Cause of the unconsummated union between networks and information theory

Optimal compression based on properties of asymptotically long sequences

Leads to optimality of separation

Other forms of asymptopia

Infinite SNR, energy, sampling, precision, feedback, …

Why back off?

Theory not informing practice

Theory vs. practice

Theory | Practice
Infinite blocklength codes | Uncoded to LDPC
Infinite SNR | -7 dB in LTE
Infinite energy | Finite battery life
Infinite feedback | 1-bit ARQ
Infinite sampling rates | 50-500 Msps
Infinite (free) processing | 200 MFLOPs-1B FLOPs
Infinite precision ADCs | 8-16 bits

What else lives in asymptopia?

Backing off from: infinite blocklength

Recent developments on finite blocklength:

Channel codes (capacity C for finite n)

Source codes (entropy H or rate distortion R(D))

[Ingber, Kochman'11; Kostina, Verdu'11]

[Wang et al.'11; Kostina, Verdu'12]

Separation not optimal

Grand Challenges Workshop: CTW Maui

From the perspective of the cellular industry, the Shannon bounds evaluated by Slepian are within 0.5 dB for a packet size of 30 bits or more for the real AWGN channel at 0.5 bits/sym, for BLER = 1e-4. In this perhaps narrow context there is not much uncertainty for performance evaluations.

For cellular and general wireless channels, finite blocklength bounds for practical fading models are needed and there is very little work along those lines.

Even for the AWGN channel the computational effort of evaluating the Shannon bounds is formidable.

This indicates a need for accurate approximations, such as those recently developed based on the idea of channel dispersion.
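To make the channel-dispersion idea concrete, the sketch below evaluates the normal approximation R ≈ C − sqrt(V/n) Q⁻¹(ε) for the real AWGN channel, using the standard AWGN capacity and dispersion formulas of Polyanskiy, Poor, and Verdú. It drops higher-order terms, so it is only an approximation (least reliable at very short blocklengths), not one of the Shannon bounds quoted above. The function names are hypothetical.

```python
import math
from statistics import NormalDist

LOG2E = math.log2(math.e)

def awgn_capacity(snr):
    """Capacity of the real AWGN channel, in bits per channel use."""
    return 0.5 * math.log2(1 + snr)

def awgn_dispersion(snr):
    """Channel dispersion V of the real AWGN channel, in bits^2 per channel use."""
    return snr * (snr + 2) / (2 * (snr + 1) ** 2) * LOG2E ** 2

def normal_approx_rate(snr, n, eps):
    """Normal (second-order) approximation to the best rate at blocklength n, block error eps."""
    q_inv = NormalDist().inv_cdf(1 - eps)  # Q^{-1}(eps)
    return awgn_capacity(snr) - math.sqrt(awgn_dispersion(snr) / n) * q_inv

# SNR = 0 dB gives C = 0.5 bits/sym; target BLER = 1e-4 as in the quote above
for n in (100, 1000, 10000):
    print(n, round(normal_approx_rate(1.0, n, 1e-4), 3))
```

The backoff from capacity shrinks only as 1/sqrt(n), which is why blocklength matters so much for short packets.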

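Diversity vs. Multiplexing Tradeoff

Use antennas for multiplexing (error prone) or diversity (low Pe)

What is infinite? The SNR.

Diversity/Multiplexing tradeoffs (Zheng/Tse):

$$d = -\lim_{SNR \to \infty} \frac{\log P_e(SNR)}{\log SNR}, \qquad r = \lim_{SNR \to \infty} \frac{R(SNR)}{\log SNR}$$

$$d^*(r) = (N_t - r)(N_r - r) \quad \text{for integer } r \text{, with linear interpolation in between}$$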
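The optimal tradeoff curve can be evaluated directly: Zheng and Tse showed that d*(r) equals (N_t − r)(N_r − r) at integer r and is the piecewise-linear interpolation of those points in between (for block lengths of at least N_t + N_r − 1). A minimal sketch; the function name is hypothetical.

```python
def dmt_optimal_diversity(r, n_t, n_r):
    """Zheng-Tse optimal diversity gain d*(r): piecewise-linear curve through
    the points (k, (n_t - k)(n_r - k)) for k = 0, ..., min(n_t, n_r)."""
    k_max = min(n_t, n_r)
    if not 0 <= r <= k_max:
        raise ValueError("multiplexing gain r must lie in [0, min(n_t, n_r)]")
    k = min(int(r), k_max - 1)  # left end of the linear segment containing r
    d_left = (n_t - k) * (n_r - k)
    d_right = (n_t - k - 1) * (n_r - k - 1)
    return d_left + (r - k) * (d_right - d_left)

# A 4x4 system: full diversity 16 at r = 0, no diversity at full multiplexing r = 4
print([dmt_optimal_diversity(r, 4, 4) for r in (0, 1, 2, 3, 4)])  # prints [16, 9, 4, 1, 0]
```

Every point on this curve is a statement about SNR → ∞; at finite SNR the actual error probability need not follow it.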

Backing off from: infinite SNR

High SNR Myth: Use some spatial dimensions for multiplexing and others for diversity

Reality: Use all spatial dimensions for one or the other*

Diversity is wasteful of spatial dimensions with HARQ

Adapt modulation/coding to channel SNR

*"Transmit Diversity vs. Spatial Multiplexing in Modern MIMO Systems", Lozano/Jindal

Diversity-Multiplexing-ARQ Tradeoff

Suppose we allow ARQ with incremental redundancy

[Figure: diversity gain d versus multiplexing gain r for ARQ window sizes L = 1, 2, 3, 4]

ARQ is a form of diversity [Caire/El Gamal 2005]

Joint Source/Channel Coding

Use antennas for multiplexing: high-rate quantizer → high-rate ST code → decoder (error prone)

Use antennas for diversity: low-rate quantizer → high-diversity ST code → decoder (low Pe)

How should antennas be used? Depends on the end-to-end metric

Joint Source-Channel coding w/MIMO

[Figure: source vector u → source encoder (s bits, index i) → index assignment p(i) → channel encoder → MIMO channel → channel decoder → inverse index assignment p(j) → source decoder (s bits, index j) → reconstruction v_j]

Increased rate at the source encoder decreases source distortion, but permits less diversity in the channel code, resulting in more channel errors and maybe higher total distortion. A joint design is needed.
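To see why a joint design is needed, here is a deliberately crude toy model (our assumption, not the model behind these slides): a unit-variance Gaussian source with one sample per channel use is quantized at rate R = r·log2(SNR), so its distortion is 2^(−2R) = SNR^(−2r), and decoding fails (distortion about 1) with probability SNR^(−d*(r)) taken from the Zheng/Tse curve. The sketch minimizes the proxy SNR^(−2r) + SNR^(−d*(r)) over the multiplexing gain r. All names are hypothetical.

```python
def dmt_optimal_diversity(r, n_t, n_r):
    """Zheng-Tse piecewise-linear optimal diversity d*(r), r in [0, min(n_t, n_r)]."""
    k_max = min(n_t, n_r)
    k = min(int(r), k_max - 1)
    d_left = (n_t - k) * (n_r - k)
    d_right = (n_t - k - 1) * (n_r - k - 1)
    return d_left + (r - k) * (d_right - d_left)

def toy_distortion(r, snr, n_t, n_r):
    """Toy end-to-end distortion proxy (assumed model): quantization + outage."""
    quantization = snr ** (-2 * r)                          # D(R) = 2^(-2R), R = r*log2(snr)
    outage = snr ** (-dmt_optimal_diversity(r, n_t, n_r))   # decoding failure, distortion ~ 1
    return quantization + outage

def best_multiplexing_gain(snr, n_t, n_r, steps=2000):
    """Grid search for the r in [0, min(n_t, n_r)] that minimizes the toy distortion."""
    r_max = min(n_t, n_r)
    grid = [r_max * i / steps for i in range(steps + 1)]
    return min(grid, key=lambda r: toy_distortion(r, snr, n_t, n_r))

for snr_db in (10, 20, 40):
    snr = 10 ** (snr_db / 10)
    print(snr_db, "dB ->", round(best_multiplexing_gain(snr, 2, 2), 3))
```

Under this proxy the best operating point for a 2x2 system is neither full diversity (r = 0) nor full multiplexing (r = 2): the two exponents balance at 2r = 4 − 3r, i.e. r = 0.8, as SNR grows.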

Antenna Assignment vs. SNR

Relaying in wireless networks

[Figure: Source → Relay → Destination]

Intermediate nodes (relays) in a route help to forward the packet to its final destination.

Decode-and-forward (store-and-forward) most common:

Packet decoded, then re-encoded for transmission

Removes noise at the expense of complexity

Amplify-and-forward: relay just amplifies received packet

Also amplifies noise, so it works poorly on long routes and at low SNR

Compress-and-forward: relay compresses received packet

Used when source-relay link good, relay-destination link weak

Capacity of the relay channel unknown: only have bounds
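A minimal sketch of why noise amplification hurts amplify-and-forward on long routes, assuming a K-hop route with no direct link, per-hop SNRs γ_i, and variable-gain AF relays, whose end-to-end SNR γ satisfies 1 + 1/γ = ∏(1 + 1/γ_i). Decode-and-forward is limited only by its weakest hop. Rates are per hop use; the half-duplex time-sharing loss (common to both) is ignored. Function names are hypothetical.

```python
import math

def af_end_to_end_snr(hop_snrs):
    """Variable-gain amplify-and-forward: 1 + 1/snr = prod(1 + 1/snr_i)."""
    prod = 1.0
    for g in hop_snrs:
        prod *= 1.0 + 1.0 / g
    return 1.0 / (prod - 1.0)

def af_rate(hop_snrs):
    """AF rate (bits per channel use): noise accumulates over every hop."""
    return math.log2(1 + af_end_to_end_snr(hop_snrs))

def df_rate(hop_snrs):
    """DF rate (bits per channel use): each relay removes noise; weakest hop limits."""
    return min(math.log2(1 + g) for g in hop_snrs)

for hops in (1, 2, 5, 10):
    route = [10.0] * hops  # every hop at 10 dB SNR
    print(hops, round(af_rate(route), 3), round(df_rate(route), 3))
```

For two hops the formula reduces to the familiar γ1γ2/(γ1 + γ2 + 1); with ten 10 dB hops the AF end-to-end SNR falls below 0 dB while DF still sees 10 dB.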

Cooperation in Wireless Networks

Relaying is a simple form of cooperation

Many more complex ways to cooperate:

Virtual MIMO, generalized relaying, interference forwarding, and one-shot/iterative conferencing

Many theoretical and practical issues:

Overhead, forming groups, dynamics, full-duplex, synch, …

Generalized Relaying and Interference Forwarding

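[Figure: TX1 sends $X_1$ and TX2 sends $X_2$; the relay receives $Y_3 = X_1 + X_2 + Z_3$ and transmits $X_3 = f(Y_3)$ (analog network coding); RX1 receives $Y_4 = X_1 + X_2 + X_3 + Z_4$ and RX2 receives $Y_5 = X_1 + X_2 + X_3 + Z_5$]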

Can forward message and/or interference

Relay can forward all or part of the messages

Much room for innovation

Relay can forward interference

To help subtract it out

Beneficial to forward both interference and message

In fact, forwarding both can achieve capacity

[Figure: relay network from source S (power Ps) to destination D through relays with powers P1, P2, P3, P4] (Maric/Goldsmith'12)

• For large powers Ps, P1, P2, …, analog network coding (AF) approaches capacity: asymptopia?

Interference Alignment

Addresses the number of interference-free signaling dimensions in an interference channel

Based on our orthogonal analysis earlier, it would appear that resources need to be divided evenly among the N users, so only 2BT/N dimensions are available to each

Jafar and Cadambe showed that by aligning interference, 2BT/2 dimensions are available to each user

Everyone gets half the cake!

Except at finite SNRs

Backing off from: infinite SNR

High SNR Myth: Decode-and-forward is equivalent to amplify-forward, which is optimal at high SNR*

Noise amplification drawback of AF diminishes at high SNR

Amplify-forward achieves full degrees of freedom in MIMO systems (Borade/Zheng/Gallager'07)

At high SNR, amplify-forward is within a constant gap of the capacity upper bound as the received powers increase (Maric/Goldsmith'07)

Reality: Optimal relaying is unknown at most SNRs:

Amplify-forward is highly suboptimal outside the high per-node SNR regime, which is not always the high power or high channel gain regime

Amplify-forward has an unbounded gap from capacity in the high channel gain regime (Avestimehr/Diggavi/Tse'11)

Relay strategy should depend on the worst link

Decode-forward is used in practice

Capacity and Feedback

Capacity under feedback largely unknown

Channels with memory

Finite rate and/or noisy feedback

Multiuser channels

Multihop networks

ARQ is ubiquitous in practice

Works well on finite-rate noisy feedback channels

Reduces end-to-end delay

Why hasn't theory met practice when it comes to feedback?

PtP Memoryless Channels: Perfect Feedback

[Figure: message W → encoder → additive-noise channel → decoder → estimate Ŵ, with the channel output fed back to the encoder]

• Shannon: feedback does not increase capacity of DMCs

• Schalkwijk-Kailath Scheme for AWGN channels

– Low-complexity linear recursive scheme

– Achieves capacity

– Double exponential decay in error probability
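The Schalkwijk-Kailath recursion can be simulated in a few lines. This is a minimal sketch of one common textbook form, under our assumptions: a real AWGN channel with unit noise variance, average power snr per use, noiseless feedback, and messages mapped to equally spaced points in (−1/2, 1/2). The first use sends the message point; each later use sends the receiver's current estimation error (which the encoder knows through feedback), and the receiver corrects its estimate with a linear MMSE step, shrinking the error variance by (1 + snr) each time. Function names are hypothetical.

```python
import math
import random

def sk_decode(m, num_msgs, snr, n, rng):
    """Send index m with n channel uses of a Schalkwijk-Kailath-style scheme
    over a real AWGN channel (unit noise, noiseless feedback); return the decoded index."""
    theta = (m + 0.5) / num_msgs - 0.5      # message point in (-1/2, 1/2)
    var_theta = 1.0 / 12.0                   # variance of a uniform point (assumption)
    # Channel use 1: send the message point scaled to average power about snr
    gain = math.sqrt(snr / var_theta)
    estimate = (gain * theta + rng.gauss(0.0, 1.0)) / gain  # unbiased estimate
    alpha = var_theta / snr                  # variance of the estimation error
    for _ in range(n - 1):
        # Feedback lets the encoder track the receiver's estimate, so it
        # transmits the current estimation error scaled to power snr.
        err = estimate - theta
        y = math.sqrt(snr / alpha) * err + rng.gauss(0.0, 1.0)
        # Receiver: linear MMSE estimate of err from y, then correct.
        estimate -= math.sqrt(snr * alpha) / (1.0 + snr) * y
        alpha /= 1.0 + snr                   # error variance shrinks by (1 + snr)
    # Decode to the nearest message point
    return min(max(math.floor((estimate + 0.5) * num_msgs), 0), num_msgs - 1)

def sk_error_prob(rate, snr, n):
    """Upper bound 2Q(x) on the error probability, x = half spacing / error std."""
    num_msgs = 2 ** int(n * rate)
    sigma_n = math.sqrt((1.0 / 12.0) / snr / (1.0 + snr) ** (n - 1))
    x = (0.5 / num_msgs) / sigma_n
    return math.erfc(x / math.sqrt(2.0))     # equals 2Q(x)

rng = random.Random(0)
snr, n, rate = 1.0, 20, 0.25                 # C = 0.5 bit/use at snr = 1, so rate < C
num_msgs = 2 ** int(n * rate)
errors = sum(sk_decode(m, num_msgs, snr, n, rng) != m
             for m in range(num_msgs) for _ in range(20))
print("simulated errors:", errors)
print([sk_error_prob(rate, snr, k) for k in (8, 12, 16)])
```

The argument x grows like 2^(n(C − R)) and 2Q(x) decays like exp(−x²/2), so log(1/Pe) itself grows exponentially in n: the double-exponential decay listed above.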

Backing off from: Perfect Feedback

[Figure: message $m \in \{1, \ldots, e^{nR}\}$ → encoder → $X_i$ → additive $N(0,1)$ noise → $Y_i$ → decoder; a feedback module returns $U_i$ to the encoder]

• [Shannon 59]: No feedback: $\Pr\{\hat m \neq m\} \approx e^{-O(n)}$

• [Pinsker, Gallager et al.]: Perfect feedback (infinite rate, no noise): $\Pr\{\hat m \neq m\} \le \exp(-\exp(\cdots\exp(O(n))\cdots))$, with $O(n)$ nested exponentials

• [Kim et al. 07/10]: Feedback with AWGN: $\Pr\{\hat m \neq m\} \approx e^{-O(n)}$

• [Polyanskiy et al. 10]: Noiseless feedback reduces the minimum energy per bit when $nR$ is fixed and $n \to \infty$

Gaussian Channel with Rate-Limited Feedback

[Figure: encoder → $X_i$ → additive $N(0,1)$ noise → $Y_i$ → decoder → $\hat m$; a feedback module returns $U_i$ to the encoder]

Feedback is rate-limited; no noise

• Constraints:

$$E\left[\sum_{i=1}^{n} |X_i|^2\right] \le nP$$

• Objective: choose the encoder and f to maximize the decay rate of the error probability $P_e(n, R, R_{FB}, P)$

A super-exponential error probability is achievable if and only if $R_{FB} \ge R$:

• $R_{FB} < R$: The error exponent is finite but higher than the no-feedback error exponent:

$$P_e(n, R, R_{FB}, P) \le e^{-n(E_{NoFB}(R) + R_{FB} + o(1))}$$

• $R_{FB} \ge R$: Double exponential error probability:

$$P_e(n, R, R_{FB}, P) \le e^{-e^{O(n)}}$$

• $R_{FB} \ge LR$: L-fold exponential error probability:

$$P_e(n, R, R_{FB}, P) \le \underbrace{\exp(-\exp(\cdots\exp}_{L}(O(n))\cdots))$$

Feedback under Energy/Delay Constraint

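[Figure: an m-bit encoder and an m-bit decoder connected by a forward channel and a feedback channel; the message is $S = b_1 \ldots b_m$]

• Protocol in round $t$:

– Forward: the encoder sends $S$ with energy $E_t$; the decoder's estimate satisfies $\Pr\{\hat S_t \neq S\} = \varepsilon(E_t)$

– Feedback: the decoder sends back $\hat S_t$ with energy $E_t^{FB}$; the encoder's estimate $S_t$ of it satisfies $\Pr\{S_t \neq \hat S_t\} = \varepsilon(E_t^{FB})$

– If $S_t = S$, the encoder sends a termination alarm; otherwise it resends with energy $E_{t+1}$

– If the termination alarm is received, the decoder reports $\hat S_t$ as the decoded message

• Constraints: decoding delay $\le T$; total energy $\sum_{t=1}^{T} (E_t + E_t^{FB}) \le E_{tot}$

• Objective: choose $\{E_t, E_t^{FB}\}_{t=1}^{T}$ to minimize the overall probability of error $P_e(E_{tot}, T)$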

Feedback Gain under Energy/Delay Constraint

Depends on the error probability model $\varepsilon(\cdot)$

• Exponential error model: $\varepsilon(x) = \beta e^{-\alpha x}$

– Applicable when Tx energy dominates

– Feedback gain is high if total energy is large enough

– No feedback gain for energy budgets below a threshold

• Super-exponential error model: $\varepsilon(x) = \beta e^{-\alpha x^2}$

– Applicable when Tx and coding energy are comparable

– No feedback gain for energy budgets above a threshold

Backing off from: perfect feedback

• Memoryless point-to-point channels:

• Capacity unchanged with perfect feedback

• Simple linear scheme dramatically reduces error probability (Schalkwijk-Kailath: double exponential decay)

• Feedback reduces energy consumption

• Capacity of feedback channels largely unknown

– Unknown for general channels with memory and perfect feedback

– Unknown under finite rate and/or noisy feedback

– Unknown in general for multiuser channels

– Unknown in general for multihop networks

• ARQ is ubiquitous in practice

• Assumes channel errors

• Works well on finite-rate noisy feedback channels

• Reduces end-to-end delay

How to use feedback in wireless networks?

Output feedback

Channel state information (CSI)

Noisy/Compressed

Acknowledgements

Something else?

Interesting applications to neuroscience

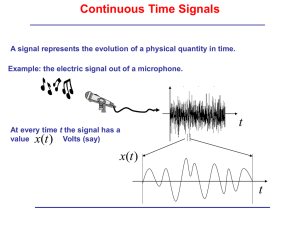

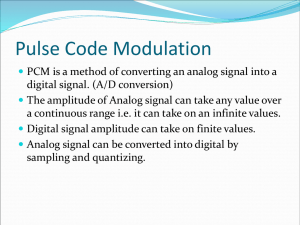

Backing off from: infinite sampling

[Figure: the channel followed by a sampling mechanism (rate fs) forms a “new” channel]

For a given sampling mechanism (i.e., a “new” channel):

What is the optimal input signal?

What is the tradeoff between capacity and sampling rate?

What known sampling methods lead to highest capacity?

What is the optimal sampling mechanism?

Among all possible (known and unknown) sampling schemes

Capacity under Sampling w/Prefilter

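[Figure: $x(t)$ passes through the channel $h(t)$, additive noise is added, and the result is filtered by the prefilter $s(t)$ and sampled at $t = nT_s$ to give $y[n]$]

Theorem: The channel capacity is determined by water-filling over the “folded” SNR, i.e., the aliased channel SNR filtered by $S(f)$; the prefilter suppresses aliasing.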
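The water-filling step in this theorem can be sketched on a discretized spectrum: treat each frequency bin as a parallel Gaussian channel whose gain g_i is the folded SNR per unit power in that bin (assumed already computed), and choose powers p_i = max(ν − 1/g_i, 0) with Σ p_i = P. Bin-width scaling is omitted and all names are hypothetical.

```python
import math

def water_fill(gains, total_power):
    """Water-filling over parallel Gaussian channels.

    gains: positive SNR per unit power for each bin (e.g., a folded SNR).
    Returns (powers, water_level) with the powers summing to total_power.
    """
    if total_power <= 0 or not gains or min(gains) <= 0:
        raise ValueError("need positive power and positive gains")
    inv = sorted(1.0 / g for g in gains)       # "floor" height of each bin
    for k in range(len(inv), 0, -1):           # try k active bins, largest k first
        level = (total_power + sum(inv[:k])) / k
        if level > inv[k - 1]:                 # every active bin gets positive power
            break
    powers = [max(level - 1.0 / g, 0.0) for g in gains]
    return powers, level

def parallel_capacity(gains, total_power):
    """Sum of 0.5*log2(1 + g_i p_i) over bins, in bits per real channel use."""
    powers, _ = water_fill(gains, total_power)
    return sum(0.5 * math.log2(1.0 + g * p) for g, p in zip(gains, powers))

powers, nu = water_fill([1.0, 0.5, 0.1], 1.0)
print(powers, nu)  # prints [1.0, 0.0, 0.0] 2.0
```

With little power only the strongest bin is used; as the power grows the water level rises and weaker bins switch on.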

Capacity not monotonic in fs

Consider a “sparse” channel: capacity is not monotonic in fs!

Single-branch sampling fails to exploit channel structure

Filter Bank Sampling

[Figure: the noisy channel output ($x(t)$ through $h(t)$ plus noise) feeds $m$ branches; branch $i$ applies the filter $s_i(t)$ and samples at $t = n(mT_s)$ to produce $y_i[n]$, for $i = 1, \ldots, m$]

Theorem: Capacity of the sampled channel using a bank of m filters with aggregate rate fs

Similar to MIMO; no combining!

Equivalent MIMO Channel Model

[Figure: for each $f$, the aliased input components $X(f - kf_s)$ pass through $H(f - kf_s)$, noise $N(f - kf_s)$ is added, and branch $i$ applies $S_i(f - kf_s)$ to produce $Y_i(f)$, for $i = 1, \ldots, m$]

Theorem 3: The channel capacity of the sampled channel using a bank of m filters with aggregate rate fs is obtained by pre-whitening, MIMO decoupling, and water-filling over singular values.

Joint Optimization of Input and Filter Bank

At each frequency, selects the m branches with the m highest SNRs

Example (bank of 2 branches):

[Figure: for each $f$, among the aliased components $X(f - kf_s)$ with channel $H(f - kf_s)$, noise $N(f - kf_s)$, and filter $S(f - kf_s)$, branch 1 keeps the highest-SNR component and branch 2 the 2nd highest; low-SNR components are suppressed]

Capacity monotonic in fs
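The selection rule can be sketched per frequency. Assuming each of the m branches samples at rate fs/m (sampling instants t = n(mT_s)), the components that alias onto a base frequency f are f − k·fs/m; the sketch ranks them by SNR |H|²/N and keeps the m best, which would then feed the water-filling step. The SNR function, the finite range of k, and all names are hypothetical.

```python
def select_aliases(snr_of, f, fs, m, k_range=range(-8, 9)):
    """Return the m alias indices k whose components f - k*fs/m have the highest SNR.

    snr_of(freq) -> |H(freq)|^2 / N(freq) is an assumed, user-supplied function.
    """
    spacing = fs / m  # per-branch sampling rate (assumption: aggregate rate fs over m branches)
    ranked = sorted(k_range, key=lambda k: snr_of(f - k * spacing), reverse=True)
    return ranked[:m]

def sparse_snr(freq):
    """Hypothetical 'sparse' channel: two strong bands, weak elsewhere."""
    if 0.0 <= freq < 1.0:
        return 10.0
    if 2.0 <= freq < 3.0:
        return 5.0
    return 0.1

# Aggregate rate fs = 4 with m = 2 branches: the aliases of f = 0.5 lie at 0.5 - 2k
print(select_aliases(sparse_snr, 0.5, 4.0, 2))  # prints [0, -1]
```

Here index 0 picks the component at 0.5 (SNR 10) and index −1 the one at 2.5 (SNR 5); all other aliases are suppressed.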


Can we do better?

Sampling with Modulator+Filter (1 or more)

[Figure: the noisy channel output ($x(t)$ through $h(t)$ plus noise) passes through a modulator $q(t)$ and filters $p(t)$ and $s(t)$, then is sampled to give $y[n]$]

Theorem: Sampling with a bank of modulator+filter branches achieves the same capacity as filter-bank sampling

[Figure: single-branch modulator+filter sampler compared with an m-branch filter bank with outputs $y_1[n], \ldots, y_m[n]$]

Theorem: This is optimal among all time-preserving nonuniform sampling techniques of rate fs

Backing off from: Infinite processing power

Is Shannon capacity still a good metric for system design?

Our approach: model power consumption via a network graph, with power consumed in nodes and wires

Extends early work of El Gamal et al.'84 and Thompson'80

Fundamental area-time-performance tradeoffs

For encoding/decoding “good” codes, the tradeoff involves the area occupied by wires and the number of encoding/decoding clock cycles

Stay away from capacity! Close to capacity we have:

Large chip area

More time

More power

Total power diverges to infinity!

Regular LDPCs closer to bound than capacity-approaching LDPCs!

Need novel code designs with short wires, good performance

Conclusions

Information theory asymptopia has provided much insight and decades of sublime delight to researchers

Backing off from infinity is required for some problems to gain insight and fundamental bounds

New mathematical tools and new ways of applying conventional tools are needed for these problems

Many interesting applications in finance, biology, neuroscience, …