Non-Informative Dirichlet Score for learning Bayesian networks

advertisement

Non-Informative Dirichlet Score

for learning Bayesian networks

Maomi Ueno and Masaki Uto

University of ElectroCommunications, Japan

BDe(Bayesian Dirichlet equivalence)

Heckerman, Geiger and Chickering (1995)

Non-informative prior : BDeu

As Buntine (1991) described ijk /(ri qi )

is considered to be a special case of the

BDe metric. Heckerman et al. (1995)

called this special case “BDeu”.

gh

Problems of BDeu

BDeu is the most popular Bayesian network

score.

However, recent reports have described that

learning Bayesian network is highly

sensitive to the chosen equivalent sample

size (ESS) in the Bayesian Dirichlet

equivalence uniform (BDeu) and this often

engenders some unstable or undesirable

results.

(Steck and Jaakkola, 2002, Silander,

Kontkanen and Myllymaki, 2007).

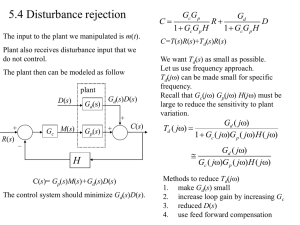

Theorem 1(Ueno 2010) When α is sufficiently

large, the log-ML is approximated

asymptotically as

1 N qi ri ri 1 nijk

log p( X | g ) H ( g , ) H ( g , , X )

log 1

O(1).

2 i 1 j 1 k 1 ri

ijk

Log-posterior

The number of

Parameters

The difference between

prior and data

As the two structures become equivalent, the

penalty term is minimized with the fixed ESS.

Conversely, as the two structures become

different, the term increases.

Moreover, α

determines the magnitude of the user‘s prior

belief for a hypothetical structure. Thus, the

mechanism of BDe makes sense to us when we

have approximate prior knowledge.

BDeu is not non-informative!!

Ueno (2011) showed that the prior of BDeu does

not represent ignorance of prior knowledge, but

rather a user’s prior belief in the uniformity of the

conditional distribution.

The results further imply that the optimal ESS

becomes large/small when the empirical

conditional distribution becomes uniform/skewed.

This main factor underpins the sensitivity of BDeu

to ESS.

Marginalization of ESS α

• Silander, Kontkanen and Myllymaki (2007)

proposed a learning method to marginalize

the ESS of BDeu. They averaged BDeu values

with increasing ESS from 1 to 100 by 1.0 .

• Cano et al. (2011) proposed averaging a wide

range of different chosen ESSs: ESS > 1:0, ESS

>> 1:0, ESS < 1:0, ESS << 1:0.

Why do we use Bayesian approach ?

The previous works eliminate out the ESS.

However,

One of most important features of

Bayesian approach is using prior

knowledge to augment the data.

→

When the score has a non-informative

prior, the ESS is expected to work

effectively as actual pseudo-samples to

augment the data.

Main idea

To obtain a non-informative prior,

we assume all possible hypothetical structures

as a prior belief of BDe because a problem of

BDeu is that it assumes only a uniform

distribution as a prior belief.

NIP-BDe

Definition 1. NIP-BDe (Non Informative Prior Bayesian Dirichlet

equivalence) is defined as

p( X | , g )

h

p

(

g

)

p

(

X

|

,

g

,

g

)

g h G

Calculation method

Our method has the following steps:

1. Estimate the conditional probability parameters set

g

g { ijk

}, (i 1, , N , j 1, , qi , k 1, , ri 1)

h

h

given gh from data.

p

2. Estimate the joint probability ( xi k , g

First, we estimate the conditional probability

parameters set given gh as

i

gh

ijk

j | g, g )

1

gh

nijk

gh

rq

i i

,

h

1

g

n

ij

gh

qi

Next, we calculate the estimated joint probability

as shown below.

p( xi k , gi j | g h , g )

h

p( x1 , , xi , , xN | g h , g )

h

xl x i g

i

h

Expected log-BDe

In practice, however, the Expected log-Bde is

difficult to calculate because the product of

multiple probabilities suffers serious

computational problems. To avoid this, we

propose an alternative method, Expected logBDe, as described below.

Eg h G log p( X | , g , g h )

h

p

(

g

)

log

p

(

X

|

,

g

,

g

)

g h G

Advantage

The optimal ESS of BDeu becomes large/small when

the empirical conditional distribution becomes

uniform/skewed because its hypothetical

structure assumes a uniform conditional

probabilities distribution and the ESS adjusts the

magnitude of the user’s belief for a hypothetical

structure.

However, the ESS of full non-informative prior is

expected to work effectively as actual pseudosamples to augment the data, especially when the

sample size is small, regardless of the uniformity

of empirical distribution.

Learning algorithm using dynamic programming

We employ the learning algorithm of (Silander and Myllymaki, 2006)

using dynamic programming. Our algorithm comprises four steps:

1. Compute the local Expected log-Bde scores for all possible

N 2 N 1 ( xi , g ) pairs.

2. For each variable xi x , find the best parent set in parent

candidate set { g } for all g x / xi ,

3. Find the best sink for all 2N variables and the best ordering.

4. Find the optimal Bayesian network

Only Step 1 is different from the procedure described by Silander et

al., 2006. Algorithm 1 gives a pseudo-code for computing the local

Expected log-BDe scores.

i

i

i

Algorithm

Computational costs

• The time complexity of the Algorithm 1 is

O(N222(N-1)exp(w)) given an elimination order

of width w. The traditional methods (BDeu,

AIC, BIC, and so on) run in O(N22(N-1)).

• However, the required memory for the

proposed computation is equal to that of the

traditional scores.

Experiments

Conclusions

This paper presented a proposal for a

noninformative Dirichlet score by

marginalizing the possible hypothetical

structures as the user’s prior belief.

The results suggest that the proposed method is

effective especially for a small sample size.