Particle Systems on GPU

advertisement

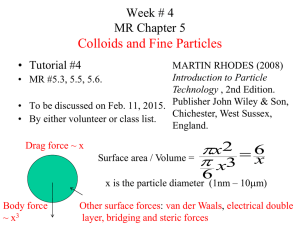

Youngho Kim CIS665: GPU Programming Building a Million Particle System: Lutz Latta UberFlow - A GPU-based Particle Engine: Peter Kipfer et al. Real-Time Particle Systems on the GPU in Dynamic Environments: Shannon Drone GPU-based Particle Systems for Illustrative Volume Rendering: R.F.P. van Pelt et al. Water Effects Nvdia DirectX10, Soft Particle Building a Million Particle System: SIGGRAPH 2004 Lutz Latta One of the original uses was in the movie Star Trek II William Reeves (implementor) Each Particle Had: • Position • Velocity • Color • Lifetime • Age • Shape • Size • Transparency Particle system from Star Trek II: Wrath of Khan Spacewar: 1962 Uses pixel clouds as Explosions Random motion Asteroids: 1978 Uses short moving vectors for Explosions Probably first “physical” particle simulation in CG/games Stateless Particle System ◦ A particle data is computed from birth to death by a closed form function defined by a set of start values and a current time. ◦ Does not react to dynamic environment Fast State Preserving Particle System ◦ Uses numerical iterative integration methods to compute particle data from previous values and changing environmental descriptions. Movie Clip Stateless Simulation – Computed particle data Particle Birth Rendering by closed form functions ◦ Notime reaction dynamically changing environment! Upload of birth &on initial Set global function parameter as Values to dynamic vertex program constants ◦ No Storage ofbuffer varying data (sim.vertex in vertex prog) Render point sprites/triangles/quads With particle system vertex program Generation – Particles are generated randomly within a predetermined location Particle Dynamics – The attributes of a particle may vary over time. Based upon equations depending on attribute. Extinction Premature Extinction: ◦ Age – Time the particle has been alive ◦ Lifetime – Maximum amount of time the particle can live. ◦ Running out of bounds ◦ Hitting an object (ground) ◦ Attribute reaches a threshold (particle becomes transparent) Rendered as a graphics primitive (blob) Particles that map to the same pixels are additive ◦ Sum the colors together ◦ No hidden surface removal ◦ Motion blur is rendered by streaking based on the particles position and velocity CPU based particle systems are limited to about 10,000 particles per frame in most game applications Limiting Factor is CPU-GPU communication ◦ PCI Express transfer rate is about 3 gigabytes/sec Rendering Passes: Process Birth and Deaths Update Velocities Update Positions Sort Particles (optional, takes multiple passes) Transfer particle positions from pixel to vertex memory Render particles Two Textures (position and velocity) ◦ Each holds an x,y,z component ◦ Conceptually a 1d array ◦ Stored in a 2d texture Use texture pair and double buffering to compute new data from previous data Storage Types ◦ Velocity can be stored using 16bit floats Size, Color, Opacity, etc. ◦ Simple attributes, can be added later, usually computed using the stateless method Birth = allocation of a particle Death = deallocation of a particle ◦ Associate new data with an available index in the attributes textures ◦ Serial process – offloaded to CPU ◦ Initial particle data determined on CPU also ◦ Must be processed on CPU and GPU CPU – frees the index associated with particle GPU – extra pass to move any dead particles to unseen areas (i.e. infinity, or behind the camera) In practice particles fade out or fall out of view (Clean-up rarely needs to be done) Stack 5 2 30 10 15 26 11 19 12 6 33 Easier! Heap 2 10 5 6 12 11 15 26 19 30 33 Optimize heap to always return smallest available index Better! Velocity Operations Global Forces ◦ Wind ◦ Gravity Local Forces Velocity Damping Collision Detection ◦ Attraction ◦ Repulsion F = Σ f0 ... fn F = ma a = F/m If m = 1 F=a Stokes Law of drag force on a sphere Fd = 6Πηr(v-vfl) η = viscosity r = radius of sphere C = 6Πηr (constant) v = particle velocity vfl = flow velocity Sample Flow Field Damping ◦ Imitates viscous materials or air resistance ◦ Implement by downward scaling velocity Un-damping ◦ Self-propelled objects (bee swarms) ◦ Implement by upward scaling velocity Collisions against simple objects ◦ Walls ◦ Bounding Spheres Collision against complex objects ◦ Terrain ◦ Complex objects ◦ Terrain is usually modeled as a texture-based height field vn = (vbc * n)vbc vt = vbc – vn Vn vbc = velocity before collision vn = normal component of velocity vt = tangental component of velocity V = (1-μ)vt – εvn μ = dynamic friction (affects tangent velocity) ε = resilience (affects normal velocity) V Vt Integrate acceleration to velocity: ◦ v = vp + a⋅ ∆ t Integrate velocity to position: ◦ p= pp + v⋅ ∆ t Computationally simple Needs storage of particle position and velocity Integrate acceleration to position: ◦ p = 2pp − ppp a⋅t2 ◦ ppp: position two time steps before Needs no storage of particle velocity Time step needs to be (almost) constant Explicit manipulations of velocity (e.g. for collision) impossible Odd-even merge sort ◦ Good for GPU because it always runs in constant time for a given data size Inefficient to check whether sort is done on GPU ◦ Guarantees that with each iteration sortedness never decreases Full sort can be distributed over 20-50 frames so it doesn’t slow down system too much (acceptable visual errors) UberFlow A GPU-based Particle Engine: SIGGRAPH 2004 Peter Kipfer et al. Major bottleneck ◦ Transfer of geometry to graphics card Process on GPU if transfer is to be avoided ◦ Need to avoid intermediate read-back also Requires dedicated GPU implementations Perform geometry handling for rendering on GPU Particle advection ◦ Motion according to external forces and 3D force field Sorting ◦ Depth-test and transparent rendering ◦ Spatial relations for collision detection Rendering ◦ Individually colored points ◦ Point sprites Simple two-pass method using two vertex arrays in double-buffer mode ◦ Render quad covering entire buffer ◦ Apply forces in fragment shader Render target Bind to texture Buffer 0 Screen Pass 1: integrate Bind to render target Buffer 1 Pass 2: render Bind to vertex array Additional buffers for state-full particles Store velocity per particle (Euler integration) Keep last two positions (Verlet integration) Simple: Collision with height-field stored as 2D texture ◦ ◦ ◦ ◦ RGB = [x,y,z] surface normal A = [w] height Compute reflection vector Force particle to field height Essential for natural behavior ◦ Full search is O(n²), not practicable ◦ Approximate solution by considering only neighbors ◦ Sort particles into spatial structure Staggered grid misses only few combinations Check m neighbors to the left/right ◦ Collision resolution with first collider (time sequential) ◦ Only if velocity is not excessively larger than integration step size Real-Time Particle Systems on the GPU in Dynamic Environments: SIGGRAPH 2007 Shannon Drone Storage requirements Integrating the equations of motion Saving particle states Changing behaviors Need to store immediate particle state (position, velocity, etc) Option 1: Store this data in a vertex buffer ◦ Each vertex represents the immediate state of the particle ◦ Particles are store linearly in the buffer Option 2: Store this data in a floating point texture array ◦ First array slice stores positions for all particles. ◦ Next array slice stores velocities, etc. Accuracy depends on the length of time step Use Euler integration for these samples Runge-Kutta based schemes can be more accurate at the expense of needing to store more particle state Integration on the CPU can use read-modifywrite operations to update the state in-place This is illegal on the GPU (except for nonprogramming blending hardware) Use double buffering Ping-pong between them Particles are no longer affixed to a predestined path of motion as in parametric systems Changing an individual velocity or position will change the behavior of that particle. This is the basis of every technique in this theory N-Body problems Force splatting for N2 interactions Gravity simulation Flocking particles on the GPU Every part of the system has the possibility of affecting every other part of the system Space partitioning can help Treating multiple parts of a system at a distance as one individual part can also help Parallelization can also help (GPU) Our goal is to project the force from one particle onto all other particles in the system Create a texture buffer large enough to hold forces for all particles in the simulation Each texel holds the accumulated forces acting upon a single particle It is O(N2), but it exploits the fast rasterization, blending, and SIMD hardware of the GPU It also avoids the need to create any space partitioning structures on the GPU Uses force splatting to project the gravitational attraction of each particle onto every other particle Handled on the GPU ◦ CPU only sends time-step information to the GPU ◦ CPU also handles highlevel switching of render targets and issuing draw calls Uses basic boids behaviors [Reynolds87,Reynolds99] to simulation thousands of flocking spaceships on the GPU ◦ ◦ ◦ ◦ Avoidance Separation Cohesion Alignment Cohesion and Alignment Unlike N-Body problems, Cohesion and Alignment need the average of all particle states ◦ Cohesion steers ships toward the common center. ◦ Alignment steers ships toward the common velocity We could average all of the positions and velocities by repeatedly down-sampling the particle state buffer / texture However, the graphics system can do this for us Graphics infrastructure can already perform this quick down-sampling by generating mip maps The smallest mip in the chain is the average of all values in the original texture Extend 2D partitioned grid [Lutz04] to 3D by rendering the scene into a volume texture Instance the geometry across all slices For each instance we clip the geometry to the slice For each voxel in the volume texture stores the plane equation and velocity of the scene primitive Once having the position buffer, it can gather paint splotches Use a gather pixel shader to traverse the position buffer For each particle in the buffer, the shader samples its position and determines if it can affect the current position in the position buffer If it can, the color of the particle is sent to the output render target, which doubles as the mesh texture GPU-based Particle Systems for Illustrative Volume Rendering: IEEE/ EG Symposium on Volume and PointBased Graphics 2008 R.F.P. van Pelt et al. Interactive GPU-based illustrative framework, called VolFlies-GPU, for rendering volume data, exploiting parallelism in both graphics hardware and particle systems User-configurable particle systems to produce stylized renderings from the volume data, imitating traditional pen and ink drawings Achieve real-time interaction and prompt parametrization of the illustrative styles, using an intuitive GPGPU paradigm GPGPU paradigm using transform feedback Intensive use of both vertex shaders and geometry shaders, while fragment shaders are completely discarded VolFliesGPU framework comprises an interactive illustrative visualization framework for real-time penand-ink style rendering of volume data Stippling: Surface shading by changing the stipple scale Hatching: Conveying shape by tracing hatch stroke in one or two directions. Contours Curvature-based hatching with contours Direction-based hatching on cone-splatted bone tissue, combined with a scale-based stippled skin iso-surface with contours. Similar styles, with added contours on the bone surface Interactive framerates The particle system is useful for graphic effects for movies, games, and medical visualization The particle system can be effectively operated on GPU processing http://www.seas.upenn.edu/~cis665/LECTURES/Lecture6.ppt http://wwwcg.in.tum.de/Research/data/uf_sig04_slides.pdf http://www.2ld.de/gdc2004/ http://wwwcg.in.tum.de/Research/Publications/UberFLOW http://ati.amd.com/developer/gdc/2007/Drone-RealTime_Particles_Systems_on_the_GPU_in_Dynamic_Environments(Siggraph0 7).pdf http://delivery.acm.org/10.1145/1290000/1281670/p80drone.pdf?key1=1281670&key2=2779414421&coll=GUIDE&dl=GUIDE&CF ID=39073315&CFTOKEN=91418908 http://yp.wtb.tue.nl/pdfs/9587.pdf