Slide - University of Southern California

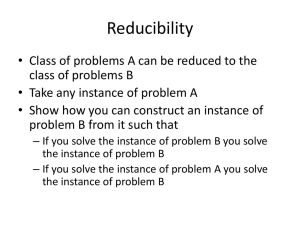

Approximation Algorithms for Orienteering and Discounted-Reward TSP
Blum, Chawla, Karger, Lane, Meyerson, Minkoff
CS 599: Sequential Decision Making in Robotics
University of Southern California, Spring 2011

TSP: Traveling Salesperson Problem
• Graph $G = (V, E)$
• Find a tour (path) of shortest length that visits each vertex in $V$ exactly once
• Corresponding decision problem
  – Given a tour of length $L$, decide whether a tour of length less than $L$ exists
  – NP-complete
• Highly likely that the worst-case running time of any algorithm for TSP will be exponential in $|V|$

Robot Navigation
• Can't go everywhere; resources are limited
• Many practical tasks don't require completeness but do require immediacy, or at least some notion of timeliness/urgency (e.g. some vertices are short-lived and the robot needs to get to them quickly)

Prizes, Quotas and Penalties
• Prize Collecting Traveling Salesperson Problem (PCTSP)
  – A known prize (reward) is available at each vertex
  – Quota: the total prize to be collected on the tour (given)
  – Not visiting a vertex incurs a known penalty
  – Minimize the total travel distance plus the total penalty, while starting from a given vertex and collecting the pre-specified quota
  – Best algorithm is a 2-approximation
• Quota TSP
  – All penalties are set to zero
  – Special case is k-TSP, in which all prizes are 1 ($k$ is the quota)
  – k-TSP is strongly tied to the problem of finding a tree of minimum cost spanning any $k$ vertices in a graph, called the k-MST problem
• Penalty TSP: no required quota, only penalties
• All of these admit a budget version, where a budget is given as input and the goal is to find the largest k-TSP (or other) solution whose cost is no more than the budget

Orienteering
• Orienteering: tour with maximum possible reward whose length is less than a pre-specified budget $B$

orienteering |ˌôriənˈti(ə)riNG| noun: a competitive sport in which participants find their way to various checkpoints across rough country with the aid of a map and compass, the winner being the one with the lowest elapsed time. ORIGIN 1940s: from Swedish orientering.

Approximating Orienteering
• Any algorithm for PC-TSP extends to unrooted Orienteering
• Thus the best solution for unrooted Orienteering is at worst a 2-approximation
• No previous algorithm for constant-factor approximation of rooted Orienteering

Discounted-Reward TSP
• Undirected weighted graph
• Edge weights represent transit time over the edge
• Prize (reward) $\pi_v$ on vertex $v$
• Find a path visiting each vertex $v$ at time $t_v$ that maximizes $\sum_v \pi_v \gamma^{t_v}$

Discounting and MDPs
• Encourages early reward collection, important if conditions might change suddenly
• Optimal strategy is a policy (a mapping from states to actions)
• Markov decision process
  – Goal is to maximize the expected total discounted reward in a stochastic action setting (can be solved in polynomial time)
  – Can visit states multiple times
• Discounted-Reward TSP
  – Visit a state only once (reward available only on first visit)
  – Deterministic actions

Overall Strategy
• Approximate the optimum difference between the length of a prize-collecting path and the length of the shortest path between its endpoints
• Paper gives
  – An algorithm that provably gives a constant-factor approximation for this difference
  – A formula for the approximation
• The results mean that constant-factor approximations exist (and can be computed) for Orienteering and Discounted-Reward TSP

Path Excess
• Excess of a path $P$ from $s$ to $t$: $\epsilon(P) = d^P(s,t) - d(s,t)$
• The minimum-excess path of total prize $\Pi$ is also the minimum-cost path of total prize $\Pi$
• An $(s,t)$ path approximating the optimal excess $\epsilon$ by a factor $\alpha$ will have length (by definition) $d(s,t) + \alpha\epsilon \le \alpha\,(d(s,t) + \epsilon)$
• Thus a path that approximates min-excess by $\alpha$ will also approximate the minimum-cost path by $\alpha$

Results

| Problem | Approximation factor | Source |
| --- | --- | --- |
| k-TSP | $\alpha_{CC}$ | Known from prior work (best value is 2) |
| Min-excess | $\alpha_{EP} = \frac{3}{2}\alpha_{CC} - 1$ | This paper |
| Orienteering | $1 + \alpha_{EP}$ | This paper |
| Discounted-Reward TSP | $e(\alpha_{EP} + 1)$ (roughly) | This paper |

In the subscripts, the first letter is the objective (cost, prize, excess, or discounted prize) and the second is the structure (path, cycle, or tree).

Min Excess Algorithm
• Let $P^*$ be the shortest path from $s$ to $t$ with $\Pi(P^*) \ge k$
• Let $\epsilon(P^*) = d(P^*) - d(s,t)$
• The min-excess algorithm returns a path $P$ of length $d(P) \le d(s,t) + \alpha_{EP}\,\epsilon(P^*)$ with $\Pi(P) \ge k$, where $\alpha_{EP} = \frac{3}{2}\alpha_{CC} - 1$

Orienteering Algorithm
• Compute a maximum-prize path of length at most $D$ starting at vertex $s$
1. Perform a binary search over (prize) values $k$
2. For each vertex $v$, compute a min-excess path from $s$ to $v$ collecting prize $k$
3. Find the maximum $k$ such that there exists a $v$ where the min-excess path returned has length at most $D$; return this value of $k$ (the prize) and the corresponding path

Discounted-Reward TSP Algorithm
1. Re-scale all edge lengths so that $\gamma = 1/2$
2. Replace each prize by the prize discounted by the shortest path to that node: $\pi'_v = \gamma^{d_v}\pi_v$
3. Call this modified graph $G'$
4. Guess $t$: the last node on the optimal path $P^*$ with excess less than $\epsilon$
5. Guess $k$: the value of $\Pi(P^*_t)$
6. Apply the min-excess approximation algorithm to find a path $P$ collecting scaled prize $k$ with small excess
7. Return this path as the solution
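
The path excess and discounted-reward definitions from the slides can be checked numerically on a toy graph. The following is a minimal sketch, not code from the paper: the adjacency-dict graph encoding, the helper names (`shortest_dist`, `path_length`, `excess`, `discounted_reward`), and the example graph `G` are all assumptions made for illustration. It assumes the discount factor is 1/2 per unit of travel time and that a prize is collected only on the first visit.

```python
import heapq

def shortest_dist(graph, s):
    """Dijkstra on an undirected weighted graph given as {u: {v: weight}}."""
    dist = {s: 0.0}
    heap = [(0.0, s)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry
        for v, w in graph[u].items():
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist

def path_length(graph, path):
    """Total edge weight along a path given as a list of vertices."""
    return sum(graph[u][v] for u, v in zip(path, path[1:]))

def excess(graph, path):
    """Path length minus the shortest-path distance between its endpoints."""
    s, t = path[0], path[-1]
    return path_length(graph, path) - shortest_dist(graph, s)[t]

def discounted_reward(graph, prize, path, gamma=0.5):
    """Sum of prize[v] * gamma**t_v, where t_v is the first arrival time at v."""
    t, total, seen = 0.0, 0.0, set()
    for i, v in enumerate(path):
        if i > 0:
            t += graph[path[i - 1]][v]
        if v not in seen:  # reward is available only on the first visit
            seen.add(v)
            total += prize.get(v, 0.0) * gamma ** t
    return total

# Hypothetical triangle graph: the detour through "a" adds one unit of excess.
G = {
    "s": {"a": 1.0, "t": 1.0},
    "a": {"s": 1.0, "t": 1.0},
    "t": {"s": 1.0, "a": 1.0},
}
PRIZE = {"a": 4.0, "t": 8.0}

print(excess(G, ["s", "a", "t"]))
print(discounted_reward(G, PRIZE, ["s", "a", "t"]))
```

On this toy graph the detour path and the direct edge from `s` to `t` happen to collect the same discounted reward, which illustrates how discounting trades extra prize against later arrival.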
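
The three steps of the Orienteering Algorithm (binary search over the prize $k$, a min-excess query for every end vertex, keep the largest feasible $k$) can be sketched as below. This is an illustration under stated assumptions rather than the paper's implementation: `min_length_path` is a hypothetical brute-force stand-in for the min-excess approximation algorithm (exact, exponential time, simple paths only), prizes are assumed to be non-negative integers, and the graph encoding and example data are invented. Because the stand-in is exact, the sketch shows the search structure only, not the approximation factor.

```python
def min_length_path(graph, prize, s, t, k):
    """Brute-force stand-in for the min-excess oracle (assumption, tiny graphs only).

    Returns (length, path) for the shortest simple s-t path collecting prize >= k,
    or (inf, None) if no such path exists. For fixed endpoints, minimizing length
    and minimizing excess are the same thing.
    """
    best = (float("inf"), None)

    def dfs(u, length, collected, path):
        nonlocal best
        if length >= best[0]:
            return  # cannot improve on the best path found so far
        if u == t:
            if collected >= k:
                best = (length, list(path))
            return  # a simple path cannot leave t and come back
        for v, w in graph[u].items():
            if v not in path:
                path.append(v)
                dfs(v, length + w, collected + prize.get(v, 0), path)
                path.pop()

    dfs(s, 0, prize.get(s, 0), [s])
    return best

def orienteering(graph, prize, s, D):
    """Binary search for the largest prize k reachable from s within length D."""
    lo, hi = 0, sum(prize.values())
    best_k, best_path = 0, [s]
    while lo <= hi:
        k = (lo + hi) // 2
        feasible = None
        for v in graph:  # try every possible end vertex
            length, path = min_length_path(graph, prize, s, v, k)
            if path is not None and length <= D:
                feasible = path
                break
        if feasible is not None:
            best_k, best_path = k, feasible
            lo = k + 1  # try to collect more
        else:
            hi = k - 1  # quota too ambitious for budget D
    return best_k, best_path

# Hypothetical example graph and integer prizes.
G2 = {
    "s": {"a": 1, "b": 3},
    "a": {"s": 1, "b": 1},
    "b": {"s": 3, "a": 1, "c": 2},
    "c": {"b": 2},
}
PRIZE2 = {"s": 0, "a": 2, "b": 3, "c": 5}

print(orienteering(G2, PRIZE2, "s", 2))
print(orienteering(G2, PRIZE2, "s", 4))
```

The binary search relies on feasibility being monotone in $k$, which holds for this exact stand-in; with an approximate oracle the returned length is only guaranteed up to the excess bound, so the search would need the analysis from the paper to stay valid.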