Markov Chain

advertisement

Probabilistic Models

Consider our FMR triplet repeat finite state automaton...

a

S

g

1

c

2

g

3

c

4

g

5

g

6

c

7

t

8

g

c

While the above finite state automaton above is

non-deterministic, it is NOT probabilistic.

Sequences under test are either elements of the set of

“accepted” sequences, or are not (“rejected”)

What makes a model of DNA or protein probabilistic?

e

Probabilistic Models

Consider our FMR triplet repeat finite state automaton...

a

S

g

1

c

2

g

3

c

4

g

5

g

6

c

7

t

8

g

c

The key property of a probabilistic model is that

the model must define a probability distribution

over the set of possible sequences

Um, OK. What the heck does that actually mean?

Let’s review some more basic concepts of probability

e

Probability Distributions

First a formal description…

A probability distribution Pr{} on a sample space S is a

mapping from “events” of S to the real numbers such that

the following axioms are satisfied:

• Pr{A} ≥ 0 for any event A (i.e. probability of event A)

• Pr{S} = 1 (S here is the “certain event”)

• Pr{A ∪ B} = Pr{A} + Pr{B} for mutually exclusive events

An “event” is just some subset of the sample space

Probability Distributions

Sample spaces and events can conveniently be

conceptualized as a Venn diagram

Pr{A ∩ B} = ∅

S

A

B

Mutually independent events A and B here clearly don’t overlap (their

“intersection is null”), and the probability of their union is the sum of

their individual probabilities: Pr{A ∪ B} = Pr{A} + Pr{B}

Probability Distributions

Now an example

Consider the following sample space S:

S = {AA, AC, AG, AT, CA,CC,CG,CT,GA,GC,GG,GT,TA,TC,TG,TT}

• Each element s in S (s ∈ S) here is a dinucleotide.

• Sample space here is the set of all possible dinucleotides.

Pr

{UAi} = SPr{Ai}

i

i

The above formula is just a generalization of our axiom

Pr{A ∪ B} = Pr{A} + Pr{B} for mutually exclusive events

Probability Distributions

S

is represented by

the entire large

box.

We make the

“normalizing

assumption” that

its area is 1 in

order to satisfy

axiom 2

AA AC AG AT

CA CC CG CT

GA GC GG GT

TA TC TG TT

In this distribution, there are 16 possible mutually exclusive elemental

events, that together “paint” the entire sample space S without overlap.

This distribution has the additional property of being uniform

Probability Distributions

AA AC AG AT

A

Contains “C”

CA CC CG CT

GA GC GG GT

TA TC TG TT

B

No “C”

Events need not always be elemental. Here mutually exclusive events A

and B are shown and the axioms (as they must) are still satisfied

Discrete Probability Distributions

Many of the probability distributions we will be

considering have the additional property of being discrete*

Consider, for example, that for DNA strings of some

definite finite length n, there only 4n possibilities to

consider

Pr{A} =

SPr{s}

s∈A

A probability distribution is discrete if it is defined

over a finite or “countably infinite” sample space

* discrete, as in separate; distinct; individual. NOT discreet, as in “I won’t tell your wife what you did last Friday”

Unconditional Probability

Consider statements of the form:

“The chance of snow is 60%” or

“The test is 99% accurate”

These are unconditional statements, so we can say:

Pr{H} ≡ total probability

of

|H|

= set S of all

event H Pr{H}

(over the

|𝑆|

possible events)

Using intuitive notions of probability, we can express this

as the counts of events where H occurred, over the total

number of possible events in S

Unconditional Probability

Statements of this kind can conveniently be

conceptualized as a Venn diagram

S

H

Using intuitive notions of probability, we can express this

as the counts of events where H occurred, over the total

number of possible events in S

Conditional Probability

More often than not, we wish to express probabilities

conditionally. i.e. we specify the assumptions or

conditions under which it was measured

Conditional statements of probability take the form:

Pr{H|O} ≡ probability that event

H occurred in the subset of

cases where event O also

occurred

Conditional Probability

Again, this can be represented as a Venn diagram

S

H

O

The intersection H ∩ O represents events

where both H and O occurred

Conditional Probability

More often than not, we wish to express probabilities

conditionally. i.e. we specify the assumptions or

conditions under which it was measured

We can express the idea shown in the Venn diagram as:

Pr{H|O} =

|H ∩ O|

|𝑂|

Note that in the “universe of possibilities”, O has effectively

replaced S. Our probability for event H is now conditional

on the assumption that event O has already taken place.

Joint Probability

Let’s discuss the idea of joint probability, which

expresses the idea that both events O and H occur

In joint probability, the intersection of events are

expressed relative to the entire sample space S:

Pr{H∩O} =

|H ∩ O|

|𝑆|

A common alternative notation: P(H,O) = Pr{H∩O}

Joint Probability

Let’s discuss the idea of joint probability

i.e. both events O and H occur

There’s no reason we can’t rewrite this as:

Pr{H∩O} =

|H ∩ O |

|𝑆|

=

|H ∩ O| |O|

|𝑂|

|𝑆|

But wait… these new terms look awfully familiar!

Conditional & Joint Probability

Let’s discuss the idea of joint probability

i.e. both events O and H occur

Pr{H∩O} =

|H ∩ O |

|𝑆|

=Pr{H|O}Pr{O}

In other words, we can express joint probability in terms of

their separate conditional and unconditional probabilities!

This key result turns out to be exceedingly useful!

Conditional Probability

It gets even better! Dig it!

The intersection operator makes no assertion

regarding order

Pr{H∩O} =Pr{H|O}Pr{O}

=Pr{O|H}Pr{H}

We can therefore express everything only in terms of

reciprocal conditional and unconditional probabilities:

Pr{O|H}Pr{H} =Pr{H|O}Pr{O}

We’ll have a lot of fun* with this later!

*

So. Much. Fun.

Sequences considered probabilistically

What is the probability of some sequence of events x?

If it was generated from a probabilistic model it can

always be written:

The probability of observing sequence of states x...

P(x) = P(xL, xL-1, … ,x1)

...is equal to the probability that the XLth state was

whatever AND the XL-1th state was whatever else,

AND etc., etc. until we reach the first position

This is familiar as statement of joint probability

But how to proceed?

Sequences considered probabilistically

What is the probability of chain of events x?

We could try repeatedly applying our rules of

conditional probability…

P(x) = P(xL, xL-1, … ,x1)

= P(xL | xL-1, … ,x1) P(xL-1 | xL-2, … ,x1) ... P(x1)

The probability of events X AND Y happening is equal to

the probability of X happening given that Y has already

happened, times the probability of event Y

Remember our result P(X,Y) = P(X|Y) * P(Y)

Sequences considered probabilistically

What is the probability of chain of events x?

We could try repeatedly applying our rules of

conditional probability…

P(x) = P(xL, xL-1, … ,x1)

= P(xL | xL-1, … ,x1) P(xL-1 | xL-2, … ,x1) ... P(x1)

This is still incredibly yucky, but at least we

now have a separate probability term for

each position in our sequence

Is this the best we can do? Let’s digress for a moment…

Markov Chains

A traffic light considered as a sequence of states

Prg = 1

Pgy = 1

Pyr = 1

A trivial Markov chain – the transition probability between

the states is always 1

Markov Chains

A traffic light considered as a sequence of states

If we watch our traffic light, it will emit a string of states

In the case of a simple Markov model,

the state labels (e.g. green, red, yellow)

are the observable outputs of the process

Markov Chains

An occasionally malfunctioning traffic light!!

Pgy = 1

Prg =

.85

Pyg = .10

Pyr = .9

Pry = .15

The Markov property is that the probability of observing next a given

For this reason

westate

say that

a Markov

a memoryless

future

depends

only chain

on theiscurrent

state! process

Markov Chains

The Markov Property

English Translation:

The transition probability ast from state s to state t…

ast = P(xi = t | xi-1 = s)

…is equal to the probability that the ith state was t..

given that

that the immediately proceeding state (xi-1) was s

You should recognize this a statement of conditional probability!

Markov Chains

The Markov Property

ast = P(xi = t | xi-1 = s)

Another way to look at this is to say

that the conditional probability

distribution for the system’s next step

depends only on the current state, not

on any prior state or states.

There is no xi-2 in this equation!

Markov Chain

An occasionally malfunctioning traffic light!!

If we know the transition probabilities, we may already feel intuitively that

some outcomes are more likely to have been produced by our model than

others…..

But can we calculate the probability of an observed sequence?

Markov Chains

Can they help simplify our statement of probability for a sequence?

P(x) = P(xL | xL-1, … ,x1) P(xL-1 | xL-2, … ,x1) ... P(x1)

Remember, the key property of a Markov Chain is

that probability of symbol xi depends ONLY on the

value of preceding symbol Xi-1!!

Therefore:

P(x) = P(xL | xL-1) P(xL-1 | xL-2) ... P(x2|x1) P(x1)

L

P(x) = P(x1)

P

i=2

ax

i-1xi

Markov Chains

Scoring an arbitrary sequence

If we know the transition probabilities, our formula tells us

exactly how to calculate the probability of a sequence of

unknown origin:

L

P(x) = P(x1)

Pa

i=2

xi-1xi

Markov Chains

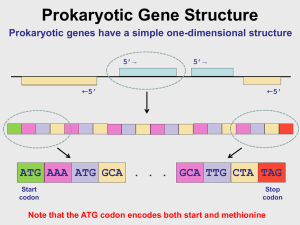

How about nucleic acid sequences?

A

C

G

T

No reason why nucleic acid sequences found in an

organism cannot be modeled using Markov chains

Markov Model

What do we need to probabilistically model DNA sequences?

States

Transition

probabilities

A

C

G

T

The states are the same for all organisms, so the transition

probabilities are the model parameters (θ) we need to estimate

Markov Models

What do we need to probabilistically model DNA sequences?

A

C

e

S

G

T

As with transformational grammars, we can also

model special start and end states

Markov Models

Which model best explains a newly observed sequence?

A

C

A

C

G

T

G

T

Organism A

Organism B

Each organism will have different transition probability

parameters, so you can ask “was the sequence more likely

to be generated by model A or model B?”

Markov Models

Using Markov chains for discrimination

=S log a

L

P(x|θA)

S(x) = log

P(x|θB)

i =1

aAxi-1xi

B

xi-1xi

A commonly used metric for discrimination using

Markov Chains is the Log-Odds ratio. Odds ratios

allow us to calibrate our thinking about a probability we

observe under one set of assumptions relative to a

probability given another set of assumptions

We must be careful in interpretation of log-odds ratios -- an

impressive seeming ratio will not necessarily be significant

Markov Models

Using Markov chains for discrimination

S(x) = log

P(x|θA)

P(x|θB)

=S

L

i =1

log

aAxi-1xi

aBxi-1xi

= log P(x|θA) - P(x|θB)

=S

L

i =1

log aAx

i-1xi

- log aBx

i-1xi

Note that we can rewrite this in several ways, and often one

form will be more convenient or efficient in a given context

More on this when we discuss numerical stability

Some unfinished business

Does a Markov Chain really define a probability distribution

over the entire space of sequences?

In other words, is the sum of the probability of all

possible sequences of any length equal to one?

This turns out to be just slightly slippery in the case

where we model the ends, but it’s not too bad in the case

of a definite length L. We’ll address this in two ways:

•

Primarily by simulation in Python

•

But also have a go at convincing yourself:

L

1=

𝑃(𝑥) =

{𝑥}

…

𝑥1

𝑥2

𝑃(𝑥1)

𝑥𝐿

Pa

i=2

xi-1-xi

The “Occasionally Dishonest Casino”

Consider a casino that uses two dice, one fair, one loaded.

Once in a while, they sneakily switch the dice…

You watch for awhile and observe the

following sequence of rolls…..

1 2 2 6 4 3 2 1 5 6 6 6 5 1 6 2 6

Would you know which die the casino was using?

Would you know if they switched at some point?

Your first problem……

The fair and cheat die have the SAME observables!!

DNA and Amino Acids are like our dice

We see the same symbols regardless of any functional association!

QATNRNTDGS

RWWCNDGRTP

SALLSSDITA

DGNGMNAWVA

TDYGILQINS

GSRNLCNIPC

SVNCAKKIVS

WRNRCKGTDQ

Can we tell by casual examination which segment of

this amino acid sequence corresponds to a transmembrane domain and which does not?

The amino acids are not labelled

Hidden Markov Models

What does Hidden refer to?

A

C

G

T

In our simple Markov model, we cannot say anything about

functional associations based on observed symbols..

Transition probabilities don’t reflect the underlying state of the system

Hidden Markov Models

Can we alter our model to accommodate alternative states for each observable?

C-

G-

A-

T-

A+

T+

Figure after Durbin, et al.

C+

G+

The complete set of “within set” transitions are omitted here for clarity. It’s still yucky!

Hidden Markov Models

The clearest representation is to separate transitions from emissions of symbols

A: 0.30

0.9

C: 0.25

A: 0.20

0.1

C: 0.35

0.99

G: 0.15

0.01

G: 0.25

T: 0.30

T: 0.20

State “+”

State “-”

Each state now has its own table of emission probabilities

and transitions now occur strictly between states

Hidden Markov Models

We now must incorporate the concept of a

state path

1 2 2 6 4 3 2 1 5 6 6 6 5 1 6 2 6

F F F F F F F F L L L L L L L F F

A A T A C A C G G C T A G C T A A

- - - - - - - + + + + + + + + - Often, the true state path (often denoted p ) associated

with sequences of interest will be hidden from us

Three Classic Problems of HMMs

We will study algorithms that address each of these problems

1. The learning problem

•

Given a model and some observed sequences, how do

we rationally choose or adjust (i.e. estimate) the model

parameters?

2. The evaluation problem

•

Given some HMM, and some observed sequences, what

is the probability that our model could have produced

those sequences? i.e. what is Pr{observation X | θ }

3. The decoding problem

•

Given some HMM and some observed sequences,

what state path through the model was most likely to

have produced those observations?

The Evaluation Problem

With HMMs, if we forget about the symbols that are emitted, the state

path still forms a Markov chain and the Markov property applies

The transition probability akl from state k to state l…

akl = P(pi = l | pi-1 = k)

…is equal to the probability that the ith state was l..

given that

… the immediately proceeding state (pi-1) was k

This statement of conditional probability is in exactly the same

form as was shown in our first Markov chain example

The Evaluation Problem

What about the emission probabilities ?

The probability of emitting symbol b when in state k

ek(b) = P(xi = b | pi = k)

…is equal to the probability that the ith symbol is b..

given that

… the current state (xi) is k

The probability that a particular symbol will be emitted is now

dependent on what state we are currently in! In other words, emission

probabilities are conditional on state.

Hidden Markov Models

Imagine we run this model generatively

A: 0.30

0.1

0.9

A: 0.20

S

0.9

C: 0.25

G: 0.15

T: 0.30

State “+”

C: 0.35

0.99

0.1

G: 0.25

0.01

T: 0.20

State “-”

What general sequence of events would occur?

The Evaluation Problem

Putting together the state transitions and the symbol emissions

The joint probability of some observed sequence x and

some state sequence p

P

L

P(x,p) = aS → p1

epi(xi) api →pi+1

i=1

…is equal to the probability of transitioning from Start to the first state..

..times a running product across all positions in the sequence of…

… the state specific emission probability times the probability of

transitioning to the next state

What we are really interested in knowing is the joint probability of the

sequence and the state path. But what if the state path is hidden?

The Evaluation Problem

Scoring with known state sequence….

S

0.10

0.10

A: 0.30

0.90

C: 0.35

G: 0.15

G: 0.25

T: 0.30

T: 0.20

0.01

Sequence under test

A: 0.20

C: 0.25

State “+”

0.9

0.90

_ACGCT

0.99

S---++

State “-”

0.99

0.99

0.01

0.9

*

*

*

*

0.2

0.35

0.25

0.25

= 1.042x10-5

0.3

0.9 * 0.198 * 0.3465 *0.0025 * 0.225 * 0.3

already a small

number!

This is the procedure when we have a known state sequence – but

We’ll

this last

another

day…

how could

werevisit

approach

this question

if the state

path was

unknown??

Alternative python transition dicts

Which reflects a uniform distribution?

self.transitions = {

"A": {

"A": 0.25,

"C": 0.25,

"G": 0.25,

"T": 0.25,

},

"C": {

"A": 0.25,

"C": 0.25,

"G": 0.25,

"T": 0.25,

},

"G": {

"A": 0.25,

"C": 0.25,

"G": 0.25,

"T": 0.25,

},

"T": {

"A": 0.25,

"C": 0.25,

"G": 0.25,

"T": 0.25,

},

}

self.transitions = {

"A": {

"A": 0.3,

"C": 0.2,

"G": 0.2,

"T": 0.3,

},

"C": {

"A": 0.4,

"C": 0.2,

"G": 0.25,

"T": 0.15,

},

"G": {

"A": 0.2,

"C": 0.2,

"G": 0.3,

"T": 0.3,

},

"T": {

"A": 0.4,

"C": 0.15,

"G": 0.25,

"T": 0.2,

},

}

I’ll send you some test sequences, and your program will tell me which

of these models was more likely to have produced the sequence!

Our python HMM data structures

Here are distributions for a dishonest casino…

self.emissions = {

self.transitions = {

"S":

{

"F": 0.5,

"L": 0.5,

},

"F":

{

"F": 0.95,

"L": 0.05,

},

"L":

{

"L": 0.90,

"F": 0.10,

}

}

"S":

# “S” is start

{

"": 1

# always emit the null string!

},

"F":

# 'F' indicates a fair die

{

"1": 1 / 6,

"2": 1 / 6,

"3": 1 / 6,

"4": 1 / 6,

"5": 1 / 6,

"6": 1 / 6

},

"L":

# 'L' indicates a loaded die

{

"1": 1 / 10,

"2": 1 / 10,

"3": 1 / 10,

"4": 1 / 10,

"5": 1 / 10,

"6": 1 / 2

}

}

Let’s adapt your simple Markov program to first work with our dice example…..