Approaches to prokaryotic gene finding

advertisement

Prokaryotic Gene Structure

Prokaryotic genes have a simple one-dimensional structure

5’→

5’→

←5’

←5’

ATG AAA ATG GCA . . . GCA TTG CTA TAG

Start

codon

Stop

codon

Note that the ATG codon encodes both start and methionine

Prokaryotic Gene Structure

Prokaryotic gene prediction begins with ORF finding

ATG AAA GCA ATG . . . GCA TTG CTA TAG

Possible

start

Alternate

start

Stop

codon

Because of the possibility of alternate start

sites, it’s not unusual for several ORFs to share

a common stop codon

An ORF finder needs to be able to find overlapping ORFs, whether they

end with the same stop codon, or overlap in a different frame

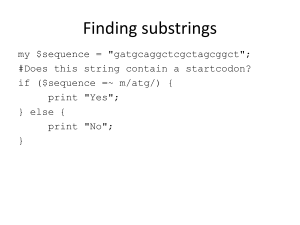

Prokaryotic Gene Structure

Prokaryotic gene prediction begins with ORF finding

'ATG(...)*?(TAA|TAG|TGA)'

A regular expression crafted to find ORFs

must also exhibit “non-greedy” behaviour

Note that many bacteria also employ rarer alternate start codons, most

commonly GTG and TTG. But we’ll pretend this doesn’t happen!

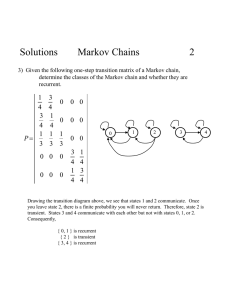

Higher Order Markov Chains

We don’t need to always just consider the most recent state

An nth order Markov process is a

stochastic process where the probabilities

associated with an event depend on the

previous n events in the state path

𝑷 𝒙𝒊 𝒙𝒊−𝟏 , 𝒙𝒊−𝟐 , … , 𝒙𝟏 = 𝑷(𝒙𝒊 |𝒙𝒊−𝟏 , … , 𝒙𝒊−𝒏 )

So far all the Markov models we have seen so far have been of order 1

In the case of a first order process this statement

reduces to our statement of the Markov property

Higher Order Markov Chains

Higher order models have an equivalent first order model

An nth order Markov chain over alphabet A

is equivalent to a first order Markov chain

over the alphabet An of n-tuples…

𝑷(𝒙𝒊 |𝒙𝒊−𝟏 , … , 𝒙𝒊−𝒏 ) =

𝑷 𝒙𝒊 , 𝒙𝒊−𝟏 , … , 𝒙𝒊−𝒏+𝟏 𝒙𝒊−𝟏 , … , 𝒙𝒊−𝒏

Practically, this says we can implement a higher order model just by

expanding the alphabet size of a first order model

This follows trivially from P(X,Y|Y) = P(X|Y)

Higher Order Markov Chains

Consider this first order Markov process

A = {A, B}

A

e

S

B

Here the alphabet A (our set of states)

consists of just A and B

How would we convert this to a second order Markov process?

Higher Order Markov Chains

Now reconfigured as a second order model

AA

AB

BA

BB

A = {AA, AB,

BA, BB}

Note how we have disallowed certain transitions (i.e. set

their probability to zero). Start and End omitted for clarity

Inhomogeneous Markov Chains

A Markov model of genes should model codon statistics

ATG AAA GCA GTC

1

2

3

In true coding genes, each of the three positions

within a codon will be statistically distinct

This can be accomplished in a few different ways…

Inhomogeneous Markov Chains

A Markov model of genes should model codon statistics

ATG AAA GCA GTC

1

2

3

𝟏

𝟐

𝟑

𝟏

𝟐

𝟑

𝒂𝒙𝟏 𝒙𝟐 𝒂𝒙𝟐 𝒙𝟑 𝒂𝒙𝟑 𝒙𝟒 𝒂𝒙𝟒 𝒙𝟓 𝒂𝒙𝟓 𝒙𝟔 𝒂𝒙𝟔 𝒙𝟕

...

One idea is to intersperse three different Markov chains in alternating fashion

This can also be recast as an HMM with additional states in obvious way

Histograms with matplot lib

We’ll use this to look at log-odds per NT distributions

import numpy as np

import pylab as P

. . .

# probs here should be your list of probabilities

# 50 here corresponds to the number of desired

n, bins, patches = P.hist(probs, 50, normed=1, histtype='stepfilled')

P.setp(patches, 'facecolor', 'g', 'alpha', 0.75)

P.show()

More histogram examples may be found at:

matplotlib.org/examples/pylab_examples/histogram_demo_extended.html