naive Bayes


Why does it work?

We have not yet addressed why this classifier performs well, given that its assumptions are unlikely to be satisfied.

The linear form of the classifier provides some hints.

Bayesian Learning

CS446 -FALL ‘14

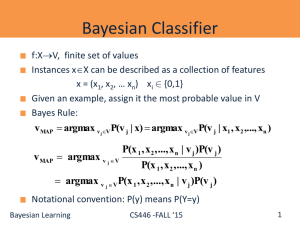

Naïve Bayes: Two Classes

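•In the case of two classes we have that:

$$\log \frac{P(v_j = 1 \mid x)}{P(v_j = 0 \mid x)} = \sum_i w_i x_i + b \qquad (1)$$

•but since

$$P(v_j = 1 \mid x) = 1 - P(v_j = 0 \mid x) \qquad (2)$$

•We get (plug in (2) in (1); some algebra):

$$P(v_j = 1 \mid x) = \frac{1}{1 + \exp\left(-\left(\sum_i w_i x_i + b\right)\right)}$$

The algebra: let $A = P(v_j = 1 \mid x)$, $B = P(v_j = 0 \mid x)$ and $C = \sum_i w_i x_i + b$. We have $A = 1 - B$ and $\log(B/A) = -C$. Then $\exp(-C) = B/A = (1 - A)/A = 1/A - 1$, so $1 + \exp(-C) = 1/A$ and $A = 1/(1 + \exp(-C))$.

•Which is simply the logistic (sigmoid) function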
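The step from linear log-odds to the sigmoid can be checked numerically. The following is a minimal sketch; the weights, bias, and input are hypothetical values chosen only for illustration, and the bias is assumed to enter with a plus sign.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def posterior_from_log_odds(w, x, b):
    """P(v=1 | x) when log[P(v=1|x) / P(v=0|x)] = sum_i w_i x_i + b."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

# Hypothetical weights and input, for illustration only.
w, b = [1.5, -2.0, 0.5], 0.25
x = [1, 0, 1]

p1 = posterior_from_log_odds(w, x, b)
p0 = 1.0 - p1
# Taking the log-odds of the result recovers the linear activation.
log_odds = math.log(p1 / p0)
```

Here the activation is 1.5 + 0.5 + 0.25 = 2.25, and `log_odds` matches it up to floating-point error.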


Another look at Naive Bayes

Graphical model. It encodes the NB independence assumption in the edge structure (siblings are independent given parents).

[Figure: graphical model with the label node l (prior Pr(l)) as the parent of the feature nodes χ_1, χ_2, ..., χ_n, with conditionals Pr(χ_1 | l), Pr(χ_2 | l), ..., Pr(χ_n | l); the features are defined over the inputs x_1, ..., x_n.]

$$c[\chi_0, l] = \log \hat{\Pr}_D[\chi_0, l] = \log \frac{|\{(x, j) \mid j = l\}|}{|S|}$$

$$c[\chi_i, l] = \log \frac{\hat{\Pr}_D[\chi_i, l]}{\hat{\Pr}_D[\chi_0, l]} = \log \frac{|\{(x, j) \mid \chi_i(x) = 1 \wedge j = l\}|}{|\{(x, j) \mid j = l\}|}$$

$$\text{prediction}(x) = \arg\max_{l \in \{0,1\}} \sum_{i=0}^{n} c[\chi_i, l]\, \chi_i(x)$$

Linear Statistical Queries Model (Roth’99)

Note this is a bit different than the previous linearization. Rather than a single function, here we have argmax over several different functions.
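The count-based coefficients and the argmax prediction can be sketched in a few lines. This is only an illustration under stated assumptions: the features are taken to be the raw binary attributes, feature 0 is the always-active prior feature, and add-alpha smoothing (not on the slide) is added to avoid log(0). The names `train_lsq_nb` and `predict` and the toy sample are hypothetical.

```python
import math
from collections import Counter

def train_lsq_nb(samples, n_features, labels=(0, 1), alpha=1.0):
    """Return coefficients c[(i, l)]; i = 0 is the always-active prior feature.

    samples: list of (x, label) pairs, x a tuple of 0/1 feature values.
    alpha: add-alpha smoothing (an assumption, to avoid log(0)).
    """
    label_count = Counter(l for _, l in samples)
    active = Counter()
    for x, l in samples:
        for i, xi in enumerate(x, start=1):
            if xi == 1:
                active[(i, l)] += 1
    c = {}
    for l in labels:
        # c[0, l] = log of the (smoothed) fraction of examples labeled l
        c[(0, l)] = math.log((label_count[l] + alpha)
                             / (len(samples) + alpha * len(labels)))
        for i in range(1, n_features + 1):
            # c[i, l] = log of the (smoothed) fraction of l-examples with feature i on
            c[(i, l)] = math.log((active[(i, l)] + alpha)
                                 / (label_count[l] + 2 * alpha))
    return c

def predict(c, x, labels=(0, 1)):
    """argmax over labels of the sum of coefficients of the active features."""
    def score(l):
        return c[(0, l)] + sum(c[(i, l)]
                               for i, xi in enumerate(x, start=1) if xi == 1)
    return max(labels, key=score)

# Hypothetical toy sample: feature 1 is typical of label 1, feature 2 of label 0.
samples = [((1, 0), 1), ((1, 1), 1), ((1, 0), 1),
           ((0, 1), 0), ((0, 1), 0), ((0, 0), 0)]
c = train_lsq_nb(samples, n_features=2)
```

Each label gets its own linear function of the active features, and the prediction is the label whose function scores highest, which is the "argmax over several different functions" reading.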

Another view of Markov Models

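Input:

$$x = ((w_1 : t_1), \ldots, (w_{i-1} : t_{i-1}), (w_i : ?), (w_{i+1} : t_{i+1}), \ldots)$$

States: T

Observations: W

[Figure: chain of states t_{i-1}, t, t_{i+1}, each emitting its observation w_{i-1}, w_i, w_{i+1}.]

Assumptions:

$$\Pr(w_i \mid t_i) = \Pr(w_i \mid x)$$

$$\Pr(t_{i+1} \mid t_i) = \Pr(t_{i+1} \mid t_i, t_{i-1}, \ldots, t_1)$$

Prediction: predict $t \in T$ that maximizes

$$\Pr(t \mid t_{i-1})\, \Pr(w_i \mid t)\, \Pr(t_{i+1} \mid t)$$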
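The local prediction rule can be sketched as a direct argmax over candidate states. The probability tables below are hypothetical numbers invented for illustration, and `predict_tag` is an assumed helper name; missing table entries are treated as probability 0.

```python
def predict_tag(prev_tag, word, next_tag, tags, trans, emit):
    """Return argmax_t Pr(t | prev_tag) * Pr(word | t) * Pr(next_tag | t).

    trans[(a, b)] holds Pr(b | a); emit[(t, w)] holds Pr(w | t).
    Missing entries are treated as probability 0.
    """
    def score(t):
        return (trans.get((prev_tag, t), 0.0)
                * emit.get((t, word), 0.0)
                * trans.get((t, next_tag), 0.0))
    return max(tags, key=score)

# Hypothetical tables, for illustration only.
tags = ["DET", "NOUN", "VERB"]
trans = {
    ("DET", "NOUN"): 0.9, ("DET", "VERB"): 0.1,
    ("NOUN", "VERB"): 0.7, ("NOUN", "NOUN"): 0.3,
    ("VERB", "VERB"): 0.2, ("VERB", "NOUN"): 0.8,
}
emit = {("NOUN", "run"): 0.01, ("VERB", "run"): 0.05}

best = predict_tag("DET", "run", "VERB", tags, trans, emit)
```

With these numbers NOUN scores 0.9 × 0.01 × 0.7 and VERB scores 0.1 × 0.05 × 0.2, so the neighboring states outweigh the higher emission probability of VERB.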


Another View of Markov Models

Input:

$$x = ((w_1 : t_1), \ldots, (w_{i-1} : t_{i-1}), (w_i : ?), (w_{i+1} : t_{i+1}), \ldots)$$

States: T

Observations: W

[Figure: chain of states t_{i-1}, t, t_{i+1}, each emitting its observation w_{i-1}, w_i, w_{i+1}.]

As for NB: features are pairs and singletons of t’s, w’s

$$c[t_1, t_2] = \log \hat{\Pr}_D[t_2 \mid t_1] = \log \frac{|\{(t, t') \mid t = t_1 \wedge t' = t_2\}|}{|\{(t, t') \mid t = t_1\}|}$$

Otherwise, $c[\chi, t] = 0$

HMM is a linear model (over pairs of states and states/obs)

$$\text{prediction}(x) = \arg\max_{t \in T} \sum_i c[\chi_i, t]\, \chi_i(x)$$

Only 3 active features

This can be extended to an argmax that maximizes the prediction of

the whole state sequence and computed, as before, via Viterbi.


Learning with Probabilistic Classifiers

Learning Theory

$$\mathrm{Err}_S(h) = \frac{|\{x \in S \mid h(x) \neq l\}|}{|S|}$$

We showed that probabilistic predictions can be viewed as predictions via Linear Statistical Queries Models (Roth’99).

The low expressivity explains Generalization+Robustness

Is that all?

It does not explain why it is possible to (approximately) fit the data with these models. Namely, is there a reason to believe that these hypotheses minimize the empirical error on the sample?

In general, no (unless the model corresponds to some probabilistic assumptions that hold).


Example: probabilistic classifiers

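If hypothesis does not fit the training data - augment set of features (forget assumptions)

[Figure: the naïve Bayes graphical model (label l with prior Pr(l); features χ_1, ..., χ_n with conditionals Pr(χ_i | l); inputs x_1, ..., x_n), drawn with additional features over the inputs.]

[Figure: the Markov model chain (states T: t_{i-1}, t, t_{i+1}; observations W: w_{i-1}, w_i, w_{i+1}).]

Features are pairs and singletons of t’s, w’s

Additional features are included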


Learning Protocol: Practice

LSQ hypotheses are computed directly:

Choose features

Compute coefficients (NB way, HMM way, etc.)

If hypothesis does not fit the training data

Augment set of features

(Assumptions will not be satisfied)

But now, you actually follow the Learning Theory Protocol:

Try to learn a hypothesis that is consistent with the data

Generalization will be a function of the low expressivity


Robustness of Probabilistic Predictors

Remaining Question: While low expressivity explains generalization, why is it relatively easy to fit the data?

Consider all distributions with the same marginals $\Pr(\chi_i \mid l)$

(That is, a naïve Bayes classifier will predict the same regardless of which distribution really generated the data.)

Garg&Roth (ECML’01):

Product distributions are “dense” in the space of all distributions.

Consequently, for most generating distributions the resulting predictor’s error is close to that of the optimal classifier (that is, the one given the correct distribution).


Summary: Probabilistic Modeling

Classifiers derived from probability density estimation models were viewed as LSQ hypotheses.

$$\sum_i c_i\, \chi_i(x) = \sum_i c_i\, x_{i_1} x_{i_2} \cdots x_{i_k}$$

Probabilistic assumptions:

+ Guiding feature selection but also

- Not allowing the use of more general features.

A unified approach: a lot of classifiers, probabilistic and others, can be viewed as linear classifiers over an appropriate feature space.


What’s Next?

(1) If probabilistic hypotheses are actually like other linear

functions, can we interpret the outcome of other linear learning

algorithms probabilistically?

Yes

(2) If probabilistic hypotheses are actually like other linear

functions, can you actually train them similarly (that is,

discriminatively)?

Yes.

Classification: Logistic regression/Max Entropy

HMM: can be learned as a linear model, e.g., with a version of

Perceptron (Structured Models class)


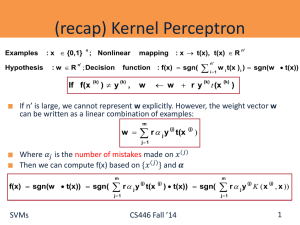

Recall: Naïve Bayes, Two Classes

In the case of two classes we have:

$$\log \frac{P(v_j = 1 \mid x)}{P(v_j = 0 \mid x)} = \sum_i w_i x_i + b \qquad (1)$$

but since

$$P(v_j = 1 \mid x) = 1 - P(v_j = 0 \mid x) \qquad (2)$$

We get (plug in (2) in (1); some algebra):

$$P(v_j = 1 \mid x) = \frac{1}{1 + \exp\left(-\left(\sum_i w_i x_i + b\right)\right)}$$

Which is simply the logistic (sigmoid) function used in the neural network representation.


Conditional Probabilities

(1) If probabilistic hypotheses are actually like other linear

functions, can we interpret the outcome of other linear

learning algorithms probabilistically?

Yes

General recipe

Train a classifier f using your favorite algorithm (Perceptron, SVM, Winnow, etc). Then:

Use the sigmoid $1/(1 + \exp\{-(A\, w^T x + B)\})$ to get an estimate for P(y | x)

A, B can be tuned using a held-out set that was not used for training.

Done in LBJava, for example
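One way to tune A and B is to minimize the log-loss of the sigmoid on the held-out activations. The sketch below uses plain gradient descent, which is an assumption (the slides do not say how the tuning is done, and this is not LBJava's implementation); `fit_sigmoid`, the learning rate, and the toy held-out set are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_sigmoid(activations, labels, lr=0.1, epochs=2000):
    """Fit A, B in P(y=1 | x) = sigmoid(A * act + B), act = w.x of a trained classifier.

    Minimizes the mean log-loss on held-out data by gradient descent.
    labels are 0/1.
    """
    A, B = 1.0, 0.0
    n = len(activations)
    for _ in range(epochs):
        grad_A = grad_B = 0.0
        for act, y in zip(activations, labels):
            err = sigmoid(A * act + B) - y  # derivative of log-loss wrt (A*act + B)
            grad_A += err * act
            grad_B += err
        A -= lr * grad_A / n
        B -= lr * grad_B / n
    return A, B

# Hypothetical held-out activations and labels (not perfectly separable).
held_out_act = [-3.0, -2.0, -1.0, -0.5, 0.5, 1.0, 2.0, 3.0]
held_out_lab = [0, 0, 0, 1, 0, 1, 1, 1]
A, B = fit_sigmoid(held_out_act, held_out_lab)
p_high = sigmoid(A * 2.0 + B)   # estimate for an example with activation 2.0
p_low = sigmoid(A * -2.0 + B)   # estimate for an example with activation -2.0
```

Fitting on data that was not used to train the classifier matters because training-set activations tend to be more confidently separated than activations on new examples.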


Logistic Regression

(2) If probabilistic hypotheses are actually like other linear

functions, can you actually train them similarly (that is,

discriminatively)?

The logistic regression model assumes the following model:

$$P(y = \pm 1 \mid x, w) = [1 + \exp(-y(w^T x + b))]^{-1}$$

This is the same model we derived for naïve Bayes, only that now we will not make any independence assumption. We will directly find the best w.

Therefore training will be more difficult. However, the weight vector derived will be more expressive.

It can be shown that the naïve Bayes algorithm cannot represent all linear threshold functions.

On the other hand, NB converges to its performance faster.

How?


Logistic Regression (2)

Given the model:

$$P(y = \pm 1 \mid x, w) = [1 + \exp(-y(w^T x + b))]^{-1}$$

The goal is to find the (w, b) that maximizes the log likelihood of the data: $\{x_1, x_2, \ldots, x_m\}$.

Equivalently, we are looking for the (w, b) that minimizes the negative log-likelihood

$$\min_{w,b} \sum_{i=1}^{m} -\log P(y_i \mid x_i, w) = \min_{w,b} \sum_{i=1}^{m} \log[1 + \exp(-y_i(w^T x_i + b))]$$

This optimization problem is called Logistic Regression

Logistic Regression is sometimes called the Maximum Entropy model in the

NLP community (since the resulting distribution is the one that has the

largest entropy among all those that activate the same features).
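The negative log-likelihood being minimized can be computed directly from the model. This is a minimal sketch with labels in {-1, +1}; `log1p_exp` is a hypothetical helper for a numerically stable log(1 + exp(z)), and the three data points are invented for illustration.

```python
import math

def log1p_exp(z):
    """Stable log(1 + exp(z)) that avoids overflow for large z."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))

def prob(y, x, w, b):
    """P(y | x, w) = 1 / (1 + exp(-y (w.x + b))), with y in {-1, +1}."""
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    return 1.0 / (1.0 + math.exp(-margin))

def neg_log_likelihood(w, b, data):
    """sum_i log(1 + exp(-y_i (w.x_i + b)))."""
    total = 0.0
    for x, y in data:
        margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
        total += log1p_exp(-margin)
    return total

# Hypothetical data, for illustration only.
data = [((1.0, 2.0), 1), ((-1.0, 0.5), -1), ((0.5, -1.0), 1)]
nll_zero = neg_log_likelihood([0.0, 0.0], 0.0, data)
nll_w = neg_log_likelihood([0.4, 0.1], -0.2, data)
nll_from_probs = -sum(math.log(prob(y, x, [0.4, 0.1], -0.2)) for x, y in data)
```

At w = 0, b = 0 every example has probability 1/2, so the value is m · log 2; the two ways of computing the loss at a nonzero w agree.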


Logistic Regression (3)

Using the standard mapping to linear separators through the origin, we would like to minimize:

$$\min_{w} \sum_{i=1}^{m} -\log P(y_i \mid x_i, w) = \min_{w} \sum_{i=1}^{m} \log[1 + \exp(-y_i w^T x_i)]$$

To get good generalization, it is common to add a regularization term, and the regularized logistic regression then becomes:

$$\min_{w} f(w) = \tfrac{1}{2} w^T w + C \sum_{i=1}^{m} \log[1 + \exp(-y_i w^T x_i)]$$

The first term is the regularization term and the second is the empirical loss. C is a user-selected parameter that balances the two terms.
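The regularized objective and its gradient are short enough to write out. The sketch below minimizes f(w) with plain batch gradient descent, which is an illustrative choice rather than the method the slides recommend; the function names, step size, and toy data are assumptions.

```python
import math

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def objective(w, data, C):
    """f(w) = 1/2 w.w + C * sum_i log(1 + exp(-y_i w.x_i)), y_i in {-1, +1}."""
    reg = 0.5 * dot(w, w)
    loss = sum(math.log1p(math.exp(-y * dot(w, x))) for x, y in data)
    return reg + C * loss

def gradient(w, data, C):
    """Gradient of f: w - C * sum_i y_i x_i / (1 + exp(y_i w.x_i))."""
    g = list(w)  # gradient of the regularization term is w itself
    for x, y in data:
        scale = C * y / (1.0 + math.exp(y * dot(w, x)))
        for j, xj in enumerate(x):
            g[j] -= scale * xj
    return g

def gradient_descent(data, C, dim, lr=0.05, steps=500):
    """Fixed-step batch gradient descent starting from w = 0."""
    w = [0.0] * dim
    for _ in range(steps):
        g = gradient(w, data, C)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w

# Hypothetical toy data (small margins, so exp() does not overflow here).
data = [((1.0, 2.0), 1), ((2.0, 0.5), 1), ((-1.0, -1.5), -1), ((-2.0, 0.0), -1)]
w_star = gradient_descent(data, C=1.0, dim=2)
```

A larger C puts more weight on fitting the sample, while a smaller C pulls w toward zero.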


Comments on discriminative Learning

$$\min_{w} f(w) = \tfrac{1}{2} w^T w + C \sum_{i=1}^{m} \log[1 + \exp(-y_i w^T x_i)]$$

The first term is the regularization term and the second is the empirical loss. C is a user-selected parameter that balances the two terms.

Since the second term is the loss function, regularized logistic regression can be related to other learning methods, e.g., SVMs.

L1 SVM solves the following optimization problem:

$$\min_{w} f_1(w) = \tfrac{1}{2} w^T w + C \sum_{i=1}^{m} \max(0, 1 - y_i w^T x_i)$$

L2 SVM solves the following optimization problem:

$$\min_{w} f_2(w) = \tfrac{1}{2} w^T w + C \sum_{i=1}^{m} \left(\max(0, 1 - y_i w^T x_i)\right)^2$$
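The three objectives differ only in the per-example loss applied to the margin $y_i w^T x_i$. A small sketch makes the comparison concrete; the function names are chosen here for illustration.

```python
import math

def logistic_loss(margin):
    """log(1 + exp(-margin)): the logistic regression loss."""
    return math.log1p(math.exp(-margin))

def hinge_loss(margin):
    """max(0, 1 - margin): the L1 SVM loss."""
    return max(0.0, 1.0 - margin)

def squared_hinge_loss(margin):
    """max(0, 1 - margin)^2: the L2 SVM loss."""
    return max(0.0, 1.0 - margin) ** 2

# Evaluate all three losses at a few margins.
table = [(m, logistic_loss(m), hinge_loss(m), squared_hinge_loss(m))
         for m in (-1.0, 0.0, 1.0, 2.0)]
```

Both hinge losses are exactly zero once the margin reaches 1, whereas the logistic loss stays positive for every margin and only decays toward zero.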


Optimization: How to Solve

All methods are iterative methods that generate a sequence $w_k$ that converges to the optimal solution of the optimization problem above.

Many options within this category:

Iterative scaling: Low cost per iteration, slow convergence, updates one component of w at a time

Newton methods: High cost per iteration, faster convergence

Non-linear conjugate gradient; quasi-Newton methods; truncated Newton methods; trust-region Newton method.

Currently: Limited memory BFGS is very popular

Stochastic Gradient Descent methods

The runtime does not depend on n=#(examples); advantage when n is very large.

The stopping criterion is a problem: the method tends to be too aggressive at the beginning and reaches a moderate accuracy quite fast, but its convergence becomes slow if we are interested in more accurate solutions.
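A stochastic gradient method for regularized logistic regression can be sketched as follows. The assumptions are spelled out: the objective is divided by C·m (which leaves the minimizer unchanged), one example is sampled per update, the step size decays as eta0 / (1 + eta0·lam·t), and a fixed number of epochs stands in for a stopping criterion. The function name, hyperparameters, and toy data are hypothetical.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def sgd_logistic(data, C=1.0, epochs=50, eta0=0.5, seed=0):
    """SGD on (lam/2) w.w + mean_i log(1 + exp(-y_i w.x_i)), with lam = 1/(C m).

    Each update touches a single example, so its cost is independent of m.
    """
    rng = random.Random(seed)
    m, dim = len(data), len(data[0][0])
    lam = 1.0 / (C * m)
    w = [0.0] * dim
    t = 0
    for _ in range(epochs):
        for _ in range(m):
            x, y = data[rng.randrange(m)]
            eta = eta0 / (1.0 + eta0 * lam * t)  # decaying step size (assumption)
            margin = y * sum(wi * xi for wi, xi in zip(w, x))
            coeff = y * sigmoid(-margin)         # minus the loss gradient is coeff * x
            w = [(1.0 - eta * lam) * wi + eta * coeff * xi
                 for wi, xi in zip(w, x)]
            t += 1
    return w

# Hypothetical separable data; the last coordinate is a constant bias feature.
data = [((2.0, 1.0, 1.0), 1), ((3.0, 2.0, 1.0), 1), ((2.5, 1.5, 1.0), 1),
        ((-2.0, -1.0, 1.0), -1), ((-3.0, -2.0, 1.0), -1), ((-2.5, -1.5, 1.0), -1)]
w = sgd_logistic(data)
accuracy = sum(1 for x, y in data
               if y * sum(wi * xi for wi, xi in zip(w, x)) > 0) / len(data)
```

Because only the number of epochs is fixed, the result is an approximate minimizer, in line with the remark that SGD reaches moderate accuracy quickly but refines slowly.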


Summary

(1) If probabilistic hypotheses are actually like other linear

functions, can we interpret the outcome of other linear

learning algorithms probabilistically?

Yes

(2) If probabilistic hypotheses are actually like other linear

functions, can you actually train them similarly (that is,

discriminatively)?

Yes.

Classification: Logistic regression/Max Entropy

HMM: can be trained via Perceptron (Structured Learning Class: Spring

2016)


Conditional Probabilities

Data: Two class (Open/NotOpen Classifier)

The plot shows a (normalized) histogram of examples as a function of the dot product act = $(w^T x + b)$ and a couple of other functions of it.

In particular, we plot the positive sigmoid:

$$P(y = +1 \mid x, w) = [1 + \exp(-(w^T x + b))]^{-1}$$

[Figure: normalized histogram of examples; horizontal axis from 0 to 1, vertical axis from 0 to 0.1; series: act, sigmoid(act), e^act.]

Is this really a probability distribution?


Conditional Probabilities

[Figure: “Mapping Classifier's activation to Conditional Probability”; both axes run from 0 to 1; series: act, sigmoid(act), e^act. Plotted for SNoW (Winnow). Similarly, perceptron; more tuning is required for SVMs.]

Plotting: For example z: y = Prob(label=1 | f(z)=x)

(Histogram: for 0.8, # (of examples with f(z)<0.8))

Claim: Yes; if Prob(label=1 | f(z)=x) = x, then f(z) is a probability distribution.

That is, yes, if the graph is linear.

Theorem: Let X be a RV with distribution F.

(1) F(X) is uniformly distributed in (0,1).

(2) If U is uniform(0,1), $F^{-1}(U)$ is distributed F, where $F^{-1}(x)$ is the value of y s.t. F(y) = x.

Alternatively:

f(z) is a probability if: $\mathrm{Prob}_U\{z \mid \mathrm{Prob}[f(z) = 1] \le y\} = y$
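The theorem can be illustrated by simulation. The sketch below assumes an Exponential(1) distribution, chosen only because its CDF F(x) = 1 - exp(-x) has a closed-form inverse; the sample size and seed are arbitrary.

```python
import math
import random

def exp_cdf(x):
    """CDF F(x) = 1 - exp(-x) of the Exponential(1) distribution, x >= 0."""
    return 1.0 - math.exp(-x)

def exp_inv_cdf(u):
    """Inverse CDF: the y such that F(y) = u, for 0 <= u < 1."""
    return -math.log(1.0 - u)

rng = random.Random(0)
us = [rng.random() for _ in range(20000)]   # U ~ uniform(0, 1)
xs = [exp_inv_cdf(u) for u in us]           # part (2): F^{-1}(U) is distributed F

# Exponential(1) has mean 1, so the sample mean of xs should be close to 1.
sample_mean = sum(xs) / len(xs)

# Part (1): F(X) is uniform, so about 30% of the values F(x) fall below 0.3.
frac_below = sum(1 for x in xs if exp_cdf(x) <= 0.3) / len(xs)
```

The same check applied to a classifier's outputs is what the plot expresses: if the outputs behave like probabilities, the fraction of examples below a threshold tracks the threshold itself.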