Lecture 06, 25 February 2014

The Traveling Salesman Problem

in Theory & Practice

Lecture 6: Exploiting Geometry

25 February 2014

David S. Johnson

dstiflerj@gmail.com

http://davidsjohnson.net

Seeley Mudd 523, Tuesdays and Fridays

Outline

1. The k-d tree data structure

2. Exploiting k-d trees to speed up geometric tour construction heuristics

K-d trees and the TSP

References

• J.L. Bentley, “Multidimensional binary search trees used for associative searching,” Comm. ACM 18 (1975), 509-517.

• J.H. Friedman, J.L. Bentley, R.A. Finkel, “An algorithm for finding best matches in logarithmic expected time,” ACM Trans. Math. Software 3 (1977), 209-226.

• J.L. Bentley, B.W. Weide, A.C. Yao, “Optimal expected-time algorithms for closest point problems,” ACM Trans. Math. Software 6 (1980), 563-580.

• J.L. Bentley, “K-d trees for semidynamic point sets,” Proc. 6th Ann. ACM Symp. on Computational Geometry, ACM, 1990, 187-197.

• J.L. Bentley, “Experiments on traveling salesman heuristics,” Proc. 1st Ann. ACM-SIAM Symp. on Discrete Algorithms, 1990, 91-99.

• J.L. Bentley, “Fast algorithms for geometric traveling salesman problems,” ORSA J. Comput. 4 (1992), 387-411.

K-d trees [Bentley, 1975]

(Based on hierarchical partitioning, splitting at medians)

Stop partitioning if the box contains k or fewer cities (a typical k might be 5 or 8)

Data Elements

Array T: Permutation of Points derived from the partitioning

Array D: Array of Tree Vertices

Array H: H[i] = index of tree leaf vertex containing Point i

Point Structure:

• Index of Point

• X-coord

• Y-coord

• Deleted?

Tree Vertex Structure:

• Index L in Array T of First Point

• Index U in Array T of Last Point

• Widest Dimension

• Median Value in Widest Dimension

• Leaf?

Implicit for Tree Vertex D[k]:

• Index of Median = ⎣(L+U)/2⎦

• Index of Parent = ⎣k/2⎦

• Index of Lower Child = 2k

• Index of Higher Child = 2k+1

Example:

Tree Vertex D[1]: L=1, U=N, horizontal, 0.48, Non-Leaf

Tree Vertex D[2]: L=1, U=m, vertical, 0.71, Non-Leaf

Tree Vertex D[3]: L=m+1, U=N, vertical, 0.60, Non-Leaf

(Here m is the index of the median for D[1], the mth vertex from the left.)

Initially, T contains all the cities, in arbitrary order. When a tree vertex is split, the interval [L,U] of T is reordered so that the city with the median widest-dimension coordinate is in position ⎣(L+U)/2⎦ and all cities to its left have widest-dimension coords ≤ the median.
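The construction above can be sketched in a few lines of Python. This is a minimal illustration, not Bentley's code: the function name `build_kd_tree`, the dictionary layout of each tree vertex, and the use of 0-based positions in T are all assumptions made for the example. Sorting each interval stands in for linear-time median selection, so this sketch runs in O(N log² N) rather than O(N log N).

```python
import random

def build_kd_tree(points, bucket=5):
    """Return (T, D, H) for a list of (x, y) points (hypothetical layout).

    T: permutation of point indices.
    D: dict k -> tree vertex record, heap-style indexing with the root at k = 1.
    H: H[i] = index of the leaf tree vertex containing point i.
    """
    n = len(points)
    T = list(range(n))
    D = {}
    H = [0] * n

    def split(k, lo, hi):              # vertex k owns T[lo..hi], inclusive
        if hi - lo + 1 <= bucket:      # stop: box holds `bucket` or fewer cities
            D[k] = {"L": lo, "U": hi, "leaf": True}
            for i in T[lo:hi + 1]:
                H[i] = k
            return
        seg = T[lo:hi + 1]
        # Widest dimension of the bounding box of the cities in this vertex.
        spans = [max(points[i][dim] for i in seg) - min(points[i][dim] for i in seg)
                 for dim in (0, 1)]
        dim = 0 if spans[0] >= spans[1] else 1
        # Sorting is a simple stand-in for linear-time median selection.
        T[lo:hi + 1] = sorted(seg, key=lambda i: points[i][dim])
        mid = (lo + hi) // 2           # index of the median, floor((L+U)/2)
        D[k] = {"L": lo, "U": hi, "leaf": False,
                "dim": dim, "median": points[T[mid]][dim]}
        split(2 * k, lo, mid)          # lower child
        split(2 * k + 1, mid + 1, hi)  # higher child

    split(1, 0, n - 1)
    return T, D, H

random.seed(1)
demo_points = [(random.random(), random.random()) for _ in range(200)]
T, D, H = build_kd_tree(demo_points)
```

Because parent and child indices are implicit (⎣k/2⎦, 2k, 2k+1), each record only needs the interval, the cut dimension, the median value, and the leaf flag.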

Operations

• Construct k-d Tree: O(NlogN)

• Delete or Undelete Points (without rebalancing): O(1)

• Nearest Neighbor Search: Find undeleted point nearest to

a specified member of the point set: “Typically” O(logN)

• Fixed Radius Search: Return all undeleted points that are within a specified distance of a specified member of the point set: “Typically” O(logN)

• “Ball Search” – will be described later when we need it.

Finding nearest neighbor of point v

1. Let the coordinates for v be (x,y).

2. Use array H to find the index k of the tree vertex structure for the leaf containing v.

3. Find the nearest neighbor u of v among the cities in the interval of T specified by the tree vertex D[k].

4. Let Δ = d(u,v). Any point (x’,y’) closer to v than u must have x-Δ ≤ x’ ≤ x+Δ and y-Δ ≤ y’ ≤ y+Δ, so we need only consider cities in leaves whose regions intersect this square, which we can discover by going up the tree.

Finding nearest neighbor of point v:

The recursive search: Going up.

Initially, all four directions (up, down, left, right) are live. Set k to be the index of the leaf that contains v.

Repeat until done:

1. If k = 1 or all four directions are dead, we are done.

2. Let D[k] be the current Tree Vertex.

3. Consider the parent Tree Vertex D[⎣k/2⎦], and let D[k’] be the sibling of D[k]. If D[k’] has already been explored, set k ← ⎣k/2⎦ and continue.

4. If the parent’s other child is in a dead direction, set k ← ⎣k/2⎦ and continue.

5. Test whether the parent’s median (in its specified direction) is contained in the current square.

   1. If yes, call “ExploreDown(k’)”.

   2. Otherwise, declare this direction dead.

6. Set k ← ⎣k/2⎦ and continue.

Finding nearest neighbor of point v:

ExploreDown(k)

1. If D[k] is a leaf, let w be the closest point to v in the list of points for D[k].

   1. If d(v,w) < Δ, declare w to be the new nearest neighbor and set Δ = d(v,w), thus shrinking the current square.

   2. Return.

2. Otherwise, D[k] is an internal vertex, with at least one of its two children potentially intersecting the current square. Let k’ be the index of the child that lies to the “inside” direction of the median, and let k’’ be the index of the other child.

3. Call ExploreDown(k’).

4. If the median lies entirely outside the current square, declare the relevant direction dead and return.

5. Otherwise, call ExploreDown(k’’) and then return.
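A simplified sketch of the bottom-up search follows. It keeps the two ideas from the slides (start in the leaf given by H, then climb and call ExploreDown on a sibling only when the parent's median cuts the current square) but, as a deliberate simplification, omits the live/dead direction bookkeeping, so it always climbs to the root. The names `build`, `nearest_neighbor`, and the tuple layout of D are assumptions for this example.

```python
import math
import random

def build(points, bucket=4):
    """Compact k-d tree build: D[k] = (L, U, dim, median); dim is None for leaves."""
    n = len(points)
    T, D, H = list(range(n)), {}, [0] * n
    def split(k, lo, hi):
        if hi - lo + 1 <= bucket:
            D[k] = (lo, hi, None, None)
            for i in T[lo:hi + 1]:
                H[i] = k
            return
        seg = T[lo:hi + 1]
        spans = [max(points[i][d] for i in seg) - min(points[i][d] for i in seg)
                 for d in (0, 1)]
        dim = 0 if spans[0] >= spans[1] else 1
        T[lo:hi + 1] = sorted(seg, key=lambda i: points[i][dim])
        mid = (lo + hi) // 2
        D[k] = (lo, hi, dim, points[T[mid]][dim])
        split(2 * k, lo, mid)
        split(2 * k + 1, mid + 1, hi)
    split(1, 0, n - 1)
    return T, D, H

def nearest_neighbor(v, points, T, D, H, deleted):
    """Return the undeleted point nearest to point v (other than v), or None."""
    best = [math.inf, None]                         # [Delta, u]

    def scan_leaf(k):
        lo, hi, _, _ = D[k]
        for i in T[lo:hi + 1]:
            if i != v and not deleted[i]:
                dist = math.dist(points[v], points[i])
                if dist < best[0]:
                    best[0], best[1] = dist, i      # shrink the current square

    def explore_down(k):
        _, _, dim, median = D[k]
        if dim is None:
            scan_leaf(k)
            return
        # Visit the child on v's side of the median first.
        inside, other = ((2 * k, 2 * k + 1) if points[v][dim] <= median
                         else (2 * k + 1, 2 * k))
        explore_down(inside)
        if abs(points[v][dim] - median) < best[0]:  # median inside the square
            explore_down(other)

    k = H[v]
    scan_leaf(k)
    while k > 1:                                    # going up
        parent = k // 2
        _, _, dim, median = D[parent]
        if abs(points[v][dim] - median) < best[0]:
            explore_down(k ^ 1)                     # k ^ 1 is the sibling of k
        k = parent
    return best[1]

random.seed(2)
pts = [(random.random(), random.random()) for _ in range(300)]
T, D, H = build(pts)
deleted = [random.random() < 0.3 for _ in pts]
```

Deleting or undeleting a point is just flipping its entry in `deleted`, which matches the O(1) cost quoted for those operations.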

Fixed Radius Search

Implemented just like Nearest Neighbor Search, except that Δ is

fixed and part of the query, and we return all vertices in the

encountered leaf nodes that satisfy the distance criterion.

Nearest Neighbor in “O(NlogN)”

1. Construct a k-d tree on the cities.

2. Pick a starting city cπ(1) and delete it from the k-d tree.

3. While there remains an unvisited city, let the current last city be cπ(i).

• Delete cπ(i) from the k-d tree, and use a Nearest Neighbor Search to find the nearest unvisited (undeleted) city to cπ(i). Call that city cπ(i+1) and declare it the new “current last city”.

4. Add an edge from cπ(N) to cπ(1).
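The loop above can be sketched as follows. To keep the example short and self-contained, the Nearest Neighbor Search is replaced by a linear scan over undeleted cities, so this version runs in O(N²); with a k-d tree search in its place the structure of the loop is unchanged. The function name and the demo data are illustrative assumptions.

```python
import math

def nearest_neighbor_tour(points, start=0):
    """Return the visiting order pi as a list of city indices."""
    n = len(points)
    deleted = [False] * n

    def nn_search(v):
        # Linear-scan stand-in for the k-d tree Nearest Neighbor Search.
        best, best_d = None, math.inf
        for i in range(n):
            if not deleted[i]:
                d = math.dist(points[v], points[i])
                if d < best_d:
                    best, best_d = i, d
        return best

    tour = [start]
    deleted[start] = True              # visited cities are deleted
    while len(tour) < n:
        nxt = nn_search(tour[-1])      # nearest unvisited city to the current last city
        deleted[nxt] = True
        tour.append(nxt)
    return tour                        # the closing edge joins tour[-1] to tour[0]

demo_points = [(0, 0), (3, 0), (1, 0), (1, 1), (3, 1)]
demo_tour = nearest_neighbor_tour(demo_points)
print(demo_tour)  # [0, 2, 3, 4, 1]
```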

Greedy (Lazily) in “O(NlogN)”

Initialization

• Construct a k-d tree on the set C of cities. Let G be a graph with vertex set C and (initially) no edges. We will maintain the property that the k-d tree contains only vertices with degree 0 or 1 in G. We will also maintain, for each degree-1 city c, the identity tail(c) of the other end of the path containing c.

• For each city c, perform a Nearest Neighbor Search to identify its nearest neighbor c’ and add the triple 〈c,c’,dist(c,c’)〉 to a priority queue, sorted by increasing value of the third component.

In what follows, we shall say that an edge {c,c’} is “legal” if adding it to the

current graph G does not create

a) A vertex of degree 3 or

b) A cycle of length less than N.

Greedy (Lazily) in “O(NlogN)”

Choosing the next edge to add

While not yet a Hamilton Path:

• While we haven’t yet selected a champion edge,

– Extract the minimum 〈c,c’,d(c,c’)〉 triple from the priority queue and let Δ = d(c,c’).

– While {c,c’} is not legal,

  • If c’ = tail(c), we temporarily delete c’ from the k-d tree and do a Nearest Neighbor Search to find a new nearest neighbor c’ for c.

– Once we have obtained a legal {c,c’}, undelete all the cities deleted during the interior while loop.

– If d(c,c’) ≤ Δ, declare {c,c’} to be the champion; otherwise, insert the triple 〈c,c’,d(c,c’)〉 into the priority queue (there is no champion yet).

Greedy (Lazily) in “O(NlogN)”

Handling the Chosen Legal Edge

• Add {c,c’} to G.

• If either c or c’ now has degree 2, delete it (permanently) from the k-d tree. We also have to update some tail( ) values:

– If both c and c’ had degree 0 previously, set tail(c) = c’ and tail(c’) = c.

– If c was previously degree-1 and c’ was degree 0, set tail(c’) = tail(c) and tail(tail(c)) = c’.

– If c’ was previously degree-1 and c was degree 0, set tail(c) = tail(c’) and tail(tail(c’)) = c.

– If both c and c’ were degree-1, set tail(tail(c)) = tail(c’) and tail(tail(c’)) = tail(c).

(Figure: the path tail(c) ... c joined by the new edge {c,c’} to the path c’ ... tail(c’).)

Once we have constructed a Hamilton path, we get a tour by adding an edge between the two endpoints of the path.
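The lazy scheme can be sketched as below. Several details are assumptions made for the example rather than taken from the slides: the Nearest Neighbor Search is a linear scan over undeleted cities, tail(c) is initialized to c for isolated cities (which folds the four tail-update cases into one assignment), triples whose first city has reached degree 2 are simply discarded, and a city that still has degree 1 after receiving an edge is given a fresh triple.

```python
import heapq
import math
import random

def greedy_path_edges(points):
    """Return the N-1 edges of the Greedy Hamilton path."""
    n = len(points)
    degree = [0] * n
    tail = list(range(n))          # tail[c] == c while c is isolated
    deleted = [False] * n          # True once a city has degree 2
    dist = lambda a, b: math.dist(points[a], points[b])

    def nn_search(c):
        # Linear-scan stand-in for the k-d tree search over undeleted cities.
        cands = [i for i in range(n) if i != c and not deleted[i]]
        return min(cands, key=lambda i: dist(c, i)) if cands else None

    def push(c):
        c2 = nn_search(c)
        if c2 is not None:
            heapq.heappush(pq, (dist(c, c2), c, c2))

    pq = []
    for c in range(n):
        push(c)

    edges = []
    while len(edges) < n - 1:
        delta, c, c2 = heapq.heappop(pq)
        if degree[c] == 2:
            continue               # c can no longer take an edge
        hidden = []
        # {c,c2} is illegal if c2 has degree 2 or would close a short cycle.
        while deleted[c2] or (degree[c] == 1 and c2 == tail[c]):
            if not deleted[c2]:
                deleted[c2] = True     # temporary deletion of tail(c)
                hidden.append(c2)
            c2 = nn_search(c)
        for h in hidden:
            deleted[h] = False
        d = dist(c, c2)
        if d > delta:                  # no champion yet: reinsert and retry
            heapq.heappush(pq, (d, c, c2))
            continue
        # Champion: add the edge and join the two paths.
        tc, tc2 = tail[c], tail[c2]
        tail[tc], tail[tc2] = tc2, tc
        edges.append((c, c2))
        for x in (c, c2):
            degree[x] += 1
            if degree[x] == 2:
                deleted[x] = True      # permanent deletion
        if degree[c] == 1:
            push(c)                    # assumption: c gets a fresh triple
    return edges

random.seed(7)
greedy_points = [(random.random(), random.random()) for _ in range(60)]
greedy_edges = greedy_path_edges(greedy_points)
```

Stored keys never exceed the true nearest-legal distance, because the set of legal partners only shrinks; that is why an extracted triple whose recomputed distance is still ≤ Δ can be accepted immediately.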

Savings Heuristic in “O(NlogN)”

• Our implementation mimics our lazy approach to Greedy, except that the triples in the priority queue will be 〈c,c’,s(c,c’)〉, the third component being the savings from the shortcut, not the distance between c and c’. (Also, the hub city c1 does not take part in any triple.)

• The key difference is in computing the greatest savings for a given city c, where the savings for a pair of cities c and c’ equals d(c1,c) + d(c1,c’) – d(c,c’).

• Nominally, we want to find, for each c, that c’ which yields the biggest savings, and insert the corresponding triple into the priority queue. However, this is not strictly necessary.

• Call a city c’ “good” for c if d(c1,c’) ≤ d(c1,c) and otherwise call it “bad”.

• CLAIM: For a given c, it is not harmful to ignore bad candidates for c’.

• PROOF: Suppose c’ is not good for c, in which case d(c1,c’) > d(c1,c). If the edge {c,c’} provides the biggest savings overall, then it will also provide the biggest savings for c’, and since c is good for c’ in this case, the edge {c,c’} will still be in the priority queue, as part of the triple 〈c’,c,s(c’,c)〉, even under the restriction to only good candidates.

Savings Heuristic in “O(NlogN)”

• Construct a standard k-d tree as we did for Greedy.

• For each city c, perform a modified Nearest Neighbor search to identify a good neighbor c’ for c (if any exist) that yields the maximum savings, and add the triple 〈c,c’,s(c,c’)〉 to the priority queue. The search proceeds as follows.

• If there are any good cities in the leaf bucket containing c, let S be the maximum savings for any such city, and let c’ be that city. Otherwise, let c’ be c and set S to -∞.

• CLAIM: If a good city c’’ for c yields savings S’’ > S, then we must have d(c,c’’) < 2d(c1,c) – S.

• PROOF: By definition we have S’’ = d(c1,c’’) + d(c1,c) – d(c,c’’). Hence d(c,c’’) = d(c1,c’’) + d(c1,c) – S’’ < 2d(c1,c) – S, since the fact that c’’ is good for c implies d(c1,c’’) ≤ d(c1,c).

• So, from now on mimic Nearest Neighbor Search with d(c,c’) < 2d(c1,c) – S playing the role of d(c,c’) < Δ.

• The rest of the implementation closely mimics that for Greedy, with our modified Nearest Neighbor Search replacing the original.
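Both claims about “good” candidates can be checked numerically by brute force. The sketch below is only an illustration: the helper names (`savings`, `best_good_partner`, `search_radius`), the choice of city 0 as the hub, and the random instance are all assumptions, and linear scans replace the modified k-d tree search.

```python
import math
import random

def savings(points, hub, a, b):
    d = lambda p, q: math.dist(points[p], points[q])
    return d(hub, a) + d(hub, b) - d(a, b)

def is_good(points, hub, c, x):
    """x is 'good' for c if it is no farther from the hub than c is."""
    return math.dist(points[hub], points[x]) <= math.dist(points[hub], points[c])

def best_good_partner(points, hub, c):
    """Max-savings partner for c among good cities, or None if there are none."""
    good = [x for x in range(len(points))
            if x not in (hub, c) and is_good(points, hub, c, x)]
    return max(good, key=lambda x: savings(points, hub, c, x)) if good else None

def search_radius(points, hub, c, S):
    """Bound from the second claim: a better good city lies within this distance."""
    return 2 * math.dist(points[hub], points[c]) - S

random.seed(3)
pts = [(random.random(), random.random()) for _ in range(40)]
hub = 0
cities = range(1, len(pts))

# Best savings found when every city only looks at its good candidates.
restricted = [savings(pts, hub, c, best_good_partner(pts, hub, c))
              for c in cities if best_good_partner(pts, hub, c) is not None]
best_restricted = max(restricted)
# Best savings over all pairs, with no restriction.
best_overall = max(savings(pts, hub, a, b)
                   for a in cities for b in cities if a != b)
```

On this instance `best_restricted` and `best_overall` agree, which is what the first claim predicts: the overall best pair always survives as the triple owned by the endpoint farther from the hub.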

Nearest Addition in “O(NlogN)”

Initialization

• Construct a standard k-d tree as we did for Greedy.

• Perform Nearest Neighbor Searches for each city c to find its nearest neighbor n(c).

• Use linear search to find a city c with minimum d(c,n(c)). Let c’ = n(c).

• Let our initial tour consist of the two cities c and c’, delete c and c’ from the k-d tree, compute the new nearest neighbors n(c) and n(c’) for c and c’, and add the triples 〈c,n(c),d(c,n(c))〉 and 〈c’,n(c’),d(c’,n(c’))〉 to a new priority queue.

• Inductively, we shall assume that the cities in the tour are deleted from the k-d tree, while the non-tour cities remain undeleted. In addition, the priority queue contains entries for every city in the current tour (and only those), with the triples satisfying the property that, for each triple 〈c,c’,d(c,c’)〉, if c’ is not in the current tour, then it is the nearest non-tour city to c. Finally, we store the tour in a doubly-linked list, with each city linked to its two tour neighbors, so that the two edges involving a given vertex can be found in constant time.

Nearest Addition in “O(NlogN)”

Doing the Insertions

While we have not yet constructed a tour on all the cities,

• While we have not yet found a valid candidate for the next city to be inserted,

– Extract the minimum 〈c,c’,d(c,c’)〉 from the priority queue.

– If city c’ is currently in the tour, perform a Nearest Neighbor Search to identify the nearest non-tour city c’’ to c, and insert the triple 〈c,c’’,d(c,c’’)〉 into the priority queue. Otherwise, c’ is a valid candidate.

• Let {c,c1} and {c,c2} be the two tour edges involving c.

• Determine into which of the two edges c’ can be more cheaply inserted and perform that insertion.

• Delete c’ from the k-d tree, compute its nearest non-tour neighbor c’’, and insert 〈c’,c’’,d(c’,c’’)〉 into the priority queue.

Note: A simplified variant on this implementation will construct

MSTs in time “O(Nlog(N))”.
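A compact sketch of Nearest Addition along these lines is given below. It is an illustration under stated assumptions, not the lecture's implementation: the Nearest Neighbor Search is a linear scan over non-tour cities (so the sketch is quadratic), the tour is held in two arrays `nxt` and `prv` acting as a doubly-linked list, and after an insertion a fresh triple is pushed for c as well as for the new city, so that every tour city keeps an entry in the queue. At least two cities are assumed.

```python
import heapq
import math
import random

def nearest_addition(points):
    n = len(points)
    d = lambda a, b: math.dist(points[a], points[b])
    in_tour = [False] * n              # True == deleted from the k-d tree
    nxt, prv = [None] * n, [None] * n  # doubly-linked tour
    pq = []

    def nn_non_tour(a):
        # Linear-scan stand-in for a k-d tree Nearest Neighbor Search.
        cands = [x for x in range(n) if x != a and not in_tour[x]]
        return min(cands, key=lambda x: d(a, x)) if cands else None

    def push(a):
        b = nn_non_tour(a)
        if b is not None:
            heapq.heappush(pq, (d(a, b), a, b))

    # Initial tour: a closest pair, found by linear search over n(c).
    start = min(range(n), key=lambda a: d(a, nn_non_tour(a)))
    other = nn_non_tour(start)
    in_tour[start] = in_tour[other] = True
    nxt[start] = prv[start] = other
    nxt[other] = prv[other] = start
    push(start)
    push(other)

    size = 2
    while size < n:
        _, c, new = heapq.heappop(pq)
        if in_tour[new]:
            push(c)                    # stale triple: refresh it and retry
            continue
        # Only the two tour edges at c are candidates.
        before = d(prv[c], new) + d(new, c) - d(prv[c], c)
        after = d(c, new) + d(new, nxt[c]) - d(c, nxt[c])
        left, right = (prv[c], c) if before < after else (c, nxt[c])
        nxt[left], prv[new], nxt[new], prv[right] = new, left, right, new
        in_tour[new] = True
        size += 1
        push(c)                        # assumption: c re-enters the queue
        push(new)

    tour, city = [start], nxt[start]
    while city != start:
        tour.append(city)
        city = nxt[city]
    return tour

random.seed(5)
na_points = [(random.random(), random.random()) for _ in range(80)]
na_tour = nearest_addition(na_points)
```

Dropping the edge-choice step and recording the pair (c, new) instead gives the order in which Prim's algorithm would grow a minimum spanning tree, which is the simplified variant mentioned in the note above.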

Nearest Insertion in “O(NlogN)”

• The choice of how to start and which city to insert next is the

same as for Nearest Addition.

• The new complexity is that, instead of just two choices of where

to insert the new city, now ALL tour edges are potential insertion

candidates.

• That is going to be complicated, so let’s start with something a bit

simpler and almost as good, an algorithm due to [Bentley, 1992]

that is intermediate between Nearest Addition and Nearest

Insertion:

• Nearest Augmented Addition (NA+): Choose the city c to be

inserted as in Nearest Addition, and let c’ be the nearest tour city

to it. As candidate insertion edges, consider all tour edges having

an endpoint c’’ with d(c,c’’) ≤ 2d(c,c’).

Nearest Augmented Addition in “O(NlogN)”

Finding the Best Insertion

• We maintain an additional k-d tree, this one containing all the

cities, but with all the cities initially marked “deleted” and only

undeleted when they are added to the tour.

• To find the candidate edges for city c with nearest tour neighbor

c’, we do a fixed radius search in our second kd-tree for c with

radius 2d(c,c’).

• For each city c’’ found in this search, we determine the cost of

inserting c into each of the tour edges with endpoint c’’, and insert

c into the best edge found, over all c’’ returned by the fixed

radius search.

Nearest Insertion in “O(NlogN)”

Finding the Best Insertion

• We exploit a theorem from [Bentley, 1992]:

• Best Insertion Theorem: Let c’ be the nearest tour neighbor of

non-tour city c, and for any tour city b, let longer(b) be the length

of the longer tour edge incident on b. The best tour edge into

which to insert c must have an endpoint e satisfying one of the

two following restrictions:

a) d(c,e) ≤ 1.5d(c,c’), or

b) d(c,e) ≤ 1.5longer(e).

• To exploit this, we need to use the “Ball search” operation

mentioned earlier, which we will now explain. We will prove the

theorem later.
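Before the proof, the theorem can be sanity-checked by brute force on a random instance. The sketch below is illustrative only; the helper names, the random tour, and the linear scans (standing in for the Fixed Radius and Ball searches) are assumptions made for the example.

```python
import math
import random

def insertion_cost(points, c, x, y):
    d = lambda p, q: math.dist(points[p], points[q])
    return d(c, x) + d(c, y) - d(x, y)

def candidate_endpoints(points, tour, c):
    """Tour cities e with d(c,e) <= 1.5 d(c,c') or d(c,e) <= 1.5 longer(e)."""
    d = lambda p, q: math.dist(points[p], points[q])
    m = len(tour)
    nearest = min(d(c, e) for e in tour)           # d(c, c')
    found = set()
    for pos, e in enumerate(tour):
        longer = max(d(e, tour[pos - 1]), d(e, tour[(pos + 1) % m]))
        if d(c, e) <= 1.5 * nearest or d(c, e) <= 1.5 * longer:
            found.add(e)
    return found

def best_edge(points, tour, c):
    """Tour edge with the minimum insertion cost for c, by exhaustive search."""
    m = len(tour)
    edges = [(tour[i], tour[(i + 1) % m]) for i in range(m)]
    return min(edges, key=lambda e: insertion_cost(points, c, *e))

random.seed(4)
pts = [(random.random(), random.random()) for _ in range(60)]
tour = list(range(50))            # an arbitrary tour on the first 50 cities
random.shuffle(tour)
checks = []
for c in range(50, 60):           # the remaining cities play the role of c
    f, g = best_edge(pts, tour, c)
    cands = candidate_endpoints(pts, tour, c)
    checks.append(f in cands or g in cands)
```

Every entry of `checks` should be `True`: the best edge always has an endpoint in the candidate set, so only edges incident on candidates need to be costed.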

Nearest Insertion in “O(NlogN)”

Finding the Best Insertion

Ball Search

• Suppose we have a positive distance rad(c’) associated with each undeleted city c’. Given a city c, return all those undeleted c’ such that d(c,c’) ≤ rad(c’).

[We wish to implement this in an environment where cities can be undeleted and the values of rad(c’) can change dynamically.]

• The basic idea is to store, with each leaf vertex, a list of all the undeleted cities c’ that are within distance rad(c’) of some city in the leaf vertex.

• To construct these lists, perform Fixed-Radius searches for each undeleted c’, with radius set to rad(c’).

• We can update the value of rad(c’) for a given c’ by performing a Fixed-Radius search for c’ with the larger of the before and after values for rad(c’), and either adding or removing c’ from the leaf lists, as appropriate.

Nearest Insertion in “O(NlogN)”

Finding the Best Insertion

To set up our method for finding the best insertion, we maintain the property

that the undeleted cities in our k-d tree are precisely the tour cities, and that

for each such city c’’, we have rad(c’’) = 1.5longer(c’’). This involves at most

three Fixed Radius searches after each insertion: one for the inserted vertex

and one for each of its new tour neighbors.

By the Best Insertion Theorem, we can then find all potential candidates for

the best place to insert c by

1. Doing a Fixed Radius search for c with radius 1.5d(c,c’), where c’ is the (already identified) city in the tour that is nearest to c.

2. Doing a Ball Search for c.

The best edge will be a tour edge incident on one of our candidates, by the Theorem.

With luck, this will yield far fewer candidates than there are cities in the current tour, at least when the latter number is large.

Proof of Best Insertion Theorem

Recall: c is the city to be inserted and c’ is the nearest tour city to c.

We need to show that the best place to insert c into the tour is next to a city e satisfying one of

a) d(c,e) ≤ 1.5d(c,c’), or

b) d(c,e) ≤ 1.5longer(e).

Observation: Denote the cost of inserting a vertex c into a tour edge {x,y}, which by definition is d(c,x) + d(c,y) - d(x,y), as cost(c,x-y). Then cost(c,x-y) ≤ 2d(c,x).

Proof: The triangle inequality implies d(c,y) ≤ d(c,x) + d(x,y). Thus we have cost(c,x-y) ≤ d(c,x) + (d(c,x) + d(x,y)) – d(x,y) = 2d(c,x).

We exploit this to prove the Best Insertion Theorem by contradiction. Assume

that {f,g} yields the minimum value of cost(c,x-y) for {x,y} a tour edge and c the

city we wish to insert, but neither (a) nor (b) holds when e is either f or g.

Case 1 [Long {f,g}]: d(f,g) > d(c,c’).

Let c’’ be either tour neighbor of c’.

Assume without loss of generality that d(f,g) = 1, and hence both longer(f) and longer(g) are at least 1.

Since this is the “Long” case, this means that d(c,c’) < 1.

By assumption we thus have both d(c,f) > 1.5 and d(c,g) > 1.5.

By our observation, cost(c,c’-c’’) ≤ 2d(c,c’) < 2.

By definition, cost(c,f-g) = d(c,f) + d(c,g) – d(f,g) > 1.5 + 1.5 – 1 = 2.

So cost(c,f-g) > cost(c,c’-c’’), a contradiction.

Case 2 [Short {f,g}]: d(f,g) ≤ d(c,c’).

Assume without loss of generality that d(c,c’) = 1.

Then, since this is the “Short” case, we must have that d(f,g) ≤ 1.

By assumption we thus have both d(c,f) > 1.5 and d(c,g) > 1.5.

By our observation, cost(c,c’-c’’) ≤ 2d(c,c’) = 2.

By definition, cost(c,f-g) = d(c,f) + d(c,g) – d(f,g) > 1.5 + 1.5 – 1 = 2.

So cost(c,f-g) > cost(c,c’-c’’), a contradiction.

Cheapest Insertion in “O(NlogN)”

• Construct a standard k-d tree as we did for Greedy.

• The initial 2-city tour is constructed just as in Nearest Addition.

• For subsequent insertions, we will maintain a (lazily evaluated) priority queue whose entries are triples 〈{a,b},c,d(a,c)+d(b,c)〉 for {a,b} a tour edge and c a non-tour city that yields the minimum value for Δ = d(a,c) + d(b,c).

• We determine the next insertion by repeatedly extracting the triple 〈{a,b},c,Δ〉 with the minimum Δ from the priority queue, discarding it if {a,b} is not a tour edge, updating and reinserting it if {a,b} remains a tour edge but c is now in the tour, and otherwise stopping. Once we have stopped we know that the current triple denotes the cheapest insertion, and we perform it.

• To compute the currently correct triple for a tour edge {a,b}, we proceed as described on the next slide. (This computation is needed when {a,b} first becomes a tour edge, or when a triple for {a,b} is extracted from the priority queue and, although {a,b} remains a tour edge, c is now in the tour.)

Cheapest Insertion in “O(NlogN)”

Finding the cheapest c for {a,b}

1) First, use a Nearest Neighbor search to find a nearest neighbor c of a, with ties broken in favor of the nearest neighbor that minimizes d(a,c) + d(b,c).

2) Next, do a fixed-radius search from b with radius d(b,c), and among the returned cities (which must include c itself) find a city e that yields the minimum value for d(b,e) + d(a,e).

Claim: The city e thus found yields the cheapest possible insertion for {a,b}.

Proof: Suppose not, and some city f yields a cheaper insertion. We thus

must have d(a,f) + d(b,f) < d(b,e) + d(a,e) ≤ d(a,c) + d(b,c). By step (1), we

must have d(a,f) ≥ d(a,c), which implies we must have d(b,f) < d(b,c). But this

means that f would have been considered in step (2), and so we cannot have

d(b,f) + d(a,f) < d(b,e) + d(a,e), a contradiction.
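The two-step search and its claim can be illustrated as follows. This is a sketch under assumptions: `cheapest_for_edge` is a hypothetical name, and both the Nearest Neighbor search and the fixed-radius search are written as linear scans over the non-tour cities rather than k-d tree queries.

```python
import math
import random

def cheapest_for_edge(points, non_tour, a, b):
    """Return the non-tour city e minimizing d(a,e) + d(b,e) for tour edge {a,b}."""
    d = lambda p, q: math.dist(points[p], points[q])
    # Step 1: a nearest neighbor c of a, ties broken by smaller d(a,c) + d(b,c).
    c = min(non_tour, key=lambda x: (d(a, x), d(a, x) + d(b, x)))
    # Step 2: fixed-radius search from b with radius d(b,c); c itself qualifies.
    radius = d(b, c)
    ball = [x for x in non_tour if d(b, x) <= radius]
    return min(ball, key=lambda x: d(a, x) + d(b, x))

random.seed(6)
ci_points = [(random.random(), random.random()) for _ in range(100)]
a, b = 0, 1                       # pretend {0, 1} is a tour edge
non_tour = list(range(2, 100))
e = cheapest_for_edge(ci_points, non_tour, a, b)
brute = min(non_tour, key=lambda x: math.dist(ci_points[a], ci_points[x])
            + math.dist(ci_points[b], ci_points[x]))
```

Here `e` and `brute` give the same insertion cost, while step 2 only examines the cities inside the ball around b instead of all non-tour cities.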

Convex Hull Cheapest Insertion in “O(NlogN)”

The same as Cheapest Insertion, except for the choice of starting tour.

Convex Hull Greatest Angle Insertion in “O(NlogN)”

A bit more complicated…

Figure from [JBMR94]

RI, RA, RA+ in “O(NlogN)”

1. Build a k-d tree on the cities, with all cities marked deleted (no priority queue needed).

2. Pick two random cities c and c’ to start the tour, and mark them undeleted.

3. While not yet done

   1) Pick a random non-tour city c.

   2) Do a Nearest Neighbor search from c to find its nearest tour neighbor c’.

   3) We then use the method for choosing the place to insert that is used by NI, NA, or NA+, respectively.

   4) Insert c into the chosen edge and mark it as undeleted.

FI, FA, and FA+ in “O(NlogN)”

As usual, we start by constructing a k-d tree on all the cities, with all cities

deleted except those in the initial tour.

Then, for each city c not in our initial tour, we compute its nearest tour neighbor

c’, and enter the triple 〈c,c’,d(c,c’)〉 into a priority queue, sorted by decreasing

(rather than increasing) order of the third component.

To find the next city to insert:

1. Extract the maximum element 〈c,c’,d(c,c’)〉 in the priority queue.

2. Perform a Nearest Neighbor Search on c, and if it finds a tour city c’’ other than c’, insert 〈c,c’’,d(c,c’’)〉 and go back to extracting.

3. Otherwise, we choose to insert vertex c.

We then use the method for choosing the place to insert as used by NI, NA, or

NA+, respectively.

FI, FA, and FA+ in “O(NlogN)”

Hidden Challenge: Constructing the initial 2-city tour.

How do we identify the two most distant cities in O(NlogN) time?

Answer: It can be done.

Suppose C is the minimum-diameter circle that contains all our cities. (This can be constructed in linear time [N. Megiddo, “Linear-time algorithms for linear programming in R3 and related problems,” SIAM J. Comput. 12 (1983), 759-776].)

Claim: There is a pair {a,b} of most-distant cities, both of which lie

on circle C.

Proof by Picture: [On succeeding slides]

Slide C as far right as possible, until it hits a city c.

(Figure: circle C, cities a, b, and c, and the circle of radius d(a,b) with center b.)

There are no cities inside this region: any such city x would have d(x,b) > d(a,b), contradicting the maximality of d(a,b).

{c,b} is also a maximum distance pair, and c is on the circle.

So we may assume there is a maximum distance pair {a,b} with city a on the minimum-diameter circle containing all the cities.

Assume there is a maximum-distance pair {a,b} with one, but not both, endpoints on circle C.

Case 1. The intersections of A and C are in the half of C containing a.

(Figure: circle C through a, city b, and Circle A of radius d(a,b) with center a.)

There are no cities in this closed region: any such city x would have d(x,a) ≥ d(a,b), contradicting either the maximality of d(a,b) or the assumption that no max distance pair has both endpoints on C.

Then we can slide C left by a small ε and it will still contain all cities, but now none will be on its border. Thus, we can shrink C and still enclose all the cities – a contradiction.

Case 2. The intersections of A and C are in the half of C not containing a.

(Figure: circles A and C, cities a, b, and x; Circle A has radius d(a,b) and center a.)

There are no cities in this closed region: any such city x would have d(x,a) ≥ d(a,b), contradicting either the maximality of d(a,b) or the assumption that no max distance pair has both endpoints on C.

Let x be the city on C that is closest to b, and let X be a circle of radius d(a,b) centered at x.

All cities must lie in the darkest region (or on its boundaries).

(Figure: circles A and C, cities a, b, and x, and the direction in which C is slid.)

Split C by a diameter that intersects A between b and the intersection of A and C closest to x.

Note that both a and x must lie on the same side of this diameter, and somewhat distant from it.

Now we can slide C by a small ε in the direction indicated, and all cities will remain inside, but now none will be on the boundary and we can shrink C and still enclose them all.

Finding the farthest pair in time O(NlogN)

So now we know we can restrict attention to cities on the minimum-diameter enclosing circle C.

Sort these cities in order around the circle in time O(NlogN).

For a given boundary city a, we can now find the boundary city b that is most distant from a in constant time:

Construct the diameter of C that has a as an endpoint, and let x be the intersection of that diameter with the other side of the circle.

City b is the boundary city closest to x.

Thus the total time for finding a farthest pair is O(N) to find the smallest enclosing circle, O(NlogN) to sort the cities on the circle, and O(N) to find the farthest city for each boundary city and then determine the farthest over all.

Total time is hence O(NlogN), as hoped.

Farthest Insertion in “O(NlogN)”

• Dirty Little Secret:

• We don’t do what I just told you.

• In Jon Bentley’s Farthest Insertion code (which is used for our

results) the Initial Tour actually consists of the first city in the

input plus the city most distant from it.

• Similarly, his implementations of Nearest Addition, etc., all

start with a tour consisting of the first city in the input plus its

nearest neighbor.

• Advantages: Simpler programming.

• Disadvantages: Results depend on the order in which the input

cities are presented.

Double MST in “O(NlogN)”

•

Create k-d tree: O(NlogN)

•

Build MST using a priority queue and choosing cities to add (and where)

in the same way we chose vertices to add in Nearest Addition.

•

Duplicate the spanning tree: O(N)

•

Find Euler Tour: O(N)

•

Perform “smart shortcuts”: O(N)
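The pipeline can be sketched end to end as follows. Two substitutions are made and should be read as assumptions: the MST is built with a plain O(N²) Prim loop instead of the k-d tree and priority queue, and the shortcuts are the classic ones (skip every city already visited), which reduces the Euler tour of the doubled tree to a depth-first preorder; the “smart shortcuts” named above are not reproduced here.

```python
import math
import random

def double_mst_tour(points):
    """Return (tour, mst_length) using classic shortcuts on the doubled MST."""
    n = len(points)
    d = lambda a, b: math.dist(points[a], points[b])
    # Prim's algorithm, O(N^2): stand-in for the k-d tree / priority queue MST.
    parent = [None] * n
    key = [math.inf] * n
    in_tree = [False] * n
    children = [[] for _ in range(n)]
    key[0] = 0.0
    mst_len = 0.0
    for _ in range(n):
        u = min((x for x in range(n) if not in_tree[x]), key=lambda x: key[x])
        in_tree[u] = True
        if parent[u] is not None:
            children[parent[u]].append(u)
            mst_len += key[u]
        for x in range(n):
            if not in_tree[x] and d(u, x) < key[x]:
                key[x], parent[x] = d(u, x), u
    # An Euler tour of the doubled tree is a depth-first traversal; skipping
    # cities already visited (classic shortcuts) leaves the DFS preorder.
    tour, stack = [], [0]
    while stack:
        u = stack.pop()
        tour.append(u)
        stack.extend(reversed(children[u]))
    return tour, mst_len

def tour_length(points, tour):
    return sum(math.dist(points[tour[i - 1]], points[tour[i]])
               for i in range(len(tour)))

random.seed(8)
dm_points = [(random.random(), random.random()) for _ in range(120)]
dm_tour, dm_mst = double_mst_tour(dm_points)
```

By the triangle inequality the shortcut tour is never longer than the doubled tree, so `tour_length(dm_points, dm_tour)` is at most `2 * dm_mst`.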

Approximate Christofides in “O(NlogN)”

•

Create k-d tree: O(NlogN)

•

Build MST using a priority queue and choosing cities to add (and where)

in the same way we chose vertices to add in Nearest Addition.

•

Add a “greedy” matching on the odd-degree vertices using Nearest

Neighbor searches.

•

Find Euler Tour: O(N)

•

Perform “smart shortcuts”: O(N)

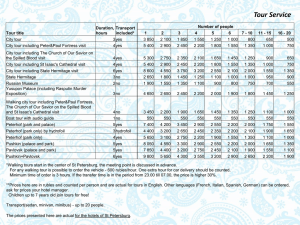

Random Euclidean Performance for N = 1,000,000

(% Excess over HK Bound)

| Algorithm | % |
|---|---|
| Smart-Shortcut Christofides | 9.8 |
| Approximate Smart-Shortcut Christofides | 11.2 |
| Savings | 12.2 |
| Farthest Insertion | 13.5 |
| Greedy | 14.2 |
| Classic-Shortcut Christofides | 14.5 |
| Random Insertion | 15.2 |

Implementation Breakdown

| Algorithm | Data structures | Searches/City | Other Algs |
|---|---|---|---|
| Nearest Neighbor | 1 kd | 1 nn | |
| Greedy | 1 kd, 1 pq, tail | 1+ nn | |
| Savings | 1 kd, 1 pq, tail | 1+ nn* | |
| Nearest Addition | 1 kd, 1 pq | 1+ nn | |
| Nearest Addition+ | 2 kd, 1 pq | 1+ nn, 1 fr | |
| Nearest Insertion | 2 kd, 1 pq | 1+ nn, 1 fr, 1 ball | |
| Cheapest Insertion | 1 kd, 1 pq | 2+ nn*, 2+ fr | |
| Convex Hull, Cheapest Insertion | 1 kd, 1 pq | 2+ nn*, 2+ fr | convex hull |
| Random Addition | 1 kd | 1 nn | |
| Random Addition+ | 1 kd | 1 nn, 1 fr | |
| Random Insertion | 1 kd | 1 nn, 1 fr, 1 ball | |
| Farthest Addition | 1 kd, 1 pq | 2+ nn | |
| Farthest Addition+ | 1 kd, 1 pq | 2+ nn, 1 fr | |
| Farthest Insertion | 1 kd, 1 pq | 2+ nn, 1 fr, 1 ball | |
| Double MST | 1 kd | 1+ nn | euler tour |
| Approximate Christofides | 1 kd | 2+ nn | euler tour |

Running Times, Random Euclidean Instance with N = 1,000,000

Normalized to a 500 MHz DEC Alpha Processor in a Compaq ES40 with 2 GB RAM

| Algorithm | secs. | % |
|---|---|---|
| Read Instance | 1.0 | |
| Strip | 2.8 | 30.1 |
| Spacefilling Curve | 3.0 | 35.1 |
| Nearest Neighbor | 25.6 | 23.3 |
| Random Addition | 36.1 | 40.5 |
| Random Addition+ | 60.0 | 15.5 |
| Greedy [Bentley] | 60.4 | 14.2 |
| Nearest Addition | 78.1 | 32.6 |
| Nearest Addition+ | 95.5 | 27.1 |
| Savings | 99.6 | 12.1 |
| Smart-Shortcut Double MST | 101.4 | 40.0 |
| Approximate Christofides | 130.3 | 11.2 |
| Nearest Insertion | 130.6 | 27.0 |
| Farthest Addition | 148.7 | 43.1 |
| Farthest Addition+ | 175.2 | 13.6 |
| Random Insertion | 202.9 | 15.1 |
| Convex Hull, Cheapest Insertion | 248.7 | 21.9 |
| Cheapest Insertion | 266.1 | 22.1 |
| Farthest Insertion | 316.4 | 13.5 |
| Smart-Shortcut Christofides | 422.9 | 9.8 |
| Greedy [Johnson-McGeoch] | 89.0 | 14.3 |
| 2-Opt [Johnson-McGeoch] | 196.0 | 4.7 |
| 3-Opt [Johnson-McGeoch] | 224.3 | 2.9 |
| Lin-Kernighan [Johnson-McGeoch] | 574.5 | 2.0 |
| Lin-Kernighan [NYYY-6] | 287.9 | 1.7 |
| Lin-Kernighan [NYYY-12] | 507.6 | 1.5 |