Computability

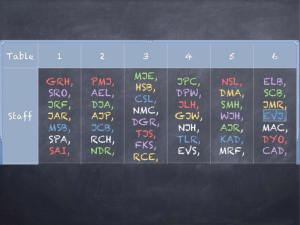

Construct TMs. Decidability.

Preview: next class: diagonalization

and Halting theorem.

Homework/Classwork

• Last Thursday, I showed a design for a TM that accepts {ww | w a string in {0,1}*}. That TM is a decider (it always halts), so the language is decidable.

• What about

– {a^n b^n c^n | n >= 0}

Homework

• Video: reactions to video including reading

from one of the original Turing papers?

Enumerator

• Recall: this was a type of machine that takes no input but prints out members of a language L.

– If a language is Turing Recognizable, then there exists (we

can design) an enumerator that prints out members of the

language. If there exists an enumerator for a language L,

then there is a TM that recognizes it.

– If a language is decidable, we can build an enumerator that prints out its strings in lexical order. If an enumerator prints out the strings of a language in lexical order, then the language is Turing decidable.

• Note: we aren't saying that if a given enumerator doesn't print out

the strings in lexical order, there couldn't be another one that

does….
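The two directions above can be sketched in Python. This is only an illustration under stated assumptions: a Python generator stands in for the enumerator's printer, and the names `strings_in_lexical_order`, `enumerate_language`, `recognize_by_enumeration`, and the sample decider `is_ww` are hypothetical, not part of the lecture.

```python
from itertools import count, islice, product

def strings_in_lexical_order(alphabet):
    """Yield every string over `alphabet`: shortest first, then alphabetically."""
    for length in count(0):
        for letters in product(alphabet, repeat=length):
            yield "".join(letters)

def enumerate_language(decider, alphabet):
    """Enumerator built from a decider: yields members in lexical order."""
    for s in strings_in_lexical_order(alphabet):
        if decider(s):  # safe only because a decider halts on every string
            yield s

def recognize_by_enumeration(enumerator, w):
    """Recognizer built from an enumerator: accept when w is printed.

    If w is not in an infinite language, this loop never ends, which is
    exactly the behavior allowed for a recognizer.
    """
    for s in enumerator:
        if s == w:
            return True
    return False  # reached only if the enumerator itself stops

def is_ww(s):
    """Hypothetical decider for {ww | w in {0,1}*}."""
    half, odd = divmod(len(s), 2)
    return odd == 0 and s[:half] == s[half:]

first_members = list(islice(enumerate_language(is_ww, "01"), 6))
print(first_members)
```

Note that `enumerate_language` depends on the decider always halting; with a mere recognizer, the enumerator would have to interleave simulations of many strings instead of testing them one at a time.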

Enumerator Homework/classwork

• Provide formal definition of enumerator

Decidable vs Turing Recognizable

• A language is decidable if there is a TM that accepts exactly the strings in the language and always halts (it rejects every string not in the language).

• A language is Turing-recognizable if there is a TM that accepts exactly the strings in the language; on strings NOT in the language it may reject or may never halt.

• All decidable languages are TM recognizable.

Hierarchy Bull's eye

Each class is contained in and strictly smaller than the next; the models listed on one line are equivalent:

FSM = NDFSM = regular expressions

deterministic pushdown automaton

pushdown automaton = context-free grammars

Turing decidable = nondeterministic TM decidable

Turing recognizable = nondeterministic TM recognizable

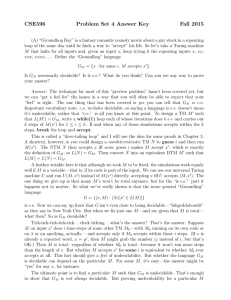

Decidability question

• The language

{<B, w> | B is an FSM that accepts w} is decidable.

That is, there exists a TM that says yes for a

<B,w> when B accepts w, and says no

(stops and says no) if B does not accept w.

• Informal proof:

– Assume some encoding of B that a TM can check. If the first part of the input is not a valid encoding of an FSM, reject. The first states of the TM check the encoding.

– Add to the TM the simulation of B. Record on the tape B's current state and the current position in w. Run the simulation. When the position reaches the end of w, check whether the current state is an accepting state: accept if it is, reject if not.
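A minimal Python sketch of the same two phases (check the encoding, then simulate) follows. The encoding of B as a tuple of states, alphabet, transition table, start state, and accepting states is an assumption made for illustration, as are the names `dfa_accepts` and `EVEN_ONES`.

```python
def dfa_accepts(dfa, w):
    """Decide whether the encoded deterministic FSM accepts w. Always halts."""
    states, alphabet, delta, start, accepting = dfa

    # Phase 1: reject anything that is not a valid encoding.
    if start not in states or not accepting <= states:
        return False
    for q in states:
        for a in alphabet:
            if delta.get((q, a)) not in states:  # missing or bad transition
                return False
    if any(ch not in alphabet for ch in w):
        return False

    # Phase 2: simulate, tracking the current state and the position in w.
    state = start
    for ch in w:  # one step per input symbol, so the loop ends
        state = delta[(state, ch)]
    return state in accepting

# Hypothetical example: strings over {0,1} with an even number of 1s.
EVEN_ONES = (
    {"even", "odd"},
    {"0", "1"},
    {("even", "0"): "even", ("even", "1"): "odd",
     ("odd", "0"): "odd", ("odd", "1"): "even"},
    "even",
    {"even"},
)

print(dfa_accepts(EVEN_ONES, "1010"), dfa_accepts(EVEN_ONES, "1011"))
```

The simulation takes exactly len(w) steps, which is why this procedure is a decider and not just a recognizer.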

More…

• The language

{<E,w> | E is a regular expression that

generates w} is decidable

– convert E to FSM

– Build TM to simulate the FSM. Try on w.

– Accept or reject

The language {<A> | A is an FSM and L(A) is empty} is decidable

• Design encoding for FSM: list states and list triples

representing the arcs: pairs of states and the letter. Build TM

that checks for valid encoding. Note: alphabet includes

symbol for each state.

• Grow alphabet to include marked state for every state symbol.

• Add to the TM the code that does the following marking: mark the start state. Scanning the triples, mark any state that has a transition from a marked state. Repeat for a new round. Stop when no new state has been marked in the round. This takes at most N rounds, where N is the number of states. Are any accept states marked? If no, accept (L(A) is empty); otherwise reject (some string reaches an accept state).
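The marking rounds can be sketched in Python as follows. The triple layout `(source, letter, target)` and the function name `fsm_language_is_empty` are assumptions for illustration. The sketch accepts exactly when no accept state ends up marked, because a marked accept state means some string drives the FSM from the start state to acceptance.

```python
def fsm_language_is_empty(transitions, start, accepting):
    """Return True when the FSM accepts no string at all.

    transitions: list of triples (source, letter, target).
    """
    marked = {start}
    changed = True
    while changed:  # each round marks a new state or ends the loop
        changed = False
        for source, _letter, target in transitions:
            if source in marked and target not in marked:
                marked.add(target)
                changed = True
    # Empty language <=> no accept state is reachable (marked).
    return not (marked & set(accepting))

# Hypothetical example: accept state "c" cannot be reached from "a".
arcs = [("a", "0", "b"), ("b", "1", "a"), ("c", "0", "c")]
print(fsm_language_is_empty(arcs, "a", {"c"}))  # unreachable accept state
print(fsm_language_is_empty(arcs, "a", {"b"}))  # reachable accept state
```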

Context Free Grammar

• {<B, w> | B is a CFG and generates w} is

decidable.

• Informal proof:

– Generate the CFG in Chomsky normal form that is

equivalent to B.

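Claim: If the length of w is n >= 1, every derivation of w in the Chomsky normal form grammar has exactly 2n - 1 steps: n - 1 steps using rules of the form A -> BC and n steps using rules of the form A -> a.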

– Given the limit on the size of the parse tree (the number of derivation steps), CLAIM we can construct a TM that tries each derivation of that length.
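The bounded search can be sketched in Python. This assumes the grammar is already in Chomsky normal form, where a derivation of a string of length n >= 1 takes exactly 2n - 1 steps. The rule encoding (a dict from variable to a list of tuple bodies, with `()` standing for an empty-string rule on the start variable) and the name `cnf_generates` are hypothetical choices for illustration.

```python
def cnf_generates(rules, start, w):
    """Decide whether a CNF grammar generates w by trying every
    leftmost derivation of exactly 2n - 1 steps."""
    n = len(w)
    if n == 0:
        return () in rules.get(start, [])
    frontier = {(start,)}  # sentential forms reachable so far
    for _ in range(2 * n - 1):
        next_frontier = set()
        for form in frontier:
            # Position of the leftmost variable, if any remains.
            i = next((k for k, sym in enumerate(form) if sym in rules), None)
            if i is None:
                continue  # all terminals already, nothing left to expand
            for body in rules[form[i]]:
                if not body:
                    continue  # the empty rule only matters when w is empty
                new_form = form[:i] + body + form[i + 1:]
                if len(new_form) <= n:  # CNF forms never shrink
                    next_frontier.add(new_form)
        frontier = next_frontier
    return tuple(w) in frontier

# Hypothetical CNF grammar for {a^n b^n | n >= 1}.
AN_BN = {
    "S": [("A", "B"), ("A", "T")],
    "T": [("S", "B")],
    "A": [("a",)],
    "B": [("b",)],
}

print(cnf_generates(AN_BN, "S", "aabb"), cnf_generates(AN_BN, "S", "abab"))
```

The loop runs a fixed number of rounds over a finite set of forms, so the procedure always halts. It is exponential in the worst case, which is acceptable for a decidability argument but not for a practical parser.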

Parse tree

[Figure: parse tree with root S, internal variables A, A1, A2, B, B1, B2, ..., and leaves w1 w2 w3 ... wn; the derivation has 2n - 1 steps]

CFG case

• The fact that the number of derivation steps is bounded means that the TM knows when it has checked enough possibilities and can reject if there isn't a parse tree!!!

Preview: Halting problem

• Take two inputs, an encoding of a TM M and an input w for that TM, and simulate (run) M on w.

• Will show that

{<M,w>|M is a TM and w is a string and M

accepts w} is

– Turing-recognizable but not

– Turing-decidable
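The "simulate / run" step can be sketched in Python as a small single-tape TM simulator. The transition-table encoding, the state names, and the sample machine `LOOPY` are assumptions for illustration. The `max_steps` budget is not part of a real recognizer; it is added here only so the demonstration can stop on an input where the simulated machine runs forever.

```python
def run_tm(delta, start, accept, reject, w, blank="_", max_steps=None):
    """Simulate a single-tape TM on w.

    delta maps (state, symbol) -> (new_state, written_symbol, "L" or "R").
    Returns True on accept, False on reject, and None if the optional
    step budget runs out first. Without a budget the call may never return.
    """
    tape = dict(enumerate(w))
    state, head, steps = start, 0, 0
    while state not in (accept, reject):
        if max_steps is not None and steps >= max_steps:
            return None  # gave up: this tells us nothing about M and w
        symbol = tape.get(head, blank)
        if (state, symbol) not in delta:
            return False  # assumption: a missing transition means reject
        state, tape[head], move = delta[(state, symbol)]
        head = max(0, head + (1 if move == "R" else -1))
        steps += 1
    return state == accept

# Hypothetical machine: accepts strings that start with 1,
# and moves right forever on strings that start with 0.
LOOPY = {
    ("q0", "1"): ("qa", "1", "R"),
    ("q0", "0"): ("spin", "0", "R"),
    ("spin", "0"): ("spin", "0", "R"),
    ("spin", "1"): ("spin", "1", "R"),
    ("spin", "_"): ("spin", "_", "R"),
}

print(run_tm(LOOPY, "q0", "qa", "qr", "10"))
print(run_tm(LOOPY, "q0", "qa", "qr", "01", max_steps=1000))
```

With `max_steps=None` the simulator accepts whenever M accepts w, which is what recognizing the language requires. It cannot serve as a decider, because no fixed budget separates "still running" from "never halts".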