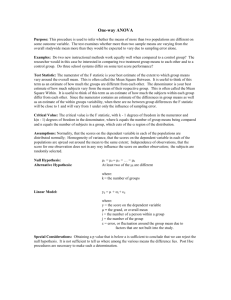

Analysis of Variance (ANOVA)

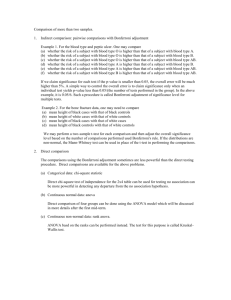

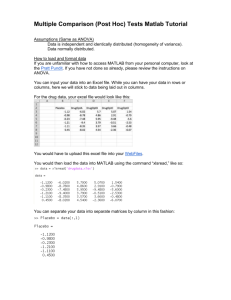

Analysis of Variance: Mean Comparison Procedures

Learning Objectives

- Understand what to do when we have a significant ANOVA
- Know the difference between
  - Planned comparisons
  - Post-hoc comparisons
- Be aware of different approaches to controlling Type I error
- Be able to calculate Tukey’s HSD
- Be able to calculate a linear contrast
- Understand the issue of controlling Type I error versus power
- Deeper understanding of what F tells us

What does F tell us?

- If F is significant…
  - Will at least two means be significantly different?
- What is F testing, exactly?
- Do we always have to do an F test prior to conducting group comparisons?
  - Why or why not?

When F is significant…

- Typically want to know which groups are significantly different
- May have planned some comparisons in advance
  - e.g., vs. control group
- May have more complicated comparisons in mind
  - e.g., do groups 1 & 2 combined differ significantly from groups 3 & 4?
- May wish to make comparisons we didn’t plan
- Can potentially make lots of comparisons
  - Consider a 1-way with 6 groups!
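To make the "lots of comparisons" point concrete, here is a minimal Python sketch that counts the pairwise comparisons among k groups and estimates how quickly the chance of at least one Type I error grows. It assumes every comparison is tested at α = .05 and that the comparisons are independent, which is a simplification (pairwise comparisons that share a group are not strictly independent). The function names are illustrative only.

```python
from math import comb


def pairwise_count(k: int) -> int:
    """Number of distinct pairwise comparisons among k group means."""
    return comb(k, 2)


def familywise_error(c: int, alpha: float = 0.05) -> float:
    """Chance of at least one Type I error across c comparisons.

    Assumes the c comparisons are independent (a simplification).
    """
    return 1 - (1 - alpha) ** c


if __name__ == "__main__":
    for k in (3, 6):
        c = pairwise_count(k)
        print(f"k={k}: {c} pairwise comparisons, "
              f"familywise error ~ {familywise_error(c):.3f}")
```

Under these assumptions, a 1-way design with 6 groups has 15 pairwise comparisons, and the chance of at least one false positive is a little over one half.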
Decisions in ANOVA

True world status of hypothesis (columns) against our decision (rows):

| Our decision | H0 true | H0 false |
|---|---|---|
| Reject H0 | Type I error, p = α | Correct decision, p = 1 – β |
| Don’t reject H0 | Correct decision, p = 1 – α | Type II error, p = β |

Concerns over Type I Error

- How it becomes inflated in post-hoc comparisons
- Familywise / experimentwise errors
- Type I error can have undesirable consequences
  - Wasted additional research effort
  - Monetary costs in implementing a program that doesn’t work
  - Human costs in providing ineffective treatment
  - Other…
- Type II error also has undesirable consequences
  - Closing down potentially fruitful lines of research
  - Loss costs, for not implementing a program that does work

Beginning at the end, posthocs…

- Fisher’s solution: a no-nonsense approach
- The Bonferroni solution: keeping it simple
- The Dunn–Šidák solution: one-upping Bonferroni
- Scheffé’s solution: keeping it very tight
- Tukey’s solution: keeping a balance
- Newman-Keuls solution: keeping it clever
- Dunnett’s test: what’s up with the control group?

Recall our ongoing example…

One-Way Analysis of Variance Example (n = 8, k = 3)

| Subject # | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | T | ΣX² | n | SS | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Method 1 | 3 | 5 | 2 | 4 | 8 | 4 | 3 | 9 | 38 | 224 | 8 | 43.5 | 4.75 |
| Method 2 | 4 | 4 | 3 | 8 | 7 | 4 | 2 | 5 | 37 | 199 | 8 | 27.88 | 4.63 |
| Method 3 | 6 | 7 | 8 | 6 | 7 | 9 | 10 | 9 | 62 | 496 | 8 | 15.5 | 7.75 |
| Total | | | | | | | | | 137 | 919 | 24 | 136.96 | 5.71 |

Source Table

| Source | SS | df | MS | F | p |
|---|---|---|---|---|---|
| SSmethod | 50.08 | 2 | 25.04 | 6.05 | < 0.01 |
| SSwithin | 86.88 | 21 | 4.14 | | |
| SStotal | 136.96 | 23 | 5.95 | | |

Example taken from Winer et al. (1991), p. 75.

The Bonferroni Inequality

- The multiplication rule in probability
  - For any two independent events, A and B, the probability that A and B will both occur is $P(A \,\&\, B) = P(A) \times P(B)$
- Applying this to group comparisons…
  - The probability of a Type I error = .05
  - Therefore the probability of a correct decision = .95
  - The probability of making three correct decisions = $.95^3 \approx .857$
- Bonferroni’s solution: $\alpha / c$

Bonferroni t′ / Dunn’s Test

- Appropriate when making a few comparisons
- Attributes
  - Excellent control of Type I error
  - Lower power, especially with high c
  - Can be used for comparison of groups, or more complex comparisons
    - Linear contrasts
- The Dunn-Šidák test is a refined version of the Bonferroni test in which alpha is controlled by taking into account the more precise estimate of Type I error:

$$\alpha_{ind} = 1 - \sqrt[c]{1 - \alpha_{overall}}$$

Tukey’s approach

1. Determine r: the number of groups (3 in our teaching method example)
2. Look up q from table (B.2) using r and $df_W$ (3 and 21, rounding down to 20, gives q = 3.578)
3. Determine HSD:

$$HSD = q\sqrt{\frac{MS_W}{n}} = 3.578\sqrt{\frac{4.14}{8}} = 2.574$$

4. Check for significant differences

| | M1 (4.75) | M2 (4.63) | M3 (7.75) |
|---|---|---|---|
| M1 | | 0.12 | -3.00 |
| M2 | | | -3.12 |
| M3 | | | |

Student Newman-Keuls

Example from a One-Way ANOVA where k = 7, $MS_W$ = 0.80, $df_W$ = 28, n = 5. Differences between the ordered means:

| Mean | Group | C | A | B | D | G | E |
|---|---|---|---|---|---|---|---|
| 2.0 | C | | | | | | |
| 2.4 | A | 0.4 | | | | | |
| 2.6 | B | 0.6 | 0.2 | | | | |
| 3.6 | D | 1.6 | 1.2 | 1.0 | | | |
| 4.4 | G | 2.4 | 2.0 | 1.8 | 0.8 | | |
| 4.8 | E | 2.8 | 2.4 | 2.2 | 1.2 | 0.4 | |
| 5.0 | F | 3.0 | 2.6 | 2.4 | 1.4 | 0.6 | 0.2 |

Critical interval for each range r, for example for r = 7: $4.54\sqrt{.80/5} = 1.82$

| r | 7 | 6 | 5 | 4 | 3 | 2 |
|---|---|---|---|---|---|---|
| Interval | 1.82 | 1.75 | 1.67 | 1.56 | 1.41 | 1.17 |

S-N-K cont’d

Differences marked significant (*):

| | C | A | B | D |
|---|---|---|---|---|
| G | * | * | * | |
| E | * | * | * | |
| F | * | * | * | |
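Returning to the three-method teaching example, the Tukey HSD steps can be sketched in Python as follows. This is a minimal sketch, not a general implementation: it assumes equal group sizes, takes the critical value q = 3.578 directly from the text's table lookup rather than computing it, and the names `METHODS`, `Q_CRIT`, and `tukey_hsd` are illustrative. Because it uses the unrounded $MS_W$, the HSD comes out marginally different from the hand-calculated 2.574.

```python
from itertools import combinations
from math import sqrt

# Scores from the one-way example (8 subjects per teaching method).
METHODS = {
    "M1": [3, 5, 2, 4, 8, 4, 3, 9],
    "M2": [4, 4, 3, 8, 7, 4, 2, 5],
    "M3": [6, 7, 8, 6, 7, 9, 10, 9],
}
Q_CRIT = 3.578  # table value quoted in the text for r = 3, df = 20


def mean(xs):
    return sum(xs) / len(xs)


def ms_within(groups):
    """Pooled within-group mean square (equal or unequal n)."""
    ss = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df = sum(len(g) - 1 for g in groups)
    return ss / df


def tukey_hsd(groups, q):
    """HSD = q * sqrt(MSW / n); assumes every group has the same n."""
    n = len(groups[0])
    return q * sqrt(ms_within(groups) / n)


hsd = tukey_hsd(list(METHODS.values()), Q_CRIT)

# Map each pair of methods to (mean difference, exceeds HSD?).
results = {}
for a, b in combinations(METHODS, 2):
    diff = mean(METHODS[a]) - mean(METHODS[b])
    results[(a, b)] = (diff, abs(diff) > hsd)

if __name__ == "__main__":
    print(f"HSD = {hsd:.3f}")
    for (a, b), (diff, sig) in results.items():
        print(f"{a} - {b}: {diff:+.3f} {'*' if sig else ''}")
```

With these inputs, Method 3 differs from both Method 1 and Method 2 by more than the HSD, while Methods 1 and 2 do not differ from each other.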
And, finally…

C A B D G E F

- Homogeneous subsets of means…
- Problems with S-N-K and alternatives

Post-Hoc Comparison Approaches

- The Bonferroni Inequality
- Flexible Approaches for Complex Contrasts
- Simultaneous Interval Approach
- Taking magnitude into account
- If you have a control group

What if we were clever enough to plan our comparisons?

- Linear Contrasts
- Simple Comparisons
- To correct or not correct…

Orthogonal Contrasts

- What are they?
- How many are there?
- How do I know if my contrasts are orthogonal?
- When would I use one?
- What if my contrasts aren’t orthogonal?

Simple Example

Three treatment levels. We wish to compare A & B with C.

1. State the contrast. In words, A & B combined aren’t significantly different from C:

$$C_1: \frac{A + B}{2} = C$$

2. Next we need to derive contrast coefficients, so we need coefficients that sum to zero. First, multiply both sides by 2:

$$C_1: A + B = 2C$$

3. Then, subtract 2C from both sides:

$$C_1: A + B - 2C = 0$$

A & B have an implied coefficient of “1”.

SS for C1

With $\bar{T}_j$ the mean of treatment j and $C_j$ its contrast coefficient:

$$SS_C = \frac{\left(\sum C_j \bar{T}_j\right)^2}{\sum C_j^2 / n}$$

$$SS_{C_1} = \frac{[(1 \times 4.75) + (1 \times 4.62) + (-2 \times 7.75)]^2}{(1^2 + 1^2 + 2^2)/8} = \frac{6.13^2}{0.75} = 50.103$$

- All contrasts have 1 df
- Thus, SS = MS
- Error term is the common $MS_W$, calculated before

Why? Imagine another outcome…

| Subject # | Method 1 | Method 2 | Method 3 | Total |
|---|---|---|---|---|
| 1 | 3 | 4 | 6 | |
| 2 | 5 | 4 | 7 | |
| 3 | 2 | 3 | 5 | |
| 4 | 4 | 8 | 6 | |
| 5 | 8 | 7 | 7 | |
| 6 | 4 | 4 | 9 | |
| 7 | 3 | 2 | 6 | |
| 8 | 9 | 5 | 7 | |
| T | 38 | 37 | 53 | 128 |
| ΣX² | 224 | 199 | 361 | 784 |
| n | 8 | 8 | 8 | 24 |
| SS | 43.50 | 27.88 | 9.88 | 101.33 |
| Mean | 4.75 | 4.63 | 6.63 | 5.33 |

- n = 8, k = 3
- $MS_W$ = 3.87
- Now, F = 2.60, p > .05

Other types of contrasts

- Special
- Helmert
- Difference
- Repeated
- Deviation
- Simple
- Trend (Polynomial)

Final thoughts/questions

- Do we need to do an ANOVA / F test?
- What is your strategy for determining group differences?
- Which methods are best suited to your strategy / questions?
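As a check on the linear contrast from the simple example (A & B vs. C, coefficients 1, 1, -2), the following Python sketch computes the contrast sum of squares and its F ratio against $MS_W$, for both the original data and the "another outcome" data. It assumes equal group sizes and works from unrounded means, so the contrast SS for the original data comes out near 50.02 rather than the hand-calculated 50.103, which used the rounded mean 4.62. The function and variable names are illustrative only.

```python
def mean(xs):
    return sum(xs) / len(xs)


def ms_within(groups):
    """Pooled within-group mean square."""
    ss = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df = sum(len(g) - 1 for g in groups)
    return ss / df


def contrast_f(groups, coeffs):
    """Return (SS_contrast, MS_within, F) for a linear contrast.

    Assumes equal n per group and coefficients that sum to zero.
    A contrast has 1 df, so SS_contrast is also its mean square.
    """
    assert sum(coeffs) == 0, "contrast coefficients must sum to zero"
    n = len(groups[0])
    psi = sum(c * mean(g) for c, g in zip(coeffs, groups))
    ss_c = psi ** 2 / (sum(c * c for c in coeffs) / n)
    ms_w = ms_within(groups)
    return ss_c, ms_w, ss_c / ms_w


method1 = [3, 5, 2, 4, 8, 4, 3, 9]
method2 = [4, 4, 3, 8, 7, 4, 2, 5]
method3_original = [6, 7, 8, 6, 7, 9, 10, 9]
method3_alternative = [6, 7, 5, 6, 7, 9, 6, 7]

COEFFS = (1, 1, -2)  # Methods 1 & 2 combined vs. Method 3

original = contrast_f([method1, method2, method3_original], COEFFS)
alternative = contrast_f([method1, method2, method3_alternative], COEFFS)

if __name__ == "__main__":
    for label, (ss_c, ms_w, f) in (("original", original),
                                   ("alternative", alternative)):
        print(f"{label}: SS_C = {ss_c:.2f}, MSW = {ms_w:.2f}, F = {f:.2f}")
```

Under these assumptions the contrast F is about 12.1 for the original data and about 5.2 for the alternative outcome, each on 1 and 21 df. The .05 critical value of F(1, 21) is roughly 4.32, so the second figure is worth setting beside the omnibus F = 2.60 reported for that outcome.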