Business Intelligence, Session 8

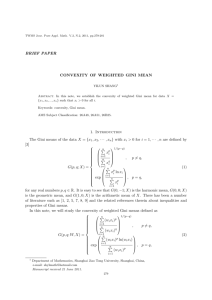

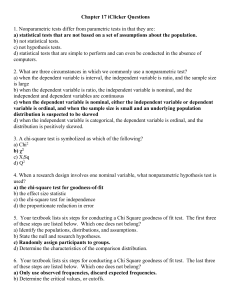

Business Intelligence and Decision Modeling
Week 9: Customer Profiling, Decision Trees (Part 2), CHAID and CRT

CHAID or CART

CHAID (Chi-Square Automatic Interaction Detector)
- Based on the chi-square test
- All variables discretized
- Dependent variable: nominal

CART (Classification and Regression Tree)
- Variables can be discrete or continuous
- Based on Gini or the F-test
- Dependent variable: nominal or continuous

Use of Decision Trees
- Classify observations from a binary or nominal target variable: segmentation
- Predictive response analysis from a numerical target variable: behaviour
- Decision support rules: processing

Decision Tree Example: dmdata.sav

Underlying Theory: $\chi^2$

CHAID Algorithm: Selecting Variables
- Example: Regions (4), Gender (3, including Missing), Age (6, including Missing)
- For each variable, collapse categories to maximize the chi-square test of independence. Ex: Region (N, S, E, W, *) becomes (WSE, N*)
- Select the most significant variable
- Go to next branch … and next level
- Stop growing if … estimated $\chi^2$ < theoretical $\chi^2$

CART (Nominal Target)
- Nominal targets: Gini (impurity reduction or entropy)
- Squared probability of node membership
- Gini = 0 when targets are perfectly classified
- Gini index: $\text{Gini} = 1 - \sum_i p_i^2$
- Example: with probabilities Bus = 0.4, Car = 0.3, Train = 0.3, $\text{Gini} = 1 - (0.4^2 + 0.3^2 + 0.3^2) = 0.660$

CART (Metric Target)
- Continuous variables: variance reduction (F-test)

Comparative Advantages (From Wikipedia)
- Simple to understand and interpret
- Requires little data preparation
- Able to handle both numerical and categorical data
- Uses a white box model easily explained by Boolean logic
- Possible to validate a model using statistical tests
- Robust
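The two split criteria named in these slides, the Gini index for CART and the chi-square statistic for CHAID, can be checked numerically with a minimal Python sketch. The function names `gini_index` and `chi_square_statistic` are illustrative and not part of the course material, and the contingency counts are hypothetical; only the Bus/Car/Train probabilities come from the slides. The chi-square function assumes every row and column total is positive.

```python
from typing import Sequence


def gini_index(probabilities: Sequence[float]) -> float:
    """Gini impurity: 1 minus the sum of squared class probabilities."""
    return 1.0 - sum(p * p for p in probabilities)


def chi_square_statistic(table: Sequence[Sequence[float]]) -> float:
    """Pearson chi-square statistic for a contingency table of counts.

    Assumes every row total and column total is positive.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat


# Slide example: Bus = 0.4, Car = 0.3, Train = 0.3
print(round(gini_index([0.4, 0.3, 0.3]), 3))

# Hypothetical counts (not from the slides):
# rows = regions N, S, E, W; columns = responder, non-responder
counts = [[30, 70], [45, 55], [20, 80], [25, 75]]
print(round(chi_square_statistic(counts), 2))
```

In a CHAID-style merge step, the same statistic would be recomputed after collapsing rows (for example, summing the W, S and E rows into one), and the grouping with the most significant result would be kept. Comparing the statistic against a theoretical critical value requires the chi-square distribution, which is left out of this sketch.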