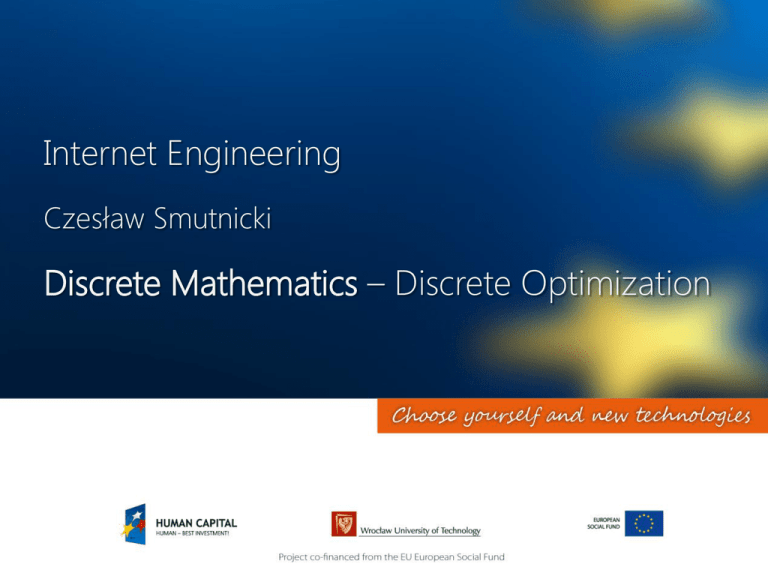

Internet Engineering

Czesław Smutnicki

Discrete Mathematics – Discrete Optimization

CONTENTS

• Numerical troubles

• Packages

• Tools

• Useful methods

OPTIMIZATION TROUBLES.

NICE BEGINNINGS OF BAD NEWS

DE JONG TEST FUNCTION

FIND EXTREMES OF THE FUNCTION

[Figure: 2D and 1D plots of the function]

OPTIMIZATION TROUBLES. MULTIPLE EXTREMES

GRIEWANGK TEST FUNCTION

FIND EXTREMES OF THE FUNCTION

2D
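The two test functions named so far can be written down in a few lines. This is a minimal sketch, assuming that "De Jong" refers to De Jong's first function (the sphere model) and that "Griewangk" is the function usually spelled Griewank; the function names `de_jong` and `griewank` are chosen here for illustration only.

```python
import math


def de_jong(x):
    """De Jong's first function (sphere): one minimum, value 0 at the origin."""
    return sum(xi * xi for xi in x)


def griewank(x):
    """Griewank function: many regularly spaced local minima,
    global minimum 0 at the origin."""
    quadratic = sum(xi * xi for xi in x) / 4000.0
    # Product of cosines creates the multiple extremes.
    oscillation = math.prod(
        math.cos(xi / math.sqrt(i)) for i, xi in enumerate(x, start=1)
    )
    return 1.0 + quadratic - oscillation
```

Both functions accept a point of any dimension as a list of floats, so the same code covers the 1D and 2D cases.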

OPTIMIZATION TROUBLES.

EXPONENTIAL NUMBER OF EXTREMES

LANGERMANN TEST FUNCTION

FIND EXTREMES OF THE FUNCTION

2D

OPTIMIZATION TROUBLES.

DECEPTION POINTS

FOX HOLES TEST FUNCTION

FIND EXTREMES OF THE FUNCTION

2D

OPTIMIZATION TROUBLES.

TIME OF CALCULATIONS/COST OF CALCULATIONS

CURSE OF DIMENSIONALITY

NP-HARDNESS

LAB INSTANCE: 5..20 VARIABLES

INSTANCE FROM PRACTICE: NONLINEAR FUNCTION OF 1980 VARIABLES !!!

Please wait. Calculations will last 3 289 years !! ?

OPTIMIZATION TROUBLES.

SIZE OF THE SOLUTION SPACE

The smallest practical instance of the job-shop scheduling problem, FT10 (which waited 25 years to be solved), consists of 10 jobs, 10 machines and 100 operations; its solution space contains $10^{48}$ discrete feasible solutions; each solution has dimension 90; the largest benchmarks currently in use have dimension 1980.

SOLUTION SPACE: dimension and size

If a single solution is printed as a dot of 0.01 x 0.01 mm, the solution space of FT10 corresponds to a printed area of $10^{32}$ km² (Jupiter has $10^{10}$ km²).
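The printed-area figure can be checked with integer arithmetic. The sketch below assumes only the numbers quoted on the slide ($10^{48}$ solutions, one dot of 0.01 x 0.01 mm per solution, Jupiter taken as $10^{10}$ km²); the function name `printed_area_km2` is hypothetical.

```python
def printed_area_km2(num_solutions, dots_per_mm=100):
    """Area in km^2 covered when every solution is printed as one dot.

    A dot of 0.01 mm side means 100 dots per mm, i.e. 10^4 dots per mm^2.
    """
    dots_per_mm2 = dots_per_mm ** 2
    area_mm2 = num_solutions // dots_per_mm2
    mm2_per_km2 = 10 ** 12  # 1 km = 10^6 mm, so 1 km^2 = 10^12 mm^2
    return area_mm2 // mm2_per_km2


area = printed_area_km2(10 ** 48)
jupiters = area // 10 ** 10  # how many Jupiter-sized surfaces are needed
```

Under these assumptions `area` equals $10^{32}$ km², which is $10^{22}$ times the Jupiter figure used on the slide.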

OPTIMIZATION TROUBLES.

DISTRIBUTION OF THE GOAL FUNCTION VALUES

Example: job-shop scheduling problem; relative Hamming distances DIST between a feasible solution and the "best" solution are distributed normally in the solution space.

Goal function values are distributed normally in the solution space.

[Figures: frequency [%] against DIST [%] (series ALL and FEAS) and frequency [%] against RE [%] (series FEAS); labels RANDOM BEST and BEST]

OPTIMIZATION TROUBLES. FUR

Example: job-shop scheduling problem

SIMULATION OF GOAL FUNCTION VALUES TOWARDS CENTER OF THE SPACE

[Figure: RE [%] against DIST [%]]

OPTIMIZATION TROUBLES. ZOOM IN ON THE FUR

Example: job-shop scheduling problem

SIMULATION OF GOAL FUNCTION VALUES TOWARDS CENTER (ZOOM)

[Figure: RE [%] against DIST [%], zoomed in]

OPTIMIZATION TROUBLES.

STONE FOREST

Transformation of a sample of random solutions from the 90D space into 2D space.

PROPERTIES OF SOLUTION SPACE LANDSCAPE

BIG VALLEY – positive correlation between the goal function value and the distance to the optimal solution (or the best solution found); in the big valley the concentration of local extremes is high. The valley is usually small relative to the size of the whole solution space.

RUGGEDNESS – a measure of the diversity of goal function values among related (neighboring) solutions; ruggedness at a point is greater when the goal function values in its neighborhood are more diverse; less differentiation of the goal function values means a flat landscape.

THE NUMBER OF LOCAL EXTREMES (peaks) in relation to the size of the solution space

DISTRIBUTION OF LOCAL EXTREMES (experimental)

OTHER MEASURES

autocorrelation function, correlation function between random trajectories, statistically isotropic landscape, fractal landscape, correlation between genes (epistasis), fitness distance correlation

CURRENT STATE IN DISCRETE OPTIMIZATION

• Packages and solvers (LINDO, CPLEX, ILOG, …)

• Exact methods (B&B, DP, ILP, BLP, MILP, SUB, …)

• Approximate methods (…): heuristics, metaheuristics, meta2heuristics

• Quality measures of approximation (absolute, relative, …)

• Analysis of quality measure (worst-case, probabilistic, experimental)

• Calculation cost (pessimistic, average, experimentally tested)

• Approximation schemes (AS, polynomial-time PTAS, fully polynomial-time FPTAS)

• Inapproximability

• Useful experimental methods (…)

• "No free lunch" theorem

• Public benchmarks

• Parallel and distributed methods: new class of algorithms

OPTIMIZATION HISTORY/TRENDS

• Priority rules

• Theory of NP-completeness

• Polynomial-time algorithms

• Exact methods (B&B, DP, ILP, BLP, …)

• Approximation methods: quality analysis

• Approximation schemes (AS, PTAS, FPTAS, …)

• Inapproximability theory

• Competitive analysis (on-line algorithms)

• Metaheuristics

• Theoretical foundations of metaheuristics

• Parallel metaheuristics

• Theoretical foundations of parallel metaheuristics

APPROXIMATE METHODS

• constructive/improvement

• priority rules

• random search

• greedy randomized adaptive

• simulated annealing

• simulated jumping

• estimation of distribution

• tabu search

• adaptive memory search

• variable neighborhood search

• evolutionary, genetic search

• differential evolution

• biochemistry methods

• immunological methods

• ant colony optimization

• particle swarm optimization

• neural networks

• threshold accepting

• path search

• beam search

• scatter search

• harmony search

• path relinking

• adaptive search

• constraint satisfaction

• descending, hill climbing

• multi-agent

• memetic search

• bee search

• intelligent water drops

* * * * *

EVOLUTION: DARWIN’S VIEW.

GENETIC ALGORITHMS

GOAL OF THE NATURE? optimization, fitness, continuity preservation, follow-up changes

SUCCESSION: genetic material carries data for body construction

EVOLUTION: crossing over, mutation

SELECTION: soft/hard

individual=solution=genotype≠phenotype

individual, gene, chromosome, trait

population (structure, size, composition)

crossing-over (what is the key of progress?)

mutation (insurance?)

sex ?

democracy/elitism

theoretical properties

EVOLUTION: DARWIN’S VIEW.

COMPONENTS

GENOTYPE

CHROMOSOME

MORE …

SOLUTION

FEASIBILITY

REPAIRING

GENE EXPRESSION

PHENOTYPE

CODING

CONTROL OF POPULATION DYNAMICS

SELECTION SCHEME

MATING POOL

LETHALITY

MUTATION

BIG VALLEY PHENOMENON

INTENSIFICATION

CROSSING OVER

OPERATOR MSXF

EVOLUTION: DARWIN’S VIEW.

COPYING FROM THE NATURE

• control of population dynamics/preserving diversity

• parent matching strategies (sharing function to prevent mating of too closely related parents; incest prevention by using the Hamming distance to evaluate genotype similarity)

• structures of the population (migration, diffusion models)

• social behavior patterns (satisfied, glad, disappointed -> cloning, crossing-over, mutation)

• adaptive mutation

• gene expression

• distributed populations

• …

EVOLUTION: DARWIN’S VIEW.

MULTISTEP FUSION MSXF

SOURCE SOLUTION (PARENT)

NEIGHBORHOOD OF THE SOURCE

DISTANCE TO TARGET

TRAJECTORY = GOAL-ORIENTED PATH

TARGET SOLUTION (PARENT)

TARGET NEIGHBORHOOD

SUCCESSIVE NEIGHBOURHOODS SEARCHED IN THE STOCHASTIC WAY DEPENDING ON THE DISTANCE TO TARGET

EVOLUTION: LAMARCK/BALDWIN’S VIEW.

MEMETIC ALGORITHMS

GOAL OF THE NATURE? optimization, fitness, continuity preservation, follow-up changes, transfer of knowledge to successors

SUCCESSION: genetic material carries data for body building plus acquired knowledge

EVOLUTION: crossing over, mutation, learning

SELECTION: soft/hard

individual=solution=memotype≠phenotype

• individual, meme, chromosome, trait

• population (structure, size, composition, learning)

• crossing-over, mutation, learning

• theoretical properties ?

DIFFERENTIAL EVOLUTION

Differential evolution (DE) is a subclass of genetic search (GS) methods. The democracy of creating successors by crossover and mutation in GS is replaced in DE by directed changes used to explore the solution space. DE starts from a random population of individuals (solutions). In each iteration something similar to mutation and crossover is performed, but in a completely different way than in GS.

For each solution x from the population, an offspring y is generated as a trial solution that extends a randomly selected solution a along the direction given by two solutions b and c (an analogy to parents), also selected at random. Generation is based on a linear combination with some random parameters:

$y_i = a_i + R\,(b_i - c_i), \quad R \in [0, 2]$

A separate mechanism prevents generating an offspring by simply copying the parent. A significant role is played by mutation, which, owing to its specific strategy, is self-adaptive and goal-oriented with respect to direction, scale and range. If the trial solution is better, it is accepted; otherwise it is rejected. Iterations are repeated until an a priori fixed number of iterations has been reached or stagnation has been detected. The method has some specific tuning parameters (differential weight, crossover probability, …) selected experimentally.
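The scheme can be sketched for a continuous minimization problem as follows. This is an illustrative sketch, not the lecture's implementation: it assumes a fixed differential weight `weight` in place of a random R, binomial crossover with probability `crossover_prob`, and box constraints `bounds`; all names and default values are hypothetical.

```python
import random


def differential_evolution(f, bounds, pop_size=20, iterations=200,
                           weight=0.8, crossover_prob=0.9, seed=0):
    """Minimize f over the box `bounds` (list of (low, high) pairs)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [f(x) for x in pop]
    for _ in range(iterations):
        for i in range(pop_size):
            # a, b, c: three distinct solutions, all different from x = pop[i].
            others = [j for j in range(pop_size) if j != i]
            a, b, c = (pop[j] for j in rng.sample(others, 3))
            # One coordinate is always taken from a + weight * (b - c);
            # this guards against y being a plain copy of x.
            forced = rng.randrange(dim)
            y = []
            for d in range(dim):
                if d == forced or rng.random() < crossover_prob:
                    lo, hi = bounds[d]
                    y.append(min(hi, max(lo, a[d] + weight * (b[d] - c[d]))))
                else:
                    y.append(pop[i][d])
            fy = f(y)
            if fy < cost[i]:  # accept the trial solution only if it is better
                pop[i], cost[i] = y, fy
    best = min(range(pop_size), key=cost.__getitem__)
    return pop[best], cost[best]
```

For example, `differential_evolution(lambda x: sum(v * v for v in x), [(-5.0, 5.0)] * 3)` searches for the minimum of the sphere function in three dimensions.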

ARTIFICIAL IMMUNE SYSTEM

fitness

LIBRARY OF ANTIBODIES

recombination

antibody = solution

antigen = problem or instance

Antigen (invasive protein) represents a new problem to solve, or a new (or temporary) set of constraints for the solution of an already solved problem. The variety of possible antigens is huge, frequently infinite. Moreover, the sequence of presented antigens is not known a priori.

Antibody (a protein blocking the antigen, directed against the intruder) corresponds to an algorithm which produces a solution to the problem. The variety of antibodies is usually small; however, mechanisms exist for their aggregation and recombination in order to produce new antibodies with various properties. Patterns of antibodies are collected in the library, which constitutes the memory of the system.

Matching (fitness) is the selection of an antibody for the antigen. Matching is ideal if the antibody allows us to generate a solution of the problem which is globally optimal under the given constraints. Otherwise, a certain defined measure is used to evaluate the quality of the matching. Bad matching forces the system to seek new types of antibodies, usually by using evolution.

ANT SEARCH.

COOPERATIVE SWARMS

ANT

• seeks food

• leaves pheromone on the trail

• moves at random, but prefers pheromone trails

• pheromone density decreases in time

[Figure: ant model with control system, pheromone detectors, pheromone generator and moving drive]

ANT SEARCH.

SEEKING FOOD. DISCOVERING THE PATH

[Figure: successive stages of ants discovering a path on a graph with nodes A, B, C, D, E, H]

ANT SEARCH.

PHEROMONE DISTRIBUTION

$\Delta\tau_{ij} = \sum_{k=1}^{m} \Delta\tau_{ij}^{k}$

$\tau_{ij}(t+n) = \rho\,\tau_{ij}(t) + \Delta\tau_{ij}$
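A pheromone update in the style of the classical Ant System can be sketched as below: the trail on every edge is multiplied by a persistence factor and increased by the sum of the deposits of all m ants. The data layout (dictionaries keyed by edge) and the names `update_pheromone` and `rho` are assumptions made for illustration.

```python
def update_pheromone(tau, deposits, rho=0.5):
    """Return the new pheromone levels after one cycle.

    tau      : dict mapping edge (i, j) -> current pheromone level
    deposits : list with one dict per ant, mapping edge -> amount left by it
    rho      : persistence factor; (1 - rho) is the evaporated fraction
    """
    new_tau = {}
    for edge, level in tau.items():
        # Total amount deposited on this edge by all ants in the cycle.
        delta = sum(ant.get(edge, 0.0) for ant in deposits)
        new_tau[edge] = rho * level + delta
    return new_tau


tau = {("A", "B"): 1.0, ("B", "C"): 2.0}
deposits = [{("A", "B"): 0.5}, {("A", "B"): 0.25, ("B", "C"): 1.0}]
tau = update_pheromone(tau, deposits)
```

Edges that no ant used only evaporate, which is how rarely chosen trails fade over time.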

PARTICLE SWARM OPTIMIZATION

• a swarm is a large set of individuals (particles) moving together

• each individual follows a search trajectory in the solution space

• trajectories are distributed, correlated and take into account the experience of individuals

• the location of an individual (solution) is described by the location vector x; changes of location are described by the velocity vector v

• the velocity equation contains an inertia term and two directional terms weighted by some random parameters

• the location of an individual depends on: its recent (previous) position, its experience (best location up to now) and the location of the leader of the swarm

• the best solution found so far defines the most promising direction of the search
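The velocity equation described above (an inertia term plus two randomly weighted directional terms) can be sketched as follows. The coefficient values `inertia`, `c1`, `c2` and all function and variable names are assumptions chosen for illustration, not values given in the lecture.

```python
import random


def pso(f, bounds, swarm_size=15, iterations=100,
        inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize f; returns the leader's location and its goal function value."""
    rng = random.Random(seed)
    dim = len(bounds)
    x = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(swarm_size)]
    v = [[0.0] * dim for _ in range(swarm_size)]
    best_x = [xi[:] for xi in x]          # experience of each individual
    best_f = [f(xi) for xi in x]
    g = min(range(swarm_size), key=best_f.__getitem__)
    leader, leader_f = best_x[g][:], best_f[g]
    for _ in range(iterations):
        for i in range(swarm_size):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (inertia * v[i][d]                      # inertia
                           + c1 * r1 * (best_x[i][d] - x[i][d])  # own experience
                           + c2 * r2 * (leader[d] - x[i][d]))    # swarm leader
                x[i][d] += v[i][d]
            fx = f(x[i])
            if fx < best_f[i]:
                best_x[i], best_f[i] = x[i][:], fx
                if fx < leader_f:
                    leader, leader_f = x[i][:], fx
    return leader, leader_f
```

Calling `pso(lambda p: sum(t * t for t in p), [(-5.0, 5.0)] * 2)` runs the swarm on a two-dimensional sphere function.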

BEE SEARCH

waggle dance = distribution of knowledge

bee trajectory = solution

hive

bee

flowers & nectar

nectar amount = goal function

visited site = neighborhood

Neighborhood search combined with random search and supported by cooperation (learning).

• the bee swarm collects honey in the hive

• each bee follows a random path (solution) to the search region of nectar

• selected elite bees in the hive perform a "waggle dance" in order to inform other bees about promising search regions (direction, distance, quality)

TABU SEARCH

• human thinking in the process of seeking a solution

• the method "best in local neighborhood"

• search repeated from the best recently found solution

• forbidding the return to solutions already visited, to prevent cycling (wandering around); short-term memory

[Figure: starting solution, its neighbourhood, and successive neighbourhoods explored exhaustively]
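The points above can be sketched in a few lines: every neighbourhood is explored exhaustively, the best non-forbidden neighbour is taken even if it is worse than the current solution, and a short-term memory of recently visited solutions prevents cycling. The names `tabu_search`, `neighbors`, `cost` and `tabu_len` are hypothetical, and storing whole solutions in the tabu list is a simplifying assumption.

```python
from collections import deque


def tabu_search(start, neighbors, cost, iterations=100, tabu_len=7):
    """Return the best solution met on the search trajectory."""
    current = best = start
    tabu = deque([start], maxlen=tabu_len)   # short-term memory
    for _ in range(iterations):
        candidates = [s for s in neighbors(current) if s not in tabu]
        if not candidates:
            break
        # Best in the local neighbourhood, even if worse than `current`.
        current = min(candidates, key=cost)
        tabu.append(current)
        if cost(current) < cost(best):
            best = current
    return best


# Toy landscape on positions 0..6 with a local minimum at position 1.
landscape = [5, 3, 4, 6, 2, 1, 7]
neighbors = lambda p: [q for q in (p - 1, p + 1) if 0 <= q < len(landscape)]
cost = lambda p: landscape[p]
found = tabu_search(0, neighbors, cost)
```

On this toy landscape a plain descent from position 0 stops in the local minimum at position 1, while the tabu list forces the search onward to position 5.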

ADAPTIVE MEMORY SEARCH

• gathering data in the human brain during the process of seeking a solution

• the method "best" in the current neighbourhood (a few solutions relatively close to the current one)

• repetition from the best recently found solution; intensification of the search

• operational (short-term) memory: prohibition of coming back to solutions already visited, to prevent wandering

• tactical memory: sets the direction of the search

• strategic memory: selection of search regions (basins of attraction); diversification

• recency-based and frequency-based memory

INTELLIGENT WATER DROPS

Based on the dynamics of river systems and the actions and reactions that happen among water drops in rivers:

• a drop has some (static) parameters, namely velocity and soil;

• these parameters may change during the lifetime (e.g. iterative cost)

• drops flow from a source to a destination

• a drop starts with some initial velocity and zero soil

• during the flow, the drop removes some soil from the environment

• the speed of the drop increases nonlinearly, in inverse relation to the amount of soil; a path with less soil is faster than a path with more soil

• soil is gathered in the drop and removed from the environment

• a drop statistically prefers the path with less soil

SIMULATED ANNEALING.

COOLING SCHEMES

annealing = slow cooling of a ferromagnetic or antiferromagnetic solid in order to eliminate internal stresses

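Boltzmann (harmonic): $T_{k+1} = \frac{T_k}{1 + \lambda T_k} = \frac{T_0}{1 + (k+1)\lambda T_0}$

Logarithmic (Hajek lemma): $T_k = \frac{c}{\ln(k+2)}$

Geometric: $T_{k+1} = a\,T_k$, i.e. $T_k = a^{k} T_0$

$k = 0, 1, \ldots$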
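The three families of cooling schemes can be compared numerically. The sketch below assumes the common textbook forms: harmonic $T_{k+1} = T_k / (1 + \lambda T_k)$, logarithmic $T_k = c / \ln(k+2)$ and geometric $T_{k+1} = a T_k$; the parameter names `lam`, `c` and `a` are assumptions and may differ from the symbols on the original slide.

```python
import math


def harmonic(t0, lam, steps):
    """T_{k+1} = T_k / (1 + lam * T_k), starting from T_0 = t0."""
    temps, t = [], t0
    for _ in range(steps):
        temps.append(t)
        t = t / (1.0 + lam * t)
    return temps


def logarithmic(c, steps):
    """T_k = c / ln(k + 2) for k = 0, 1, ..."""
    return [c / math.log(k + 2) for k in range(steps)]


def geometric(t0, a, steps):
    """T_{k+1} = a * T_k with 0 < a < 1, starting from T_0 = t0."""
    temps, t = [], t0
    for _ in range(steps):
        temps.append(t)
        t = a * t
    return temps
```

Printing, say, the first 50 values of each list shows the practical difference: the geometric scheme cools fastest, the logarithmic one slowest.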

SIMULATED ANNEALING.

AUTOTUNING

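• Random starting solution

• Sequence of k trial moves in the space

• K steps in each fixed temperature

• Starting temperature adjusted automatically

• Adaptive speed of cooling

$\Delta_{\max} = \max_{x \in Y} \max_{x' \in N(x)} \left( K(x') - K(x) \right)$

$T_0 = -\frac{\Delta_{\max}}{\ln p}, \quad p \approx 0.9$

$T_{k+1} = \frac{T_k}{1 + \lambda_k T_k}, \quad \lambda_k = \frac{\ln(1 + \delta)}{3 \sigma_k}$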
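The automatic choice of the starting temperature and the adaptive cooling step can be sketched as below. Two assumptions are made: the starting temperature is chosen so that the largest deterioration observed in the trial moves would be accepted with probability p, and the cooling speed is controlled by the standard deviation of the goal function values observed at the current temperature (the rule known from the simulated annealing literature). The names `starting_temperature`, `next_temperature` and `delta` are hypothetical.

```python
import math
import statistics


def starting_temperature(deltas, p=0.9):
    """T0 for which exp(-max(deltas) / T0) equals p.

    deltas: goal function increases K(x') - K(x) seen in the trial moves.
    """
    return -max(deltas) / math.log(p)


def next_temperature(t, costs_at_t, delta=0.1):
    """One adaptive cooling step: T / (1 + lam * T).

    lam = ln(1 + delta) / (3 * sigma), where sigma is the standard deviation
    of the goal function values observed at temperature t.
    """
    sigma = statistics.pstdev(costs_at_t)
    if sigma == 0.0:
        return t  # no spread observed; keep the temperature unchanged
    lam = math.log(1.0 + delta) / (3.0 * sigma)
    return t / (1.0 + lam * t)
```

A small spread of values at the current temperature gives a large `lam` and therefore a faster temperature drop; a large spread slows the cooling down.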

SIMULATED JUMPING

annealing by successive heating and cooling in order to eliminate internal stresses of a spin-glass solid (mixed ferromagnetic and antiferromagnetic material); the aim is to penetrate the high barriers that exist between domains

[Formulas: temperature updates T(t+1) from T(t) for the heating and cooling phases, with a random parameter R]

DISCRETE OPTIMIZATION. SOLUTION SPACE PROPERTIES

DISTANCE MEASURES IN THE SOLUTION SPACE

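| Move type | Measure | Recipe | Mean | Variance | Complexity |
|---|---|---|---|---|---|
| A | $D_A(\alpha, \beta)$ | number of inversions in $\alpha^{-1} \circ \beta$ | $\frac{n(n-1)}{4}$ | $\frac{n(n-1)(2n+5)}{72}$ | $O(n^2)$ |
| S | $D_S(\alpha, \beta)$ | n minus the number of cycles in $\alpha^{-1} \circ \beta$ | $n - H_n$ | $H_n - H_n^{(2)}$ | $O(n)$ |
| I | $D_I(\alpha, \beta)$ | n minus the length of the maximal increasing subsequence in $\alpha^{-1} \circ \beta$ | $n - 2\sqrt{n}$ | $\Theta(n^{1/3})$ | $O(n \log n)$ |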
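The three recipes translate directly into code. The sketch below assumes that solutions are permutations of 0..n-1 stored as lists, and that the move types A, S and I stand for adjacent swap, swap of any two elements and insert; the function names are chosen here for illustration.

```python
from bisect import bisect_left


def compose_inverse(alpha, beta):
    """Return the permutation alpha^{-1} o beta."""
    inverse = [0] * len(alpha)
    for position, value in enumerate(alpha):
        inverse[value] = position
    return [inverse[b] for b in beta]


def d_adjacent(alpha, beta):
    """Number of inversions in alpha^{-1} o beta, counted in O(n^2)."""
    p = compose_inverse(alpha, beta)
    n = len(p)
    return sum(1 for i in range(n) for j in range(i + 1, n) if p[i] > p[j])


def d_swap(alpha, beta):
    """n minus the number of cycles in alpha^{-1} o beta, O(n)."""
    p = compose_inverse(alpha, beta)
    seen, cycles = [False] * len(p), 0
    for i in range(len(p)):
        if not seen[i]:
            cycles += 1
            j = i
            while not seen[j]:      # walk around one cycle
                seen[j] = True
                j = p[j]
    return len(p) - cycles


def d_insert(alpha, beta):
    """n minus the length of the longest increasing subsequence, O(n log n)."""
    p = compose_inverse(alpha, beta)
    tails = []                      # tails[k]: smallest tail of an LIS of length k+1
    for value in p:
        k = bisect_left(tails, value)
        if k == len(tails):
            tails.append(value)
        else:
            tails[k] = value
    return len(p) - len(tails)
```

For `alpha = [0, 1, 2, 3]` and `beta = [3, 0, 1, 2]` the three distances are 3, 3 and 1: a single insert move repairs what takes three swaps.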

SELECTED INSTANCES.

BIG VALLEY

There exists a strong correlation between the quality of the function value (RE) and the distance to the best solution (DIST); this correlation is preserved after transformation of the solutions to x/y coordinates.

[Figures: RE [%] against DIST [%], and the same solutions in x/y coordinates with the start solution, the best solution and the BIG VALLEY marked]

SELECTED METHODS.

RANDOM SEARCH

Random search converges slowly to a good solution because it does not use any information about the structure of the solution space.

[Figures: RANDOM SEARCH TRAJECTORY (RAN): RE [%] against DIST [%], and the trajectory from start to best in x/y coordinates]

SELECTED METHODS.

SIMULATED ANNEALING

Simulated annealing offers a moderate speed of convergence to a good solution; it is much more similar to random search than to goal-oriented search.

[Figures: SIMULATED ANNEALING TRAJECTORY (SA): RE [%] against DIST [%], and the trajectory from start to best in x/y coordinates]

SELECTED METHODS.

TABU SEARCH

Tabu search offers quick convergence to a good solution; it is a fast descent method supported by adaptive memory.

[Figures: TABU SEARCH TRAJECTORY (TS): RE [%] against DIST [%], and the trajectory from start to best in x/y coordinates]

PARALLEL OPTIMIZATION: NEW CLASS OF ALGORITHMS

• Theoretical models of parallel calculation: SISD, SIMD, MISD, MIMD

• Theoretical models of memory access: EREW, CREW, CRCW

• Parallel calculation environments: hardware, software, GPGPU

• Shared memory programming: Pthreads (C), Java threads, OpenMP (FORTRAN, C, C++)

• Distributed memory programming, message-passing, object-based, Internet computing: PVM, MPI, Sockets, Java RMI, CORBA, Globus, Condor

• Measures of quality of parallel algorithms: runtime, speedup, efficiency, cost

• Single/multiple searching threads; granularity

• Independent/cooperative search threads

• Distributed (reliable) calculations in the net
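The quality measures listed above have simple standard definitions: speedup is the sequential runtime divided by the parallel runtime, efficiency is the speedup per processor, and cost is the number of processors times the parallel runtime. A minimal sketch, with the hypothetical function name `parallel_metrics`:

```python
def parallel_metrics(t_serial, t_parallel, processors):
    """Return (speedup, efficiency, cost) of a parallel run."""
    speedup = t_serial / t_parallel
    efficiency = speedup / processors
    cost = processors * t_parallel
    return speedup, efficiency, cost


# A run taking 100 s sequentially and 25 s on 8 processors.
speedup, efficiency, cost = parallel_metrics(100.0, 25.0, 8)
```

In this example the speedup is 4, so only half of the available processing power is used effectively, and the cost (200 processor-seconds) is twice the sequential runtime.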

PARALLEL OPTIMIZATION: FESTIVAL OF APPROACHES

• SIMULATED ANNEALING:

– Single thread, conventional SA, parallel calculation of the goal function value; fine grain; theory of convergence

– Single thread, pSA, parallel moves, subset of random trial solutions selected in the neighborhood, parallel evaluation of trial solutions; theory of convergence

– Exploration of equilibrium state at fixed temperature in parallel

– Multiple independent threads; coarse grain

– Multiple cooperative threads; coarse grain

• GENETIC SEARCH:

– Single thread, conventional GA, parallel calculation of the goal function value; small grain; theory of convergence

– Single thread, parallel evaluation of population

– Multiple independent threads; coarse grain

– Multiple cooperative threads, distributed subpopulations: migration, diffusion, island models

– …

Thank you for your attention
