Binary Search Tree


Introduction to Algorithms

Jiafen Liu

Sept. 2013

Today’s Tasks

Binary Search Trees (BST)

• Analyzing height

• Informal Analysis

• Formal Proof

– Convexity lemma

– Jensen’s inequality

– Exponential height

Binary Search Tree

• A binary search tree is a popular data structure that supports several dynamic-set operations:

– Search

– Minimum

– Maximum

– Predecessor

– Successor

– Insert

– Delete

– Tree Walk

Data structure of binary search tree

• A BST can be represented by a linked data structure in which each node is an object with the following fields:

– Key field

– Satellite data

– Pointers: left, right, and parent

• Binary-search-tree property: for any node x, the keys in the left subtree of x are less than or equal to key[x], and the keys in the right subtree of x are greater than or equal to key[x].
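A minimal Python sketch of the node layout and of the property above. The names `Node` and `is_bst` are hypothetical, chosen for illustration. The checker passes down the key range allowed by all ancestors rather than comparing each node only with its parent, because the property constrains whole subtrees.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass(eq=False)
class Node:
    """A BST node: key, satellite data, and left/right/parent pointers."""
    key: int
    data: Any = None
    # Pointers are excluded from repr to avoid infinite recursion via parent links.
    left: Optional["Node"] = field(default=None, repr=False)
    right: Optional["Node"] = field(default=None, repr=False)
    parent: Optional["Node"] = field(default=None, repr=False)


def is_bst(x: Optional[Node], lo: float = float("-inf"), hi: float = float("inf")) -> bool:
    """Check the BST property for the subtree rooted at x.

    Every key must lie in [lo, hi], the range allowed by x's ancestors:
    keys in a left subtree are <= the ancestor's key, keys in a right subtree >= it.
    """
    if x is None:
        return True
    if not lo <= x.key <= hi:
        return False
    return is_bst(x.left, lo, x.key) and is_bst(x.right, x.key, hi)
```

For example, a tree with root 5, left child 3, and 4 as the right child of 3 passes. Changing 4 to 6 fails: 6 is larger than its parent 3, which is allowed, but it lies in the left subtree of 5.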

Different Shapes of BST

• Two binary search trees containing the same 6 keys:

– (a) A binary search tree with height 3.

– (b) A binary search tree with height 5.

– Which one is better?

• Balanced vs. unbalanced trees: what is the worst case for a BST?

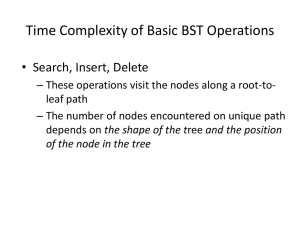

What’s the cost of basic operations?

• It takes Θ(n) time to walk an n-node

binary search tree.

• Other basic operations on a binary search tree take time proportional to the height h of the tree: O(h), which is Θ(h) in the worst case.

Tree Walk

• If x is the root of an n-node subtree, then

the call INORDER-TREE-WALK(x) takes

Θ(n) time.

• We will prove by induction.
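INORDER-TREE-WALK itself is not listed on these slides. The following Python sketch (names hypothetical) assumes the usual recursive form: walk the left subtree, visit x, then walk the right subtree. It also counts calls. An n-node subtree has n + 1 empty subtrees, so the walk makes 2n + 1 calls, which matches the Θ(n) claim.

```python
from typing import List, Optional


class Node:
    """Minimal BST node (hypothetical helper for this sketch)."""

    def __init__(self, key: int, left: "Optional[Node]" = None,
                 right: "Optional[Node]" = None) -> None:
        self.key = key
        self.left = left
        self.right = right


def inorder_tree_walk(x: Optional[Node], out: List[int]) -> int:
    """Append the keys of the subtree rooted at x to `out` in sorted order.

    Returns the number of calls made, counting calls on empty subtrees (NIL):
    an n-node subtree triggers 2n + 1 calls, i.e. Theta(n) work.
    """
    if x is None:
        return 1                               # constant cost c on an empty subtree
    calls = inorder_tree_walk(x.left, out)     # T(k)
    out.append(x.key)                          # constant work d at x itself
    calls += inorder_tree_walk(x.right, out)   # T(n - k - 1)
    return calls + 1
```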

Proof of the theorem

Proof:

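• Let T(n) denote the time taken by INORDER-TREE-WALK when it is called on the root of an n-node subtree.

• T(0) = c for some constant c > 0, since a call on an empty subtree does only a constant amount of work.

• For n > 0, suppose that the function is called on a node x whose left subtree has k nodes and whose right subtree has n - k - 1 nodes. Then, for some constant d > 0 (the work done at x itself),

T(n) = T(k) + T(n - k - 1) + d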

• How to Solve it?

– substitution method
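A sketch of the substitution step, assuming T(0) = c for a call on an empty subtree. Guess T(n) = (c + d)n + c and plug it into the recurrence:

$$
\begin{aligned}
T(n) &= T(k) + T(n-k-1) + d\\
&= \big((c+d)k + c\big) + \big((c+d)(n-k-1) + c\big) + d\\
&= (c+d)(n-1) + 2c + d\\
&= (c+d)n + c.
\end{aligned}
$$

The guess also matches the base case, since (c + d)·0 + c = c. Hence T(n) = (c + d)n + c = Θ(n).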

Search

TREE-SEARCH(x, k)
    if x = NIL or k = key[x]
        then return x
    if k < key[x]
        then return TREE-SEARCH(left[x], k)
        else return TREE-SEARCH(right[x], k)
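A direct Python transcription of TREE-SEARCH, plus an equivalent loop. The names are hypothetical, and NIL is modelled as `None`. Both versions follow a single root-to-leaf path, which is why search costs O(h).

```python
from typing import Optional


class Node:
    """Minimal BST node (hypothetical helper for this sketch)."""

    def __init__(self, key: int, left: "Optional[Node]" = None,
                 right: "Optional[Node]" = None) -> None:
        self.key = key
        self.left = left
        self.right = right


def tree_search(x: Optional[Node], k: int) -> Optional[Node]:
    """Recursive TREE-SEARCH: return the node with key k, or None (NIL)."""
    if x is None or k == x.key:
        return x
    if k < x.key:
        return tree_search(x.left, k)
    return tree_search(x.right, k)


def iterative_tree_search(x: Optional[Node], k: int) -> Optional[Node]:
    """Same search written as a loop; it visits one node per level."""
    while x is not None and k != x.key:
        x = x.left if k < x.key else x.right
    return x
```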

Minimum and maximum

TREE-MINIMUM(x)
    while left[x] ≠ NIL
        do x ← left[x]
    return x

TREE-MAXIMUM(x)
    while right[x] ≠ NIL
        do x ← right[x]
    return x
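The same two procedures in Python (hypothetical names). The minimum is reached by following left pointers until there are none, and the maximum by following right pointers. Both assume x is not NIL.

```python
from typing import Optional


class Node:
    """Minimal BST node (hypothetical helper for this sketch)."""

    def __init__(self, key: int, left: "Optional[Node]" = None,
                 right: "Optional[Node]" = None) -> None:
        self.key = key
        self.left = left
        self.right = right


def tree_minimum(x: Node) -> Node:
    """Leftmost node of the subtree rooted at x (x must not be None)."""
    while x.left is not None:
        x = x.left
    return x


def tree_maximum(x: Node) -> Node:
    """Rightmost node of the subtree rooted at x (x must not be None)."""
    while x.right is not None:
        x = x.right
    return x
```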

Successor and predecessor

TREE-SUCCESSOR(x)
    if right[x] ≠ NIL
        then return TREE-MINIMUM(right[x])
    y ← p[x]
    while y ≠ NIL and x = right[y]
        do x ← y
           y ← p[y]
    return y

• If the right subtree of node x is empty and x has a successor y, then y is the lowest ancestor of x whose left child is also an ancestor of x (here every node counts as an ancestor of itself).
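A Python sketch of TREE-SUCCESSOR, with TREE-PREDECESSOR written as its mirror image: swap left/right and minimum/maximum. The slides do not list the predecessor code, so that half is an assumption based on symmetry. The `insert` helper is hypothetical and only builds the example tree. The loop in `tree_successor` climbs while x is a right child and stops at the first ancestor reached from its left side, exactly as the bullet above describes.

```python
from typing import Optional


class Node:
    """BST node with a parent pointer (hypothetical helper for this sketch)."""

    def __init__(self, key: int) -> None:
        self.key = key
        self.left: Optional["Node"] = None
        self.right: Optional["Node"] = None
        self.parent: Optional["Node"] = None


def insert(root: Optional[Node], key: int) -> Node:
    """Hypothetical helper mirroring TREE-INSERT; returns the root."""
    z, y, x = Node(key), None, root
    while x is not None:
        y = x
        x = x.left if key < x.key else x.right
    z.parent = y
    if y is None:
        return z
    if key < y.key:
        y.left = z
    else:
        y.right = z
    return root


def tree_minimum(x: Node) -> Node:
    while x.left is not None:
        x = x.left
    return x


def tree_maximum(x: Node) -> Node:
    while x.right is not None:
        x = x.right
    return x


def tree_successor(x: Node) -> Optional[Node]:
    """Next node in sorted order, or None if x holds the maximum key."""
    if x.right is not None:
        return tree_minimum(x.right)
    y = x.parent
    while y is not None and x is y.right:  # climb while x is a right child
        x, y = y, y.parent
    return y


def tree_predecessor(x: Node) -> Optional[Node]:
    """Mirror image of tree_successor."""
    if x.left is not None:
        return tree_maximum(x.left)
    y = x.parent
    while y is not None and x is y.left:   # climb while x is a left child
        x, y = y, y.parent
    return y
```

Repeatedly applying `tree_successor`, starting from `tree_minimum(root)`, visits the keys in sorted order.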

Insertion

TREE-INSERT(T, z)
    y ← NIL
    x ← root[T]
    while x ≠ NIL
        do y ← x
           if key[z] < key[x]
              then x ← left[x]
              else x ← right[x]
    p[z] ← y
    if y = NIL
        then root[T] ← z          // Tree T was empty
        else if key[z] < key[y]
                then left[y] ← z
                else right[y] ← z
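TREE-INSERT transcribed into Python as a sketch. The `Tree` container with a `root` attribute is a hypothetical stand-in for root[T]. As in the pseudocode, y trails one step behind x, so y is the parent-to-be when x falls off the tree. Keys equal to key[x] go to the right.

```python
from typing import Optional


class Node:
    def __init__(self, key: int) -> None:
        self.key = key
        self.left: Optional["Node"] = None
        self.right: Optional["Node"] = None
        self.parent: Optional["Node"] = None


class Tree:
    """Hypothetical container holding root[T]."""

    def __init__(self) -> None:
        self.root: Optional[Node] = None


def tree_insert(T: Tree, z: Node) -> None:
    y: Optional[Node] = None      # trailing pointer: parent of x
    x = T.root
    while x is not None:          # walk down until x falls off the tree
        y = x
        x = x.left if z.key < x.key else x.right
    z.parent = y
    if y is None:
        T.root = z                # tree T was empty
    elif z.key < y.key:
        y.left = z
    else:
        y.right = z               # equal keys end up in the right subtree
```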

Deletion


Which node is actually removed depends on how many children z has.

(a) If z has no children, we just remove it.

(b) If z has only one child, we splice out z.

(c) If z has two children, we splice out its successor y, which has at most one child (y is the minimum of z's right subtree, so it has no left child), and then replace z's key and satellite data with y's key and satellite data.
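A Python sketch of the deletion strategy described in cases (a) to (c). All names are hypothetical. The code picks the node y to splice out (z itself, or z's successor), links y's only child to y's parent, and in case (c) copies y's key and data into z. One consequence of copying is that outside references to y's node become stale. An alternative design moves the successor node into z's position instead of copying its data.

```python
from typing import Any, List, Optional


class Node:
    """BST node with a parent pointer (hypothetical helper for this sketch)."""

    def __init__(self, key: int, data: Any = None) -> None:
        self.key, self.data = key, data
        self.left: Optional["Node"] = None
        self.right: Optional["Node"] = None
        self.parent: Optional["Node"] = None


class Tree:
    def __init__(self) -> None:
        self.root: Optional[Node] = None


def tree_insert(T: Tree, key: int, data: Any = None) -> Node:
    """TREE-INSERT, used here only to build example trees."""
    z, y, x = Node(key, data), None, T.root
    while x is not None:
        y = x
        x = x.left if key < x.key else x.right
    z.parent = y
    if y is None:
        T.root = z
    elif key < y.key:
        y.left = z
    else:
        y.right = z
    return z


def tree_minimum(x: Node) -> Node:
    while x.left is not None:
        x = x.left
    return x


def tree_delete(T: Tree, z: Node) -> Node:
    """Delete z following cases (a)-(c); return the node actually spliced out."""
    # Cases (a)/(b): splice out z itself. Case (c): splice out z's successor,
    # the minimum of z's right subtree, which has no left child.
    y = z if z.left is None or z.right is None else tree_minimum(z.right)
    x = y.left if y.left is not None else y.right  # y's only child, or None
    if x is not None:
        x.parent = y.parent
    if y.parent is None:
        T.root = x
    elif y is y.parent.left:
        y.parent.left = x
    else:
        y.parent.right = x
    if y is not z:
        # Case (c): move the successor's key and satellite data into z.
        z.key, z.data = y.key, y.data
    return y


def inorder_keys(x: Optional[Node]) -> List[int]:
    return [] if x is None else inorder_keys(x.left) + [x.key] + inorder_keys(x.right)
```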

Sorting and BST

• If we are given an array A, can we sort it using binary search tree operations as a black box?

– Insert the elements one by one into an initially empty BST, then traverse the tree in order (inorder tree walk).

Sorting and BST

• Example: A = [3 1 8 2 6 7 5]. What tree do we get, and what does the inorder walk output?

• What's the running time of the algorithm?
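A minimal Python sketch of this BST sort (names hypothetical). It uses iterative insertion and an explicit-stack inorder walk, so a badly skewed tree cannot hit Python's recursion limit.

```python
from typing import List, Optional


class Node:
    def __init__(self, key: int) -> None:
        self.key = key
        self.left: Optional["Node"] = None
        self.right: Optional["Node"] = None


def bst_insert(root: Optional[Node], key: int) -> Node:
    """Iterative TREE-INSERT without parent pointers; returns the root."""
    z = Node(key)
    if root is None:
        return z
    x = root
    while True:
        if key < x.key:
            if x.left is None:
                x.left = z
                return root
            x = x.left
        else:
            if x.right is None:
                x.right = z
                return root
            x = x.right


def inorder(root: Optional[Node]) -> List[int]:
    """Iterative inorder walk using an explicit stack."""
    out: List[int] = []
    stack: List[Node] = []
    x = root
    while stack or x is not None:
        while x is not None:      # go as far left as possible
            stack.append(x)
            x = x.left
        x = stack.pop()
        out.append(x.key)
        x = x.right


    return out


def bst_sort(a: List[int]) -> List[int]:
    root: Optional[Node] = None
    for key in a:
        root = bst_insert(root, key)
    return inorder(root)
```

For A = [3 1 8 2 6 7 5], the tree has root 3. Its left child is 1, with right child 2. Its right child is 8, whose left child 6 has children 5 and 7. The walk outputs [1, 2, 3, 5, 6, 7, 8].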

Cost of building a BST of n nodes

• Can anybody guess an answer?

– Θ(n lg n) in most cases

– Θ(n²) in the worst case (for example, when the keys arrive in sorted order)

• Does this look familiar? Does it remind you of any algorithm we have seen before?

– Quicksort

• Process of Quicksort
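A small experiment contrasting the two cases, as a sketch that assumes distinct keys. The dict-based tree and the function name are hypothetical. It counts the key comparisons TREE-INSERT makes. For sorted input, key number i is compared with all i earlier keys, giving n(n - 1)/2 comparisons in total, while a shuffled input needs far fewer.

```python
import random
from typing import Dict, List


def insertion_comparisons(a: List[int]) -> int:
    """Total key comparisons made by TREE-INSERT when inserting a in order.

    Equals the sum of the final node depths. Assumes distinct keys.
    """
    if not a:
        return 0
    left: Dict[int, int] = {}   # left[x] = key of x's left child
    right: Dict[int, int] = {}  # right[x] = key of x's right child
    root, total = a[0], 0
    for key in a[1:]:
        x = root
        while True:
            total += 1  # compare key with x
            child = left if key < x else right
            if x not in child:
                child[x] = key
                break
            x = child[x]
    return total
```

For example, `insertion_comparisons(list(range(1000)))` is 999·1000/2 = 499500, while a shuffled `range(1000)` stays in the low tens of thousands.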

BST sort and Quicksort

• BST sort performs the same comparisons

as Quicksort, but in a different order!

• So, the expected time to build the tree is

asymptotically the same as the running

time of Quicksort.
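The "same comparisons, different order" claim can be checked on small inputs. This sketch assumes distinct keys and a Quicksort variant that always takes the first element of the current subarray as pivot and partitions stably, so each side keeps the input order. Under those assumptions, the multiset of compared pairs is identical for both algorithms. All names are hypothetical.

```python
import random
from typing import Dict, List, Tuple

Pair = Tuple[int, int]


def bst_comparisons(a: List[int]) -> List[Pair]:
    """Pairs (smaller, larger) compared while inserting a into an empty BST."""
    pairs: List[Pair] = []
    left: Dict[int, int] = {}
    right: Dict[int, int] = {}
    for key in a[1:]:
        x = a[0]  # the first key becomes the root
        while True:
            pairs.append((min(key, x), max(key, x)))
            child = left if key < x else right
            if x not in child:
                child[x] = key
                break
            x = child[x]
    return pairs


def quicksort_comparisons(a: List[int]) -> List[Pair]:
    """Pairs compared by Quicksort with first-element pivot and stable partition."""
    pairs: List[Pair] = []

    def qs(sub: List[int]) -> None:
        if len(sub) <= 1:
            return
        pivot, rest = sub[0], sub[1:]
        pairs.extend((min(v, pivot), max(v, pivot)) for v in rest)
        qs([v for v in rest if v < pivot])  # keeps input order
        qs([v for v in rest if v > pivot])

    qs(list(a))
    return pairs
```

In both algorithms an element is compared with x exactly when x is an ancestor of it in the tree, or equivalently a pivot of a subarray containing it. This is why the pair lists agree once sorted.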

Informal Proof

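• The depth of a node equals the number of comparisons made when TREE-INSERT inserted it.

• Assuming all input permutations are equally likely, we have

$$
\begin{aligned}
\text{Average node depth} &= \frac{1}{n}\,E\Big[\sum_{i=1}^{n}\text{depth}(i)\Big]\\
&= \frac{1}{n}\,E\Big[\sum_{i=1}^{n}(\text{comparisons made to insert node } i)\Big]\\
&= \frac{1}{n}\,\Theta(n\lg n)\\
&= \Theta(\lg n).
\end{aligned}
$$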

• The expected running time of quicksort on

n elements is?
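The informal argument can be checked exactly for small n by enumerating all n! insertion orders. This sketch relies on the standard closed form for the expected number of Quicksort comparisons, 2(n + 1)H_n - 4n, where H_n is the n-th harmonic number. If the two algorithms make the same comparisons, the expected average node depth should be exactly that quantity divided by n. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial
from typing import Dict, Sequence


def total_depth(order: Sequence[int]) -> int:
    """Sum of node depths of the BST built by inserting distinct keys in this order."""
    left: Dict[int, int] = {}
    right: Dict[int, int] = {}
    depth = {order[0]: 0}
    for key in order[1:]:
        x = order[0]
        while True:
            child = left if key < x else right
            if x not in child:
                child[x] = key
                depth[key] = depth[x] + 1  # = comparisons made to insert key
                break
            x = child[x]
    return sum(depth.values())


def expected_avg_depth(n: int) -> Fraction:
    """Exact average node depth over all n! equally likely insertion orders."""
    total = sum(total_depth(p) for p in permutations(range(n)))
    return Fraction(total, factorial(n) * n)


def quicksort_formula(n: int) -> Fraction:
    """(2(n+1)H_n - 4n) / n: expected Quicksort comparisons divided by n."""
    harmonic = sum(Fraction(1, i) for i in range(1, n + 1))
    return (2 * (n + 1) * harmonic - 4 * n) / n
```

For n = 3 both functions give 8/9. Since H_n ≈ ln n, the average depth grows like 2 ln n, that is, Θ(lg n).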

Expected tree height

• Average node depth of a randomly built

BST = Θ(lgn).

• Does this necessarily mean that its expected height is also Θ(lg n)?
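A small average depth alone does not force a small height. The following hypothetical construction illustrates this (it is not from the slides). Build a balanced BST on n - √n keys, then hang a path of √n larger keys below its maximum. The average depth stays O(lg n), but the height is at least √n.

```python
import math
from collections import deque
from typing import Dict, List


def insertion_depths(order: List[int]) -> Dict[int, int]:
    """Depth of every key in the BST built by inserting distinct keys in this order."""
    left: Dict[int, int] = {}
    right: Dict[int, int] = {}
    depth = {order[0]: 0}
    for key in order[1:]:
        x = order[0]
        while True:
            child = left if key < x else right
            if x not in child:
                child[x] = key
                depth[key] = depth[x] + 1
                break
            x = child[x]
    return depth


def balanced_order(lo: int, hi: int) -> List[int]:
    """Keys lo..hi ordered (midpoints first, level by level) to build a balanced BST."""
    order: List[int] = []
    queue = deque([(lo, hi)])
    while queue:
        a, b = queue.popleft()
        if a > b:
            continue
        mid = (a + b) // 2
        order.append(mid)
        queue.append((a, mid - 1))
        queue.append((mid + 1, b))
    return order


n = 10_000
s = math.isqrt(n)  # length of the path hanging below the balanced part
order = balanced_order(0, n - s - 1) + list(range(n - s, n))
depth = insertion_depths(order)
height = max(depth.values())
avg_depth = sum(depth.values()) / n
```

Here the height exceeds √n = 100, while the average depth stays below 2 lg n ≈ 26.6. Average depth and height can therefore behave very differently, which is why a separate argument for the expected height is needed.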

Outline of Formal Proof

• Prove Jensen’s inequality, which says that

f(E[X]) ≤ E[f(X)] for any convex function f

and random variable X.

• Analyze the exponential height of a randomly built BST on n nodes, which is the random variable $Y_n = 2^{X_n}$, where $X_n$ is the random variable denoting the height of the BST.

• Prove that $2^{E[X_n]} \le E[2^{X_n}] = E[Y_n] = O(n^3)$, and hence that $E[X_n] = O(\lg n)$.

Convex functions

• A function f is convex if for all α, β≥0 such

that α+β=1, we have

f(αx+ βy) ≤αf(x)+βf(y)

for all x,y∈R.

Convexity lemma

Lemma.

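• Let f be a convex function, and let α1, α2, …, αn be nonnegative real numbers such that $\sum_{k=1}^{n}\alpha_k = 1$. Then, for any real numbers x1, x2, …, xn, we have

$$
f\Big(\sum_{k=1}^{n}\alpha_k x_k\Big) \le \sum_{k=1}^{n}\alpha_k f(x_k).
$$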

• How to prove that?

Proof of convexity lemma

Proof.

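• By induction on n. For n = 1, we have α1 = 1, and hence f(α1x1) ≤ α1f(x1) trivially (with equality).

• Inductive step: if αn = 1, then α1, …, αn−1 are all 0 and the claim is immediate, so assume αn < 1. Then

$$
\begin{aligned}
f\Big(\sum_{k=1}^{n}\alpha_k x_k\Big)
&= f\Big(\alpha_n x_n + (1-\alpha_n)\sum_{k=1}^{n-1}\frac{\alpha_k}{1-\alpha_n}\,x_k\Big)\\
&\le \alpha_n f(x_n) + (1-\alpha_n)\, f\Big(\sum_{k=1}^{n-1}\frac{\alpha_k}{1-\alpha_n}\,x_k\Big) \quad\text{(by convexity)}\\
&\le \alpha_n f(x_n) + (1-\alpha_n)\sum_{k=1}^{n-1}\frac{\alpha_k}{1-\alpha_n}\, f(x_k) \quad\text{(by induction)}\\
&= \sum_{k=1}^{n}\alpha_k f(x_k).
\end{aligned}
$$

• Why do we introduce 1 − αn? The rescaled weights αk/(1 − αn), k = 1, …, n − 1, are nonnegative and sum to 1. This lets us apply both the definition of convexity (with α = αn, β = 1 − αn) and the induction hypothesis.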

Convexity lemma: infinite case

Lemma.

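• Let f be a convex function, and let α1, α2, … be nonnegative real numbers such that $\sum_{k=1}^{\infty}\alpha_k = 1$. Then, for any real numbers x1, x2, …, we have

$$
f\Big(\sum_{k=1}^{\infty}\alpha_k x_k\Big) \le \sum_{k=1}^{\infty}\alpha_k f(x_k),
$$

assuming that these summations exist.

• We will not prove this, but intuitively it is true.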

Jensen’s inequality

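• Jensen’s inequality says that f(E[X]) ≤ E[f(X)] for any convex function f and random variable X.

Proof (for a discrete random variable X, whose expectation is a sum).

$$
\begin{aligned}
f(E[X]) &= f\Big(\sum_{x} x\cdot\Pr\{X = x\}\Big) \quad\text{(by definition of expectation)}\\
&\le \sum_{x} f(x)\cdot\Pr\{X = x\} \quad\text{(by the convexity lemma, infinite case)}\\
&= E[f(X)].
\end{aligned}
$$

The probabilities Pr{X = x} are nonnegative and sum to 1, so they can serve as the weights αk.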
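A quick numerical check of Jensen’s inequality for the convex function f(x) = 2^x used below, on randomly generated discrete distributions. This is a sanity check, not a proof. The function names are hypothetical, and probabilities are kept as exact fractions so that they sum to exactly 1.

```python
import random
from fractions import Fraction
from typing import Callable, Sequence, Tuple


def jensen_sides(values: Sequence[int], probs: Sequence[Fraction],
                 f: Callable[[float], float]) -> Tuple[float, float]:
    """Return (f(E[X]), E[f(X)]) for a discrete X with Pr{X = values[i]} = probs[i]."""
    if any(p < 0 for p in probs) or sum(probs) != 1:
        raise ValueError("probs must be nonnegative and sum to 1")
    mean = sum(p * x for p, x in zip(probs, values))
    return f(float(mean)), sum(float(p) * f(x) for p, x in zip(probs, values))


def exp2(x: float) -> float:
    return 2.0 ** x  # f(x) = 2^x, the convex function used for exponential height
```

For X uniform on {0, 4}, `jensen_sides([0, 4], [Fraction(1, 2), Fraction(1, 2)], exp2)` returns (4.0, 8.5). Here 2^E[X] = 2^2 = 4 is well below E[2^X] = (1 + 16)/2.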

Analysis of BST height

• Let $X_n$ be the random variable denoting the height of a randomly built binary search tree on n nodes, and let $Y_n = 2^{X_n}$ be its exponential height.

• If the root of the tree has rank k, then

$$X_n = 1 + \max\{X_{k-1}, X_{n-k}\},$$

since each of the left and right subtrees is randomly built. Hence, we have

$$Y_n = 2\cdot\max\{Y_{k-1}, Y_{n-k}\}.$$

Analysis (continued)

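• Define the indicator random variable $Z_{nk}$ as

$$
Z_{nk} = \begin{cases} 1 & \text{if the root has rank } k,\\ 0 & \text{otherwise.} \end{cases}
$$

• Thus, $\Pr\{Z_{nk} = 1\} = E[Z_{nk}] = 1/n$, and

$$
Y_n = \sum_{k=1}^{n} Z_{nk}\,\big(2\cdot\max\{Y_{k-1}, Y_{n-k}\}\big).
$$

Take expectation of both sides.

Analysis (continued)

$$
\begin{aligned}
E[Y_n] &= E\Big[\sum_{k=1}^{n} Z_{nk}\,\big(2\max\{Y_{k-1}, Y_{n-k}\}\big)\Big]\\
&= \sum_{k=1}^{n} E\big[Z_{nk}\,\big(2\max\{Y_{k-1}, Y_{n-k}\}\big)\big] \quad\text{(linearity of expectation)}\\
&= 2\sum_{k=1}^{n} E[Z_{nk}]\cdot E\big[\max\{Y_{k-1}, Y_{n-k}\}\big] \quad\text{(independence of the rank of the root from the ranks of subtree roots)}\\
&\le \frac{2}{n}\sum_{k=1}^{n} E[Y_{k-1} + Y_{n-k}] \quad\text{(the max of two nonnegative numbers is at most their sum, and } E[Z_{nk}] = 1/n\text{)}\\
&= \frac{4}{n}\sum_{k=0}^{n-1} E[Y_k] \quad\text{(each term appears twice, and reindex)}
\end{aligned}
$$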

Analysis (continued)

• How to solve this?

– Substitution Method

• Guess $E[Y_n] \le cn^3$ for some positive constant c.

• Initial conditions: for n = 1, $E[Y_1] = 2^{X_1}$ is a constant, so we can pick c sufficiently large that $E[Y_1] \le c$.

Analysis (continued)

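• Substitution:

$$
E[Y_n] \le \frac{4}{n}\sum_{k=0}^{n-1} E[Y_k] \le \frac{4}{n}\sum_{k=0}^{n-1} ck^3 = \frac{4c}{n}\sum_{k=0}^{n-1} k^3.
$$

• How do we bound the sum on the right?

– Integral method: since $x^3$ is increasing,

$$
\sum_{k=0}^{n-1} k^3 \le \int_0^n x^3\,dx = \frac{n^4}{4}, \quad\text{so}\quad E[Y_n] \le \frac{4c}{n}\cdot\frac{n^4}{4} = cn^3.
$$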
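For small n the whole chain of bounds can be checked exactly by enumerating all n! insertion orders. This sketch assumes the convention that a one-node tree has height 0 (so $Y_1 = 1$) and sets $Y_0 = 0$. Under that convention c = 1 works for the base case, and other height conventions change only the constant. The code checks the exact values, the recurrence bound $E[Y_n] \le \frac{4}{n}\sum_{k<n} E[Y_k]$, the bound $E[Y_n] \le n^3$, and the resulting $E[X_n] \le 3\lg n$. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial, log2
from typing import Dict, Sequence


def height(order: Sequence[int]) -> int:
    """Height of the BST built from distinct keys in this order (one node: height 0)."""
    left: Dict[int, int] = {}
    right: Dict[int, int] = {}
    depth = {order[0]: 0}
    for key in order[1:]:
        x = order[0]
        while True:
            child = left if key < x else right
            if x not in child:
                child[x] = key
                depth[key] = depth[x] + 1
                break
            x = child[x]
    return max(depth.values())


def expected_y(n: int) -> Fraction:
    """E[Y_n] = E[2^{X_n}] over all n! insertion orders, with the convention Y_0 = 0."""
    if n == 0:
        return Fraction(0)
    return Fraction(sum(2 ** height(p) for p in permutations(range(n))), factorial(n))


def expected_x(n: int) -> Fraction:
    """E[X_n], the expected height of a randomly built BST on n >= 1 nodes."""
    return Fraction(sum(height(p) for p in permutations(range(n))), factorial(n))


EY = [expected_y(n) for n in range(8)]
```

For example, $E[Y_3] = 10/3$. The two insertion orders that put key 2 at the root give height 1, and the other four orders give height 2.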

The grand finale

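• By Jensen’s inequality, since $f(x) = 2^x$ is convex, we have

$$
2^{E[X_n]} \le E\big[2^{X_n}\big] = E[Y_n] \le cn^3.
$$

What we just showed

• Taking lg of both sides yields

$$
E[X_n] \le 3\lg n + \lg c = O(\lg n).
$$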

Post mortem

• Why bother with analyzing exponential

height?

• Why not just develop the recurrence on

$X_n = 1 + \max\{X_{k-1}, X_{n-k}\}$

directly?

Answer

• The inequality max{a,b} ≤ a+b provides a

poor upper bound, since the RHS

approaches the LHS slowly as |a–b|

increases.

• The bound

$$
2^{\max\{a,b\}} \le 2^a + 2^b
$$

allows the RHS to approach the LHS far more quickly as |a–b| increases. By using the convexity of $f(x) = 2^x$ via Jensen’s inequality, we can manipulate the sum of exponentials, resulting in a tight analysis.
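A worked instance, with numbers picked only for illustration (a = 10, b = 5):

$$
\frac{a+b}{\max\{a,b\}} = \frac{15}{10} = 1.5, \qquad
\frac{2^a+2^b}{2^{\max\{a,b\}}} = \frac{2^{10}+2^{5}}{2^{10}} = 1 + 2^{-5} \approx 1.03.
$$

More generally, for a ≥ b ≥ 0 with a > 0, the first ratio is 1 + b/a, while the second is $1 + 2^{-(a-b)}$, which shrinks exponentially in the gap a − b.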