LSA.303 Introduction to Computational Linguistics


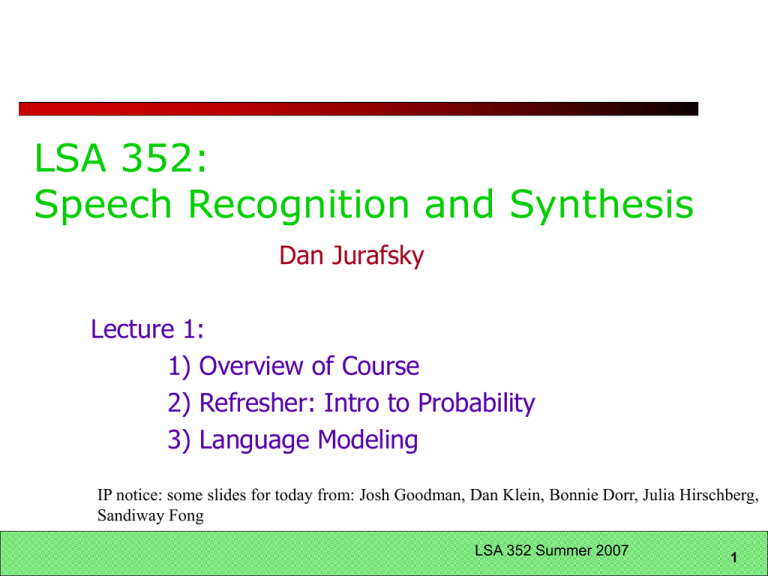

LSA 352:

Speech Recognition and Synthesis

Dan Jurafsky

Lecture 1:

1) Overview of Course

2) Refresher: Intro to Probability

3) Language Modeling

IP notice: some slides for today from: Josh Goodman, Dan Klein, Bonnie Dorr, Julia Hirschberg, Sandiway Fong

LSA 352 Summer 2007


Outline

Overview of Course

Probability

Language Modeling

Language Modeling means “probabilistic grammar”


Definitions

Speech Recognition

Speech-to-Text

– Input: a wavefile,

– Output: string of words

Speech Synthesis

Text-to-Speech

– Input: a string of words

– Output: a wavefile


Automatic Speech Recognition (ASR)

Automatic Speech Understanding (ASU)

Applications

Dictation

Telephone-based Information (directions, air

travel, banking, etc)

Hands-free (in car)

Second language ('L2') (accent reduction)

Audio archive searching

Linguistic research

– Automatically computing word durations, etc


Applications of Speech

Synthesis/Text-to-Speech (TTS)

Games

Telephone-based Information (directions, air travel,

banking, etc)

Eyes-free (in car)

Reading/speaking for disabled

Education: Reading tutors

Education: L2 learning


Applications of Speaker/Lg

Recognition

Language recognition for call routing

Speaker Recognition:

Speaker verification (binary decision)

– Voice password, telephone assistant

Speaker identification (one of N)

– Criminal investigation


History: foundational insights

1900s-1950s

Automaton:

Markov 1911

Turing 1936

McCulloch-Pitts neuron (1943)

– http://marr.bsee.swin.edu.au/~dtl/het704/lecture10/ann/node1.html

– http://diwww.epfl.ch/mantra/tutorial/english/mcpits/html/

Shannon (1948) link between automata and Markov models

Human speech processing

Fletcher at Bell Labs (1920’s)

Probabilistic/Information-theoretic models

Shannon (1948)


Synthesis precursors

Von Kempelen mechanical (bellows, reeds) speech

production simulacrum

1929 Channel vocoder (Dudley)


History: Early Recognition

• 1920’s Radio Rex


Celluloid dog with iron base

held within house by

electromagnet against force of

spring

Current to magnet flowed

through bridge which was

sensitive to energy at 500 Hz

500 Hz energy caused bridge to

vibrate, interrupting current,

making dog spring forward

The sound “e” (ARPAbet [eh])

in Rex has 500 Hz component


History: early ASR systems

• 1950’s: Early Speech recognizers

1952: Bell Labs single-speaker digit recognizer

– Measured energy from two bands (formants)

– Built with analog electrical components

– 2% error rate for single speaker, isolated digits

1958: Dudley built classifier that used continuous spectrum

rather than just formants

1959: Denes ASR combining grammar and acoustic

probability

1960’s

FFT - Fast Fourier transform (Cooley and Tukey 1965)

LPC - linear prediction (1968)

1969 John Pierce letter “Whither Speech Recognition?”

– Random tuning of parameters,

– Lack of scientific rigor, no evaluation metrics

– Need to rely on higher level knowledge


ASR: 1970’s and 1980’s

Hidden Markov Model 1972

Independent application of Baker (CMU) and Jelinek/Bahl/Mercer

lab (IBM) following work of Baum and colleagues at IDA

ARPA project 1971-1976

5-year speech understanding project: 1000 word vocab, continuous speech, multi-speaker

SDC, CMU, BBN

Only 1 CMU system achieved goal

1980’s+

Annual ARPA “Bakeoffs”

Large corpus collection

– TIMIT

– Resource Management

– Wall Street Journal


State of the Art

ASR

speaker-independent, continuous, no noise, world’s

best research systems:

– Human-human speech: ~13-20% Word Error Rate

(WER)

– Human-machine speech: ~3-5% WER

TTS (demo next week)


LVCSR Overview

Large Vocabulary Continuous (Speaker-Independent)

Speech Recognition

Build a statistical model of the speech-to-words

process

Collect lots of speech and transcribe all the words

Train the model on the labeled speech

Paradigm: Supervised Machine Learning + Search


Unit Selection TTS Overview

Collect lots of speech (5-50 hours) from one speaker,

transcribe very carefully, all the syllables and phones

and whatnot

To synthesize a sentence, patch together syllables

and phones from the training data.

Paradigm: search


Requirements and Grading

Readings:

Required Text:

Selected chapters on web from

– Jurafsky & Martin, 2000. Speech and Language Processing.

– Taylor, Paul. 2007. Text-to-Speech Synthesis.

Grading

Homework: 75% (3 homeworks, 25% each)

Participation: 25%

You may work in groups


Overview of the course

http://nlp.stanford.edu/courses/lsa352/


Introduction to Probability

Experiment (trial)

Repeatable procedure with well-defined possible outcomes

Sample Space (S)

– the set of all possible outcomes

– finite or infinite

Example

– coin toss experiment

– possible outcomes: S = {heads, tails}

Example

– die toss experiment

– possible outcomes: S = {1,2,3,4,5,6}

Slides from Sandiway Fong

Introduction to Probability

Definition of sample space depends on what we are asking

Sample Space (S): the set of all possible outcomes

Example

– die toss experiment for whether the number is even or odd

– possible outcomes: {even,odd}

– not {1,2,3,4,5,6}


More definitions

Events

an event is any subset of outcomes from the sample space

Example

die toss experiment

let A represent the event such that the outcome of the die toss

experiment is divisible by 3

A = {3,6}

A is a subset of the sample space S= {1,2,3,4,5,6}

Example

Draw a card from a deck

– suppose sample space S = {heart,spade,club,diamond} (four

suits)

let A represent the event of drawing a heart

let B represent the event of drawing a red card

A = {heart}

B = {heart,diamond}


Introduction to Probability

Some definitions

Counting

– suppose operation o_i can be performed in n_i ways; then

– a sequence of k operations o_1 o_2 ... o_k

– can be performed in n_1 × n_2 × ... × n_k ways

Example

– die toss experiment, 6 possible outcomes

– two dice are thrown at the same time

– number of sample points in sample space = 6 × 6 = 36
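The counting rule for two dice can be checked by enumerating the sample space directly. This is a minimal sketch; the variable names are illustrative and not part of the original slides.

```python
from itertools import product

# Each die toss has 6 possible outcomes.
die = range(1, 7)

# The sample space for two dice is every ordered pair of outcomes,
# so its size is the product of the individual counts.
two_dice = list(product(die, repeat=2))

print(len(two_dice))
```

Enumerating is only practical for small spaces; the product rule gives the same count without listing the outcomes.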


Definition of Probability

The probability law assigns to an event a nonnegative

number

Called P(A)

Also called the probability of A

That encodes our knowledge or belief about the

collective likelihood of all the elements of A

Probability law must satisfy certain properties


Probability Axioms

Nonnegativity

P(A) >= 0, for every event A

Additivity

If A and B are two disjoint events, then the

probability of their union satisfies:

P(A ∪ B) = P(A) + P(B)

Normalization

The probability of the entire sample space S is equal to 1, i.e. P(S) = 1.


An example

An experiment involving a single coin toss

There are two possible outcomes, H and T

Sample space S is {H,T}

If coin is fair, should assign equal probabilities to 2 outcomes

Since they have to sum to 1

P({H}) = 0.5

P({T}) = 0.5

P({H,T}) = P({H})+P({T}) = 1.0


Another example

Experiment involving 3 coin tosses

Outcome is a 3-long string of H or T

S = {HHH,HHT,HTH,HTT,THH,THT,TTH,TTT}

Assume each outcome is equiprobable

“Uniform distribution”

What is probability of the event that exactly 2 heads occur?

A = {HHT,HTH,THH}

P(A) = P({HHT})+P({HTH})+P({THH})

= 1/8 + 1/8 + 1/8

=3/8
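The three-toss example can be reproduced by enumerating the outcomes and summing the probabilities of those in the event. This is a small sketch under the slide's uniform-distribution assumption; the names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Sample space: all 3-long strings over {H, T}.
sample_space = ["".join(t) for t in product("HT", repeat=3)]

# Uniform distribution: every outcome is equally probable.
p_outcome = Fraction(1, len(sample_space))

# Event A: exactly two heads occur.
event_a = [s for s in sample_space if s.count("H") == 2]

# By additivity, P(A) is the sum over the disjoint outcomes in A.
p_a = sum(p_outcome for _ in event_a)
print(sorted(event_a), p_a)
```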


Probability definitions

In summary:

Probability of drawing a spade from 52 well-shuffled playing cards: 13/52 = 1/4 = .25


Probabilities of two events

If two events A and B are independent

Then

P(A and B) = P(A) x P(B)

If we flip a fair coin twice

What is the probability that both flips come up heads?

If we draw a card from a deck, put it back, and then draw a card from the deck again

What is the probability that both drawn cards are hearts?


How about non-uniform

probabilities? An example

A biased coin,

twice as likely to come up tails as heads,

is tossed twice

What is the probability that at least one head occurs?

Sample space = {hh, ht, th, tt} (h = heads, t = tails)

Sample points and their probabilities (ht, th and hh make up the event):

ht 1/3 x 2/3 = 2/9

th 2/3 x 1/3 = 2/9

hh 1/3 x 1/3 = 1/9

tt 2/3 x 2/3 = 4/9

Answer: 2/9 + 2/9 + 1/9 = 5/9 ≈ 0.56
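The biased-coin computation can be sketched by weighting each sample point with the product of its per-toss probabilities, assuming the two tosses are independent. The dictionary names below are illustrative.

```python
from fractions import Fraction
from itertools import product

# Tails is twice as likely as heads, so P(h) = 1/3 and P(t) = 2/3.
p = {"h": Fraction(1, 3), "t": Fraction(2, 3)}

# Weight of each two-toss outcome, assuming independent tosses.
weights = {a + b: p[a] * p[b] for a, b in product("ht", repeat=2)}

# Event: at least one head occurs.
p_at_least_one_head = sum(w for s, w in weights.items() if "h" in s)
print(weights, p_at_least_one_head)
```

The same answer follows from the complement: 1 - P(tt) = 1 - 4/9.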


Moving toward language

What’s the probability of drawing a 2 from a deck of 52 cards with four 2s?

$$P(\text{drawing a two}) = \frac{4}{52} = \frac{1}{13} \approx .077$$

What’s the probability of a random word (from a random dictionary page) being a verb?

$$P(\text{drawing a verb}) = \frac{\#\text{ of ways to get a verb}}{\text{all words}}$$


Probability and part of speech tags

• What’s the probability of a random word (from a

random dictionary page) being a verb?

$$P(\text{drawing a verb}) = \frac{\#\text{ of ways to get a verb}}{\text{all words}}$$

• How to compute each of these

• All words = just count all the words in the dictionary

• # of ways to get a verb: number of words which are

verbs!

• If a dictionary has 50,000 entries, and 10,000 are verbs, then P(V) is 10000/50000 = 1/5 = .20


Conditional Probability

A way to reason about the outcome of an experiment

based on partial information

In a word guessing game the first letter for the word

is a “t”. What is the likelihood that the second letter

is an “h”?

How likely is it that a person has a disease given that

a medical test was negative?

A spot shows up on a radar screen. How likely is it

that it corresponds to an aircraft?


More precisely

Given an experiment, a corresponding sample space S, and a

probability law

Suppose we know that the outcome is within some given event B

We want to quantify the likelihood that the outcome also belongs

to some other given event A.

We need a new probability law that gives us the conditional

probability of A given B

P(A|B)


An intuition

• A is “it’s raining now”.

• P(A) in dry California is .01

• B is “it was raining ten minutes ago”

• P(A|B) means “what is the probability of it raining now if it was

raining 10 minutes ago”

• P(A|B) is probably way higher than P(A)

• Perhaps P(A|B) is .10

• Intuition: The knowledge about B should change our estimate of

the probability of A.


Conditional probability

One of the following 30 items is chosen at random

What is P(X), the probability that it is an X?

What is P(X|red), the probability that it is an X given

that it is red?


Conditional Probability

let A and B be events

p(B|A) = the probability of event B occurring given event A occurs

definition: p(B|A) = p(A ∩ B) / p(A)


Conditional probability

P(A|B) = P(A ∩ B)/P(B)

Or

$$P(A \mid B) = \frac{P(A, B)}{P(B)}$$

Note: P(A,B) = P(A|B) · P(B)

Also: P(A,B) = P(B,A)

[Figure: Venn diagram of events A and B with overlap A,B]


Independence

What is P(A,B) if A and B are independent?

P(A,B)=P(A) · P(B) iff A,B independent.

P(heads,tails) = P(heads) · P(tails) = .5 · .5 = .25

Note: P(A|B)=P(A) iff A,B independent

Also: P(B|A)=P(B) iff A,B independent


Bayes Theorem

$$P(B \mid A) = \frac{P(A \mid B)\,P(B)}{P(A)}$$

• Swap the conditioning

• Sometimes easier to estimate one

kind of dependence than the other


Deriving Bayes Rule

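$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} \qquad P(B \mid A) = \frac{P(A \cap B)}{P(A)}$$

$$P(A \mid B)\,P(B) = P(A \cap B) \qquad P(B \mid A)\,P(A) = P(A \cap B)$$

$$P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$$

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$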
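Bayes rule can be checked numerically on a small sample space. The sketch below uses a fair die with two events chosen only for illustration (A = the toss is even, B = the toss is greater than 4); neither event comes from the slides.

```python
from fractions import Fraction

sample_space = {1, 2, 3, 4, 5, 6}
A = {2, 4, 6}  # hypothetical event: the toss is even
B = {5, 6}     # hypothetical event: the toss is greater than 4

def prob(event):
    """Probability of an event under a uniform distribution."""
    return Fraction(len(event), len(sample_space))

def cond(x, given):
    """Conditional probability P(x | given) = P(x and given) / P(given)."""
    return prob(x & given) / prob(given)

# Direct definition versus Bayes rule: both should agree.
p_a_given_b = cond(A, B)
via_bayes = cond(B, A) * prob(A) / prob(B)
print(p_a_given_b, via_bayes)
```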


Summary

Probability

Conditional Probability

Independence

Bayes Rule


How many words?

I do uh main- mainly business data processing

Fragments

Filled pauses

Are cat and cats the same word?

Some terminology

Lemma: a set of lexical forms having the same

stem, major part of speech, and rough word sense

– Cat and cats = same lemma

Wordform: the full inflected surface form.

– Cat and cats = different wordforms


How many words?

they picnicked by the pool then lay back on the grass and looked at the

stars

16 tokens

14 types

SWBD:

~20,000 wordform types,

2.4 million wordform tokens

Brown et al (1992) large corpus

583 million wordform tokens

293,181 wordform types

Let N = number of tokens, V = vocabulary = number of types

General wisdom: V > O(sqrt(N))
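The token and type counts for the picnic sentence can be reproduced with a whitespace split. This is a minimal sketch that treats each whitespace-separated wordform as a token, which is enough for this sentence but not a general tokenizer.

```python
sentence = ("they picnicked by the pool then lay back on the grass "
            "and looked at the stars")

# Tokens: every running word. Types: distinct wordforms.
tokens = sentence.split()
types = set(tokens)

print(len(tokens), len(types))
```

The two counts differ because "the" occurs three times but counts as one type.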


Language Modeling

We want to compute P(w1,w2,w3,w4,w5…wn), the

probability of a sequence

Alternatively we want to compute P(w5|w1,w2,w3,w4): the probability of a word given some previous words

The model that computes P(W) or P(wn|w1,w2…wn-1) is called the language model.

A better term for this would be “The Grammar”

But “Language model” or LM is standard


Computing P(W)

How to compute this joint probability:

P(“the”,“other”,“day”,“I”,“was”,“walking”,“along”,“and”,“saw”,“a”,“lizard”)

Intuition: let’s rely on the Chain Rule of Probability


The Chain Rule of Probability

Recall the definition of conditional probabilities

$$P(A \mid B) = \frac{P(A \wedge B)}{P(B)}$$

Rewriting:

$$P(A \wedge B) = P(A \mid B)\,P(B)$$

More generally

P(A,B,C,D) = P(A)P(B|A)P(C|A,B)P(D|A,B,C)

In general

P(x1,x2,x3,…xn) = P(x1)P(x2|x1)P(x3|x1,x2)…P(xn|x1…xn-1)


The Chain Rule Applied to joint

probability of words in sentence

P(“the big red dog was”)=

P(the)*P(big|the)*P(red|the big)*P(dog|the big red)*P(was|the

big red dog)


How to estimate?

P(the|its water is so transparent that)

Very easy estimate:

$$P(\text{the} \mid \text{its water is so transparent that}) = \frac{C(\text{its water is so transparent that the})}{C(\text{its water is so transparent that})}$$


Unfortunately

There are a lot of possible sentences

We’ll never be able to get enough data to compute

the statistics for those long prefixes

P(lizard|the,other,day,I,was,walking,along,and,saw,a)

Or

P(the|its water is so transparent that)


Markov Assumption

Make the simplifying assumption

P(lizard|the,other,day,I,was,walking,along,and,saw,a)

= P(lizard|a)

Or maybe

P(lizard|the,other,day,I,was,walking,along,and,saw,a)

= P(lizard|saw,a)


Markov Assumption

So for each component in the product replace with the approximation (assuming a prefix of N)

$$P(w_n \mid w_1^{n-1}) \approx P(w_n \mid w_{n-N+1}^{n-1})$$

Bigram version

$$P(w_n \mid w_1^{n-1}) \approx P(w_n \mid w_{n-1})$$


Estimating bigram probabilities

The Maximum Likelihood Estimate

$$P(w_i \mid w_{i-1}) = \frac{\mathrm{count}(w_{i-1}, w_i)}{\mathrm{count}(w_{i-1})}$$

$$P(w_i \mid w_{i-1}) = \frac{c(w_{i-1}, w_i)}{c(w_{i-1})}$$


An example

<s> I am Sam </s>

<s> Sam I am </s>

<s> I do not like green eggs and ham </s>

This is the Maximum Likelihood Estimate, because it is the one

which maximizes P(Training set|Model)
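The maximum likelihood bigram estimates for the three-sentence corpus above can be computed by counting. This is a minimal sketch; the helper name `p_mle` is illustrative, and whitespace splitting stands in for real tokenization.

```python
from collections import Counter
from fractions import Fraction

corpus = [
    "<s> I am Sam </s>",
    "<s> Sam I am </s>",
    "<s> I do not like green eggs and ham </s>",
]

unigrams = Counter()
bigrams = Counter()
for line in corpus:
    words = line.split()
    unigrams.update(words)
    # Adjacent word pairs within a sentence.
    bigrams.update(zip(words, words[1:]))

def p_mle(word, prev):
    """MLE bigram estimate: c(prev, word) / c(prev)."""
    return Fraction(bigrams[(prev, word)], unigrams[prev])

for prev, word in [("<s>", "I"), ("<s>", "Sam"), ("I", "am"),
                   ("Sam", "</s>"), ("am", "Sam"), ("I", "do")]:
    print(f"P({word}|{prev}) = {p_mle(word, prev)}")
```

Any bigram that never occurs in the corpus gets probability zero under this estimate, which is the sparsity problem that smoothing addresses.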


Maximum Likelihood Estimates

The maximum likelihood estimate of some parameter of a model

M from a training set T

Is the estimate

that maximizes the likelihood of the training set T given the

model M

Suppose the word Chinese occurs 400 times in a corpus of a

million words (Brown corpus)

What is the probability that a random word from some other text

will be “Chinese”

MLE estimate is 400/1000000 = .0004

This may be a bad estimate for some other corpus

But it is the estimate that makes it most likely that “Chinese”

will occur 400 times in a million word corpus.


More examples: Berkeley

Restaurant Project sentences

can you tell me about any good cantonese

restaurants close by

mid priced thai food is what i’m looking for

tell me about chez panisse

can you give me a listing of the kinds of food that are

available

i’m looking for a good place to eat breakfast

when is caffe venezia open during the day


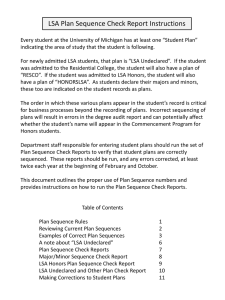

Raw bigram counts

Out of 9222 sentences


Raw bigram probabilities

Normalize by unigrams:

Result:


Bigram estimates of sentence

probabilities

P(<s> I want english food </s>) =

p(I|<s>) x p(want|I) x p(english|want) x p(food|english) x p(</s>|food)

= .25 x .33 x .0011 x 0.5 x 0.68

= .000031


What kinds of knowledge?

P(english|want) = .0011

P(chinese|want) = .0065

P(to|want) = .66

P(eat | to) = .28

P(food | to) = 0

P(want | spend) = 0

P (i | <s>) = .25


The Shannon Visualization

Method

Generate random sentences:

Choose a random bigram (<s>, w) according to its probability

Now choose a random bigram (w, x) according to its probability

And so on until we choose </s>

Then string the words together

<s> I

I want

want to

to eat

eat Chinese

Chinese food

food </s>
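The generation procedure can be sketched as repeated sampling from the conditional distribution of the next word given the previous one. The toy bigram model below is hypothetical: its words echo the example above, but the probabilities are made up for illustration and are not the Berkeley Restaurant Project estimates.

```python
import random

# Hypothetical toy bigram model: P(next word | previous word).
bigram_model = {
    "<s>": {"I": 1.0},
    "I": {"want": 1.0},
    "want": {"to": 0.7, "Chinese": 0.3},
    "to": {"eat": 1.0},
    "eat": {"Chinese": 1.0},
    "Chinese": {"food": 1.0},
    "food": {"</s>": 1.0},
}

def generate(model, rng, max_len=50):
    """Sample words one bigram at a time until </s> is chosen."""
    words = []
    prev = "<s>"
    while len(words) < max_len:
        candidates = list(model[prev])
        weights = [model[prev][w] for w in candidates]
        nxt = rng.choices(candidates, weights=weights, k=1)[0]
        if nxt == "</s>":
            break
        words.append(nxt)
        prev = nxt
    return " ".join(words)

rng = random.Random(0)  # seeded only so that runs are repeatable
sentence = generate(bigram_model, rng)
print(sentence)
```

The `max_len` cap is a safeguard added here for models that contain cycles; this toy model always reaches `</s>` on its own.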


Shakespeare as corpus

N=884,647 tokens, V=29,066

Shakespeare produced 300,000 bigram types out of V^2 = 844 million possible bigrams: so, 99.96% of the possible bigrams were never seen (have zero entries in the table)

Quadrigrams worse: What's coming out looks like

Shakespeare because it is Shakespeare


The wall street journal is not

shakespeare (no offense)


Evaluation

We train parameters of our model on a training set.

How do we evaluate how well our model works?

We look at the model’s performance on some new data

This is what happens in the real world; we want to

know how our model performs on data we haven’t

seen

So we use a test set: a dataset that is different from our training set

Then we need an evaluation metric to tell us how

well our model is doing on the test set.

One such metric is perplexity (to be introduced

below)


Unknown words: Open versus

closed vocabulary tasks

If we know all the words in advance

Vocabulary V is fixed

Closed vocabulary task

Often we don’t know this

Out Of Vocabulary = OOV words

Open vocabulary task

Instead: create an unknown word token <UNK>

Training of <UNK> probabilities

– Create a fixed lexicon L of size V

– At text normalization phase, any training word not in L changed to

<UNK>

– Now we train its probabilities like a normal word

At decoding time

– If text input: use <UNK> probabilities for any word not in training
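The `<UNK>` normalization step can be sketched as a simple mapping applied to both training and test text. The lexicon and sentences below are hypothetical; in practice the lexicon would be fixed in advance, for example from the most frequent training words.

```python
UNK = "<UNK>"

def normalize(words, lexicon):
    """Replace every word that is not in the fixed lexicon with <UNK>."""
    return [w if w in lexicon else UNK for w in words]

# Hypothetical fixed lexicon L and training text.
lexicon = {"i", "want", "chinese", "food"}
training = "i want chinese food i want thai food".split()

normalized = normalize(training, lexicon)
print(normalized)

# At decoding time the same mapping is applied to unseen input.
print(normalize("i want sushi".split(), lexicon))
```

After normalization, `<UNK>` is counted and estimated like any other word.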


Evaluating N-gram models

Best evaluation for an N-gram

Put model A in a speech recognizer

Run recognition, get word error rate (WER)

for A

Put model B in the speech recognizer, get word error rate for B

Compare WER for A and B

In-vivo evaluation


Difficulty of in-vivo evaluation of

N-gram models

In-vivo evaluation

This is really time-consuming

Can take days to run an experiment

So

As a temporary solution, in order to run experiments

To evaluate N-grams we often use an approximation

called perplexity

But perplexity is a poor approximation unless the test

data looks just like the training data

So is generally only useful in pilot experiments

(generally is not sufficient to publish)

But is helpful to think about.


Perplexity

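Perplexity is the inverse probability of the test set (assigned by the language model), normalized by the number of words:

$$PP(W) = P(w_1 w_2 \ldots w_N)^{-\frac{1}{N}} = \sqrt[N]{\frac{1}{P(w_1 w_2 \ldots w_N)}}$$

Chain rule:

$$PP(W) = \sqrt[N]{\prod_{i=1}^{N} \frac{1}{P(w_i \mid w_1 \ldots w_{i-1})}}$$

For bigrams:

$$PP(W) = \sqrt[N]{\prod_{i=1}^{N} \frac{1}{P(w_i \mid w_{i-1})}}$$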

Minimizing perplexity is the same as maximizing probability

The best language model is one that best predicts an

unseen test set
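Perplexity can be computed from the per-word probabilities a model assigns to a test sequence, taking the inverse probability normalized by the number of words. The sketch below works in log space; the function name and the uniform ten-digit model are illustrative assumptions.

```python
import math

def perplexity(word_probs):
    """Perplexity of a test sequence given each word's model probability.

    PP = (p_1 * p_2 * ... * p_N) ** (-1 / N), computed with logs.
    """
    n = len(word_probs)
    log_prob = sum(math.log(p) for p in word_probs)
    return math.exp(-log_prob / n)

# A hypothetical model that assigns probability 1/10 to every word
# (for example, uniform over ten digits) on a 20-word test set.
pp_digits = perplexity([0.1] * 20)
print(pp_digits)
```

A model that assigns higher probability to the test words gets a lower perplexity.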


A totally different perplexity

Intuition

How hard is the task of recognizing digits ‘0,1,2,3,4,5,6,7,8,9,oh’:

easy, perplexity 11 (or if we ignore ‘oh’, perplexity 10)

How hard is recognizing (30,000) names at Microsoft. Hard:

perplexity = 30,000

If a system has to recognize

Operator (1 in 4)

Sales (1 in 4)

Technical Support (1 in 4)

30,000 names (1 in 120,000 each)

Perplexity is about 53 (4^(3/4) × 120,000^(1/4) ≈ 52.6)

Perplexity is weighted equivalent branching factor

Slide from Josh Goodman


Perplexity as branching factor


Lower perplexity = better model

Training 38 million words, test 1.5 million words, WSJ


Lesson 1: the perils of overfitting

N-grams only work well for word prediction if the test

corpus looks like the training corpus

In real life, it often doesn’t

We need to train robust models, adapt to test set, etc


Lesson 2: zeros or not?

Zipf’s Law:

A small number of events occur with high frequency

A large number of events occur with low frequency

You can quickly collect statistics on the high frequency events

You might have to wait an arbitrarily long time to get valid

statistics on low frequency events

Result:

Our estimates are sparse! No counts at all for the vast bulk of things we want to estimate!

Some of the zeros in the table are really zeros. But others are simply low frequency events you haven't seen yet. After all, ANYTHING CAN HAPPEN!

How to address?

Answer:

Estimate the likelihood of unseen N-grams!

Slide adapted from Bonnie Dorr and Julia Hirschberg


Smoothing is like Robin Hood:

Steal from the rich and give to the poor (in

probability mass)

Slide from Dan Klein


Laplace smoothing

Also called add-one smoothing

Just add one to all the counts!

Very simple

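MLE estimate:

$$P_{MLE}(w_i \mid w_{i-1}) = \frac{c(w_{i-1}, w_i)}{c(w_{i-1})}$$

Laplace estimate:

$$P_{Laplace}(w_i \mid w_{i-1}) = \frac{c(w_{i-1}, w_i) + 1}{c(w_{i-1}) + V}$$

Reconstructed counts:

$$c^*(w_{i-1}, w_i) = \frac{\left(c(w_{i-1}, w_i) + 1\right) \cdot c(w_{i-1})}{c(w_{i-1}) + V}$$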


Laplace smoothed bigram counts


Laplace-smoothed bigrams


Reconstituted counts


Note big change to counts

C(want to) went from 608 to 238!

P(to|want) from .66 to .26!

Discount d= c*/c

d for “chinese food” =.10!!! A 10x reduction

So in general, Laplace is a blunt instrument

Could use more fine-grained method (add-k)

But Laplace smoothing is not used for N-grams, as we have much better methods

Despite its flaws, Laplace (add-k) is still used to smooth other probabilistic models in NLP, especially

For pilot studies

In domains where the number of zeros isn’t so huge.
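The change in the "want to" bigram can be reproduced with the add-one formulas. The bigram count 608 comes from the slide; the unigram count c(want) = 927 and the vocabulary size V = 1446 are assumptions recalled from the textbook's Berkeley Restaurant Project tables, since the tables themselves are not reproduced in the text here.

```python
# Bigram count from the slide.
c_want_to = 608

# Assumed values (not given in the text above).
c_want = 927   # unigram count of "want"
V = 1446       # vocabulary size

# Unsmoothed maximum likelihood estimate.
p_mle = c_want_to / c_want

# Add-one (Laplace) estimate: add 1 to the bigram count, V to the context count.
p_laplace = (c_want_to + 1) / (c_want + V)

# Reconstituted count: the count that would yield p_laplace without smoothing.
c_star = p_laplace * c_want

# Discount: ratio of the reconstituted count to the original count.
discount = c_star / c_want_to

print(round(p_mle, 2), round(p_laplace, 2), round(c_star), round(discount, 2))
```

Under these assumed counts the bigram loses well over half of its probability mass, which is why add-one is described as a blunt instrument.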


Better discounting algorithms

Intuition used by many smoothing algorithms

Good-Turing

Kneser-Ney

Witten-Bell

Is to use the count of things we’ve seen once to help

estimate the count of things we’ve never seen


Good-Turing: Josh Goodman

intuition

Imagine you are fishing

There are 8 species: carp, perch, whitefish, trout,

salmon, eel, catfish, bass

You have caught

10 carp, 3 perch, 2 whitefish, 1 trout, 1 salmon, 1 eel

= 18 fish

How likely is it that next species is new (i.e. catfish or

bass)

3/18

Assuming so, how likely is it that next species is

trout?

Must be less than 1/18

Slide adapted from Josh Goodman



Good-Turing Intuition

Notation: Nx is the frequency-of-frequency-x

So N10=1, N1=3, etc

To estimate total number of unseen species

Use number of species (words) we’ve seen once

c0* =c1

p0 = N1/N = 3/18

All other estimates are adjusted (down) to give probabilities for unseen

c*(eel) = c*(1) = (1+1) × N2/N1 = 2 × 1/3 = 2/3, so P(eel) = c*(1)/N = (2/3)/18 = 1/27

Slide from Josh Goodman
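The fishing example can be worked through with frequency-of-frequency counts. This is a minimal sketch of the basic Good-Turing re-estimate c* = (c+1) N_{c+1} / N_c; it does not handle the case where N_{c+1} is zero, which a practical implementation would need to smooth.

```python
from collections import Counter
from fractions import Fraction

# Catch so far, from the fishing example.
catch = {"carp": 10, "perch": 3, "whitefish": 2,
         "trout": 1, "salmon": 1, "eel": 1}

N = sum(catch.values())                 # total fish caught
freq_of_freq = Counter(catch.values())  # N_c: number of species seen c times

# Probability mass reserved for unseen species: N_1 / N.
p_unseen = Fraction(freq_of_freq[1], N)

def c_star(c):
    """Basic Good-Turing adjusted count: (c + 1) * N_{c+1} / N_c."""
    return Fraction((c + 1) * freq_of_freq[c + 1], freq_of_freq[c])

# Adjusted probability of a species seen once, such as trout or eel.
p_trout = c_star(1) / N
print(p_unseen, c_star(1), p_trout)
```

The adjusted probability for trout is below the MLE value of 1/18, matching the intuition that seen species must give up some mass to the unseen ones.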


Bigram frequencies of

frequencies and GT re-estimates


Complications

In practice, assume large counts (c>k for some k) are reliable:

That complicates c*, making it:

Also: we assume singleton counts c=1 are unreliable, so treat N-grams

with count of 1 as if they were count=0

Also, need the Nk to be non-zero, so we need to smooth (interpolate)

the Nk counts before computing c* from them


Backoff and Interpolation

Another really useful source of knowledge

If we are estimating:

trigram p(z|xy)

but c(xyz) is zero

Use info from:

Bigram p(z|y)

Or even:

Unigram p(z)

How to combine the trigram/bigram/unigram info?


Backoff versus interpolation

Backoff: use trigram if you have it, otherwise

bigram, otherwise unigram

Interpolation: mix all three


Interpolation

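Simple interpolation

$$\hat{P}(w_n \mid w_{n-2} w_{n-1}) = \lambda_1 P(w_n \mid w_{n-2} w_{n-1}) + \lambda_2 P(w_n \mid w_{n-1}) + \lambda_3 P(w_n), \qquad \sum_i \lambda_i = 1$$

Lambdas conditional on context:

$$\hat{P}(w_n \mid w_{n-2} w_{n-1}) = \lambda_1(w_{n-2}^{n-1})\, P(w_n \mid w_{n-2} w_{n-1}) + \lambda_2(w_{n-2}^{n-1})\, P(w_n \mid w_{n-1}) + \lambda_3(w_{n-2}^{n-1})\, P(w_n)$$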


How to set the lambdas?

Use a held-out corpus

Choose lambdas which maximize the probability of

some held-out data

I.e. fix the N-gram probabilities

Then search for lambda values

That when plugged into previous equation

Give largest probability for held-out set

Can use EM to do this search
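Simple linear interpolation and the held-out search for lambdas can be sketched as follows. The held-out probabilities are made-up numbers for illustration, and a coarse grid search stands in for EM here; it is a simplification, not the method named above.

```python
import math

def interpolate(p_tri, p_bi, p_uni, lambdas):
    """Mix trigram, bigram and unigram estimates with weights summing to 1."""
    l1, l2, l3 = lambdas
    return l1 * p_tri + l2 * p_bi + l3 * p_uni

# Hypothetical held-out events: fixed (trigram, bigram, unigram)
# probabilities that the already-trained N-gram models assign to each word.
held_out = [
    (0.0, 0.2, 0.01),
    (0.5, 0.3, 0.02),
    (0.0, 0.0, 0.005),
    (0.4, 0.1, 0.03),
]

def held_out_log_likelihood(lambdas):
    """Log probability of the held-out data under the interpolated model."""
    return sum(math.log(interpolate(t, b, u, lambdas)) for t, b, u in held_out)

# Candidate weights on a 0.1 grid, all strictly positive and summing to 1.
candidates = [
    (i / 10, j / 10, (10 - i - j) / 10)
    for i in range(1, 9)
    for j in range(1, 10 - i)
]

best = max(candidates, key=held_out_log_likelihood)
print(best, held_out_log_likelihood(best))
```

Keeping every lambda positive guarantees a nonzero probability whenever the unigram estimate is nonzero, so the logarithm is always defined on this data.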


Katz Backoff


Why discounts P* and alpha?

MLE probabilities sum to 1

So if we used MLE probabilities but backed off to

lower order model when MLE prob is zero

We would be adding extra probability mass

And total probability would be greater than 1


GT smoothed bigram probs


Intuition of backoff+discounting

How much probability to assign to all the zero

trigrams?

Use GT or other discounting algorithm to tell us

How to divide that probability mass among different

contexts?

Use the N-1 gram estimates to tell us

What do we do for the unigram words not seen in

training?

Out Of Vocabulary = OOV words


OOV words: <UNK> word

Out Of Vocabulary = OOV words

We don’t use GT smoothing for these

Because GT assumes we know the number of unseen events

Instead: create an unknown word token <UNK>

Training of <UNK> probabilities

– Create a fixed lexicon L of size V

– At text normalization phase, any training word not in L changed to

<UNK>

– Now we train its probabilities like a normal word

At decoding time

– If text input: use <UNK> probabilities for any word not in training


Practical Issues

We do everything in log space

Avoid underflow

(also adding is faster than multiplying)
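The underflow problem is easy to demonstrate: multiplying many small probabilities collapses to zero in floating point, while summing their logs stays well behaved. The probabilities below are arbitrary illustrative values.

```python
import math

# 200 hypothetical word probabilities of 0.001 each.
probs = [1e-3] * 200

# Direct multiplication underflows to 0.0 in double precision.
direct = 1.0
for p in probs:
    direct *= p

# Summing log probabilities keeps the result representable.
log_prob = sum(math.log(p) for p in probs)

print(direct, log_prob)
```

Comparing two hypotheses only requires comparing their log probabilities, so there is usually no need to convert back.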


ARPA format


Language Modeling Toolkits

SRILM

CMU-Cambridge LM Toolkit


Google N-Gram Release


Google N-Gram Release

serve as the incoming 92

serve as the incubator 99

serve as the independent 794

serve as the index 223

serve as the indication 72

serve as the indicator 120

serve as the indicators 45

serve as the indispensable 111

serve as the indispensible 40

serve as the individual 234


Advanced LM stuff

Current best smoothing algorithm

Kneser-Ney smoothing

Other stuff

Variable-length n-grams

Class-based n-grams

– Clustering

– Hand-built classes

Cache LMs

Topic-based LMs

Sentence mixture models

Skipping LMs

Parser-based LMs


Summary

LM

N-grams

Discounting: Good-Turing

Katz backoff with Good-Turing discounting

Interpolation

Unknown words

Evaluation:

– Entropy, Entropy Rate, Cross Entropy

– Perplexity

Advanced LM algorithms
