Hidden Markov Models

L. R. Rabiner and B. H. Juang, An Introduction to Hidden Markov Models

Ara V. Nefian and Monson H. Hayes III, Face Detection and Recognition Using Hidden Markov Models

戴玉書

Outline

Markov Chain & Markov Models

Hidden Markov Models

HMM Problem

-Evaluation

-Decoding

-Learning

Application

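Markov chain property:

The probability of each subsequent state depends only on the previous state:

$$P(s_{i_k} \mid s_{i_1}, s_{i_2}, \ldots, s_{i_{k-1}}) = P(s_{i_k} \mid s_{i_{k-1}})$$

$$P(s_{i_1}, s_{i_2}, \ldots, s_{i_k}) = P(s_{i_k} \mid s_{i_1}, s_{i_2}, \ldots, s_{i_{k-1}}) \, P(s_{i_1}, s_{i_2}, \ldots, s_{i_{k-1}})$$

$$= P(s_{i_k} \mid s_{i_{k-1}}) \, P(s_{i_1}, s_{i_2}, \ldots, s_{i_{k-1}})$$

$$= P(s_{i_k} \mid s_{i_{k-1}}) \, P(s_{i_{k-1}} \mid s_{i_{k-2}}) \cdots P(s_{i_2} \mid s_{i_1}) \, P(s_{i_1})$$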
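As a rough illustration of the factorization above, the probability of a state sequence is the initial probability times a product of one-step transition probabilities. The two-state chain and all of its numbers are hypothetical and are not taken from the slides.

```python
# Hypothetical two-state Markov chain; the probabilities are illustrative only.
initial = {"s1": 0.6, "s2": 0.4}              # P(s_i1)
transition = {                                 # transition[a][b] = P(b | a)
    "s1": {"s1": 0.7, "s2": 0.3},
    "s2": {"s1": 0.2, "s2": 0.8},
}


def sequence_probability(states):
    """P(s_i1, ..., s_ik) = P(s_i1) * product over t of P(s_it | s_it-1)."""
    prob = initial[states[0]]
    for prev, cur in zip(states, states[1:]):
        prob *= transition[prev][cur]
    return prob


p = sequence_probability(["s1", "s1", "s2", "s2"])
print(p)  # 0.6 * 0.7 * 0.3 * 0.8
```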

Markov Models

[Figure: state-transition diagram of a Markov model with three states]

Hidden Markov Models

A hidden Markov model applies when the states themselves are not fully known, but an observation is available at each state.

N: the number of states, $\{s_1, s_2, \ldots, s_N\}$

M: the number of observables, $\{v_1, v_2, \ldots, v_M\}$

[Figure: chain of hidden states $q_1, q_2, q_3, q_4, \ldots$, each emitting an observation $o_1, o_2, o_3, o_4, \ldots$]

Hidden Markov Models

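[Figure: example HMM with a set of states and a set of observables, both drawn as icons in the original slide; the diagram is labeled with the probabilities 0.1, 0.3, 0.9, 0.7, 0.8, 0.2]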

Hidden Markov Models

$M = (A, B, \pi)$

$A = (a_{ij}), \quad a_{ij} = P(s_j \mid s_i)$

$B = (b_i(v_m)), \quad b_i(v_m) = P(v_m \mid s_i)$

$\pi$ = initial probabilities: $\pi = (\pi_i), \quad \pi_i = P(s_i)$
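One possible way to hold the triple of transition matrix, emission matrix, and initial distribution in code is sketched below. The class name `HMM`, the `validate` helper, and the numbers are assumptions made for illustration; states and observables are indexed from 0.

```python
from dataclasses import dataclass


@dataclass
class HMM:
    A: list    # A[i][j] = P(s_j | s_i), an N x N table
    B: list    # B[i][m] = P(v_m | s_i), an N x M table
    pi: list   # pi[i] = P(q_1 = s_i), length N

    def validate(self, tol=1e-9):
        """Return True if pi and every row of A and B sum to 1."""
        rows = [self.pi] + list(self.A) + list(self.B)
        return all(abs(sum(row) - 1.0) < tol for row in rows)


# Hypothetical model with N = 2 states and M = 2 observables.
model = HMM(
    A=[[0.7, 0.3], [0.4, 0.6]],
    B=[[0.9, 0.1], [0.2, 0.8]],
    pi=[0.6, 0.4],
)
print(model.validate())
```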

Evaluation

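Determine the probability that a particular sequence of symbols O was generated by the model M.

$$P(Q \mid M) = \pi_{q_1} \prod_{t=1}^{T-1} a_{q_t, q_{t+1}} = \pi_{q_1} \, a_{q_1, q_2} \, a_{q_2, q_3} \cdots a_{q_{T-1}, q_T}$$

$$P(O \mid Q, M) = \prod_{t=1}^{T} P(o_t \mid q_t, M) = b_{q_1}(o_1) \, b_{q_2}(o_2) \cdots b_{q_T}(o_T)$$

$$P(O \mid M) = \sum_{\text{all } Q} P(O \mid Q, M) \, P(Q \mid M)$$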
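The sum over all state sequences can be written out directly, which makes the cost visible: there are N^T sequences Q to enumerate. The sketch below assumes a hypothetical 2-state, 2-symbol model with 0-based indices; it is only practical for very short sequences.

```python
from itertools import product

# Hypothetical model parameters (not from the slides).
A = [[0.7, 0.3], [0.4, 0.6]]    # A[i][j] = P(s_j | s_i)
B = [[0.9, 0.1], [0.2, 0.8]]    # B[i][m] = P(v_m | s_i)
pi = [0.6, 0.4]                 # pi[i] = P(q_1 = s_i)


def path_prob(Q):
    """P(Q | M): initial probability times the chain of transitions."""
    p = pi[Q[0]]
    for t in range(len(Q) - 1):
        p *= A[Q[t]][Q[t + 1]]
    return p


def obs_given_path(O, Q):
    """P(O | Q, M): product of emission probabilities along the path."""
    p = 1.0
    for q, o in zip(Q, O):
        p *= B[q][o]
    return p


def evaluate_brute_force(O):
    """P(O | M): sum over all N**T state sequences."""
    N = len(pi)
    return sum(obs_given_path(O, Q) * path_prob(Q)
               for Q in product(range(N), repeat=len(O)))


likelihood = evaluate_brute_force([0, 1])
print(likelihood)
```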

Forward recursion

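$$\alpha_t(i) = P(o_1, \ldots, o_t, q_t = s_i \mid M)$$

Initialization:

$$\alpha_1(i) = \pi_i \, b_i(o_1)$$

Forward recursion:

$$\alpha_{t+1}(j) = \Big[ \sum_{i=1}^{N} \alpha_t(i) \, a_{ij} \Big] b_j(o_{t+1})$$

Termination:

$$P(O \mid M) = \sum_{i=1}^{N} P(o_1, o_2, \ldots, o_T, q_T = s_i \mid M) = \sum_{i=1}^{N} \alpha_T(i)$$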
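The three steps above (initialization, recursion, termination) can be sketched as a short function. The model parameters are hypothetical and indices are 0-based; the work is on the order of N² per time step rather than N^T overall.

```python
def forward(A, B, pi, O):
    """Return (alpha, P(O | M)) where alpha[t][i] = P(o_1..o_t, q_t = s_i | M)."""
    N = len(pi)
    # Initialization: alpha_1(i) = pi_i * b_i(o_1)
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    # Recursion: alpha_{t+1}(j) = [sum_i alpha_t(i) * a_ij] * b_j(o_{t+1})
    for t in range(1, len(O)):
        prev = alpha[-1]
        alpha.append([sum(prev[i] * A[i][j] for i in range(N)) * B[j][O[t]]
                      for j in range(N)])
    # Termination: P(O | M) = sum_i alpha_T(i)
    return alpha, sum(alpha[-1])


# Hypothetical model parameters (not from the slides).
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
pi = [0.6, 0.4]

alpha, likelihood = forward(A, B, pi, [0, 1])
print(likelihood)
```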

Backward recursion

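$$\beta_t(i) = P(o_{t+1}, o_{t+2}, \ldots, o_T \mid q_t = s_i, M)$$

Initialization:

$$\beta_T(i) = 1$$

Backward recursion:

$$\beta_t(i) = \sum_{j=1}^{N} a_{ij} \, b_j(o_{t+1}) \, \beta_{t+1}(j)$$

Termination:

$$P(O \mid M) = \sum_{i=1}^{N} P(o_1, o_2, \ldots, o_T \mid q_1 = s_i) \, P(q_1 = s_i) = \sum_{i=1}^{N} \beta_1(i) \, b_i(o_1) \, \pi_i$$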
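A matching sketch of the backward pass follows, again with hypothetical parameters and 0-based indices. On the same model and observation sequence it should return the same likelihood as the forward pass, which is a convenient sanity check.

```python
def backward(A, B, pi, O):
    """Return (beta, P(O | M)) where beta[t][i] = P(o_{t+1}..o_T | q_t = s_i, M)."""
    N, T = len(pi), len(O)
    # Initialization: beta_T(i) = 1
    beta = [[1.0] * N for _ in range(T)]
    # Recursion, moving from t = T-1 down to t = 1 (0-based: T-2 down to 0)
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(A[i][j] * B[j][O[t + 1]] * beta[t + 1][j]
                             for j in range(N))
    # Termination: P(O | M) = sum_i beta_1(i) * b_i(o_1) * pi_i
    likelihood = sum(pi[i] * B[i][O[0]] * beta[0][i] for i in range(N))
    return beta, likelihood


# Hypothetical model parameters (not from the slides).
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
pi = [0.6, 0.4]

beta, likelihood = backward(A, B, pi, [0, 1])
print(likelihood)
```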

Decoding

Given a sequence of symbols O, determine the most likely sequence of hidden states Q that led to the observations.

We want to find the state sequence Q that maximizes $P(Q \mid o_1, o_2, \ldots, o_T)$.

Viterbi algorithm

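[Figure: one trellis step from $q_{t-1}$ to $q_t$; the states $s_1, \ldots, s_i, \ldots, s_N$ at time $t-1$ connect to state $s_j$ at time $t$ with transition probabilities $a_{1j}, a_{ij}, a_{Nj}$]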

General idea: if the best path ending in $q_t = s_j$ goes through $q_{t-1} = s_i$, then it must coincide with the best path ending in $q_{t-1} = s_i$.

Viterbi algorithm

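$$\delta_t(i) = \max_{q_1, \ldots, q_{t-1}} P(q_1, \ldots, q_{t-1}, q_t = s_i, o_1, o_2, \ldots, o_t)$$

Initialization:

$$\delta_1(i) = P(q_1 = s_i, o_1) = \pi_i \, b_i(o_1)$$

Forward recursion:

$$\delta_t(j) = \max_i \big[ \delta_{t-1}(i) \, a_{ij} \, b_j(o_t) \big]$$

Termination:

$$\max_i \big[ \delta_T(i) \big]$$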
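The recursion above yields only the probability of the best path; to recover the path itself, an implementation typically also stores, for each state and time, which predecessor achieved the maximum. The sketch below adds such back-pointers (the `back` table is an addition for illustration, not something shown on the slides) and uses hypothetical parameters with 0-based indices.

```python
def viterbi(A, B, pi, O):
    """Return (best state path, its joint probability with O)."""
    N, T = len(pi), len(O)
    # Initialization: delta_1(i) = pi_i * b_i(o_1)
    delta = [[pi[i] * B[i][O[0]] for i in range(N)]]
    back = []  # back[t-1][j] = best predecessor of state j at time t
    for t in range(1, T):
        row, ptr = [], []
        for j in range(N):
            # Recursion: pick the predecessor i maximizing delta_{t-1}(i) * a_ij
            best_i = max(range(N), key=lambda i: delta[-1][i] * A[i][j])
            row.append(delta[-1][best_i] * A[best_i][j] * B[j][O[t]])
            ptr.append(best_i)
        delta.append(row)
        back.append(ptr)
    # Termination: best final state, then follow back-pointers
    last = max(range(N), key=lambda i: delta[-1][i])
    path = [last]
    for ptr in reversed(back):
        path.append(ptr[path[-1]])
    path.reverse()
    return path, delta[-1][last]


# Hypothetical model parameters (not from the slides).
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
pi = [0.6, 0.4]

path, prob = viterbi(A, B, pi, [0, 1, 1])
print(path, prob)
```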

Learning problem

Given a coarse structure of the model, determine the HMM parameters $M = (A, B, \pi)$ that best fit the training data.

Determine these parameters:

$$a_{ij} = P(s_j \mid s_i) = \frac{\text{Number of transitions from state } s_i \text{ to state } s_j}{\text{Number of transitions out of state } s_i}$$

$$b_i(v_m) = P(v_m \mid s_i) = \frac{\text{Number of times observation } v_m \text{ occurs in state } s_i}{\text{Number of times in state } s_i}$$

$$\pi_i = \text{Expected frequency in state } s_i \text{ at time } t = 1$$
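To make the counting ratios concrete, the sketch below applies them to the simplified case where the state sequence is known for every training example. That is an assumption made purely for illustration: in an HMM the states are hidden, so these counts are not directly available and have to be replaced by expected counts. The function name and the toy data are hypothetical.

```python
from collections import Counter


def estimate_from_labeled(sequences, N, M):
    """Estimate (A, B, pi) by counting, given (states, observations) pairs."""
    trans, emit, start = Counter(), Counter(), Counter()
    for states, obs in sequences:
        start[states[0]] += 1
        for a, b in zip(states, states[1:]):
            trans[a, b] += 1          # transition s_a -> s_b
        for s, o in zip(states, obs):
            emit[s, o] += 1           # observation v_o seen in state s

    def normalise(counts, i, width):
        total = sum(counts[i, k] for k in range(width))
        return [counts[i, k] / total if total else 0.0 for k in range(width)]

    A = [normalise(trans, i, N) for i in range(N)]
    B = [normalise(emit, i, M) for i in range(N)]
    pi = [start[i] / len(sequences) for i in range(N)]
    return A, B, pi


# Toy labeled data: two sequences over 2 states and 2 observables.
data = [([0, 0, 1], [0, 0, 1]), ([0, 1, 1], [0, 1, 1])]
A, B, pi = estimate_from_labeled(data, N=2, M=2)
print(A, B, pi)
```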

Baum-Welch algorithm

Define the variable $\xi_t(i, j)$ as the probability of being in state $s_i$ at time $t$ and in state $s_j$ at time $t+1$, given the observation sequence $o_1, o_2, \ldots, o_T$.

Initialise: $M_0$

$$\xi_t(i, j) = P(q_t = s_i, q_{t+1} = s_j \mid o_1, o_2, \ldots, o_T)$$

$$= \frac{P(q_t = s_i, o_1, o_2, \ldots, o_t) \, a_{ij} \, b_j(o_{t+1}) \, P(o_{t+2}, \ldots, o_T \mid q_{t+1} = s_j)}{P(o_1, o_2, \ldots, o_T)}$$

$$= \frac{\alpha_t(i) \, a_{ij} \, b_j(o_{t+1}) \, \beta_{t+1}(j)}{\sum_{i} \sum_{j} \alpha_t(i) \, a_{ij} \, b_j(o_{t+1}) \, \beta_{t+1}(j)}$$

Baum-Welch algorithm

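Define the variable $\gamma_t(i)$ as the probability of being in state $s_i$ at time $t$, given the observation sequence $o_1, o_2, \ldots, o_T$.

$$\gamma_t(i) = \sum_{j=1}^{N} \xi_t(i, j)$$

$$a_{ij} = \frac{\sum_{t=1}^{T-1} \xi_t(i, j)}{\sum_{t=1}^{T-1} \gamma_t(i)}, \qquad b_j(v_m) = \frac{\sum_{t=1,\, o_t = v_m}^{T-1} \gamma_t(j)}{\sum_{t=1}^{T-1} \gamma_t(j)}, \qquad \pi_i = \gamma_1(i)$$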

Outline

Markov Chain & Markov Models

Hidden Markov Models

HMM Problem

-Evaluation problem

-Decoding problem

-Learning problem

Application

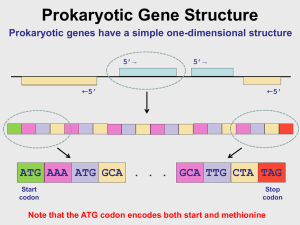

Example 1 - character recognition

The structure of hidden states: [Figure: three hidden states $s_1$, $s_2$, $s_3$]

Observation = number of islands in the vertical slice

After character image segmentation, the following sequence of island counts was observed in 4 slices: {1, 3, 2, 1}
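One plausible way to use such a sequence for recognition is to score it under one HMM per candidate character and pick the model with the highest likelihood. The sketch below does this for {1, 3, 2, 1}. The two candidate models, their names, the left-to-right transition structure, and every probability are invented for illustration and do not come from the slides.

```python
def likelihood(A, B, pi, O):
    """P(O | M) via the forward recursion, keeping only the current alpha row."""
    N = len(pi)
    alpha = [pi[i] * B[i][O[0]] for i in range(N)]
    for o in O[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(N)) * B[j][o]
                 for j in range(N)]
    return sum(alpha)


# Hypothetical left-to-right structure over three hidden states s1 -> s2 -> s3.
A = [[0.5, 0.5, 0.0],
     [0.0, 0.5, 0.5],
     [0.0, 0.0, 1.0]]
pi = [1.0, 0.0, 0.0]

# Hypothetical emission tables: B[i][k] = P(k + 1 islands | state s_{i+1}).
models = {
    "char_X": [[0.8, 0.1, 0.1], [0.1, 0.2, 0.7], [0.7, 0.2, 0.1]],
    "char_Y": [[0.1, 0.8, 0.1], [0.6, 0.3, 0.1], [0.2, 0.2, 0.6]],
}

islands = [1, 3, 2, 1]              # observed island counts in 4 slices
O = [k - 1 for k in islands]        # shift to 0-based observable indices

scores = {name: likelihood(A, B, pi, O) for name, B in models.items()}
best = max(scores, key=scores.get)
print(scores, best)
```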

Example 2 - face detection & recognition

The structure of hidden states: [figure not recovered]

Example 2 - face detection

A set of face images is used to train one HMM.

N = 6 states

Images: 48, Training: 9, Correct detection: 90%, Pixels: 60×90

Example 2 - face recognition

Each individual in the database is represented by an HMM face model.

A set of images representing different instances of the same face is used to train each HMM.

N = 6 states

Images: 400, Training: half, Individuals: 40, Pixels: 92×112