The Poisson process

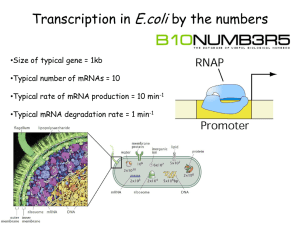

CIS 2033, based on Dekking et al., A Modern Introduction to Probability and Statistics, 2007
Instructor: Longin Jan Latecki

C12: The Poisson process

From the Baron book: The number of rare events occurring within a fixed period of time has a Poisson distribution. Essentially, "rare" means that two such events are extremely unlikely to occur simultaneously or within a very short period of time. Arrivals of jobs, telephone calls, e-mail messages, traffic accidents, network blackouts, virus attacks, errors in software, floods, and earthquakes are examples of rare events. The distribution bears the name of the famous French mathematician Siméon-Denis Poisson (1781–1840).

12.2 – Poisson Distribution

Definition: A discrete RV X has a Poisson distribution with parameter µ, where µ > 0, if its probability mass function is given by

$$P(k) = P(X = k) = \frac{\mu^k}{k!} e^{-\mu} \quad \text{for } k = 0, 1, 2, \ldots$$

where µ is the expected number of rare events (or number of successes) occurring in the time interval [0, t], which is fixed for X.

We can express µ = tλ, where t is the length of the interval, e.g., the number of minutes. Hence λ = µ / t = number of events per time unit; loosely, λ plays the role of a probability of success per (short) unit of time. λ is also called the intensity or frequency of the Poisson process.

We denote this distribution Pois(µ) = Pois(tλ).
Expectation: E[X] = µ = tλ. Variance: Var(X) = µ = tλ.

Dekking 12.6: A certain brand of copper wire has flaws about every 40 centimeters. Model the locations of the flaws as a Poisson process. What is the probability of two flaws in 1 meter of wire?

The expected number of flaws in 1 meter is 100/40 = 2.5, and hence the number of flaws X has a Pois(2.5) distribution. The answer is P(X = 2):

$$P(X = 2) = \frac{(2.5)^2}{2!} e^{-2.5} \approx 0.2565, \quad \text{since } P(X = k) = \frac{\mu^k}{k!} e^{-\mu}.$$

Let X1, X2, … be arrival times such that the number of arrivals X in a given time interval [0, t] has a Poisson distribution Pois(tλ):

$$P(X = k) = \frac{(\lambda t)^k}{k!} e^{-\lambda t}$$

The differences $T_i = X_i - X_{i-1}$ are called inter-arrival times or wait times.
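The copper wire computation (Dekking 12.6) can be checked numerically. The following is a minimal sketch in Python using only the standard library; the function name `poisson_pmf` is illustrative and does not come from the slides.

```python
import math

def poisson_pmf(k: int, mu: float) -> float:
    """P(X = k) for X ~ Pois(mu), i.e. mu^k / k! * exp(-mu)."""
    return mu**k / math.factorial(k) * math.exp(-mu)

# Dekking 12.6: a flaw about every 40 cm, so 1 m of wire gives mu = 100/40 = 2.5
mu = 100 / 40
p_two_flaws = poisson_pmf(2, mu)
print(round(p_two_flaws, 4))  # about 0.2565

# Sanity checks on a truncated support (terms beyond k = 60 are negligible here):
# the probabilities sum to 1, and mean and variance both equal mu.
ks = range(60)
total = sum(poisson_pmf(k, mu) for k in ks)
mean = sum(k * poisson_pmf(k, mu) for k in ks)
variance = sum((k - mean) ** 2 * poisson_pmf(k, mu) for k in ks)
print(total, mean, variance)
```

The truncation at k = 60 is an assumption that is safe for small µ such as 2.5; a larger µ would need a longer range.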
The inter-arrival times $T_1 = X_1$, $T_2 = X_2 - X_1$, $T_3 = X_3 - X_2$, … are independent RVs, each with an Exp(λ) distribution. Hence the expected inter-arrival time is $E(T_i) = 1/\lambda$.

Since for Poisson λ = µ / t = (number of events) / (time unit) = intensity, we have for the exponential distribution

$E(T_i)$ = 1/λ = t / µ = (time unit) / (number of events) = wait time.

Quick exercise 12.2: We model the arrivals of email messages at a server as a Poisson process. Suppose that on average 330 messages arrive per minute. What would you choose for the intensity λ in messages per second? What is the expectation of the interarrival time?

Because there are 60 seconds in a minute, we have

λ = µ / t = (number of events) / (time unit) = 330 / 60 = 5.5 messages per second.

Since the interarrival times have an Exp(λ) distribution, the expected time between messages is 1/λ ≈ 0.18 second, i.e.,

E(T) = 1/λ = t / µ = (time unit) / (number of events) = 60/330 ≈ 0.18 second.

Let X1, X2, … be arrival times such that the number of arrivals X in a given time interval [0, t] has a Poisson distribution Pois(λt):

$$P(X = k) = \frac{(\lambda t)^k}{k!} e^{-\lambda t}$$

Each arrival time $X_i$ is a random variable with a Gam(i, λ) distribution, i.e., a gamma distribution with α = i:

$$f_{X_i}(x) = \frac{\lambda (\lambda x)^{i-1} e^{-\lambda x}}{(i-1)!} \quad \text{for } x \ge 0.$$

We also observe that Gam(1, λ) = Exp(λ): for i = 1 the density reduces to $\lambda e^{-\lambda x}$.

a) It is reasonable to estimate λ with (nr. of cars)/(total time in sec.) = 0.192.
b) 19/120 = 0.1583. If λ = 0.192, then µ = 10λ = 1.92. Hence P(K = 0) = $e^{-0.192 \cdot 10}$ ≈ 0.147.
c) Again µ = 10λ ≈ 1.92, and we have P(K = 10) = $\frac{\mu^{10}}{10!} e^{-\mu}$ ≈ 2.71 × 10⁻⁵. (This value appears to use the unrounded estimate of λ; with µ = 1.92 exactly the result is about 2.75 × 10⁻⁵.)

12.2 – Random arrivals

Example: Telephone call arrival times. Calls arrive at random times X1, X2, X3, …

Homogeneity (aka weak stationarity): the rate λ at which arrivals occur is constant over time. In a subinterval of length u, the expected number of telephone calls is λu.

Independence: The numbers of arrivals in disjoint time intervals are independent random variables.
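The relation between Exp(λ) inter-arrival times and Poisson counts can be explored by simulation. The sketch below is an illustration, not part of the slides: it uses the rate λ = 5.5 messages per second from Quick exercise 12.2, while the time horizon, the seed, and the helper name `arrival_times` are arbitrary assumptions. It builds arrival times by summing independent exponential waits and then compares the empirical mean wait with 1/λ, and the mean and variance of the per-second counts with λ.

```python
import random

def arrival_times(lam: float, horizon: float, rng: random.Random) -> list:
    """Arrival times in [0, horizon), built by summing independent Exp(lam) waits."""
    times = []
    t = rng.expovariate(lam)
    while t < horizon:
        times.append(t)
        t += rng.expovariate(lam)
    return times

rng = random.Random(2033)   # arbitrary seed, only for reproducibility
lam = 5.5                   # messages per second (Quick exercise 12.2)
horizon = 10_000.0          # seconds of simulated time (assumed value)

times = arrival_times(lam, horizon, rng)

# Inter-arrival times T_1 = X_1, T_i = X_i - X_{i-1}
gaps = [b - a for a, b in zip([0.0] + times, times)]
mean_gap = sum(gaps) / len(gaps)            # should be close to 1/lam

# Number of arrivals in each one-second interval [j, j+1)
counts = [0] * int(horizon)
for t in times:
    counts[int(t)] += 1
mean_count = sum(counts) / len(counts)      # should be close to lam
var_count = sum((c - mean_count) ** 2 for c in counts) / (len(counts) - 1)

print(mean_gap, 1 / lam)
print(mean_count, var_count)                # both should be near lam for Poisson counts
```

The printed values vary with the seed; only their closeness to 1/λ and λ is the point of the experiment.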
N(I) = total number of calls in an interval I
$N_t$ = N([0, t])
E[$N_t$] = tλ

Divide the interval [0, t] into n intervals, each of size t/n.

When n is large enough, every interval $I_{j,n} = ((j-1)t/n,\ jt/n]$ contains either 0 or 1 arrivals.

Arrival: For such a large n (n > λt), let $R_j$ = number of arrivals in the time interval $I_{j,n}$, so $R_j$ = 0 or 1. $R_j$ has a Ber(p) distribution for some p.

Recall (for a Bernoulli random variable): E[$R_j$] = 0 · (1 – p) + 1 · p = p.

By the homogeneity assumption, for each j

p = λ · (length of $I_{j,n}$) = λt/n.

Total number of calls: $N_t = R_1 + R_2 + \ldots + R_n$.

By the independence assumption, the $R_j$ are independent random variables, so $N_t$ has a Bin(n, p) distribution with p = λt/n, hence λ = np/t.

When n goes to infinity, Bin(n, p) with p = λt/n converges to the Poisson distribution Pois(λt):

$$\lim_{n \to \infty} \binom{n}{k} \left(\frac{\lambda t}{n}\right)^k \left(1 - \frac{\lambda t}{n}\right)^{n-k} = \frac{(\lambda t)^k}{k!} e^{-\lambda t}$$

Example from the Baron book: Example 3.23. Baron uses λ for µ, hence in Baron's notation λ = np, where we have Bin(n, p). In the notation used here, µ = np and

$$P(X = k) = \frac{\mu^k}{k!} e^{-\mu}$$
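The convergence of Bin(n, p) with p = λt/n to Pois(λt) can be observed numerically. The following sketch is only an illustration: the values λ = 0.5, t = 5 and k = 2 are hypothetical choices (giving µ = 2.5), and the function names are not from the slides. It prints the binomial and Poisson probabilities for growing n and records the absolute error.

```python
import math

def binom_pmf(k: int, n: int, p: float) -> float:
    """P(N = k) for N ~ Bin(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k: int, mu: float) -> float:
    """P(X = k) for X ~ Pois(mu)."""
    return mu**k / math.factorial(k) * math.exp(-mu)

lam, t, k = 0.5, 5.0, 2      # hypothetical rate, interval length and count
mu = lam * t                 # expected number of arrivals in [0, t]

errors = []
for n in (10, 100, 1000, 10000):
    p = lam * t / n          # success probability in each subinterval of length t/n
    b = binom_pmf(k, n, p)
    errors.append(abs(b - poisson_pmf(k, mu)))
    print(n, b, poisson_pmf(k, mu))

# The gap between the two probabilities shrinks as n grows.
print(errors)
```

Each n must satisfy n > λt so that p = λt/n is a valid probability, which matches the condition stated for the subdivision of [0, t].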