multicollinearity


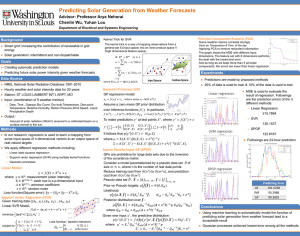

Multicollinearity Overview

• This set of slides
  – What is multicollinearity?
  – What are its effects on regression analyses?
• Next set of slides
  – How to detect multicollinearity?
  – How to reduce some types of multicollinearity?

Multicollinearity

• Multicollinearity exists when two or more of the predictors in a regression model are moderately or highly correlated.
• Regression analyses most often take place on data obtained from observational studies.
• In observational studies, multicollinearity happens more often than not.

Types of multicollinearity

• Structural multicollinearity – a mathematical artifact caused by creating new predictors from other predictors, such as creating the predictor x² from the predictor x.
• Sample-based multicollinearity – a result of a poorly designed experiment, reliance on purely observational data, or the inability to manipulate the system on which you collect the data.

Example

[Figure: matrix plot of BP, Age, Weight, BSA, Duration, Pulse, and Stress]

n = 20 hypertensive individuals
p - 1 = 6 predictor variables

Example

Pairwise correlations:

| | BP | Age | Weight | BSA | Duration | Pulse |
|---|---|---|---|---|---|---|
| Age | 0.659 | | | | | |
| Weight | 0.950 | 0.407 | | | | |
| BSA | 0.866 | 0.378 | 0.875 | | | |
| Duration | 0.293 | 0.344 | 0.201 | 0.131 | | |
| Pulse | 0.721 | 0.619 | 0.659 | 0.465 | 0.402 | |
| Stress | 0.164 | 0.368 | 0.034 | 0.018 | 0.312 | 0.506 |

Blood pressure (BP) is the response.

What is the effect on regression analyses if the predictors are perfectly uncorrelated?

Example

| x1 | x2 | y |
|---|---|---|
| 2 | 5 | 52 |
| 2 | 5 | 43 |
| 2 | 7 | 49 |
| 2 | 7 | 46 |
| 4 | 5 | 50 |
| 4 | 5 | 48 |
| 4 | 7 | 44 |
| 4 | 7 | 43 |

Pearson correlation of x1 and x2 = 0.000

Example

[Figure: matrix plot of y, x1, and x2]

Regress y on x1

The regression equation is y = 48.8 - 0.63 x1

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 48.750 | 4.025 | 12.11 | 0.000 |
| x1 | -0.625 | 1.273 | -0.49 | 0.641 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 3.13 | 3.13 | 0.24 | 0.641 |
| Error | 6 | 77.75 | 12.96 | | |
| Total | 7 | 80.88 | | | |

Regress y on x2

The regression equation is y = 55.1 - 1.38 x2

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 55.125 | 7.119 | 7.74 | 0.000 |
| x2 | -1.375 | 1.170 | -1.17 | 0.285 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 15.13 | 15.13 | 1.38 | 0.285 |
| Error | 6 | 65.75 | 10.96 | | |
| Total | 7 | 80.88 | | | |

Regress y on x1 and x2

The regression equation is y = 57.0 - 0.63 x1 - 1.38 x2

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 57.000 | 8.486 | 6.72 | 0.001 |
| x1 | -0.625 | 1.251 | -0.50 | 0.639 |
| x2 | -1.375 | 1.251 | -1.10 | 0.322 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 18.25 | 9.13 | 0.73 | 0.528 |
| Error | 5 | 62.63 | 12.53 | | |
| Total | 7 | 80.88 | | | |

| Source | DF | Seq SS |
|---|---|---|
| x1 | 1 | 3.13 |
| x2 | 1 | 15.13 |

Regress y on x2 and x1

The regression equation is y = 57.0 - 1.38 x2 - 0.63 x1

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 57.000 | 8.486 | 6.72 | 0.001 |
| x2 | -1.375 | 1.251 | -1.10 | 0.322 |
| x1 | -0.625 | 1.251 | -0.50 | 0.639 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 18.25 | 9.13 | 0.73 | 0.528 |
| Error | 5 | 62.63 | 12.53 | | |
| Total | 7 | 80.88 | | | |

| Source | DF | Seq SS |
|---|---|---|
| x2 | 1 | 15.13 |
| x1 | 1 | 3.13 |

Summary of results

| Model | b1 | se(b1) | b2 | se(b2) | Seq SS |
|---|---|---|---|---|---|
| x1 only | -0.625 | 1.273 | --- | --- | SSR(X1) = 3.13 |
| x2 only | --- | --- | -1.375 | 1.170 | SSR(X2) = 15.13 |
| x1, x2 (in order) | -0.625 | 1.251 | -1.375 | 1.251 | SSR(X2\|X1) = 15.13 |
| x2, x1 (in order) | -0.625 | 1.251 | -1.375 | 1.251 | SSR(X1\|X2) = 3.13 |

If predictors are perfectly uncorrelated, then…

• You get the same slope estimates regardless of the first-order regression model used.
• That is, the effect on the response ascribed to a predictor doesn’t depend on the other predictors in the model.

If predictors are perfectly uncorrelated, then…

• The sum of squares SSR(X1) is the same as the sequential sum of squares SSR(X1|X2).
• The sum of squares SSR(X2) is the same as the sequential sum of squares SSR(X2|X1).
• That is, the marginal contribution of one predictor variable in reducing the error sum of squares doesn’t depend on the other predictors in the model.

Do we see similar results for “real data” with nearly uncorrelated predictors?

Example

In the blood pressure data, Stress and BSA are nearly uncorrelated (r = 0.018).

Example

[Figure: matrix plot of BP, BSA, and Stress]

Regress BP on x1 = Stress

The regression equation is BP = 113 + 0.0240 Stress

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 112.720 | 2.193 | 51.39 | 0.000 |
| Stress | 0.02399 | 0.03404 | 0.70 | 0.490 |

S = 5.502   R-Sq = 2.7%   R-Sq(adj) = 0.0%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 15.04 | 15.04 | 0.50 | 0.490 |
| Error | 18 | 544.96 | 30.28 | | |
| Total | 19 | 560.00 | | | |

Regress BP on x2 = BSA

The regression equation is BP = 45.2 + 34.4 BSA

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 45.183 | 9.392 | 4.81 | 0.000 |
| BSA | 34.443 | 4.690 | 7.34 | 0.000 |

S = 2.790   R-Sq = 75.0%   R-Sq(adj) = 73.6%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 419.86 | 419.86 | 53.93 | 0.000 |
| Error | 18 | 140.14 | 7.79 | | |
| Total | 19 | 560.00 | | | |

Regress BP on x1 = Stress and x2 = BSA

The regression equation is BP = 44.2 + 0.0217 Stress + 34.3 BSA

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 44.245 | 9.261 | 4.78 | 0.000 |
| Stress | 0.02166 | 0.01697 | 1.28 | 0.219 |
| BSA | 34.334 | 4.611 | 7.45 | 0.000 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 432.12 | 216.06 | 28.72 | 0.000 |
| Error | 17 | 127.88 | 7.52 | | |
| Total | 19 | 560.00 | | | |

| Source | DF | Seq SS |
|---|---|---|
| Stress | 1 | 15.04 |
| BSA | 1 | 417.07 |

Regress BP on x2 = BSA and x1 = Stress

The regression equation is BP = 44.2 + 34.3 BSA + 0.0217 Stress

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 44.245 | 9.261 | 4.78 | 0.000 |
| BSA | 34.334 | 4.611 | 7.45 | 0.000 |
| Stress | 0.02166 | 0.01697 | 1.28 | 0.219 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 432.12 | 216.06 | 28.72 | 0.000 |
| Error | 17 | 127.88 | 7.52 | | |
| Total | 19 | 560.00 | | | |

| Source | DF | Seq SS |
|---|---|---|
| BSA | 1 | 419.86 |
| Stress | 1 | 12.26 |

Summary of results

| Model | b1 | se(b1) | b2 | se(b2) | Seq SS |
|---|---|---|---|---|---|
| x1 only | 0.0240 | 0.0340 | --- | --- | SSR(X1) = 15.04 |
| x2 only | --- | --- | 34.443 | 4.690 | SSR(X2) = 419.86 |
| x1, x2 (in order) | 0.0217 | 0.0170 | 34.334 | 4.611 | SSR(X2\|X1) = 417.07 |
| x2, x1 (in order) | 0.0217 | 0.0170 | 34.334 | 4.611 | SSR(X1\|X2) = 12.26 |

If predictors are nearly uncorrelated, then…

• You get similar slope estimates regardless of the first-order regression model used.
• The sum of squares SSR(X1) is similar to the sequential sum of squares SSR(X1|X2).
• The sum of squares SSR(X2) is similar to the sequential sum of squares SSR(X2|X1).

What happens if the predictor variables are highly correlated?

Example

In the blood pressure data, Weight and BSA are highly correlated (r = 0.875).

Example

[Figure: matrix plot of BP, BSA, and Weight]

Regress BP on x1 = Weight

The regression equation is BP = 2.21 + 1.20 Weight

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 2.205 | 8.663 | 0.25 | 0.802 |
| Weight | 1.20093 | 0.09297 | 12.92 | 0.000 |

S = 1.740   R-Sq = 90.3%   R-Sq(adj) = 89.7%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 505.47 | 505.47 | 166.86 | 0.000 |
| Error | 18 | 54.53 | 3.03 | | |
| Total | 19 | 560.00 | | | |

Regress BP on x2 = BSA

The regression equation is BP = 45.2 + 34.4 BSA

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 45.183 | 9.392 | 4.81 | 0.000 |
| BSA | 34.443 | 4.690 | 7.34 | 0.000 |

S = 2.790   R-Sq = 75.0%   R-Sq(adj) = 73.6%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 419.86 | 419.86 | 53.93 | 0.000 |
| Error | 18 | 140.14 | 7.79 | | |
| Total | 19 | 560.00 | | | |

Regress BP on x1 = Weight and x2 = BSA

The regression equation is BP = 5.65 + 1.04 Weight + 5.83 BSA

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 5.653 | 9.392 | 0.60 | 0.555 |
| Weight | 1.0387 | 0.1927 | 5.39 | 0.000 |
| BSA | 5.831 | 6.063 | 0.96 | 0.350 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 508.29 | 254.14 | 83.54 | 0.000 |
| Error | 17 | 51.71 | 3.04 | | |
| Total | 19 | 560.00 | | | |

| Source | DF | Seq SS |
|---|---|---|
| Weight | 1 | 505.47 |
| BSA | 1 | 2.81 |

Regress BP on x2 = BSA and x1 = Weight

The regression equation is BP = 5.65 + 5.83 BSA + 1.04 Weight

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 5.653 | 9.392 | 0.60 | 0.555 |
| BSA | 5.831 | 6.063 | 0.96 | 0.350 |
| Weight | 1.0387 | 0.1927 | 5.39 | 0.000 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 508.29 | 254.14 | 83.54 | 0.000 |
| Error | 17 | 51.71 | 3.04 | | |
| Total | 19 | 560.00 | | | |

| Source | DF | Seq SS |
|---|---|---|
| BSA | 1 | 419.86 |
| Weight | 1 | 88.43 |

Effect #1 of multicollinearity

When predictor variables are correlated, the regression coefficient of any one variable depends on which other predictor variables are included in the model.

| Variables in model | b1 | b2 |
|---|---|---|
| x1 | 1.20 | ---- |
| x2 | ---- | 34.4 |
| x1, x2 | 1.04 | 5.83 |

Even correlated predictors not in the model can have an impact!

• Regression of territory sales on territory population, per capita income, etc.
• Against expectation, coefficient of territory population was determined to be negative.
• Competitor’s market penetration, which was strongly positively correlated with territory population, was not included in model.
• But, competitor kept sales down in territories with large populations.

Effect #2 of multicollinearity

When predictor variables are correlated, the precision of the estimated regression coefficients decreases as more predictor variables are added to the model.

| Variables in model | se(b1) | se(b2) |
|---|---|---|
| x1 | 0.093 | ---- |
| x2 | ---- | 4.69 |
| x1, x2 | 0.193 | 6.06 |

Why not effects #1 and #2?

[Figure: 3D scatterplot of y vs x1 vs x2]

Why not effects #1 and #2?

[Figure: 3D scatterplot of BP vs BSA vs Stress]

Why effects #1 and #2?

[Figure: 3D scatterplot of BP vs BSA vs Weight]

Effect #3 of multicollinearity

When predictor variables are correlated, the marginal contribution of any one predictor variable in reducing the error sum of squares varies, depending on which other variables are already in model.

SSR(X1) = 505.47
SSR(X2) = 419.86
SSR(X1|X2) = 88.43
SSR(X2|X1) = 2.81

What is the effect on estimating mean or predicting new response?
[Figure: scatterplot of Weight versus BSA, with the point (BSA, Weight) = (2, 92) marked]

Effect #4 of multicollinearity on estimating mean or predicting Y

| Model | New observation | Fit | SE Fit | 95.0% CI | 95.0% PI |
|---|---|---|---|---|---|
| Weight only | Weight = 92 | 112.7 | 0.402 | (111.85, 113.54) | (108.94, 116.44) |
| BSA only | BSA = 2 | 114.1 | 0.624 | (112.76, 115.38) | (108.06, 120.08) |
| BSA and Weight | BSA = 2, Weight = 92 | 112.8 | 0.448 | (111.93, 113.83) | (109.08, 116.68) |

High multicollinearity among predictor variables does not prevent good, precise predictions of the response (within scope of model).

What is effect on tests of individual slopes?

The regression equation is BP = 45.2 + 34.4 BSA

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 45.183 | 9.392 | 4.81 | 0.000 |
| BSA | 34.443 | 4.690 | 7.34 | 0.000 |

S = 2.790   R-Sq = 75.0%   R-Sq(adj) = 73.6%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 419.86 | 419.86 | 53.93 | 0.000 |
| Error | 18 | 140.14 | 7.79 | | |
| Total | 19 | 560.00 | | | |

What is effect on tests of individual slopes?

The regression equation is BP = 2.21 + 1.20 Weight

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 2.205 | 8.663 | 0.25 | 0.802 |
| Weight | 1.20093 | 0.09297 | 12.92 | 0.000 |

S = 1.740   R-Sq = 90.3%   R-Sq(adj) = 89.7%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 505.47 | 505.47 | 166.86 | 0.000 |
| Error | 18 | 54.53 | 3.03 | | |
| Total | 19 | 560.00 | | | |

What is effect on tests of individual slopes?

The regression equation is BP = 5.65 + 1.04 Weight + 5.83 BSA

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 5.653 | 9.392 | 0.60 | 0.555 |
| Weight | 1.0387 | 0.1927 | 5.39 | 0.000 |
| BSA | 5.831 | 6.063 | 0.96 | 0.350 |

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 508.29 | 254.14 | 83.54 | 0.000 |
| Error | 17 | 51.71 | 3.04 | | |
| Total | 19 | 560.00 | | | |

| Source | DF | Seq SS |
|---|---|---|
| Weight | 1 | 505.47 |
| BSA | 1 | 2.81 |

Effect #5 of multicollinearity on slope tests

When predictor variables are correlated, hypothesis tests for βk = 0 may yield different conclusions depending on which predictor variables are in the model.
| Variables in model | b2 | se(b2) | t | P-value |
|---|---|---|---|---|
| x2 | 34.4 | 4.7 | 7.34 | 0.000 |
| x1, x2 | 5.83 | 6.1 | 0.96 | 0.350 |

The major impacts on model use

• In the presence of multicollinearity, it is okay to use an estimated regression model to predict y or estimate μY within the scope of the model.
• In the presence of multicollinearity, we can no longer interpret a slope coefficient as …
  – the change in the mean response for each additional unit increase in xk, when all the other predictors are held constant.
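
The contrast between uncorrelated and correlated predictors can be checked numerically. The sketch below is not part of the original slides: it uses NumPy and refits the small eight-observation example by least squares. The y-values are the ones consistent with the reported regression output (second value 43, which reproduces the coefficients -0.625 and -1.375 and the total sum of squares 80.88). The helper names `fit` and `compare` and the correlated predictor `x2_hyp` are hypothetical, introduced only for illustration.

```python
import numpy as np

def fit(y, *predictors):
    """Least-squares fit with an intercept; returns (slopes, regression SS)."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    fitted = X @ beta
    ssr = float(np.sum((fitted - y.mean()) ** 2))
    return beta[1:], ssr

def compare(y, x1, x2):
    """Slope of x1 and its sum of squares, with and without x2 in the model."""
    b_alone, ssr_x1 = fit(y, x1)
    _, ssr_x2 = fit(y, x2)
    b_joint, ssr_both = fit(y, x1, x2)
    return {
        "r": float(np.corrcoef(x1, x2)[0, 1]),
        "b1_alone": float(b_alone[0]),
        "b1_with_x2": float(b_joint[0]),
        "SSR(X1)": ssr_x1,
        # Sequential SS: extra regression SS from adding x1 after x2.
        "SSR(X1|X2)": ssr_both - ssr_x2,
    }

# Data from the perfectly uncorrelated example (r = 0).
x1 = np.array([2, 2, 2, 2, 4, 4, 4, 4], dtype=float)
x2 = np.array([5, 5, 7, 7, 5, 5, 7, 7], dtype=float)
y = np.array([52, 43, 49, 46, 50, 48, 44, 43], dtype=float)

# Hypothetical second predictor (an assumption, not from the slides):
# same values as x2, rearranged so that it correlates with x1 (r = 0.5).
x2_hyp = np.array([5, 5, 5, 7, 5, 7, 7, 7], dtype=float)

uncorr = compare(y, x1, x2)
corr = compare(y, x1, x2_hyp)

for label, result in [("uncorrelated", uncorr), ("correlated", corr)]:
    print(label)
    for name, value in result.items():
        print(f"  {name:12s} {value:8.3f}")
```

With the uncorrelated predictors, the slope of x1 is -0.625 in both models and SSR(X1) equals SSR(X1|X2) (3.125, reported as 3.13). With the hypothetical correlated predictor, the slope of x1 moves from -0.625 to 0.25 and SSR(X1|X2) drops to 0.375, which mirrors effects #1 and #3.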