Round and Approx: A technique for packing problems

Nikhil Bansal (IBM Watson)

Maxim Sviridenko (IBM Watson)

Alberto Caprara (U. Bologna, Italy)

Problems

Bin Packing: Given n items with sizes s1,…,sn, s.t. 0 < si ≤ 1, pack all items into the least number of unit-size bins.

d-dim Bin Packing (with & without rotations)

[Figure: 2-d bin packing example with rectangles labeled 1-6]

Problems

d-dim Vector Packing: Each item is a d-dim vector.

Packing is valid if each coordinate-wise sum is ≤ 1

Bin: machine with d resources

Item: job with resource requirements.

[Figure: one valid and one invalid packing]

Set Cover: Items i1, … , in

Sets C1,…,Cm.

Choose fewest sets s.t. each item covered.

All three bin packing problems can be viewed as set cover.

Sets implicit: Any subset of items that fit feasibly in a bin.
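
As a small illustration of the set-cover view, the sketch below enumerates the implicit sets for a toy 1-d instance and finds a smallest cover by brute force. The names `feasible_sets` and `min_cover`, the integer sizes (hundredths of a bin) and the capacity of 100 are assumptions made for this example, and the exhaustive search is only practical for a handful of items.

```python
from itertools import combinations

CAPACITY = 100  # assumed: sizes are integers in hundredths of a unit bin


def feasible_sets(sizes):
    """Return every non-empty subset of item indices that fits in one bin."""
    n = len(sizes)
    sets = []
    for r in range(1, n + 1):
        for combo in combinations(range(n), r):
            if sum(sizes[i] for i in combo) <= CAPACITY:
                sets.append(frozenset(combo))
    return sets


def min_cover(sizes):
    """Brute force: the fewest feasible sets whose union is all items."""
    sets = feasible_sets(sizes)
    everything = frozenset(range(len(sizes)))
    for k in range(1, len(sizes) + 1):
        for choice in combinations(sets, k):
            if frozenset().union(*choice) == everything:
                return list(choice)
    return []


sizes = [50, 50, 40, 30]
print(len(feasible_sets(sizes)))  # number of implicit sets
print(len(min_cover(sizes)))      # number of bins in a smallest cover
```

The number of implicit sets grows exponentially with the number of items, which is why the sets are never written down explicitly.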

Short history of bin-packing

Bin Packing: NP-hard to decide whether 2 or 3 bins are needed (Partition problem)

Does not rule out Opt + 1

Asymptotic approximation: ρ · OPT + O(1)

Several constant factors in the 60s-70s

APTAS: For every ε > 0, (1+ε) Opt + O(1) [de la Vega, Lueker 81]

Opt + O(log² OPT) [Karmarkar, Karp 82]

Outstanding open question: Can we get Opt + 1?

No worse integrality gap is known for a natural LP

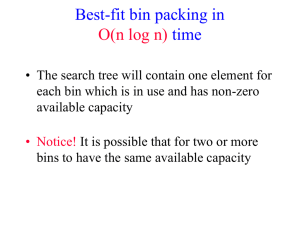

Short history of bin-packing

2-d Bin Packing: APTAS ⇒ P = NP [Bansal, Sviridenko 04]

Best Result: Without rotations: 1.691… [Caprara 02]

With rotations: 2 [Jansen, van Stee 05]

d-dim Vector Packing: No APTAS for d=2 [Woeginger 97]

Best Result: O(log d) for constant d [Chekuri, Khanna 99]

If d is part of the input, d^(1/2 - ε) approximation ⇒ P = NP

Best for d=2 is 2 approx.

Our Results

1) 2-d Bin Packing: ln 1.691 + 1 ≈ 1.525

Both with and without rotations (previously 1.691 & 2)

2) d-dim Vector Packing: 1 + ln d (for constant d)

For d=2: get 1 + ln 2 ≈ 1.693 (previously 2)

General Theorem

Given a packing problem, items i1,…,in

1) If can solve set covering LP

min Σ_C x_C

s.t. Σ_{C: i ∈ C} x_C ≥ 1  ∀ items i

2) ρ approximation: Subset Oblivious

Then (ln ρ + 1) approximation

d subset oblivious approximation for vector packing

1.691 algorithm of Caprara for 2-d bin packing is subset oblivious

Give 1.691 subset oblivious approx. for rotation case (new)

Subset Oblivious Algorithms

Given an instance I, with n items

χ(I) = all 1’s vector

χ(S) = incidence vector for subset of items S.

There exist k weight (n-dim) vectors w1, w2,…,wk such that,

for every subset of items S ⊆ I, and ε > 0:

1) OPT(I) ≥ max_i ( w_i · χ(I) )

2) Alg(S) ≤ ρ max_i ( w_i · χ(S) ) + ε OPT(I) + O(1)

An (easy) example

Any-Fit Bin Packing algorithm:

Consider items one by one. If the current item does not fit in any existing bin, put it in a brand new bin.

No two bins filled ≤ 1/2 (implies ALG ≤ 2 OPT + 1)

Also a subset oblivious 2 approx.

k = 1: w(i) = si (size of item i)

1) OPT(I) ≥ Σ_{i ∈ I} si = w · χ(I)  [Volume Bound]

2) Alg(S) ≤ 2 w · χ(S) + 1  [# bins ≤ 2 (total volume of S) + 1]
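
The Any-Fit rule can be sketched with First Fit, one member of the Any-Fit family. The function name `first_fit`, the sample sizes and the small floating-point tolerance are assumptions for illustration; the final check is the volume-based bound from this slide.

```python
from typing import List


def first_fit(sizes: List[float]) -> List[List[float]]:
    """Pack items in the given order; open a new bin only when no open bin fits."""
    bins: List[List[float]] = []  # contents of each bin
    loads: List[float] = []       # current fill level of each bin
    for s in sizes:
        for b, load in enumerate(loads):
            if load + s <= 1.0 + 1e-12:  # tolerance for float rounding
                bins[b].append(s)
                loads[b] += s
                break
        else:
            bins.append([s])
            loads.append(s)
    return bins


items = [0.6, 0.3, 0.5, 0.2, 0.4, 0.7, 0.1]
packing = first_fit(items)
volume = sum(items)
print(len(packing), "bins for total volume", volume)
# Volume-based bound: # bins <= 2 * (total volume) + 1
assert len(packing) <= 2 * volume + 1
```

Because the bound depends only on the total size of the items actually packed, the same check goes through for any subset S of the items, which is what makes the weight vector w(i) = si work.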

Non-Trivial Example

Asymptotic approx. scheme of de la Vega, Lueker

For any ε > 0,

Alg ≤ (1+ε) OPT + O(1/ε²)

We will show it is subset oblivious

1-d: Algorithm

[Figure: the items of I placed by size on the interval [0, 1], with the big items marked]

1-d: Algorithm

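Partition bigs into 1/ε² = O(1) groups, with an equal number of objects in each

[Figure: instance I and rounded instance I’ on the interval [0, 1]; in I’ every item is rounded up to the largest size in its group]

I’ ≥ I

I’ ≈ I

I’ - { largest group } ≤ I

I’ has only O(1/ε²) distinct sizes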
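
The grouping step can be sketched as follows. This is a minimal illustration, assuming items are sorted by decreasing size and each one is rounded up to the largest size in its group; the name `linear_grouping`, the parameter `num_groups` (standing in for 1/ε²) and the sample sizes are hypothetical.

```python
import math
from typing import List


def linear_grouping(bigs: List[float], num_groups: int) -> List[float]:
    """Round each size up to the largest size in its group of equal cardinality."""
    if not bigs:
        return []
    ordered = sorted(bigs, reverse=True)
    group_size = math.ceil(len(ordered) / num_groups)
    rounded: List[float] = []
    for start in range(0, len(ordered), group_size):
        group = ordered[start:start + group_size]
        rounded.extend([group[0]] * len(group))  # group[0] is the group's maximum
    return rounded


bigs = [0.9, 0.8, 0.75, 0.6, 0.55, 0.5, 0.45, 0.4, 0.35]
rounded = linear_grouping(bigs, 3)
ordered = sorted(bigs, reverse=True)
print(rounded)

# I' >= I: every rounded item is at least as large as the item it replaces.
assert all(r >= s for r, s in zip(rounded, ordered))
# I' minus its largest group <= I: shift the comparison by one group.
assert all(r <= s for r, s in zip(rounded[3:], ordered))
# Only num_groups distinct sizes remain.
assert len(set(rounded)) <= 3
```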

LP for the big items

1/ε² item types. Let n_i denote # of items of type i in the instance.

LP:

min Σ_C x_C

s.t. Σ_C a_{i,C} x_C ≥ n_i  ∀ size types i

C indexes valid sets (at most (1/ε²)^(1/ε))

a_{i,C} = number of type i items in set C

At most 1/ε² variables non-zero.

Rounding: x → ⌈x⌉

Solution(big) ≤ Opt(big) + 1/ε²
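
To make the index set of the LP concrete, the sketch below enumerates the valid sets as count vectors (one entry per size type) and shows the round-up step on a made-up fractional solution. The names `configurations` and `round_up`, the integer type sizes in hundredths of a bin, and the fractional values are all assumptions for illustration; no LP is actually solved here.

```python
import math
from itertools import product


def configurations(type_sizes, capacity):
    """All non-zero count vectors (a_1, ..., a_t) whose total size fits in a bin."""
    max_counts = [capacity // s for s in type_sizes]
    configs = []
    for counts in product(*(range(m + 1) for m in max_counts)):
        total = sum(a * s for a, s in zip(counts, type_sizes))
        if any(counts) and total <= capacity:
            configs.append(counts)
    return configs


def round_up(x):
    """Round a fractional solution up: x_C -> ceil(x_C)."""
    return {config: math.ceil(value) for config, value in x.items() if value > 0}


type_sizes = [50, 40, 30]  # assumed: three size types, in hundredths of a bin
configs = configurations(type_sizes, 100)
print(len(configs), "valid sets")

# Hypothetical fractional values, NOT the output of an LP solver.
x = {(2, 0, 0): 1.5, (0, 1, 2): 2.25}
rounded = round_up(x)
# Rounding up costs at most one extra bin per non-zero variable.
assert sum(rounded.values()) <= sum(x.values()) + len(x)
```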

Filling in smalls

Take solution on bigs. Fill in smalls (i.e. size < ε) greedily.

1) If no more bins are needed, already optimum.

2) If needed, every bin (except maybe one) is filled to 1-ε:

Alg(I) ≤ Volume(I)/(1-ε) + 1 ≤ Opt/(1-ε) + 1

We will now show this is a subset oblivious algorithm!
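
The greedy fill-in of small items can be sketched as below. The function name `fill_smalls`, the starting bin loads and the value of ε are hypothetical; the starting loads stand in for a packing of the big items.

```python
from typing import List


def fill_smalls(bin_loads: List[float], smalls: List[float]) -> List[float]:
    """Greedily add small items to existing bins; open a new bin only if none fits."""
    loads = list(bin_loads)
    for s in smalls:
        for b in range(len(loads)):
            if loads[b] + s <= 1.0 + 1e-12:  # tolerance for float rounding
                loads[b] += s
                break
        else:
            loads.append(s)
    return loads


eps = 0.2
big_loads = [0.9, 0.7]   # hypothetical loads after packing the big items
smalls = [0.15] * 6      # every small item has size < eps
loads = fill_smalls(big_loads, smalls)
print(loads)

# A new bin was opened, so every bin except the last is filled above 1 - eps.
assert all(load > 1 - eps for load in loads[:-1])
# Hence # bins <= Volume / (1 - eps) + 1.
assert len(loads) <= sum(loads) / (1 - eps) + 1
```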

Subset Obliviousness

LP: min Σ_C x_C

s.t. Σ_C a_{i,C} x_C ≥ n_i  ∀ item types i

Dual: max Σ_i n_i w_i

s.t. Σ_i a_{i,C} w_i ≤ 1  for each set C

If we consider the dual for a subset of items S:

Dual: max Σ_i |type i items in S| w_i

s.t. Σ_i a_{i,C} w_i ≤ 1  for each set C

Dual polytope is independent of S: S only affects the objective function.

Subset Obliviousness

LP: min Σ_C x_C

s.t. Σ_C a_{i,C} x_C ≥ n_i  ∀ item types i

Dual: max Σ_i n_i w_i

s.t. Σ_i a_{i,C} w_i ≤ 1  for each set C.

Define vector W_v for each vertex v of the dual polytope (O(1) vertices)

LP*(S) = max_v W_v · χ(S)  (LP Duality)

Alg(S) ≤ LP*(S) + 1/ε² = max_v W_v · χ(S) + 1/ε²

Opt(I) ≥ LP*(I) = max_v W_v · χ(I)

Handling smalls: Another vector w, where w(i) = si

General Algorithm

Theorem: Can get ln ρ + 1 approximation, if

1) Can solve set covering LP

2) ρ approximate subset oblivious alg.

Algorithm:

Solve set covering LP, get soln x*.

Randomized Rounding with parameter α > 0, i.e. choose set C independently with prob. α x*_C

Residual instance: Apply subset oblivious approx.
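
The two phases of the algorithm can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the fractional solution `x_star` is a hand-made feasible cover rather than LP output, the rounding parameter is called `alpha`, probabilities are capped at 1, First Fit stands in for the subset oblivious algorithm, and sizes are integers in hundredths of a bin.

```python
import math
import random

CAPACITY = 100  # assumed: sizes in hundredths of a unit bin


def first_fit(sizes, items):
    """Stand-in for the subset oblivious algorithm on the residual items."""
    bins = []
    for i in items:
        for b in bins:
            if sum(sizes[j] for j in b) + sizes[i] <= CAPACITY:
                b.add(i)
                break
        else:
            bins.append({i})
    return bins


def round_and_approx(sizes, x_star, alpha, rng):
    """Pick each set C with prob. min(1, alpha * x_C), then pack what is left."""
    bins, covered = [], set()
    for C, x in x_star.items():
        if rng.random() < min(1.0, alpha * x):
            bins.append(set(C) - covered)  # drop items already packed
            covered |= C
    residual = [i for i in range(len(sizes)) if i not in covered]
    return [b for b in bins if b] + first_fit(sizes, residual)


sizes = [50, 50, 40, 30]
# Hypothetical fractional cover: every item lies in sets of total weight 1.
x_star = {
    frozenset({0, 1}): 0.5,
    frozenset({0, 2}): 0.5,
    frozenset({1, 3}): 0.5,
    frozenset({2, 3}): 0.5,
}
packing = round_and_approx(sizes, x_star, math.log(2), random.Random(0))
print(packing)
```

Removing an item from a feasible set keeps the set feasible, so dropping items that were already covered turns the cover into a packing without opening extra bins.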

Proof of General Theorem

After randomized rounding,

Prob. element i left uncovered ≤ e^(-α)

Pf: Prob = Π_{C: i ∈ C} (1 - α x_C) ≤ e^(-α)  (as Σ_{C: i ∈ C} x_C ≥ 1)

E( w_i · χ(S) ) ≤ e^(-α) w_i · χ(I)

w_i · χ(S) sharply concentrated (variance small: proof omitted)

max_i ( w_i · χ(S) ) ≈ e^(-α) max_i ( w_i · χ(I) ) ≤ e^(-α) OPT(I)

But subset oblivious algorithm implies

Alg(S) ≲ ρ max_i ( w_i · χ(S) ) ≲ ρ e^(-α) OPT(I)  (lower-order terms dropped)
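
The first step of the proof uses 1 - y ≤ e^(-y) on each factor of the product. A quick numerical check, with a rounding parameter named `alpha` and made-up fractional values for the sets containing one item (both are assumptions for illustration):

```python
import math


def prob_uncovered(x_values, alpha):
    """Exact probability that none of the sets containing an item is chosen."""
    p = 1.0
    for x in x_values:
        p *= 1.0 - min(1.0, alpha * x)
    return p


x_values = [0.5, 0.3, 0.2]  # hypothetical LP values of the sets containing item i
alpha = 0.7
print(prob_uncovered(x_values, alpha), "<=", math.exp(-alpha))
assert prob_uncovered(x_values, alpha) <= math.exp(-alpha)
```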

Proof of General Algorithm

Expected cost = Randomized Rounding cost + Residual instance cost

≈ α (LP cost) + ρ e^(-α) Opt

Gives α + ρ e^(-α) approximation

Optimizing α (α = ln ρ) gives 1 + ln ρ approx.
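
The final optimization can be checked numerically. Writing the rounding parameter as `alpha` and the subset oblivious ratio as `rho` (names chosen for this sketch), the function f(alpha) = alpha + rho * e^(-alpha) has derivative 1 - rho * e^(-alpha), which vanishes at alpha = ln(rho), giving the value ln(rho) + 1.

```python
import math


def ratio(alpha: float, rho: float) -> float:
    """Approximation ratio alpha + rho * exp(-alpha) from the cost estimate."""
    return alpha + rho * math.exp(-alpha)


def best_ratio(rho: float) -> float:
    """Value at the minimizer alpha = ln(rho)."""
    return ratio(math.log(rho), rho)


for rho in (1.691, 2.0):
    print(rho, round(best_ratio(rho), 3))

# No alpha on a fine grid does better than alpha = ln(rho).
grid = [k / 1000 for k in range(1, 5000)]
assert all(ratio(a, 2.0) >= best_ratio(2.0) - 1e-12 for a in grid)
```

With rho = 1.691 this reproduces the 2-d bin packing guarantee, and with rho = 2 the guarantee for 2-dimensional vector packing.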

Wrapping up

d-dim vector packing: Partition instance I into d parts I1,…,Id

Ij consists of items for which the jth dim is largest

Solving Ij is just a bin packing problem

1+ε for bin packing gives d+ε subset oblivious algorithm
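
The reduction to d bin packing instances can be sketched as below. First Fit is used here only as a simple stand-in for the (1+ε) bin packing scheme; the function names, the integer vectors in hundredths of a bin and the tie-breaking rule are assumptions for illustration.

```python
def partition_by_largest_dim(vectors):
    """Part j holds the items whose j-th coordinate is largest."""
    d = len(vectors[0])
    parts = [[] for _ in range(d)]
    for idx, v in enumerate(vectors):
        j = max(range(d), key=lambda k: v[k])  # ties go to the lowest index
        parts[j].append(idx)
    return parts


def first_fit_1d(sizes, items, capacity):
    """Stand-in 1-d bin packing routine on the listed item indices."""
    bins = []
    for i in items:
        for b in bins:
            if sum(sizes[k] for k in b) + sizes[i] <= capacity:
                b.append(i)
                break
        else:
            bins.append([i])
    return bins


def pack_vectors(vectors, capacity):
    """Pack each part I_j as a 1-d instance on coordinate j."""
    packing = []
    for j, part in enumerate(partition_by_largest_dim(vectors)):
        coord_j = [v[j] for v in vectors]
        packing.extend(first_fit_1d(coord_j, part, capacity))
    return packing


vectors = [(60, 20), (30, 50), (40, 10), (20, 70), (50, 50)]
packing = pack_vectors(vectors, 100)
print(packing)
```

Within part I_j every item's j-th coordinate dominates its other coordinates, so a bin that is valid in coordinate j is valid in all d coordinates.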

2-d bin Packing: Harder

Framework for incorporating structural info. into set cover.

Other Problems?

Questions?