Markov chain


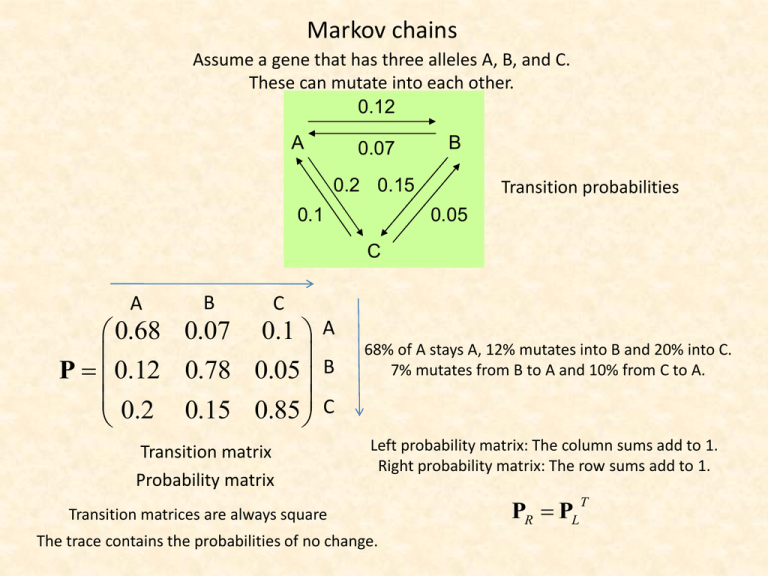

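Markov chains

Assume a gene that has three alleles A, B, and C. These can mutate into each other.

[Figure: state diagram of the alleles A, B, and C; the arrows carry the transition probabilities listed in the matrix below.]

Transition matrix (probability matrix) $P$. Columns give the current allele, rows the allele in the next generation:

| to / from | A | B | C |
|---|---|---|---|
| A | 0.68 | 0.07 | 0.1 |
| B | 0.12 | 0.78 | 0.05 |
| C | 0.2 | 0.15 | 0.85 |

68% of A stays A, 12% mutates into B and 20% into C. 7% mutates from B to A and 10% from C to A.

Left probability matrix $P_L$: the column sums add to 1. Right probability matrix $P_R$: the row sums add to 1. The two forms are transposes of each other, $P_R = P_L^T$. Transition matrices are always square. The diagonal contains the probabilities of no change.

Calculating probabilities

$P$ contains the probabilities to reach another state in the next step. $P^2$ contains the probabilities to reach another state in exactly two steps:

$$P^2 = \begin{pmatrix} 0.68 & 0.07 & 0.1 \\ 0.12 & 0.78 & 0.05 \\ 0.2 & 0.15 & 0.85 \end{pmatrix}^2 = \begin{pmatrix} 0.4908 & 0.1172 & 0.1565 \\ 0.1852 & 0.6243 & 0.0935 \\ 0.324 & 0.2585 & 0.75 \end{pmatrix}$$

The probability to reach any state in exactly $n$ steps is given by $P^n$. With the eigenvector matrix $U$ and the diagonal eigenvalue matrix $\Lambda$ of $P$:

$$P^k = U \Lambda^k U^{-1}$$

Assume for instance you have a virus with $N$ strains. Assume further that at each generation a strain mutates to another strain with probabilities $a_{i \to j}$. The probability to stay is therefore $1 - \sum_{j \ne i} a_{i \to j}$:

$$P = \begin{pmatrix} 1 - \sum_{j \ne 1} a_{1 \to j} & \cdots & a_{N \to 1} \\ \vdots & \ddots & \vdots \\ a_{1 \to N} & \cdots & 1 - \sum_{j \ne N} a_{N \to j} \end{pmatrix}$$

What is the probability that the virus is the same after $k$ generations as at the beginning? The answer is on the diagonal of $P^k$.

Example with three strains and $k = 5$ (column sums are 1):

| P | A | B | C |
|---|---|---|---|
| A | 0.5 | 0.05 | 0.3 |
| B | 0.3 | 0.8 | 0.1 |
| C | 0.2 | 0.15 | 0.6 |

| $P^5$ | A | B | C |
|---|---|---|---|
| A | 0.230675 | 0.20048 | 0.258105 |
| B | 0.47613 | 0.51785 | 0.43003 |
| C | 0.293195 | 0.28167 | 0.311865 |

After five generations the virus is again strain A with probability 0.2307, strain B with 0.5179, and strain C with 0.3119.

The same result via $P^k = U \Lambda^k U^{-1}$. Eigenvalues: 0.338197, 0.561803, 1.

$$U = \begin{pmatrix} 0.814984 & 0.550947 & 0.368878 \\ -0.450512 & -0.797338 & 0.794506 \\ -0.364472 & 0.246391 & 0.482379 \end{pmatrix}, \quad \Lambda^5 = \begin{pmatrix} 0.004424 & 0 & 0 \\ 0 & 0.055966 & 0 \\ 0 & 0 & 1 \end{pmatrix}$$

$$U^{-1} = \begin{pmatrix} 0.878092 & 0.264583 & -1.107265 \\ 0.109323 & -0.798204 & 1.231089 \\ 0.607621 & 0.607621 & 0.607621 \end{pmatrix}, \quad U\Lambda^5 = \begin{pmatrix} 0.003606 & 0.030834 & 0.368878 \\ -0.001993 & -0.044624 & 0.794506 \\ -0.001613 & 0.013789 & 0.482379 \end{pmatrix}$$

The product $U \Lambda^5 U^{-1}$ reproduces the matrix $P^5$ given above.

Given initial allele frequencies, what are the frequencies in the next generation?
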
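
As a numerical check of the n-step probabilities in this section, the following minimal sketch recomputes $P^2$ for the allele matrix and $P^5$ for the three-strain virus matrix, once by repeated multiplication and once through the eigen-decomposition. It assumes NumPy; the variable names (`P`, `V`, `Vk`, `same_strain`) are illustrative and not part of the slides.

```python
import numpy as np

# Allele example: columns are the current allele, rows the next one.
P = np.array([[0.68, 0.07, 0.10],
              [0.12, 0.78, 0.05],
              [0.20, 0.15, 0.85]])
P2 = np.linalg.matrix_power(P, 2)        # exactly two steps

# Virus example with three strains (column sums are 1).
V = np.array([[0.5, 0.05, 0.30],
              [0.3, 0.80, 0.10],
              [0.2, 0.15, 0.60]])
k = 5
eigvals, U = np.linalg.eig(V)            # V = U diag(eigvals) U^-1
Vk = (U @ np.diag(eigvals ** k) @ np.linalg.inv(U)).real
same_strain = np.diag(Vk)                # back in the starting strain after k steps

print(np.round(P2, 4))
print(np.round(Vk, 6))
print(np.round(same_strain, 4))
```

For small `k` the direct power is simpler; the decomposition pays off when many different powers of the same matrix are needed.
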
$$P = \begin{pmatrix} 0.68 & 0.07 & 0.1 \\ 0.12 & 0.78 & 0.05 \\ 0.2 & 0.15 & 0.85 \end{pmatrix}, \quad P_0 = \begin{pmatrix} 0.2 \\ 0.5 \\ 0.3 \end{pmatrix} \text{ (initial allele frequencies)}$$

The frequency of A in the first generation collects the contributions of all three alleles:

$$F_A(1) = F_A(0)\,p(A \to A) + F_B(0)\,p(B \to A) + F_C(0)\,p(C \to A)$$

With the first row of $P$, $P_A = (0.68,\ 0.07,\ 0.1)$, this is $P_A \cdot P_0 = 0.68 \cdot 0.2 + 0.07 \cdot 0.5 + 0.1 \cdot 0.3 = 0.201$.

Allele frequencies in the first generation:

$$P_1 = P P_0 = \begin{pmatrix} 0.68 & 0.07 & 0.1 \\ 0.12 & 0.78 & 0.05 \\ 0.2 & 0.15 & 0.85 \end{pmatrix} \begin{pmatrix} 0.2 \\ 0.5 \\ 0.3 \end{pmatrix} = \begin{pmatrix} 0.201 \\ 0.429 \\ 0.37 \end{pmatrix}$$

Later generations follow by repetition:

$$P_2 = P P_1 = P(P P_0) = P^2 P_0, \quad P_n = P P_{n-1}, \quad P_n = P^n P_0$$

$$X_n = P^n X_0 = U \Lambda^n U^{-1} X_0$$

The model assumes constant transition probabilities. Transition probabilities might change, in which case $P_n = P(n)\,P_{n-1}$.

A Markov chain is a process where step $n$ depends only on the transition probabilities and the realized values at step $n-1$. A Markov chain doesn't have a memory:

$$p(X_n = i \mid X_{n-1}, X_{n-2}, X_{n-3}, \ldots, X_1) = p(X_n = i \mid X_{n-1})$$

[Portrait: Andrey Markov (1856-1922)]

Does our mutation process above reach stable allele frequencies or do they change forever?

Do we get stable frequencies? Stable frequencies require

$$X_{n+1} = X_n, \quad P X_n = X_n = 1 \cdot X_n, \quad (P - 1 \cdot I)\,X_n = 0$$

$X_n$ is a steady-state, stationary probability, or equilibrium vector. The associated eigenvalue is 1. The equilibrium vector is independent of the initial conditions.

The largest eigenvalue (principal eigenvalue) of every probability matrix equals 1 and there is an associated stationary probability vector that defines the equilibrium conditions (Perron-Frobenius theorem).

Eigenvalues and eigenvectors of probability matrices

$$P = \begin{pmatrix} 0.006159 & 0.260998 & 0.383385 & 0.312983 & 0.491399 \\ 0.23416 & 0.036019 & 0.314422 & 0.292022 & 0.328144 \\ 0.101216 & 0.277682 & 0.087934 & 0.312887 & 0.057607 \\ 0.245795 & 0.03226 & 0.115475 & 0.077524 & 0.008197 \\ 0.41267 & 0.39304 & 0.098784 & 0.004584 & 0.114652 \end{pmatrix}$$

All column sums are 1. Eigenvalues: -0.49348933, -0.22284172, -0.10044735, 0.139067327, 1.

The eigenvalues of probability matrices and their transposes are identical.

A second set listed on the slide, -0.48893388, -0.16647268, 0.047131406, 0.842629825, 1, sums to 1.2344, which is the trace of the same matrix with its third column replaced by (0, 0, 1, 0, 0).

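
The update rule $X_n = P^n X_0$ and the steady-state condition $(P - I)X = 0$ can be sketched numerically as follows. This is a minimal illustration assuming NumPy; the names `x0`, `x1`, `x200`, and `steady` are chosen here and do not come from the slides.

```python
import numpy as np

P = np.array([[0.68, 0.07, 0.10],
              [0.12, 0.78, 0.05],
              [0.20, 0.15, 0.85]])
x0 = np.array([0.2, 0.5, 0.3])             # initial allele frequencies

x1 = P @ x0                                 # first generation
x200 = np.linalg.matrix_power(P, 200) @ x0  # X_n = P^n X_0 for large n

# Steady state: eigenvector of eigenvalue 1, rescaled to sum to 1.
eigvals, U = np.linalg.eig(P)
i = np.argmin(np.abs(eigvals - 1.0))
steady = U[:, i].real
steady = steady / steady.sum()

# P and its transpose share the same eigenvalues.
same_spectrum = np.allclose(np.sort(eigvals.real),
                            np.sort(np.linalg.eigvals(P.T).real))

print(np.round(x1, 3), np.round(steady, 6), same_spectrum)
```

Starting from a different `x0` that sums to 1 should leave `steady` unchanged, which is the independence from initial conditions stated above.
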
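Eigenvectors of the 5 x 5 probability matrix $P$ above (one column per eigenvalue; the last column belongs to the eigenvalue 1):

$$U = \begin{pmatrix} 0.676793 & 0.31531 & 0.049124 & 0.188368 & 0.5796 \\ 0.261813 & -0.31217 & -0.05106 & 0.002974 & 0.4894 \\ -0.02386 & 0.714055 & -0.81216 & -0.60912 & 0.32066 \\ -0.29236 & -0.49289 & 0.451684 & -0.29421 & 0.21635 \\ -0.62238 & -0.2243 & 0.362421 & 0.711985 & 0.52432 \end{pmatrix}$$

Column sums of probability matrices are 1. Row sums might be higher.

Now replace the third column of $P$ by a column that contains a single 1:

$$P = \begin{pmatrix} 0.006159 & 0.260998 & 0 & 0.312983 & 0.491399 \\ 0.23416 & 0.036019 & 0 & 0.292022 & 0.328144 \\ 0.101216 & 0.277682 & 1 & 0.312887 & 0.057607 \\ 0.245795 & 0.03226 & 0 & 0.077524 & 0.008197 \\ 0.41267 & 0.39304 & 0 & 0.004584 & 0.114652 \end{pmatrix}$$

The column sums are still 1. Eigenvectors (the last column belongs to the eigenvalue 1):

$$U = \begin{pmatrix} 0.674168 & 0.386913 & 0.097599 & 0.274076 & 0 \\ 0.255996 & -0.71988 & -0.19397 & 0.230028 & 0 \\ -0.00674 & 0.198906 & 0.254115 & -0.88505 & 1 \\ -0.29806 & -0.30949 & -0.74062 & 0.10075 & 0 \\ -0.62536 & 0.443543 & 0.582882 & 0.280194 & 0 \end{pmatrix}$$

One of the eigenvalues of a probability matrix is 1. To get frequencies the eigenvector has to be rescaled (normalized).

If one of the diagonal entries of $P$ is 1, the matrix is called absorbing. In this case the eigenvector of the largest eigenvalue contains only zeros and one 1. Absorbing chains become monodominant by one element.

Normalizing the stationary state vector

| P | A | B | C | D | E |
|---|---|---|---|---|---|
| A | 0.5 | 0.5 | 0 | 0 | 0 |
| B | 0.15 | 0.25 | 0.35 | 0 | 0.25 |
| C | 0.05 | 0 | 0.9 | 0.05 | 0 |
| D | 0 | 0 | 0.25 | 0.5 | 0.25 |
| E | 0 | 0.33 | 0 | 0.33 | 0.34 |

Here the rows sum to 1 (right probability matrix), so the stationary vector is the eigenvector of the transpose that belongs to the eigenvalue 1.

| Eigenvalue | -0.1173 | 0.259272 | 0.632003 | 0.716025 | 1 |
|---|---|---|---|---|---|
| A | 0.141692 | 0.307099 | 0.471674 | 0.065218 | 0.13963502 |
| B | -0.68828 | -0.49866 | 0.341664 | -0.18563 | 0.14698423 |
| C | 0.315521 | 0.017437 | 0.220252 | 0.838656 | 0.9553975 |
| D | -0.32032 | 0.653439 | -0.71444 | -0.35729 | 0.17638108 |
| E | 0.551392 | -0.47931 | -0.31915 | -0.36096 | 0.12248686 |

| | A | B | C | D | E | Sum |
|---|---|---|---|---|---|---|
| Largest eigenvector | 0.13963502 | 0.14698423 | 0.9553975 | 0.17638108 | 0.12248686 | 1.54088469 |
| Rescaled (stationary frequencies) | 0.09062 | 0.09539 | 0.620032 | 0.114467 | 0.079491 | 1 |

Frequencies have to add to unity! To obtain the final frequencies, the eigenvector entries are divided by their sum.
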
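
The rescaling step can be sketched in a few lines. The example below assumes NumPy and uses the five-state matrix from the normalization table; `frequencies` is an illustrative name. Because the rows of this matrix sum to 1, the sketch takes the eigenvector from the transpose.

```python
import numpy as np

# Rows are the current state, columns the next state (row sums are 1).
P = np.array([[0.50, 0.50, 0.00, 0.00, 0.00],
              [0.15, 0.25, 0.35, 0.00, 0.25],
              [0.05, 0.00, 0.90, 0.05, 0.00],
              [0.00, 0.00, 0.25, 0.50, 0.25],
              [0.00, 0.33, 0.00, 0.33, 0.34]])

# For a right probability matrix the stationary vector is a left
# eigenvector, i.e. an ordinary eigenvector of the transpose.
eigvals, vecs = np.linalg.eig(P.T)
v = vecs[:, np.argmin(np.abs(eigvals - 1.0))].real
frequencies = v / v.sum()        # dividing by the sum also fixes the sign

print(np.round(frequencies, 6))
```

Eigenvector routines return vectors of unit Euclidean length and arbitrary sign, which is why the raw entries do not add to 1 before rescaling.
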
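$$X_n = P^n X_0 = U \Lambda^n U^{-1} X_0$$

Long-run behaviour of the allele matrix. The slide labels this example N = 1000; the listed powers are those of the eigenvalues 0.5956 and 0.7144 raised to $n = 100$.

$$\Lambda^n = \begin{pmatrix} 3.14436 \times 10^{-23} & 0 & 0 \\ 0 & 2.46845 \times 10^{-15} & 0 \\ 0 & 0 & 1 \end{pmatrix}, \quad U = \begin{pmatrix} 0.816257937 & 0.17364202 & 0.35099 \\ -0.42522385 & -0.77775251 & 0.385401 \\ -0.39103409 & 0.604110489 & 0.853388 \end{pmatrix}$$

$$U\Lambda^n = \begin{pmatrix} 2.56661 \times 10^{-23} & 4.28627 \times 10^{-16} & 0.35099 \\ -1.3371 \times 10^{-23} & -1.9198 \times 10^{-15} & 0.385401 \\ -1.2296 \times 10^{-23} & 1.49122 \times 10^{-15} & 0.853388 \end{pmatrix}, \quad U^{-1} = \begin{pmatrix} 1.005233 & -0.07159 & -0.38111 \\ -0.2379 & -0.93492 & 0.520064 \\ 0.629018 & 0.629018 & 0.629018 \end{pmatrix}$$

$$U\Lambda^n U^{-1} = \begin{pmatrix} 0.220779 & 0.220779 & 0.220779 \\ 0.242424 & 0.242424 & 0.242424 \\ 0.536797 & 0.536797 & 0.536797 \end{pmatrix}$$

Every column equals the stationary vector, so any initial vector $X_0$ that sums to 1 ends at the frequencies (0.2208, 0.2424, 0.5368).

Do all Markov chains converge?

[Figure: three example chains. In the first, every state can be left (recurrent part). The second is a periodic chain. In the third, state D cannot be left (closed part); the chain is absorbing.]

Recurrent and aperiodic chains are called ergodic. The probability matrix theorem states that every irreducible ergodic transition matrix has a steady state vector $T$ to which the process converges.

Absorbing chains

[Figure: chain with states A, B, C, and D. It is impossible to leave state D (closed part, absorbing part).]

A chain is called absorbing if it contains states without exit. The other states are called transient. Any absorbing Markov chain finally converges to the absorbing states.

| P | A | B | C | D |
|---|---|---|---|---|
| A | 0.5 | 0.25 | 0.25 | 0 |
| B | 0 | 0.5 | 0.25 | 0.25 |
| C | 0 | 0 | 0.5 | 0.5 |
| D | 0 | 0 | 0 | 1 |

Eigenvalues: 0.5, 0.5, 0.5, and 1. The eigenvectors listed for the eigenvalue 0.5 are all (0, 0, 0.707107, -0.70711); the principal eigenvector is (0, 0, 0, 1), so in the long run all probability sits in state D.

The time to reach the absorbing state

Assume a drunkard going randomly through five streets. In the first street is his home, in the last a bar. At either home or bar he stays.
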
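
The walk just described can be written down as a transition matrix. The sketch below assumes NumPy and a probability of 0.5 for a step to either neighbouring street, as in the matrix that follows; the street labels A (home) to E (bar) and the variable names are illustrative.

```python
import numpy as np

n_streets = 5                          # A = home, B, C, D, E = bar
P = np.zeros((n_streets, n_streets))   # rows: current street, columns: next street
P[0, 0] = 1.0                          # he stays at home
P[-1, -1] = 1.0                        # he stays at the bar
for s in range(1, n_streets - 1):
    P[s, s - 1] = 0.5                  # one street towards home
    P[s, s + 1] = 0.5                  # one street towards the bar

# After many steps all probability has moved to the two absorbing states.
long_run = np.linalg.matrix_power(P, 200)
print(np.round(long_run, 3))
```

Row C of `long_run` splits evenly between home and bar, while rows B and D are biased towards the nearer end.
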
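[Figure: five streets in a row from Home to Bar; each step to a neighbouring street has probability 0.5.]

| P | A | B | C | D | E |
|---|---|---|---|---|---|
| A | 1 | 0 | 0 | 0 | 0 |
| B | 0.5 | 0 | 0.5 | 0 | 0 |
| C | 0 | 0.5 | 0 | 0.5 | 0 |
| D | 0 | 0 | 0.5 | 0 | 0.5 |
| E | 0 | 0 | 0 | 0 | 1 |

Rows give the current street (A is home, E is the bar), columns the next street. Eigenvalues: -0.70711, 0, 0.707107, 1, 1.

| Eigenvalue | -0.70711 | 0 | 0.707107 |
|---|---|---|---|
| A | 0.143403 | 0.316228 | 0.544526 |
| B | -0.48961 | -0.63246 | -0.31898 |
| C | 0.692413 | 0 | -0.4511 |
| D | -0.48961 | 0.632456 | -0.31898 |
| E | 0.143403 | -0.31623 | 0.544526 |

Principal eigenvectors (eigenvalue 1): (1, 0, 0, 0, 0) and (0, 0, 0, 0, 1).

The canonical form

We rearrange the transition matrix to have the $s$ absorbing states in the upper left corner and the $t$ transient states in the lower right corner. We have four compartments:

$$P_{canonical} = \begin{pmatrix} I_{ss} & R_{st} \\ 0_{ts} & Q_{tt} \end{pmatrix}$$

For the drunkard's walk, moving the absorbing state E next to A (first the row, then the column) gives

| | A | E | B | C | D |
|---|---|---|---|---|---|
| A | 1 | 0 | 0 | 0 | 0 |
| E | 0 | 1 | 0 | 0 | 0 |
| B | 0.5 | 0 | 0 | 0.5 | 0 |
| C | 0 | 0 | 0.5 | 0 | 0.5 |
| D | 0 | 0.5 | 0 | 0.5 | 0 |

The lower right 3 x 3 block is the transient part $Q$. The table lists the current state in rows, so it shows the transposed arrangement with $R$ in the lower left; the block formulas here are written for the left (column) form.

After $n$ steps we have

$$P^n = \begin{pmatrix} I_{ss} & R_{st} \\ 0_{ts} & Q_{tt} \end{pmatrix}^n = \begin{pmatrix} I_{ss} & ? \\ 0_{ts} & Q_{tt}^n \end{pmatrix}$$

The unknown matrix contains information about the frequencies to reach an absorbing state from state B, C, or D.

$$P^2 = \begin{pmatrix} I_{ss} & R(I + Q) \\ 0_{ts} & Q_{tt}^2 \end{pmatrix}, \quad P^3 = \begin{pmatrix} I_{ss} & R(I + Q + Q^2) \\ 0_{ts} & Q_{tt}^3 \end{pmatrix}, \quad P^n = \begin{pmatrix} I_{ss} & R \sum_{i=0}^{n-1} Q^i \\ 0_{ts} & Q_{tt}^n \end{pmatrix}$$

Multiplication of probabilities gives ever smaller values:

$$\lim_{n \to \infty} Q^n = 0$$

The remaining sum is a simple geometric series:

$$\lim_{n \to \infty} \sum_{i=0}^{n-1} Q^i = (I - Q)^{-1}$$

$$B = R\,(I_{tt} - Q)^{-1}$$

The entries $b_{ij}$ of the matrix $B$ contain the probabilities of ending in an absorbing state $i$ when started in state $j$.

$$N = (I_{tt} - Q)^{-1}$$

The entries $n_{ij}$ of the fundamental matrix $N$ of $Q$ contain the expected number of times the process is in state $i$ when started in state $j$.
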
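
The quantities $N$ and $B$ can be computed directly from the blocks of the canonical form. The sketch below assumes NumPy and keeps the row form of the table (rows are the current state), in which the absorption probabilities read $N R$; this is the transpose of $R(I - Q)^{-1}$ in the column form. The index lists `absorbing` and `transient` are illustrative helpers.

```python
import numpy as np

# Drunkard's walk in row form: rows are the current state, columns the
# next state; order A (home), B, C, D, E (bar).
P = np.array([[1.0, 0.0, 0.0, 0.0, 0.0],
              [0.5, 0.0, 0.5, 0.0, 0.0],
              [0.0, 0.5, 0.0, 0.5, 0.0],
              [0.0, 0.0, 0.5, 0.0, 0.5],
              [0.0, 0.0, 0.0, 0.0, 1.0]])
absorbing = [0, 4]
transient = [1, 2, 3]

Q = P[np.ix_(transient, transient)]    # transient -> transient
R = P[np.ix_(transient, absorbing)]    # transient -> absorbing

N = np.linalg.inv(np.eye(len(transient)) - Q)   # fundamental matrix
B = N @ R                                       # absorption probabilities (row form)
t = N @ np.ones(len(transient))                 # expected steps before absorption

print(N)
print(B)
print(t)
```

Selecting the blocks by index avoids physically reordering the matrix into canonical form.
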
The drunkard's walk

For the walk with absorbing states A (home) and E (bar) and transient states B, C, and D:

$$N = (I_{tt} - Q)^{-1}, \quad t = N_{tt}\,\mathbf{1}_{t1} = (I_{tt} - Q)^{-1}\,\mathbf{1}_{t1}$$

where $\mathbf{1}_{t1}$ is a column vector of ones. Summing the entries of $N$ over all transient states gives the expected total number of visits to transient states (afterwards the chain falls to an absorbing state). $t$ is a column vector that gives the expected number of steps (starting at state $i$) before the chain is absorbed.

| Q | B | C | D |
|---|---|---|---|
| B | 0 | 0.5 | 0 |
| C | 0.5 | 0 | 0.5 |
| D | 0 | 0.5 | 0 |

| I - Q | B | C | D |
|---|---|---|---|
| B | 1 | -0.5 | 0 |
| C | -0.5 | 1 | -0.5 |
| D | 0 | -0.5 | 1 |

| $(I - Q)^{-1}$ | B | C | D |
|---|---|---|---|
| B | 1.5 | 1 | 0.5 |
| C | 1 | 2 | 1 |
| D | 0.5 | 1 | 1.5 |

| Start | B | C | D |
|---|---|---|---|
| Expected number of steps to reach the absorbing state ($N\mathbf{1}$) | 3 | 4 | 3 |

| Start | A | E |
|---|---|---|
| B | 0.75 | 0.25 |
| C | 0.5 | 0.5 |
| D | 0.25 | 0.75 |

The last table (labelled RN on the slide) gives the probability of reaching each absorbing state from any of the transient states.

[Figure: state diagrams of the two example chains tabulated below.]

| | A | B | C | D |
|---|---|---|---|---|
| A | 0 | 0.4 | 0.6 | 0 |
| B | 0.3 | 0.7 | 0 | 0 |
| C | 0.3 | 0 | 0.7 | 0 |
| D | 0 | 0 | 0.9 | 0.1 |

Eigenvalues: -0.3, 0.1, 0.7, 1. Eigenvector 4 (eigenvalue 1): 0.384111, 0.512148, 0.768221, 0.

| | A | B | C |
|---|---|---|---|
| A | 0.2 | 0.8 | 0 |
| B | 0 | 0.3 | 0.7 |
| C | 0.6 | 0 | 0.4 |

Complex eigenvalues: $-0.05 + 0.597913i$, $-0.05 - 0.597913i$, and 1. Eigenvector 3 as listed on the slide: 0, 0, 0.

Periodic chains do not have stable points.

Expected return (recurrence) times

[Figure: state diagram of a five-state chain with states A, B, C, D, and E.]

If we start at state D, how long does it take on average to return to D?

The rescaled eigenvector $u$ of the probability matrix $P$ gives the steady state frequencies to be in state $i$. If $u$ is the rescaled eigenvector of the probability matrix $P$, the expected return time $t_{ii}$ of state $i$ back to $i$ is given by the inverse of the $i$th element $u_i$ of the eigenvector $u$.

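
The eigenvalues quoted for the two example chains can be checked with a short script. This is a sketch assuming NumPy; `P4` and `P3` are names chosen here for the four-state and three-state tables, both read with rows as the current state.

```python
import numpy as np

# Example chains from the text (rows: current state, columns: next state).
P4 = np.array([[0.0, 0.4, 0.6, 0.0],
               [0.3, 0.7, 0.0, 0.0],
               [0.3, 0.0, 0.7, 0.0],
               [0.0, 0.0, 0.9, 0.1]])
P3 = np.array([[0.2, 0.8, 0.0],
               [0.0, 0.3, 0.7],
               [0.6, 0.0, 0.4]])

ev4 = np.sort(np.linalg.eigvals(P4).real)
ev3 = np.linalg.eigvals(P3)             # contains a complex conjugate pair
moduli3 = np.sort(np.abs(ev3))

# Left eigenvector of eigenvalue 1 for the four-state chain, rescaled.
w, vecs = np.linalg.eig(P4.T)
u = vecs[:, np.argmin(np.abs(w - 1.0))].real
u = u / u.sum()

print(ev4, ev3, moduli3, np.round(u, 4))
```

A complex pair signals a rotating (cyclic) component in the dynamics. With these particular numbers its modulus is 0.6, so the oscillation of the three-state example is damped. State D of the four-state chain gets stationary weight 0 because it can be left but never re-entered.
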
| P | A | B | C | D | E | Sum |
|---|---|---|---|---|---|---|
| A | 0.5 | 0.5 | 0 | 0 | 0 | 1 |
| B | 0.25 | 0.15 | 0.35 | 0 | 0.25 | 1 |
| C | 0.05 | 0 | 0.9 | 0.05 | 0 | 1 |
| D | 0 | 0 | 0.25 | 0.5 | 0.25 | 1 |
| E | 0 | 0.33 | 0 | 0.33 | 0.34 | 1 |

| Eigenvalue | -0.21 | 0.212 | 0.655 | 0.732 | 1 |
|---|---|---|---|---|---|
| A | 0.25 | 0.328 | 0.448 | 0.064 | 0.168 |
| B | -0.77 | -0.37 | 0.197 | 0.235 | 0.146 |
| C | 0.295 | -0.05 | 0.406 | -0.88 | 0.951 |
| D | -0.23 | 0.658 | -0.67 | 0.262 | 0.176 |
| E | 0.456 | -0.57 | -0.38 | 0.317 | 0.122 |

| | A | B | C | D | E | Sum |
|---|---|---|---|---|---|---|
| Eigenvector | 0.168 | 0.146 | 0.951 | 0.176 | 0.122 | 1.563 |
| Rescaled $u_i$ | 0.107644 | 0.093604 | 0.608424 | 0.11232 | 0.078003 | 1 |
| $t_{ii}$ = 1/Rescaled | 9.289855 | 10.68333 | 1.64359 | 8.90278 | 12.82 | |

$$t_{ii} = \frac{1}{u_i}$$

In the long run it takes about 9 steps to return to D.

First passage times in ergodic chains

[Figure: state diagram of the five-state chain with states A to E.]

If we start at state D, how long does it take on average to reach state A?

We have to consider all possible ways from D to A, for example:

| Path | Probability |
|---|---|
| D → C → A | 0.25 · 0.05 = 0.0125 |
| D → E → B → A | 0.25 · 0.33 · 0.15 = 0.012375 |
| D → E → B → C → A | 0.25 · 0.33 · 0.35 · 0.05 = 0.00144375 |
| D → E → D → C → A | 0.25 · 0.33 · 0.25 · 0.05 = 0.00103125 |
| ... | |

The expected number of steps from point $j$ to point $k$ is the sum, over all such paths, of the path length times the path probability. Enumerating paths quickly becomes impractical, so the fundamental matrix is used instead.

The fundamental matrix of an ergodic chain:

$$N = (I_{tt} - P + W)^{-1}$$

$W$ is the matrix containing only the rescaled stationary point vector.

Applied to the original probability matrix $P$, the fundamental matrix $N$ of $P$ contains information on the expected number of times the process is in state $i$ when started in state $j$.

The expected average number of steps $t_{jk}$ to reach from $j$ to $k$ comes from the entries of the fundamental matrix $N$ divided by the respective entry of the (rescaled) stationary point vector:

$$t_{jk} = \frac{n_{kk} - n_{jk}}{w_{kk}}$$

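
The return-time rule $t_{ii} = 1/u_i$ can be sketched as follows for the five-state matrix tabulated in this section. The example assumes NumPy; `u` and `return_times` are illustrative names.

```python
import numpy as np

# Five-state chain of this section (rows: current state, row sums are 1).
P = np.array([[0.50, 0.50, 0.00, 0.00, 0.00],
              [0.25, 0.15, 0.35, 0.00, 0.25],
              [0.05, 0.00, 0.90, 0.05, 0.00],
              [0.00, 0.00, 0.25, 0.50, 0.25],
              [0.00, 0.33, 0.00, 0.33, 0.34]])

# Stationary vector: left eigenvector of eigenvalue 1, rescaled to sum 1.
w, vecs = np.linalg.eig(P.T)
u = vecs[:, np.argmin(np.abs(w - 1.0))].real
u = u / u.sum()

return_times = 1.0 / u            # expected steps to come back to each state
for state, steps in zip("ABCDE", return_times):
    print(state, round(steps, 3))
```

States visited often in the long run (C here) have short return times; rarely visited states (E) have long ones.
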
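Average first passage time

[Figure: state diagram of the five-state chain with states A to E.]

| P | A | B | C | D | E |
|---|---|---|---|---|---|
| A | 0.5 | 0.5 | 0 | 0 | 0 |
| B | 0.15 | 0.25 | 0.35 | 0 | 0.25 |
| C | 0.05 | 0 | 0.9 | 0.05 | 0 |
| D | 0 | 0 | 0.25 | 0.5 | 0.25 |
| E | 0 | 0.33 | 0 | 0.33 | 0.34 |

Rows of $P$ give the current state. Eigenvalues: -0.117299, 0.259272, 0.632003, 0.716025, 1.

| | A | B | C | D | E | Sum |
|---|---|---|---|---|---|---|
| Largest eigenvector | 0.14 | 0.147 | 0.955 | 0.176 | 0.122 | 1.541 |
| Rescaled | 0.091 | 0.095 | 0.62 | 0.114 | 0.079 | 1 |
| Return times | 11.04 | 10.48 | 1.613 | 8.736 | 12.58 | |

$I$ is the 5 x 5 identity matrix. $W$ is built from the rescaled vector (0.091, 0.095, 0.62, 0.114, 0.079) alone. The three tables that follow are laid out with the starting state in the columns and the target state in the rows, that is, for the transposed (column) form of $P$.

| I - P + W | A | B | C | D | E |
|---|---|---|---|---|---|
| A | 0.591 | -0.06 | 0.041 | 0.091 | 0.091 |
| B | -0.4 | 0.845 | 0.095 | 0.095 | -0.23 |
| C | 0.62 | 0.27 | 0.72 | 0.37 | 0.62 |
| D | 0.114 | 0.114 | 0.064 | 0.614 | -0.22 |
| E | 0.079 | -0.17 | 0.079 | -0.17 | 0.739 |

| $(I - P + W)^{-1}$ | A | B | C | D | E |
|---|---|---|---|---|---|
| A | 1.984 | 0.165 | -0.08 | -0.33 | -0.22 |
| B | 1.315 | 1.506 | -0.33 | -0.07 | 0.574 |
| C | -2.29 | -1.05 | 2.007 | -1.29 | -2.11 |
| D | -0.28 | -0.05 | -0.25 | 2.06 | 0.832 |
| E | 0.272 | 0.431 | -0.34 | 0.634 | 1.927 |

Each entry of the first passage table is the diagonal entry of the target state minus the entry for the starting state, divided by the stationary frequency of the target state:

| to / from | A | B | C | D | E |
|---|---|---|---|---|---|
| A | 0 | 20.07 | 22.78 | 25.55 | 24.33 |
| B | 2 | 0 | 19.26 | 16.52 | 9.773 |
| C | 6.935 | 4.935 | 0 | 5.322 | 6.643 |
| D | 20.43 | 18.43 | 20.21 | 0 | 10.73 |
| E | 20.82 | 18.82 | 28.55 | 16.27 | 0 |

Starting at D, it takes on average 25.55 steps to reach A.

You have sunny, cloudy, and rainy days with respective transition probabilities. How long does it take for a sunny day to follow a rainy day? How long does it take until a sunny day comes back?
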
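
The first passage table for the five-state chain can be reproduced with a small helper. The sketch assumes NumPy and a row-stochastic input (rows are the starting state), so its output is the transpose of a table laid out with starting states in the columns. The function name `mean_first_passage` is hypothetical.

```python
import numpy as np

def mean_first_passage(P):
    """Mean first passage times of an ergodic, row-stochastic matrix P.
    Entry [j, k] is the expected number of steps from state j to state k."""
    n = P.shape[0]
    w, vecs = np.linalg.eig(P.T)
    pi = vecs[:, np.argmin(np.abs(w - 1.0))].real
    pi = pi / pi.sum()                       # rescaled stationary vector
    W = np.tile(pi, (n, 1))                  # every row is the stationary vector
    Z = np.linalg.inv(np.eye(n) - P + W)     # fundamental matrix
    T = (np.diag(Z)[None, :] - Z) / pi[None, :]   # (z_kk - z_jk) / pi_k
    return T, pi

P = np.array([[0.50, 0.50, 0.00, 0.00, 0.00],
              [0.15, 0.25, 0.35, 0.00, 0.25],
              [0.05, 0.00, 0.90, 0.05, 0.00],
              [0.00, 0.00, 0.25, 0.50, 0.25],
              [0.00, 0.33, 0.00, 0.33, 0.34]])
T, pi = mean_first_passage(P)
d_to_a = T[3, 0]                             # start in D, target A
print(np.round(T, 2))
print(round(d_to_a, 2))
```

The same helper should apply to any ergodic chain, for instance a three-state weather chain, once its matrix is arranged with rows as the starting state.
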
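| P | Sunny | Cloudy | Rainy |
|---|---|---|---|
| Sunny | 0.5 | 0.25 | 0.5 |
| Cloudy | 0.35 | 0.25 | 0.35 |
| Rainy | 0.15 | 0.5 | 0.15 |

Columns give today's weather, rows tomorrow's; the column sums are 1. Eigenvalues: -0.1, 0, 1.

| | Sunny | Cloudy | Rainy | Sum |
|---|---|---|---|---|
| Largest eigenvector | 0.714 | 0.541 | 0.444 | 1.699 |
| Rescaled | 0.42 | 0.318 | 0.261 | 1 |
| Return times | 2.378 | 3.143 | 3.826 | |

$I$ is the 3 x 3 identity matrix, and every column of $W$ equals the rescaled vector (0.42, 0.318, 0.261).

| I - P + W | Sunny | Cloudy | Rainy |
|---|---|---|---|
| Sunny | 0.92 | 0.17 | -0.08 |
| Cloudy | -0.03 | 1.068 | -0.03 |
| Rainy | 0.111 | -0.24 | 1.111 |

| $(I - P + W)^{-1}$ | Sunny | Cloudy | Rainy |
|---|---|---|---|
| Sunny | 1.072 | -0.15 | 0.072 |
| Cloudy | 0.029 | 0.938 | 0.029 |
| Rainy | -0.1 | 0.217 | 0.899 |

First passage times (columns: starting weather, rows: target weather):

| to / from | Sunny | Cloudy | Rainy |
|---|---|---|---|
| Sunny | 0 | 2.919 | 2.378 |
| Cloudy | 2.857 | 0 | 2.857 |
| Rainy | 3.826 | 2.609 | 0 |

A sunny day follows a rainy day after 2.378 days on average, and a sunny day comes back after 2.378 days on average (its return time).

The construction of evolutionary trees from DNA sequence data

[Figure: aligned DNA sequences illustrating the four types of substitution: single substitution (T→C, A→G), parallel substitution, back substitution (G→C→G), and multiple substitution (G→C→A).]

Probabilities of DNA substitution

We assume equal substitution probabilities. Let $p$ be the probability of each specific substitution:

[Figure: the four nucleotides A, T, C, and G connected by arrows, each with probability $p$.]

$$p(A \to T) + p(A \to C) + p(A \to G) + p(A \to A) = 1$$

The probability that A mutates to T, C, or G is $P_{\neg A} = p + p + p$. The probability of no mutation is $p_A = 1 - 3p$.

Independent events: the probability that A mutates to T and C to G is $P_{AC} = p \times p$.

$$p(A \wedge B) = p(A)\,p(B) \text{ (independent events)}, \quad p(A \vee B) = p(A) + p(B) \text{ (mutually exclusive events)}$$

The probability matrix

$$P = \begin{pmatrix} 1-3p & p & p & p \\ p & 1-3p & p & p \\ p & p & 1-3p & p \\ p & p & p & 1-3p \end{pmatrix} \quad \text{(rows and columns in the order A, T, C, G)}$$

What is the probability that A stayed unchanged throughout 5 generations?

$$p_5 = (1 - 3p)^5$$

The Jukes-Cantor model (JC69) assumes that all substitution probabilities among these 4 nucleotides are equal.
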
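
The difference between "never changed" and "the same nucleotide again" can be made concrete with the substitution matrix. The sketch below assumes NumPy and a purely hypothetical value `p = 0.01`; it compares $(1-3p)^5$ with the diagonal entry of $P^5$, which also counts back substitutions and has the closed form $\frac{1}{4} + \frac{3}{4}(1-4p)^n$ for this matrix.

```python
import numpy as np

p = 0.01                       # hypothetical probability of one specific substitution
P = np.full((4, 4), p)         # order A, T, C, G
np.fill_diagonal(P, 1 - 3 * p)

n = 5
never_changed = (1 - 3 * p) ** n            # no substitution in any generation
P_n = np.linalg.matrix_power(P, n)
same_after_n = P_n[0, 0]                    # A again after n steps, back substitutions included
closed_form = 0.25 + 0.75 * (1 - 4 * p) ** n

print(never_changed, same_after_n, closed_form)
```

For small `p` the two numbers are close; they drift apart as `p` or `n` grows because back substitutions become more likely.
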
Arrhenius model

$$P = \begin{pmatrix} 1-3p & p & p & p \\ p & 1-3p & p & p \\ p & p & 1-3p & p \\ p & p & p & 1-3p \end{pmatrix}$$

$$\frac{dP(t)}{dt} = -\lambda P(t), \quad P(t) = P(0)\,e^{-\lambda t}$$

Substitution probability after time $t$, using the transition matrix $P$: $P(t) = P(0)\,P^t$.

The probability that nothing changes is the zero term of the Poisson distribution:

$$P(A \not\to C, T, G) = e^{-\lambda} = e^{-4pt}$$

The probability of at least one substitution is

$$P(A \to C \vee T \vee G) = 1 - e^{-\lambda} = 1 - e^{-4pt}$$

The probability to reach a nucleotide from any other is

$$\frac{1}{4}\left(1 - e^{-4pt}\right)$$

The probability that a nucleotide doesn't change after time $t$ is

$$P(A \to A \mid A) = 1 - 3 \cdot \frac{1}{4}\left(1 - e^{-4pt}\right) = \frac{1}{4} + \frac{3}{4} e^{-4pt}$$

Probability for a single difference:

$$P(A \to T, C, G) = 3 \cdot \frac{1}{4}\left(1 - e^{-4pt}\right) = \frac{3}{4} - \frac{3}{4} e^{-4pt}$$

Substitution matrix, with $a = \frac{1}{4} + \frac{3}{4} e^{-4pt}$ and $b = \frac{1}{4} - \frac{1}{4} e^{-4pt}$:

$$P(t) = \begin{pmatrix} a & b & b & b \\ b & a & b & b \\ b & b & a & b \\ b & b & b & a \end{pmatrix}$$

What is the probability of $x$ differences among $n$ nucleotides after time $t$?

We use the principle of maximum likelihood and the binomial (Bernoulli) distribution. With $q = \frac{3}{4} - \frac{3}{4} e^{-4pt}$ the probability that a single site differs:

$$p(x, t) = \binom{n}{x} q^x (1 - q)^{n - x} = \binom{n}{x} \left(\frac{3}{4} - \frac{3}{4} e^{-4pt}\right)^x \left(\frac{1}{4} + \frac{3}{4} e^{-4pt}\right)^{n - x}$$

$$\ln p(x, t) = \ln \binom{n}{x} + x \ln\left(\frac{3}{4} - \frac{3}{4} e^{-4pt}\right) + (n - x) \ln\left(\frac{1}{4} + \frac{3}{4} e^{-4pt}\right)$$

[Figure: plot of f(p) against p.]

Setting the derivative with respect to $t$ to zero gives $q = x/n$ and therefore

$$t = -\frac{1}{4p} \ln\left(1 - \frac{4x}{3n}\right)$$

This is the mean time to get $x$ different sites from a sequence of $n$ nucleotides. It is also a measure of distance that depends only on the number of substitutions.

[Figure: phylogenetic tree of Homo sapiens, Homo neandertalensis, Pan troglodytes, Pan paniscus, and Gorilla; time from $t = -\frac{1}{4p} \ln\left(1 - \frac{4x}{3n}\right)$ plotted against divergence (number of substitutions).]

Phylogenetic trees are the basis of any systematic classification.

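
The distance formula can be sketched as a pair of small functions: one for $t = -\frac{1}{4p}\ln\left(1 - \frac{4x}{3n}\right)$ and one for its inverse, the expected fraction of differing sites $\frac{3}{4}(1 - e^{-4pt})$. The function names and the numbers in the usage lines are hypothetical; only the Python standard library is assumed.

```python
import math

def jc_time(x, n, p):
    """Time estimate t = -1/(4p) * ln(1 - 4x/(3n)) for x differing sites out of n."""
    frac = x / n
    if not 0 <= frac < 0.75:
        raise ValueError("x/n must lie in [0, 0.75) for the logarithm to exist")
    return -math.log(1 - 4 * frac / 3) / (4 * p)

def expected_fraction(t, p):
    """Expected fraction of differing sites after time t: 3/4 * (1 - exp(-4pt))."""
    return 0.75 * (1 - math.exp(-4 * p * t))

# Hypothetical usage: 1000 aligned sites, substitution probability 0.01.
p = 0.01
t_true = 20.0
x = expected_fraction(t_true, p) * 1000
t_estimated = jc_time(x, 1000, p)
print(t_estimated)
```

The estimate diverges as `x/n` approaches 3/4, the saturation level at which two sequences are no more similar than random ones.
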