Bayesian Decision Theory


CHAPTER 3: Bayesian Decision Theory

Source: Alpaydın, with modifications by Christoph F. Eick

Remark: Belief networks will be covered in April. Utility theory will be covered as part of reinforcement learning.

Probability and Inference

The result of tossing a coin is in {Heads, Tails}.

Random variable: $X \in \{1, 0\}$ (1 = Heads, 0 = Tails)

Bernoulli: $P\{X = 1\} = p_o$

Sample: $\mathcal{X} = \{x^t\}_{t=1}^{N}$

Estimation: $p_o = \#\{\text{Heads}\} / \#\{\text{Tosses}\} = \sum_t x^t / N$

Prediction of next toss: Heads if $p_o > 1/2$, Tails otherwise
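The estimation and prediction steps above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides: the function names and the sample data are made up, and tosses are assumed to be encoded as 1 for heads and 0 for tails.

```python
def estimate_p(tosses):
    """Estimate p_o as the fraction of tosses that came up heads (encoded as 1)."""
    if not tosses:
        raise ValueError("need at least one toss")
    return sum(tosses) / len(tosses)


def predict_next(p_hat):
    """Predict Heads if the estimated p_o exceeds 1/2, Tails otherwise."""
    return "Heads" if p_hat > 0.5 else "Tails"


# Hypothetical sample of N = 8 tosses (5 heads, 3 tails).
sample = [1, 0, 1, 1, 0, 1, 1, 0]
p_hat = estimate_p(sample)
print(p_hat, predict_next(p_hat))  # 0.625 Heads
```

Note that a tie (an estimate of exactly 1/2) falls into the "Tails otherwise" branch, as in the rule above.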

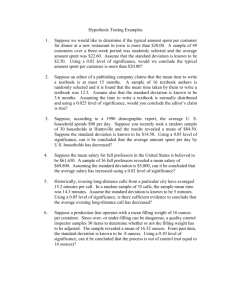

In the theory of probability and statistics, a Bernoulli trial is an experiment whose outcome is random and can be either of two possible outcomes, "success" and "failure".

P(X=k)=


Binomial Distribution
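For reference, the standard binomial probability mass function gives the probability of exactly $k$ successes in $n$ independent Bernoulli trials with success probability $p$: $P(X = k) = \binom{n}{k} p^k (1 - p)^{n - k}$. The sketch below evaluates it directly; the function name `binomial_pmf` and the example numbers are illustrative assumptions, not taken from the slides.

```python
from math import comb


def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p): k successes in n independent Bernoulli(p) trials."""
    if not 0 <= k <= n:
        return 0.0  # impossible counts have probability zero
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Probability of exactly 2 heads in 4 tosses of a fair coin.
print(binomial_pmf(2, 4, 0.5))  # 0.375
```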


Lecture Notes for E Alpaydın 2004 Introduction to Machine Learning © The MIT Press (V1.1)

Classification

Credit scoring: Inputs are income and savings.

Output is low-risk vs high-risk

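Input: $\mathbf{x} = [x_1, x_2]^T$, Output: $C \in \{0, 1\}$

Prediction:

$$\text{choose } \begin{cases} C = 1 & \text{if } P(C = 1 \mid x_1, x_2) > 0.5 \\ C = 0 & \text{otherwise} \end{cases}$$

or equivalently

$$\text{choose } \begin{cases} C = 1 & \text{if } P(C = 1 \mid x_1, x_2) > P(C = 0 \mid x_1, x_2) \\ C = 0 & \text{otherwise} \end{cases}$$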


Bayes’ Rule

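posterior = prior × likelihood / evidence:

$$P(C \mid \mathbf{x}) = \frac{P(C)\, p(\mathbf{x} \mid C)}{p(\mathbf{x})}$$

$$P(C = 0) + P(C = 1) = 1$$

$$p(\mathbf{x}) = p(\mathbf{x} \mid C = 1)\, P(C = 1) + p(\mathbf{x} \mid C = 0)\, P(C = 0)$$

$$P(C = 0 \mid \mathbf{x}) + P(C = 1 \mid \mathbf{x}) = 1$$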

see: http://en.wikipedia.org/wiki/Bayes'_theorem
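A small numeric sketch of the two-class case may help. It computes the evidence $p(\mathbf{x})$ and then the posterior $P(C = 1 \mid \mathbf{x})$. The function name and all numbers are hypothetical, and the likelihood values are assumed to be already evaluated at the observed $\mathbf{x}$.

```python
def posterior_two_class(prior_c1, lik_c1, lik_c0):
    """Return (P(C=1|x), P(C=0|x)) given P(C=1), p(x|C=1) and p(x|C=0)."""
    prior_c0 = 1.0 - prior_c1                          # P(C=0) + P(C=1) = 1
    evidence = lik_c1 * prior_c1 + lik_c0 * prior_c0   # p(x)
    post_c1 = lik_c1 * prior_c1 / evidence             # Bayes' rule
    return post_c1, 1.0 - post_c1                      # posteriors sum to 1


# Hypothetical values: P(C=1) = 0.2, p(x|C=1) = 0.6, p(x|C=0) = 0.1.
post_c1, post_c0 = posterior_two_class(0.2, 0.6, 0.1)
print(round(post_c1, 3), round(post_c0, 3))  # 0.6 0.4
```

Although the prior favours $C = 0$, the observation is six times more likely under $C = 1$, so the posterior for $C = 1$ rises above 0.5.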


Bayes’ Rule: K>2 Classes

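$$P(C_i \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid C_i)\, P(C_i)}{p(\mathbf{x})} = \frac{p(\mathbf{x} \mid C_i)\, P(C_i)}{\sum_{k=1}^{K} p(\mathbf{x} \mid C_k)\, P(C_k)}$$

$$P(C_i) \ge 0 \quad \text{and} \quad \sum_{i=1}^{K} P(C_i) = 1$$

choose $C_i$ if $P(C_i \mid \mathbf{x}) = \max_k P(C_k \mid \mathbf{x})$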

Remember: The disease/symptom example
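The K-class rule can be sketched the same way: multiply each likelihood by its prior, normalize by the sum, and pick the largest posterior. The helper names and the three-class numbers below are illustrative assumptions, not values from the slides.

```python
def posteriors(priors, likelihoods):
    """P(C_i|x) for every class, from P(C_i) and p(x|C_i) evaluated at the observed x."""
    if len(priors) != len(likelihoods):
        raise ValueError("need one likelihood per class")
    joint = [lik * prior for lik, prior in zip(likelihoods, priors)]
    evidence = sum(joint)  # p(x) = sum_k p(x|C_k) P(C_k)
    return [j / evidence for j in joint]


def most_probable_class(post):
    """Index i of the class with the largest posterior (first one wins on ties)."""
    return max(range(len(post)), key=post.__getitem__)


# Hypothetical three-class problem.
priors = [0.5, 0.3, 0.2]
likelihoods = [0.1, 0.4, 0.3]
post = posteriors(priors, likelihoods)
print([round(p, 3) for p in post], most_probable_class(post))
```

Here the class with the largest prior (index 0) is not the one chosen, because the likelihood of the observation under class 1 is much higher.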


Losses and Risks

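Actions: $\alpha_i$

Loss of $\alpha_i$ when the state is $C_k$: $\lambda_{ik}$

Expected risk (Duda and Hart, 1973):

$$R(\alpha_i \mid \mathbf{x}) = \sum_{k=1}^{K} \lambda_{ik}\, P(C_k \mid \mathbf{x})$$

choose $\alpha_i$ if $R(\alpha_i \mid \mathbf{x}) = \min_k R(\alpha_k \mid \mathbf{x})$

Remark: $\lambda_{ik}$ is the cost of choosing $i$ when $k$ is correct. If we use accuracy/error, then $\lambda_{ik} = 0$ if $i = k$ and $\lambda_{ik} = 1$ otherwise.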
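As a sketch of how the expected risk is evaluated, the code below stores the losses as a matrix `loss[i][k]` $= \lambda_{ik}$ and picks the action with minimum risk. The function names, the posterior values, and the asymmetric loss matrix are hypothetical; only the 0/1 loss comes from the remark above.

```python
def expected_risks(loss, post):
    """R(alpha_i|x) = sum_k loss[i][k] * post[k] for every action i."""
    return [sum(l_ik * p_k for l_ik, p_k in zip(row, post)) for row in loss]


def min_risk_action(loss, post):
    """Index of the action with the smallest expected risk (first one wins on ties)."""
    risks = expected_risks(loss, post)
    return min(range(len(risks)), key=risks.__getitem__)


post = [0.2, 0.5, 0.3]  # hypothetical posteriors P(C_k|x)

# 0/1 loss: the risk of action i is 1 - P(C_i|x), so class 1 is chosen.
zero_one = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
print(expected_risks(zero_one, post), min_risk_action(zero_one, post))

# Hypothetical asymmetric loss: missing class 0 is very costly,
# so action 0 wins even though class 0 is the least probable.
asymmetric = [[0, 1, 1], [10, 0, 1], [10, 1, 0]]
print(expected_risks(asymmetric, post), min_risk_action(asymmetric, post))
```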


Losses and Risks: 0/1 Loss

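$$\lambda_{ik} = \begin{cases} 0 & \text{if } i = k \\ 1 & \text{if } i \ne k \end{cases}$$

$$R(\alpha_i \mid \mathbf{x}) = \sum_{k=1}^{K} \lambda_{ik}\, P(C_k \mid \mathbf{x}) = \sum_{k \ne i} P(C_k \mid \mathbf{x}) = 1 - P(C_i \mid \mathbf{x})$$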

For minimum risk, choose the most probable class

Remark: Choosing the most probable class is not necessarily optimal under other loss functions.


Losses and Risks: Reject

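$$\lambda_{ik} = \begin{cases} 0 & \text{if } i = k \\ \lambda & \text{if } i = K + 1, \quad 0 < \lambda < 1 \\ 1 & \text{otherwise} \end{cases}$$

Risk for reject:

$$R(\alpha_{K+1} \mid \mathbf{x}) = \sum_{k=1}^{K} \lambda\, P(C_k \mid \mathbf{x}) = \lambda$$

$$R(\alpha_i \mid \mathbf{x}) = \sum_{k \ne i} P(C_k \mid \mathbf{x}) = 1 - P(C_i \mid \mathbf{x})$$

choose $C_i$ if $P(C_i \mid \mathbf{x}) > P(C_k \mid \mathbf{x})\ \forall k \ne i$ and $P(C_i \mid \mathbf{x}) > 1 - \lambda$; reject otherwise.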
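The reject rule above can be sketched as follows. The function name and the example posteriors are assumptions for illustration; `None` is used here to stand for the reject action $\alpha_{K+1}$.

```python
def decide_with_reject(post, lam):
    """Return the chosen class index, or None to reject.

    A class is chosen only if its posterior is strictly the largest
    and also exceeds 1 - lam, where lam is the reject loss (0 < lam < 1).
    """
    if not 0 < lam < 1:
        raise ValueError("reject loss must satisfy 0 < lam < 1")
    top = max(post)
    winners = [i for i, p in enumerate(post) if p == top]
    if len(winners) == 1 and top > 1 - lam:
        return winners[0]
    return None  # reject


# Hypothetical posteriors with reject loss lam = 0.3, i.e. threshold 1 - lam = 0.7.
print(decide_with_reject([0.8, 0.15, 0.05], 0.3))  # 0
print(decide_with_reject([0.45, 0.35, 0.2], 0.3))  # None
```

A smaller reject loss raises the threshold $1 - \lambda$, so more inputs are rejected; as $\lambda$ approaches 1 the threshold drops toward 0 and almost nothing is rejected.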


Example and Homework!

$C_1$ = has cancer

$C_2$ = does not have cancer

$\lambda_{12} = 9$

$\lambda_{21} = 72$

Homework:

a) Determine the optimal decision making strategy

Inputs: P(C1|x), P(C2|x)

Decision Making Strategy:…

b) Now assume we also have a reject option, and the cost of making no decision is 3:

$\lambda_{\text{reject},2} = 3$

$\lambda_{\text{reject},1} = 3$

Inputs: P(C1|x), P(C2|x)

Decision Making Strategy: …

Ungraded Homework: to be discussed Feb. 6!


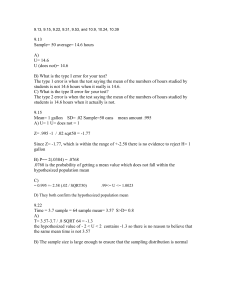

Homework:

a) Determine the optimal decision making strategy

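Input: $P(C_1 \mid \mathbf{x})$

$R(\alpha_1 \mid \mathbf{x}) = 9 \times P(C_2 \mid \mathbf{x})$

$R(\alpha_2 \mid \mathbf{x}) = 72 \times P(C_1 \mid \mathbf{x})$

$R(\text{reject} \mid \mathbf{x}) = 3$ (used in part b)

Setting the first two risks equal gives $9 \times P(C_2 \mid \mathbf{x}) = 72 \times P(C_1 \mid \mathbf{x})$, so $P(C_2 \mid \mathbf{x}) / P(C_1 \mid \mathbf{x}) = 8$. Using $P(C_1 \mid \mathbf{x}) + P(C_2 \mid \mathbf{x}) = 1$, we obtain the break-even point $P(C_1 \mid \mathbf{x}) = 1/9$, $P(C_2 \mid \mathbf{x}) = 8/9$, and the risk-minimizing decision rule becomes:

IF $P(C_1 \mid \mathbf{x}) > 1/9$ THEN choose $C_1$ ELSE choose $C_2$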

b) Now assume we also have a reject option, and the cost of making no decision is 3:

$\lambda_{\text{reject},2} = 3$

$\lambda_{\text{reject},1} = 3$

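Input: $P(C_1 \mid \mathbf{x})$

First, we equate $R(\text{reject} \mid \mathbf{x})$ with $R(\alpha_1 \mid \mathbf{x})$ and with $R(\alpha_2 \mid \mathbf{x})$. If $P(C_2 \mid \mathbf{x}) \ge 1/3$, i.e. $P(C_1 \mid \mathbf{x}) \le 2/3$, reject is at least as good as choosing class 1; if $P(C_1 \mid \mathbf{x}) \ge 1/24$, reject is at least as good as choosing class 2. Combining this with the previous decision rule, we obtain:

IF $P(C_1 \mid \mathbf{x}) \in [0, 1/24]$ THEN choose class 2

ELSE IF $P(C_1 \mid \mathbf{x}) \in [2/3, 1]$ THEN choose class 1

ELSE choose reject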
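The thresholds in this homework can be checked numerically by computing the three risks directly and taking the minimum. The sketch below uses the losses from the example (9, 72, and 3 for reject); the function names and the probe values of $P(C_1 \mid \mathbf{x})$ are illustrative assumptions.

```python
LOSS_12 = 9       # lambda_12: choose C1 (cancer) when C2 is correct
LOSS_21 = 72      # lambda_21: choose C2 (no cancer) when C1 is correct
LOSS_REJECT = 3   # cost of making no decision


def risks(p_c1):
    """Expected risk of each action given P(C1|x); P(C2|x) = 1 - P(C1|x)."""
    p_c2 = 1.0 - p_c1
    # Insertion order (class2, class1, reject) decides exact ties in decide().
    return {
        "class2": LOSS_21 * p_c1,
        "class1": LOSS_12 * p_c2,
        "reject": LOSS_REJECT,
    }


def decide(p_c1, allow_reject=True):
    """Return the action with minimum expected risk."""
    r = risks(p_c1)
    if not allow_reject:
        del r["reject"]
    return min(r, key=r.get)


# Part a) no reject option: the break-even point is P(C1|x) = 1/9.
print(decide(0.10, allow_reject=False))  # class2
print(decide(0.20, allow_reject=False))  # class1

# Part b) with reject: class2 below 1/24, class1 above 2/3, reject in between.
print(decide(0.03), decide(0.30), decide(0.80))  # class2 reject class1
```

Because missing a cancer case costs eight times as much as a false alarm, class 1 is chosen without a reject option as soon as its posterior exceeds 1/9, far below 0.5.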


Discriminant Functions

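choose $C_i$ if $g_i(\mathbf{x}) = \max_k g_k(\mathbf{x})$

where the discriminant functions $g_i(\mathbf{x}),\ i = 1, \dots, K$ can be any of:

$$g_i(\mathbf{x}) = \begin{cases} -R(\alpha_i \mid \mathbf{x}) \\ P(C_i \mid \mathbf{x}) \\ p(\mathbf{x} \mid C_i)\, P(C_i) \end{cases}$$

$K$ decision regions $\mathcal{R}_1, \dots, \mathcal{R}_K$:

$$\mathcal{R}_i = \{\mathbf{x} \mid g_i(\mathbf{x}) = \max_k g_k(\mathbf{x})\}$$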
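To make the idea of decision regions concrete, here is a one-dimensional sketch that uses $g_i(x) = p(x \mid C_i)\, P(C_i)$ as the discriminant. The Gaussian class-conditional densities, their parameters, the equal priors, and all function names are assumptions chosen for illustration; the slides do not specify a likelihood model.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a 1-D normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


def make_discriminant(mean, std, prior):
    """g_i(x) = p(x|C_i) P(C_i) with an assumed Gaussian likelihood."""
    return lambda x: gaussian_pdf(x, mean, std) * prior


def choose_class(x, discriminants):
    """Index i with the largest g_i(x) (first one wins on ties)."""
    scores = [g(x) for g in discriminants]
    return max(range(len(scores)), key=scores.__getitem__)


# Two hypothetical classes with equal priors and equal spread:
# the boundary between the two decision regions lies at the midpoint x = 1.5.
g = [make_discriminant(0.0, 1.0, 0.5), make_discriminant(3.0, 1.0, 0.5)]
print([choose_class(x, g) for x in (-1.0, 1.0, 2.0, 4.0)])  # [0, 0, 1, 1]
```

Dividing every $g_i$ by the same $p(x)$ would turn these scores into posteriors without changing which one is largest, which is why the unnormalized product is a valid discriminant.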
