Factorial Invariance - Texas Tech University Departments

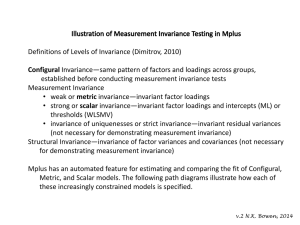

Factorial Invariance: Why It's Important and How to Test for It
Todd D. Little
University of Kansas
Director, Quantitative Training Program
Director, Center for Research Methods and Data Analysis
Director, Undergraduate Social and Behavioral Sciences Methodology Minor
Member, Developmental Psychology Training Program
crmda.KU.edu
Colloquium presented 5-24-2012 @ University of Turku, Finland
Special Thanks to: Mijke Rhemtulla & Wei Wu

Comparing Across Groups or Across Time
• In order to compare constructs across two or more groups OR across two or more time points, the equivalence of measurement must be established.
• This need is at the heart of the concept of Factorial Invariance.
• Factorial Invariance is assumed in any cross-group or cross-time comparison.
• SEM is an ideal procedure to test this assumption.
• Meredith (1993) provides the definitive rationale for the conditions under which invariance will hold (OR not): Selection Theorem.
• Note: Pearson originated selection theorem at the turn of the 20th century. It posits that if the selection process affects only the true score variances of a set of indicators, invariance will hold.

Classical Measurement Theorem
Xi = Ti + Si + ei
where Xi is a person's observed score on an item, Ti is the 'true' score (i.e., what we hope to measure), Si is the item-specific, yet reliable, component, and ei is random error, or noise.
Note that Si and ei are assumed to be normally distributed (with mean of zero) and uncorrelated with each other. And, across all items in a domain, the Si are uncorrelated with each other, as are the ei.

Selection Theorem on Measurement Theorem
[Figure: a selection process acting on the three indicator equations]
X1 = T1 + S1 + e1
X2 = T2 + S2 + e2
X3 = T3 + S3 + e3

Levels Of Invariance
• There are four levels of invariance:
1) Configural invariance - the pattern of fixed & free parameters is the same.
2) Weak factorial invariance - the relative factor loadings are proportionally equal across groups.
3) Strong factorial invariance - the relative indicator means (intercepts) are proportionally equal across groups.
4) Strict factorial invariance - the indicator residuals are exactly equal across groups (this level is not recommended).

The Covariance Structures Model
$$\Sigma = \Lambda \Psi \Lambda' + \Theta$$
where...
Σ = matrix of model-implied indicator variances and covariances
Λ = matrix of factor loadings
Ψ = matrix of latent variables / common factor variances and covariances
Θ = matrix of unique factor variances (i.e., S + e; all covariances are usually 0)

The Mean Structures Model
$$\mu = \tau + \Lambda \alpha$$
where...
μ = vector of model-implied indicator means
τ = vector of indicator intercepts
Λ = matrix of factor loadings
α = vector of factor means

Factorial Invariance
• An ideal method for investigating the degree of invariance characterizing an instrument is multiple-group (or multiple-occasion) confirmatory factor analysis, or mean and covariance structures (MACS) models.
• MACS models involve specifying the same factor model in multiple groups (occasions) simultaneously and sequentially imposing a series of cross-group (or occasion) constraints.

Some Equations
Configural invariance: Same factor loading pattern across groups, no constraints.
$$\Sigma^{(g)} = \Lambda^{(g)} \Psi^{(g)} \Lambda^{(g)\prime} + \Theta^{(g)}, \qquad \mu^{(g)} = \tau^{(g)} + \Lambda^{(g)} \alpha^{(g)}$$
Weak (metric) invariance: Factor loadings proportionally equal across groups.
$$\Sigma^{(g)} = \Lambda \Psi^{(g)} \Lambda' + \Theta^{(g)}, \qquad \mu^{(g)} = \tau^{(g)} + \Lambda \alpha^{(g)}$$
Strong (scalar) invariance: Loadings & intercepts proportionally equal across groups.
$$\Sigma^{(g)} = \Lambda \Psi^{(g)} \Lambda' + \Theta^{(g)}, \qquad \mu^{(g)} = \tau + \Lambda \alpha^{(g)}$$
Strict invariance: Add unique variances to be exactly equal across groups.
$$\Sigma^{(g)} = \Lambda \Psi^{(g)} \Lambda' + \Theta, \qquad \mu^{(g)} = \tau + \Lambda \alpha^{(g)}$$

Models and Invariance
• It is useful to remember that all models are, strictly speaking, incorrect. Invariance models are no exception.
"...invariance is a convenient fiction created to help insecure humans make sense out of a universe in which there may be no sense." (Horn, McArdle, & Mason, 1983, p. 186).

Measured vs. Latent Variables
• Measured (Manifest) Variables
  • Observable
  • Directly measurable
  • A proxy for intended construct
• Latent Variables
  • The construct of interest
  • Invisible
  • Must be inferred from measured variables
  • Usually 'causes' the measured variables (cf. reflective indicators vs. formative indicators)
  • What you wish you could measure directly

Manifest vs. Latent Variables
• "Indicators are our worldly window into the latent space" (John R. Nesselroade)
[Figure: one latent variable ξ1 with variance ψ11, loadings λ11, λ21, λ31 on indicators X1, X2, X3, and residual variances θ11, θ22, θ33]

Selection Theorem
[Figure: the same single-factor model drawn for Group (Time) 1 and Group (Time) 2, with a selection influence acting between the two; each panel shows ψ11, λ11, λ21, λ31, X1, X2, X3, θ11, θ22, θ33]

Estimating Latent Variables
Implied variance/covariance matrix:
      X1                  X2                  X3
X1    λ11ψ11λ11 + θ11
X2    λ11ψ11λ21           λ21ψ11λ21 + θ22
X3    λ11ψ11λ31           λ21ψ11λ31           λ31ψ11λ31 + θ33
To solve for the parameters of a latent construct, it is necessary to set a scale (and make sure the parameters are identified).

Scale Setting and Identification
Three methods of scale-setting (part of identification process)
Arbitrary metric methods:
• Fix the latent variance at 1.0; latent mean at 0 (reference-group method)
• Fix a loading at 1.0; an indicator's intercept at 0 (marker-variable method)
Non-arbitrary metric method:
• Constrain the average of loadings to be 1 and the average of intercepts at 0 (effects-coding method; Little, Slegers, & Card, 2006)

1. Fix the Latent Variance to 1.0 (and Latent Mean to 0.0)
Implied variance/covariance matrix with ψ11 = 1.0*:
      X1                  X2                  X3
X1    λ11² + θ11
X2    λ11(1)λ21           λ21² + θ22
X3    λ11(1)λ31           λ21(1)λ31           λ31² + θ33

2. Fix a Marker Variable to 1.0 (and its intercept to 0.0)
Implied variance/covariance matrix with λ11 = 1.0*:
      X1                  X2                  X3
X1    ψ11 + θ11
X2    (1)ψ11λ21           λ21ψ11λ21 + θ22
X3    (1)ψ11λ31           λ21ψ11λ31           λ31ψ11λ31 + θ33

3. Constrain Loadings to Average 1.0 (and the intercepts to average 0.0)
Implied variance/covariance matrix with λ11 = 3 - λ21 - λ31:
      X1                                   X2                  X3
X1    (3-λ21-λ31)ψ11(3-λ21-λ31) + θ11
X2    (3-λ21-λ31)ψ11λ21                    λ21ψ11λ21 + θ22
X3    (3-λ21-λ31)ψ11λ31                    λ21ψ11λ31           λ31ψ11λ31 + θ33

Configural invariance
[Figure: two-group path diagrams (Group 1 and Group 2), each with two constructs and six indicators. Latent variances are fixed at 1* in both groups; loadings and residual variances are freely estimated in each group. Latent correlations: -.07 in Group 1 and -.32 in Group 2.]

Weak factorial invariance (equate λs across groups)
[Figure: two-group path diagram in LISREL notation. Group 1: latent variances PS(1,1) and PS(2,2) fixed at 1*, covariance PS(2,1), loadings LY(1,1), LY(2,1), LY(3,1), LY(4,2), LY(5,2), LY(6,2), residuals TE(1,1) to TE(6,6). Group 2: each loading is constrained equal to its Group 1 counterpart (=LY(1,1) through =LY(6,2)); PS(1,1), PS(2,2), PS(2,1), and TE(1,1) to TE(6,6) are estimated.]
Note: Variances are now freed in group 2.

F: Test of Weak Factorial Invariance (9.2.1.TwoGroup.Loadings.FactorID)
[Figure: two-group path diagram, fixed-factor identification. Constructs: Positive (indicators Great + Glad, Cheerful + Good, Happy + Super) and Negative (indicators Terrible + Sad, Down + Blue, Unhappy + Bad).]
Model Fit: χ²(20, n=759) = 49.0; RMSEA = .062 (.040-.084); CFI = .99

M: Test of Weak Factorial Invariance (9.2.1.TwoGroup.Loadings.MarkerID)
[Figure: the same two-group model, marker-variable identification.]
Model Fit: χ²(20, n=759) = 49.0; RMSEA = .062 (.040-.084); CFI = .99; NNFI = .99

EF: Test of Weak Factorial Invariance (9.2.1.TwoGroup.Loadings.EffectsID)
[Figure: the same two-group model, effects-coding identification.]
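
The fixed-factor, marker-variable, and effects-coding versions of the weak invariance model report the same fit because the three scale-setting methods are rescalings of one model. The following is a minimal sketch of that idea for a single three-indicator construct, not the analysis behind the slides: the loadings and unique variances are illustrative values assumed for the example, and `implied_cov` is a hypothetical helper that evaluates Σ = ΛΨΛ' + Θ.

```python
import numpy as np

def implied_cov(loadings, psi, theta):
    """Model-implied covariance for one factor: Sigma = Lambda psi Lambda' + Theta."""
    lam = np.asarray(loadings, dtype=float).reshape(-1, 1)
    return psi * (lam @ lam.T) + np.diag(theta)

# Illustrative (assumed) values, not estimates from a fitted model.
theta = np.array([0.12, 0.11, 0.10])      # unique variances
lam_fixed = np.array([0.58, 0.59, 0.64])  # loadings when psi is fixed at 1.0

# 1) Fixed-factor method: latent variance fixed at 1.0.
sigma_fixed = implied_cov(lam_fixed, 1.0, theta)

# 2) Marker-variable method: first loading fixed at 1.0,
#    so the latent variance absorbs the squared first loading.
lam_marker = lam_fixed / lam_fixed[0]
psi_marker = lam_fixed[0] ** 2
sigma_marker = implied_cov(lam_marker, psi_marker, theta)

# 3) Effects-coding method: loadings constrained to average 1.0.
lam_effects = lam_fixed / lam_fixed.mean()
psi_effects = lam_fixed.mean() ** 2
sigma_effects = implied_cov(lam_effects, psi_effects, theta)

# All three parameterizations imply the same covariance matrix.
same_sigma = (np.allclose(sigma_fixed, sigma_marker)
              and np.allclose(sigma_fixed, sigma_effects))
print(same_sigma)
```

Because the implied covariance matrix is unchanged, the choice of scale-setting method changes how the loadings and latent variance are expressed but not how well the model reproduces the data.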
Model Fit: χ²(20, n=759) = 49.0; RMSEA = .062 (.040-.084); CFI = .99; NNFI = .99

Results: Test of Weak Factorial Invariance (9.2.TwoGroup.Loadings)
• The results of the two-group model with equality constraints on the corresponding loadings provide a test of proportional equivalence of the loadings.
Nested significance test:
χ²(20, n=759) = 49.0 (weak) minus χ²(16, n=759) = 46.0 (configural) gives Δχ²(4, n=759) = 3.0, p > .50
The difference in χ² is non-significant and therefore the constraints are supported. The loadings are invariant across the two age groups.
"Reasonableness" tests:
RMSEA: weak invariance = .062 (.040-.084) versus configural = .069 (.046-.093). The two RMSEAs fall within one another's confidence intervals.
CFI: weak invariance = .99 versus configural = .99. The CFIs are virtually identical (one rule of thumb is that ΔCFI ≤ .01 is acceptable).

Adding information about means
• When we regress indicators on to constructs we can also estimate the intercept of the indicator.
• This information can be used to estimate the latent mean of a construct.
• Equivalence of the indicator intercepts across groups is, in fact, a critical criterion to pass in order to say that one has strong factorial invariance.
[Figure: two-construct path diagram with indicator intercepts TY(1) to TY(6), latent means AL(1) and AL(2), and latent variances fixed at 1*]

Adding information about means (9.3.0.TwoGroups.FreeMeans)
[Figure: two-group model with latent means fixed at 0* and indicator intercepts freely estimated in each group. Intercepts (Group 1 / Group 2): indicator 1 = 3.14 / 3.07; indicator 2 = 2.99 / 2.85; indicator 3 = 3.07 / 2.98; indicator 4 = 1.70 / 1.72; indicator 5 = 1.53 / 1.58; indicator 6 = 1.55 / 1.55.]
Model Fit: χ²(20, n=759) = 49.0 (note that model fit does not change)

Strong factorial invariance (a.k.a. intercept invariance): Factor Identification Method (9.3.1.TwoGroups.Intercepts.FactorID)
[Figure: two-group model with loadings and intercepts equated across groups. Latent variances are fixed at 1* in Group 1 and estimated in Group 2 (1.22 for Positive, 0.85 for Negative); latent means are fixed at 0* in Group 1 and estimated in Group 2 (-.16 for Positive, .04 for Negative); latent correlations are -.07 and -.33.]
Model Fit: χ²(24, n=759) = 58.4, RMSEA = .061 (.041; .081), NNFI = .986, CFI = .989

Strong factorial invariance (a.k.a. intercept invariance): Marker Var. Identification Method (9.3.1.TwoGroups.Intercepts.MarkerID)
[Figure: the same two-group model, marker-variable identification (marker loading fixed at 1*, marker intercept fixed at 0*).]
Model Fit: χ²(24, n=759) = 58.4, RMSEA = .061 (.041; .081), NNFI = .986, CFI = .989

Strong factorial invariance (a.k.a. intercept invariance): Effects Identification Method (9.3.1.TwoGroups.Intercepts.EffectsID)
[Figure: the same two-group model, effects-coding identification.]
Model Fit: χ²(24, n=759) = 58.4, RMSEA = .061 (.041; .081), NNFI = .986, CFI = .989

How Are the Means Reproduced?
Indicator mean = intercept + loading × (latent mean), i.e., mean of Y = intercept + slope(X)
For Positive Affect then:
Group 1 (7th grade): Ȳ = τ + λ(α)
3.14 ≈ 3.15 + .58(0)
2.99 ≈ 2.97 + .59(0)
3.07 ≈ 3.08 + .64(0)
Group 2 (8th grade): Ȳ = τ + λ(α)
3.07 ≈ 3.15 + .58(-.16) = 3.06
2.85 ≈ 2.97 + .59(-.16) = 2.88
2.97 ≈ 3.08 + .64(-.16) = 2.98
Note: in the raw metric the observed difference would be -.10:
3.14 vs. 3.07 = -.07
2.99 vs. 2.85 = -.14
3.07 vs. 2.97 = -.10
which gives an average observed difference of -.10 (equivalently, the averaged indicator means are 3.07 in Group 1 and 2.96 in Group 2, a difference of about -.10).

The complete model with means, std's, and r's (9.7.1.Phantom variables.With Means.FactorID)
[Figure: two-group model with phantom variables (Positive 3, Negative 4) carrying the latent standard deviations and correlations. Latent SDs are 1.0* in Group 1 and 1.11 (Positive) and .92 (Negative) in Group 2; latent correlations are -.07 and -.32. Latent means are estimated only in Group 2.]
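
The mean-reproduction arithmetic for Positive Affect (indicator mean = intercept + loading × latent mean) can be checked with a few lines of code. This is a small sketch that reuses the rounded intercepts, loadings, and latent mean quoted in the "How Are the Means Reproduced?" example; `implied_means` is a hypothetical helper name, and the observed Group 2 means are the values quoted in that example.

```python
import numpy as np

# Invariant intercepts (tau) and loadings (lambda) for the three
# Positive Affect indicators, as quoted in the worked example.
tau = np.array([3.15, 2.97, 3.08])
lam = np.array([0.58, 0.59, 0.64])

def implied_means(tau, lam, alpha):
    """Model-implied indicator means: mu = tau + lambda * alpha."""
    return tau + lam * alpha

# Group 1 latent mean is fixed at 0, so implied means equal the intercepts.
mu_g1 = implied_means(tau, lam, 0.0)

# Group 2 latent mean is estimated at -.16.
mu_g2 = implied_means(tau, lam, -0.16)

# Observed Group 2 indicator means quoted in the example.
observed_g2 = np.array([3.07, 2.85, 2.97])

print(np.round(mu_g2, 2))                # implied Group 2 means
print(np.round(mu_g2 - observed_g2, 2))  # implied minus observed
```

The implied Group 2 means round to the 3.06, 2.88, and 2.98 shown in the example; the small gaps from the observed means are the price of holding loadings and intercepts equal across groups.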
Group 1 latent means are fixed at 0; the Group 2 estimates are -.16 for Positive (z = 2.02) and .04 for Negative (z = 0.53).
Model Fit: χ²(24, n=759) = 58.4, RMSEA = .061 (.041; .081), NNFI = .986, CFI = .989

The complete model with means, std's, and r's (9.7.2.Phantom variables.With Means.MarkerID)
[Figure: the same phantom-variable model, marker-variable identification; latent correlations -.07 and -.32.]
Model Fit: χ²(24, n=759) = 58.4, RMSEA = .061 (.041; .081), NNFI = .986, CFI = .989

The complete model with means, std's, and r's (9.7.3.Phantom variables.With Means.EffectsID)
[Figure: the same phantom-variable model, effects-coding identification; latent correlations -.07 and -.32.]
Model Fit: χ²(24, n=759) = 58.4, RMSEA = .061 (.041; .081), NNFI = .986, CFI = .989

Effect size of latent mean differences
Cohen's d = (M2 - M1) / SDpooled
where SDpooled = √[(n1Var1 + n2Var2)/(n1+n2)]
Latent d = (α2j - α1j) / √ψpooled
where √ψpooled = √[(n1ψ1jj + n2ψ2jj)/(n1+n2)]
For Positive Affect:
√ψpooled = √[(380×1 + 379×1.22)/(380+379)] ≈ 1.05
dpositive = (-.16 - 0) / 1.05 = -.152

Comparing parameters across groups
1. Configural Invariance: inter-ocular/model fit test
2. Invariance of Loadings: RMSEA/CFI difference test
3. Invariance of Intercepts: RMSEA/CFI difference test
4. Invariance of Variance/Covariance Matrix: χ² difference test
5. Invariance of Variances: χ² difference test
6. Invariance of Correlations/Covariances: χ² difference test
3b or 7. Invariance of Latent Means: χ² difference test

The 'Null' Model
• The standard 'null' model assumes that all covariances are zero; only variances are estimated.
• In longitudinal research, a more appropriate 'null' model is to assume that the variances of each corresponding indicator are equal at each time point and their means (intercepts) are also equal at each time point (see Widaman & Thompson).
• In multiple-group settings, a more appropriate 'null' model is to assume that the variances of each corresponding indicator are equal across groups and their means are also equal across groups.

References
Byrne, B. M., Shavelson, R. J., & Muthén, B. (1989). Testing for the equivalence of factor covariance and mean structures: The issue of partial measurement invariance. Psychological Bulletin, 105, 456-466.
Cheung, G. W., & Rensvold, R. B. (1999). Testing factorial invariance across groups: A reconceptualization and proposed new method. Journal of Management, 25, 1-27.
Gonzalez, R., & Griffin, D. (2001). Testing parameters in structural equation modeling: Every "one" matters. Psychological Methods, 6, 258-269.
Kaiser, H. F., & Dickman, K. (1962). Sample and population score matrices and sample correlation matrices from an arbitrary population correlation matrix. Psychometrika, 27, 179-182.
Kaplan, D. (1989). Power of the likelihood ratio test in multiple group confirmatory factor analysis under partial measurement invariance. Educational and Psychological Measurement, 49, 579-586.
Little, T. D., Slegers, D. W., & Card, N. A. (2006). A non-arbitrary method of identifying and scaling latent variables in SEM and MACS models. Structural Equation Modeling, 13, 59-72.
MacCallum, R. C., Roznowski, M., & Necowitz, L. B. (1992). Model modification in covariance structure analysis: The problem of capitalization on chance. Psychological Bulletin, 111, 490-504.
Meredith, W. (1993). Measurement invariance, factor analysis and factorial invariance. Psychometrika, 58, 525-543.
Steenkamp, J.-B. E. M., & Baumgartner, H. (1998). Assessing measurement invariance in cross-national consumer research. Journal of Consumer Research, 25, 78-90.

Update
Dr. Todd Little is currently at Texas Tech University
Director, Institute for Measurement, Methodology, Analysis and Policy (IMMAP)
Director, "Stats Camp"
Professor, Educational Psychology and Leadership
Email: yhat@ttu.edu
IMMAP (immap.educ.ttu.edu)
Stats Camp (Statscamp.org)
www.Quant.KU.edu