Review3


Questions and Topics Review Dec. 10, 2013

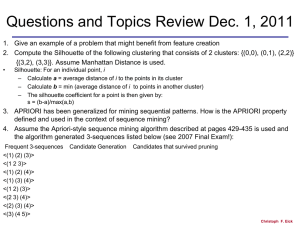

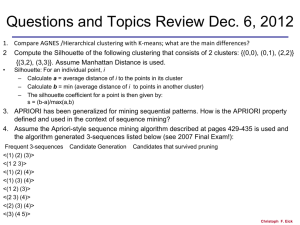

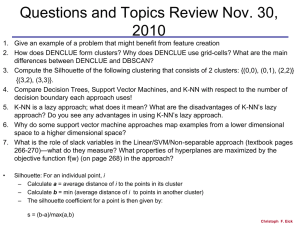

1. Compare AGNES/hierarchical clustering with K-means; what are the main differences?

2. K-means has a runtime complexity of O(t*k*n*d), where t is the number of iterations, d is the dimensionality of the dataset (the number of attributes an object has), k is the number of clusters, and n is the number of objects in the dataset. Explain! In general, is K-means an efficient clustering algorithm? Give a reason for your answer by discussing its runtime with reference to its runtime complexity formula! [5]

3. Assume the Apriori-style sequence mining algorithm described on pages 429-435 is used and that the algorithm generated the 3-sequences listed below (see the 2007 Final Exam!):

Frequent 3-sequences

<(1) (2) (3)>

<(1 2 3)>

<(1) (2) (4)>

<(1) (3) (4)>

<(1 2) (3)>

<(2 3) (4)>

<(2) (3) (4)>

<(3) (4 5)>

Candidate Generation

Candidates that survived pruning

Christoph F. Eick

Answers Question 1

a. AGNES creates a set of clusterings (a dendrogram); K-means creates a single clustering.

b. K-means forms clusters using an iterative procedure that minimizes an objective function; AGNES forms the dendrogram by repeatedly merging the two closest clusters until a single cluster is obtained.

c. …

[Figure: example dendrogram over objects 1 to 6 (leaf order 1, 3, 2, 5, 4, 6), merge distance axis from 0 to 0.2]
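To make the contrast in a and b concrete, here is a minimal Python sketch of AGNES. It assumes Euclidean distance and single link (minimum pairwise distance) as the inter-cluster distance, and the names `agnes` and `linkage` are illustrative. The sketch records every merge together with its distance, which is the full dendrogram. K-means, by contrast, would return only one partition for a fixed k.

```python
import math
from itertools import combinations

def agnes(points, linkage=min):
    # start with every object in its own cluster
    clusters = {i: [i] for i in range(len(points))}
    next_id = len(points)
    merges = []  # (members of the merged cluster, merge distance), in merge order
    while len(clusters) > 1:
        best = None
        # find the two closest clusters under the chosen linkage
        for a, b in combinations(sorted(clusters), 2):
            dist = linkage(math.dist(points[i], points[j])
                           for i in clusters[a] for j in clusters[b])
            if best is None or dist < best[2]:
                best = (a, b, dist)
        a, b, dist = best
        # merge them into a new cluster and record the merge (one dendrogram level)
        clusters[next_id] = clusters.pop(a) + clusters.pop(b)
        merges.append((sorted(clusters[next_id]), dist))
        next_id += 1
    return merges

points = [(0, 0), (0, 1), (5, 0), (5, 2), (20, 0)]
for members, dist in agnes(points):
    print(members, dist)
```

Passing `linkage=max` instead gives complete link. Cutting the merge list at any distance yields one flat clustering, so the dendrogram contains all of them.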


Answers Questions 2&3

3) Association Rule and Sequence Mining [15]

a) Assume the Apriori-style sequence mining algorithm described on pages 429-435 is used and the algorithm generated the 3-sequences listed below. What candidate 4-sequences are generated from this 3-sequence set? Which of the generated 4-sequences survive the pruning step? Use the format of Figure 7.6 in the textbook on page 435 to describe your answer! [7]

Frequent 3-sequences:
<(1) (2) (3)>
<(1 2 3)>
<(1) (2) (4)>
<(1) (3) (4)>
<(1 2) (3)>
<(2 3) (4)>
<(2) (3) (4)>
<(3) (4 5)>

Candidate Generation:
<(1) (2) (3) (4)> survived
<(1 2 3) (4)> pruned, (1 3) (4) is infrequent
<(1) (3) (4 5)> pruned, (1) (4 5) is infrequent
<(1 2) (3) (4)> pruned, (1 2) (4) is infrequent
<(2 3) (4 5)> pruned, (2) (4 5) is infrequent
<(2) (3) (4 5)> pruned, (2) (4 5) is infrequent

Candidates that survived pruning:
<(1) (2) (3) (4)>

Grading note: What if the answers are correct, but the explanation for this part isn't given? Do I need to take any points off? Give an extra point if the explanation is correct and present; otherwise subtract a point; more than 2 errors: 2 points or less!
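The candidate generation and pruning steps can be sketched in Python as follows. This is a minimal sketch under two assumptions. First, it uses the GSP-style merge rule: s1 and s2 are merged when s1 without its first item equals s2 without its last item. Second, it uses the pruning rule from the answer above: a candidate is pruned if any (k-1)-subsequence obtained by deleting one item is infrequent. The tuple encoding of sequences and all function names are illustrative.

```python
from itertools import product

# A sequence is a tuple of elements; an element is a sorted tuple of items,
# e.g. <(1 2) (3)> is ((1, 2), (3,)).

def drop_first(seq):
    # remove the first item of the first element
    rest = seq[0][1:]
    return ((rest,) if rest else ()) + seq[1:]

def drop_last(seq):
    # remove the last item of the last element
    rest = seq[-1][:-1]
    return seq[:-1] + ((rest,) if rest else ())

def merge(s1, s2):
    # merge only if s1 without its first item equals s2 without its last item
    if drop_first(s1) != drop_last(s2):
        return None
    item = s2[-1][-1]
    if len(s2[-1]) > 1:
        # the last two items of s2 share an element: extend s1's last element
        return s1[:-1] + (s1[-1] + (item,),)
    # otherwise the last item of s2 becomes a new element
    return s1 + ((item,),)

def one_item_deletions(seq):
    # all (k-1)-subsequences obtained by deleting a single item
    for i, element in enumerate(seq):
        for j in range(len(element)):
            reduced = element[:j] + element[j + 1:]
            yield seq[:i] + ((reduced,) if reduced else ()) + seq[i + 1:]

def generate_and_prune(frequent):
    frequent_set = set(frequent)
    candidates = []
    for s1, s2 in product(frequent, repeat=2):
        c = merge(s1, s2)
        if c is not None and c not in candidates:
            candidates.append(c)
    survivors = [c for c in candidates
                 if all(sub in frequent_set for sub in one_item_deletions(c))]
    return candidates, survivors

def fmt(seq):
    # print a sequence in the slide notation, e.g. <(1 2) (3)>
    return "<" + " ".join("(" + " ".join(map(str, e)) + ")" for e in seq) + ">"

F3 = [((1,), (2,), (3,)), ((1, 2, 3),), ((1,), (2,), (4,)), ((1,), (3,), (4,)),
      ((1, 2), (3,)), ((2, 3), (4,)), ((2,), (3,), (4,)), ((3,), (4, 5))]
candidates, survivors = generate_and_prune(F3)
print([fmt(c) for c in candidates])
print([fmt(c) for c in survivors])
```

On the eight frequent 3-sequences above, this generates the same six candidates as the table and keeps only <(1) (2) (3) (4)>.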

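Answer Question 2:

t: number of iterations; k: number of clusters; n: number of objects to be clustered; d: number of attributes

In each iteration, each of the n points is compared to the k centroids to assign it to its nearest centroid, which requires O(k*n) distance computations; each distance computation has complexity O(d). Therefore, the runtime is O(t*k*n*d). Since t, k and d are usually much smaller than n, the runtime grows essentially linearly with n, which is why K-means is generally considered an efficient clustering algorithm.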
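The counting argument can be checked with a minimal K-means sketch that tallies distance computations. To match the formula, it assumes a fixed number of iterations t instead of a convergence test. It also assumes random initial centroids drawn from the data. The function name and the counter are illustrative.

```python
import math
import random

def kmeans(points, k, iterations, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)          # initial centroids drawn from the data
    distance_computations = 0
    for _ in range(iterations):                # t iterations
        clusters = [[] for _ in range(k)]
        for p in points:                       # n objects
            # k distances per object, each costing O(d)
            dists = [math.dist(p, c) for c in centroids]
            distance_computations += k
            clusters[dists.index(min(dists))].append(p)
        # recomputing centroids costs O(n*d) per iteration, dominated by the assignment step
        for j, members in enumerate(clusters):
            if members:
                centroids[j] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centroids, distance_computations

points = [(float(i), float(i % 3)) for i in range(30)]
centroids, count = kmeans(points, k=3, iterations=5)
print(count)  # t*k*n distance computations, each of cost O(d)
```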


4. Gaussian Kernel Density Estimation and DENCLUE

a. Assume we have a 2D dataset X containing 4 objects: X={(1,0), (0,1), (1,2), (3,4)}; moreover, we use the Gaussian kernel density function to measure the density of X. Assume we want to compute the density at point (1,1); you can also assume h=1 (σ=1) and that we use Manhattan distance as the distance function! Give a sketch of how the Gaussian Kernel Density Estimation approach determines the density for point (1,1). Be specific!

b. What is a density attractor? How does DENCLUE form clusters?

5) PageRank [8]

a) What does PageRank compute? What are the challenges in using the PageRank algorithm in practice? [3]

b) Give the equation system that PageRank would use for the webpage structure given below. Give a sketch of an approach that determines the page rank of the 4 pages from this equation system! [5]

[Figure: link structure among the four webpages P1, P2, P3 and P4]


Answer Question 4


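a. The Manhattan distances from (1,1) to the four objects are 1, 1, 1 and 5, so with σ=1 the density of (1,1) is computed as follows:

$$f_X((1,1)) = e^{-1/2} + e^{-1/2} + e^{-1/2} + e^{-25/2} = 3e^{-1/2} + e^{-25/2} \approx 1.8196$$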
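The computation in part a can be reproduced with a minimal Python sketch. It assumes the same unnormalized kernel sum as above (no 1/(N·h) factor), σ = 1, and Manhattan distance. The function names are illustrative.

```python
import math

def manhattan(p, q):
    return sum(abs(a - b) for a, b in zip(p, q))

def gaussian_kde(x, data, sigma=1.0, dist=manhattan):
    # sum of Gaussian kernels e^(-d(x, x_i)^2 / (2 sigma^2)) over all objects
    return sum(math.exp(-dist(x, p) ** 2 / (2 * sigma ** 2)) for p in data)

X = [(1, 0), (0, 1), (1, 2), (3, 4)]
print([manhattan((1, 1), p) for p in X])   # [1, 1, 1, 5]
print(round(gaussian_kde((1, 1), X), 4))   # 1.8196
```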

b. A density attractor is a local maximum of a density function. DENCLUE iterates over the objects in the dataset and uses hill climbing to associate each point with a density attractor. Next, it forms clusters such that each cluster contains the objects in the dataset that are associated with the same density attractor; objects that belong to a cluster whose density (of its attractor) is below a user-defined threshold are considered outliers.

$$f_{Gaussian}(x, y) = e^{-\frac{d(x, y)^2}{2\sigma^2}}$$

$$f^{D}_{Gaussian}(x) = \sum_{i=1}^{N} e^{-\frac{d(x, x_i)^2}{2\sigma^2}}$$
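The DENCLUE procedure from part b can be sketched in Python. This is a minimal sketch, not DENCLUE's reference implementation, and it rests on several assumptions. It uses Euclidean distance, so that the density has a smooth gradient. Hill climbing takes fixed-size normalized gradient steps of length `delta` and stops when the density no longer increases. Attractors closer than `merge_eps` are treated as the same attractor. `xi` is the user-defined density threshold. All of these names and parameter values are illustrative.

```python
import math

def density(x, data, sigma):
    # Gaussian kernel density with Euclidean distance (assumption)
    return sum(math.exp(-sum((a - b) ** 2 for a, b in zip(x, p)) / (2 * sigma ** 2))
               for p in data)

def gradient(x, data, sigma):
    # gradient of the density: sum of kernel(x, p) * (p - x) / sigma^2
    g = [0.0] * len(x)
    for p in data:
        w = math.exp(-sum((a - b) ** 2 for a, b in zip(x, p)) / (2 * sigma ** 2))
        for j in range(len(x)):
            g[j] += w * (p[j] - x[j]) / sigma ** 2
    return g

def hill_climb(x, data, sigma, delta=0.05, max_steps=1000):
    # follow the normalized gradient until the density stops increasing
    x = list(x)
    f = density(x, data, sigma)
    for _ in range(max_steps):
        g = gradient(x, data, sigma)
        norm = math.sqrt(sum(v * v for v in g))
        if norm == 0:
            break
        nxt = [a + delta * v / norm for a, v in zip(x, g)]
        f_nxt = density(nxt, data, sigma)
        if f_nxt <= f:
            break
        x, f = nxt, f_nxt
    return x, f  # approximate density attractor and its density

def denclue(data, sigma=1.0, xi=1.5, merge_eps=0.2):
    attractors, labels = [], []
    for p in data:
        a, f = hill_climb(p, data, sigma)
        # objects whose attractors (nearly) coincide get the same cluster label
        for idx, (b, _) in enumerate(attractors):
            if math.dist(a, b) < merge_eps:
                labels.append(idx)
                break
        else:
            attractors.append((a, f))
            labels.append(len(attractors) - 1)
    # clusters whose attractor density is below xi are outliers (label -1)
    return [lab if attractors[lab][1] >= xi else -1 for lab in labels]

data = [(0, 0), (0.3, 0), (0, 0.3), (0.2, 0.2),
        (10, 10), (10.3, 10), (10, 10.3), (10.2, 10.2), (5, -20)]
print(denclue(data))
```

In this toy dataset the two tight groups each climb to their own attractor. The isolated point (5, -20) has an attractor density of about 1, which is below `xi`, so it is reported as an outlier.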


Answers Questions 5 and 6

5a) What does PageRank compute? What are the challenges in using the PageRank algorithm in practice? [3]

It computes the probability of a webpage being accessed. [1]

As there are a huge number of webpages and links, finding an efficient, scalable algorithm is a major challenge. [2]

5b) Give the equation system that PageRank would use for the webpage structure given below. Give a sketch of an approach that determines the page rank of the 4 pages from this equation system! [5]

PR(P1)= (1-d) + d * (PR(P3)/2 + PR(P4)/3)

PR(P2)= (1-d) + d * (PR(P3)/2 + PR(P4)/3 + PR(P1))

PR(P3)= (1-d) + d*PR(P4)/3

PR(P4)=1-d

One solution: initialize all page ranks with 1 [0.5], and then update the PageRank of each page using the above 4 equations until the values converge [1].
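The iterative approach can be sketched in Python as follows. This is a minimal sketch with the following assumptions. The damping factor is d = 0.85, which the slide does not specify. The links are read off from the four equations: P1→P2, P3→P1, P3→P2, P4→P1, P4→P2, P4→P3. The function name `pagerank` and the tolerance are illustrative. Each page's rank is initialized to 1. Every sweep then applies PR(p) = (1-d) + d * Σ PR(q)/outdeg(q) over the pages q that link to p, until no value changes by more than `tol`.

```python
def pagerank(links, d=0.85, tol=1e-10, max_iter=100):
    # links: page -> list of pages it links to
    pages = sorted(set(links) | {q for outs in links.values() for q in outs})
    pr = {p: 1.0 for p in pages}  # initialize every page rank with 1
    for _ in range(max_iter):
        new = {}
        for p in pages:
            # contributions of all pages q that link to p, split over q's out-links
            incoming = sum(pr[q] / len(outs) for q, outs in links.items() if p in outs)
            new[p] = (1 - d) + d * incoming
        change = max(abs(new[p] - pr[p]) for p in pages)
        pr = new
        if change < tol:
            break
    return pr

# link structure implied by the four equations above (P2 has no out-links)
links = {"P1": ["P2"], "P3": ["P1", "P2"], "P4": ["P1", "P2", "P3"]}
print(pagerank(links))
```

Because this particular graph has no cycles, the updates settle after a few sweeps. With d = 0.85 the values are PR(P4) = 0.15, PR(P3) = 0.1925, PR(P1) = 0.2743125 and PR(P2) = 0.507478125.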

6) A Delaunay triangulation for a set P of points in a plane is a triangulation DT(P) such that no point in P is inside the circumcircle of any triangle in DT(P).
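The definition can be checked directly with the standard in-circle determinant test. The following is a minimal Python sketch. It assumes points are given as (x, y) tuples and triangles as triples of point indices. The function names are illustrative, and floating-point robustness for nearly cocircular points is ignored.

```python
def orient(a, b, c):
    # > 0 if a, b, c are in counterclockwise order
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def in_circumcircle(a, b, c, d):
    # in-circle determinant; positive means d is inside when a, b, c are counterclockwise
    rows = []
    for p in (a, b, c):
        dx, dy = p[0] - d[0], p[1] - d[1]
        rows.append((dx, dy, dx * dx + dy * dy))
    (a1, a2, a3), (b1, b2, b3), (c1, c2, c3) = rows
    det = a1 * (b2 * c3 - b3 * c2) - a2 * (b1 * c3 - b3 * c1) + a3 * (b1 * c2 - b2 * c1)
    return det * orient(a, b, c) > 0  # correct the sign for clockwise triangles

def is_delaunay(points, triangles):
    # Delaunay iff no point lies inside the circumcircle of any triangle
    for tri in triangles:
        a, b, c = (points[i] for i in tri)
        for j, d in enumerate(points):
            if j not in tri and in_circumcircle(a, b, c, d):
                return False
    return True

P = [(0, 0), (2, 0), (1, 1), (1, -0.5)]
print(is_delaunay(P, [(0, 1, 2), (0, 1, 3)]))  # False: (1, -0.5) lies inside the circle through the first triangle
print(is_delaunay(P, [(0, 2, 3), (1, 2, 3)]))  # True
```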


6. What is a Delaunay triangulation?

7. SVM

a) The soft margin support vector machine solves the following optimization problem:

What does the second term minimize? Depict all non-zero ξi in the figure below! What is the advantage of the soft margin approach over the linear SVM approach? [5]

b) Referring to the figure above, explain how examples are classified by SVMs! What is the relationship between ξi and example i being classified correctly? [4]


Answer Question 7

a. It minimizes the error, which is measured as the distance to the class's hyperplane for points that are on the wrong side of that hyperplane [1.5]. Depiction [2]; if the distances are drawn to the wrong hyperplane, at most 1 point. The soft margin approach can deal with classification problems in which the examples are not linearly separable [1.5].

b. The middle hyperplane is used to classify the examples [1.5]. If ξi is less than or equal to half of the margin width, example i is classified correctly.

The length of the arrow for point i is the value of ξi; for points without an arrow, ξi = 0.
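The relationship in b can be illustrated with a minimal Python sketch. The slide's optimization problem did not survive extraction, so this assumes the common normalization in which the margin hyperplanes are w·x + b = ±1. Under that normalization the slack is ξi = max(0, 1 - yi(w·xi + b)), the geometric arrow length is ξi/||w||, and half the margin width is 1/||w||. The hyperplane and the examples are hypothetical, and so are the function names.

```python
import math

def decision_value(w, b, x):
    # w . x + b; the middle (decision) hyperplane is where this equals 0
    return sum(wj * xj for wj, xj in zip(w, x)) + b

def classify(w, b, x):
    # examples are classified by the side of the middle hyperplane they fall on
    return 1 if decision_value(w, b, x) >= 0 else -1

def slack(w, b, x, y):
    # 0 if x is on or beyond its own class's margin hyperplane, else the violation
    return max(0.0, 1.0 - y * decision_value(w, b, x))

# hypothetical hyperplane x1 = 0, margin hyperplanes x1 = -1 and x1 = +1
w, b = (1.0, 0.0), 0.0
examples = [((2.0, 0.0), 1), ((0.5, 1.0), 1), ((-0.5, 0.0), 1), ((-3.0, 2.0), -1)]

half_margin = 1.0 / math.hypot(*w)
for x, y in examples:
    arrow = slack(w, b, x, y) / math.hypot(*w)  # geometric length of the arrow
    print(x, y, arrow, arrow <= half_margin, classify(w, b, x) == y)
```

The third example, (-0.5, 0.0), has class +1 but lies on the negative side of the middle hyperplane. Its arrow length of 1.5 exceeds half the margin width, and it is misclassified. The other three examples satisfy the condition and are classified correctly. Points lying exactly on the middle hyperplane are a tie case that this sketch resolves in favor of class +1.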
