NOTE

advertisement

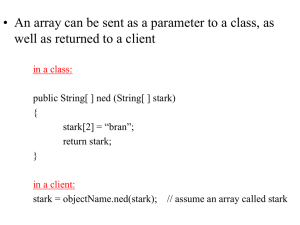

Servers, R and Wild Mice Robert William Davies Feb 5, 2014 Overview • 1 - How to be a good server citizen • 2 – Some useful tricks in R (including ESS) • 3 – Data processing – my wildmice pipeline 1 - How to be a good server citizen • Three basic things – CPU usage • cat /proc/cpuinfo • top • htop – RAM (memory) usage • top • htop – Disk IO and space • iostat • df -h Cat /proc/cpuinfo rwdavies@dense:~$ processor : vendor_id : cpu family : model : model name : stepping : microcode : cpu MHz : cache size : physical id : cat /proc/cpuinfo | head 0 AuthenticAMD 21 2 AMD Opteron(tm) Processor 6344 0 0x600081c 1400.000 2048 KB 0 rwdavies@dense:~$ cat /proc/cpuinfo | grep processor | wc -l 48 Memory can be in RAM or in swap Htop and top Swap is BAD Ideal use – 0 (in this case it is probably residual) RAM – 512GB total 142 in use (rest free) Load average – average over 1, 5, 15 minutes 48 cores rwdavies@dense:~$ iostat -m -x 2 disk use Relatively unused High sequential reading (fast!) Also note state – D = no IO There are also ways to optimize disk use for different IO requirements on a server – ask Winni Get sizes of directories Disk usage Get available disk space How to be a good server citizen Take away • CPU usage – Different servers different philosophies – At minimum, try for load <=number of cores • RAM – High memory jobs can take down a server very easily and will make others mad at you – best to avoid • Disk IO – For IO bound jobs you often get better combined throughput from running one or a few jobs than many in parallel – Also don’t forget to try and avoid clogging up disks • P.s. – A good server uptime is 100% 2 – Some useful tricks in R (including ESS) • R is a commonly used programming language / statistical environment • Pros – (Almost) everyone uses it – Very easy to use • Cons – It’s slow – It can’t do X • But! R can be faster, and it might be able to do X! Here I’ll show a few tricks ESS (Emacs speaks statistics) • Emacs is a general purpose text editor • Lots of programs exist for using R • There exists an extension to emacs called ESS allowing you to use R within emacs • This allows you to analyze data on a server very nicely using a split screen environment and keyboard shortcuts to run your code I have code on the left An R terminal on the right Running a line of code ctrl-c ctr-j Running a paragraph of code ctrl-c ctrl-p Switching windows ctrl-x o Google: ESS cheat sheet http://ess.r-project.org/refcard.pdf C- = ctrl M- = option key R – mclapply • lapply – apply a function to members of a list • mclapply – do it multicore! • Note there exists a spawning cost depending on memory of current R job Not 19X faster due to chromosome size differences Also I ran this on a 48 core server with a load of 40 /dev/shm • (This might be an Ubuntu Linux thing?) • Not really an R thing but can be quite useful for R multicore • /dev/shm uses RAM but operates like disk for many input / output things • Example: You loop on 2,000 elements, each of which generates an object of size 10Mb. You can pass that all back at once to R (very slow) or write to /dev/shm and read the files back in (faster) ff • Using save, load is an easy way to interact with data most of time time. ff allows you to use variables like a pointer to RAM Bonus – you can use mclapply to different entries without collisions! (same entries = collisions) Rcpp Some things R is not very good at – like for loops I write the c++ code as a text vector You can call fancy R things from c++ Simple example of c++ use in R: for a reference genome (say 60,000,000), coded as integer 0 to 3, determine the number of each possible Kmer of size K (ignoring converses for now) I then “compile” it here (takes ~1-3 seconds for simple (<1000) line things) I often just pass in lists, pass out lists (Note that upon making this slide I realized there is an easy way to do this using vectors) table(4^0 * ref[seq(1,refT-K)] + 4^1 * ref[seq(2,refTK+1)] + … ) and adjusting for NAs Complicated example I actually use • Want to do an EM HMM with 2000 subjects and up to 500,000 SNPs with 30 rounds of updating • Using R – pretty fast, fairly memory efficient – Use Rcpp to make c++ forward backward code in R – for iteration from 1 to 30 • mclapply on 2000 subjects – Run using Rcpp code, write output to /dev/shm/ • Aggregate results from /dev/shm to get new parameters – Write output (huge) to ff for easy downstream analysis 2 – Some useful tricks in R (including ESS) – Take away • A lot of people complain about R being slow but it’s really not that slow – Many packages such as Rcpp, ff, multicore, etc, let your code run much faster – Also, vector or matrix based R is pretty much as fast as anything • If 1 month after starting to use R you are still copying and pasting, stop what you’re doing and take a day to learn ESS or something similar – If you don’t use R often you can probably ignore this – (P.s. I copied and pasted for 2 or 3 years before using ESS) 3 – Data processing – my wildmice pipeline • We have data on 69 mice • Primary goals of this study – Recombination • Build rate maps for different subspecies • Find motifs – Population genetics • Relatedness, history, variation • Admixture Caroli N=1 - 40X – WDIS = Wild derived inbred strain Famulus N=1 - 40X - WDIS F WildSpret N=1 - 40X - WDIS W WildDom N=1 - 40X - WDIS W W Migration France M. m. Domesticus N=20 - 10X - Wild C weight Classical 0.5 M. m. musculus M. m. Castaneus Taiwan 0.2 N=1 - 40X - WDIS N=10 - 30X - Wild WildCast 10 s.e. 0.1 N=13- 40X – Lab strains WildMus India 0 0.0 W N=1 - 40X - WDIS N=20 - 10X - Wild 0.3 0.4 6 pops – 20 French, 20 Taiwan, 10 Indian, 17 Strains, 1 Fam, 1 Caroli bwa aln –q 10 Stampy –bamkeepgoodreads Add Read group info Merge into library level BAM using picard MergeSamFiles Picard markDuplicates Merge into sample level BAM Use GATK RealignerTargetCreator on each population Realign using GATK IndelRealigner per BAM Use GATK UnifedGenotyper on each population to create a list of putative variant sites. GATK BaseRecalibrator to generate recalibration tables per mouse GaTK PrintReads to apply recalibration 69 analysis ready BAMS! Example for 1 mus caroli (~2.5 GB genome ~50X coverage) Downloaded 95GB of gzipped .sra (15 files) Turned back into FQs (relatively fast) (30 files) bwa – about 2 days at 40 AMD cores (86 GB output, 30 files) Merged 30 -> 15 files (215 GB) stampy – cluster 3 – about 2-3 days, 1500 jobs (293 GB output, 1500 files) Merge stampy jobs together, turn into BAMs (220 GB 15 files) Merge library BAMs together, then remove duplicates per library, then merge and sort into final BAM (1 output, took about 2 days, 1 AMD) 1BAM, 170 GB Indel realignment – find intervals – 16 Intel cores, fast (30 mins) Apply realignment – 1 intel core – slower 1 BAM, 170 GB BQSR – call putative set of variants – 16 intel cores – (<2 hours) BQSR – generate recalibration tables – 16 intel cores – 10.2 hours (note – used relatively new GATK which allows multi-threading for this) BQSR – output – 1 Intel core – 37.6 hours 1 BAM, 231 GB NOTE: GATK also has scatter-gather for cluster work – probably worthwhile to investigate if you’re working on a project with 10T+ data Wildmice – calling variants • We made two sets of callsets – 3 population specific (Indian, French, Taiwanese), principally for estimating recombination rate • FP susceptible – prioritize low error at the expense of sensitivity – Combined – for pop gen • We used the GATK to call variants and VQSR to filter What is the VQSR? (Variant Quality Score Recalibrator) model PDF 12 12 HaplotypeScore HaplotypeScore 10 10 lod !4 8 !2 6 0 4 2 2 4 8 6 ! filtered ! retained 4 2 0 0 !2 !1 0 1 2 3 4 !2 !1 0 QD 1 2 3 4 QD 10 8 training 6 4 ! neg ! pos HaplotypeScore Take raw callset. Split into known and novel (array, dbSNP, etc) Split into known and novel Fit a 12 Gaussian Mixture Model on QC parameters on known Keep10the novel that’s close to the GMM, remove if far away 12 HaplotypeScore outcome 8 6 4 2 2 0 0 !2 !1 0 QD 1 2 3 4 Ti/Tv -> Expect ~2.15 genome wide Higher in genic regions novelty !2 !1 0 QD 1 2 3 4 ! novel ! known 8 It’s a good idea to benchmark your callsets and decide on the one with the parameters that suit the needs of your project (like sensitivity (finding everything) vs specificity (being right)) Population Training Sensitivity HetsInHomE chrXHetE nSNPs TiTv arrayCon arraySen French Array Filtered 95 0.64 1.97 12,957,830 2.20 99.08 94.02 French Array Filtered 97 0.72 2.28 14,606,149 2.19 99.07 96.01 French Array Filtered 99 1.12 3.62 17,353,264 2.16 99.06 98.09 French Array Not Filt 95 2.06 5.82 18,071,593 2.14 99.07 96.58 French Array Not Filt 97 2.97 8.24 19,369,816 2.10 99.07 98.01 French French French French French Array Not Filt 17 Strains 17 Strains 17 Strains Hard Filters 99 95 97 99 NA 6.11 1.29 2.20 4.19 5.36 15.73 3.89 6.52 11.63 16.37 22,008,978 16,805,717 18,547,713 20,843,679 19,805,592 2.01 2.14 2.11 2.04 2.06 99.06 99.07 99.07 99.06 99.09 99.20 93.49 96.49 98.62 96.96 Sensitivity – You set this – How much of your training set do you want to recover HetsInHomE – Look at homozygous regions in the mouse – how many hets do you see chrXHetE – Look at chromosome X in males – how many hets do you see nSNPs – number of SNPs TiTv – transition transversion ratio – expect ~2.15 for real, 0.5 for FP arrayCon – Concordance with array genotypes arraySen – Sensitivity for polymorphic array sites We chose a dataset for recombination rate estimation with low error rate but still a good number of SNPs Population Training Sensitivity HetsInHomE chrXHetE nSNPs TiTv arrayCon arraySen Taiwan Array Not Filt 95 2.05 11.20 36,344,063 2.12 NA NA Taiwan Array Not Filt 97 2.87 14.67 39,183,932 2.10 NA NA Taiwan Taiwan Taiwan Taiwan Taiwan Array Not Filt 17 Strains 17 Strains 17 Strains Hard Filters 99 95 97 99 NA 6.34 1.83 2.16 3.66 6.11 25.57 10.32 11.20 15.80 19.44 42,864,322 29,748,456 34,112,325 39,549,666 33,692,857 2.05 2.11 2.11 2.08 2.04 NA NA NA NA NA NA NA NA NA NA Indian Array Not Filt 95 1.11 1.80 66,190,390 2.18 NA NA Indian Array Not Filt 97 1.59 2.57 71,134,757 2.16 NA NA Indian Indian Indian Indian Array Not Filt 17 Strains 17 Strains 17 Strains 99 95 97 99 3.70 0.67 1.09 2.63 5.56 1.16 1.63 3.31 78,220,348 57,674,209 65,981,654 75,103,886 2.11 2.18 2.17 2.13 NA NA NA NA NA NA NA NA Indian Hard Filters NA 5.41 72.61 78,487,616 2.10 NA NA All Array Not Filt 95 1.90 8.95 140,827,810 2.04 99.07 96.74 All Array Not Filt 97 2.38 13.99 160,447,255 2.03 99.07 98.20 All Array Not Filt 99 4.52 22.73 184,977,157 1.99 99.06 99.36 Some of the datasets are extremely big Combined datasets allow us to better evaluate differences between populations Notes – VQSR sensitivity not always “calibrated” – Note: Be VERY skeptical of the work of others wrt sensitivity, specificity, that depends on NGS. Different filtering on different datasets can often explain alot French and Taiwanese very inbred, not so for the Indian mice Homozygosity (Blue) for chromosome 19 10 10 6 4 10 0 Taiwan 10 20 30 Position Mbp Homozygosity = red Huge Taiwan and French bottleneck, India OK 20 India 5 Mouse 0 2 15 Mouse 8 20 France Homozygosity (Red) for chromosome 19 40 50 60 30 Position Mbp 40 50 60 Caroli WildMus WildDom Classical Recent Admixture is visible in French and Taiwanese populations France Migration weight WildCast 0.5 Taiwan India 0 WildSpret 10 s.e. 0.0 Famulus 0.1 0.2 0.3 0.4 Admixture / introgression common Our Domesticus hotspots are enriched in an already known Domesticus motif 0.4 0.2 0.3 Broad scale correlation is conserved between subspecies, like in humans vs chimps 0.1 Pearson correlation 0.5 0.6 French hotspots are cold in Taiwan and vice-versa 0 1000 2000 3000 Window size (kb) 4000 5000 Conclusions • 1 – Don’t crash the server • 2 – There are tricks to make R faster • 3 – Sequencing data is big, slow and unwieldy. But it can tell us a lot Acknowledgements • • • • • • Simon Myers – supervisor Jonathan Flint, Richard Mott – collaborators Oliver Venn – Recombination work for wild mice Kiran Garimella – GATK guru Cai Na – Pre-processing pipeline Winni Kretzschmar – ESS, and many other things he does I copy • Amelie Baud, Binnaz Yalcin, Xiangchao Gan and many others for the wild mice