Lecture 11, 8 April 2014

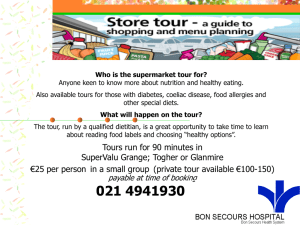

advertisement

The Traveling Salesman Problem

in Theory & Practice

Lecture 11: Branch & Cut & Concorde

8 April 2014

David S. Johnson

dstiflerj@gmail.com

http://davidsjohnson.net

Seeley Mudd 523, Tuesdays and Fridays

Outline

1. Cutting Planes

2. Branch and Bound

Presentation strongly

reliant on [ABCC06]

3. Performance of Concorde

4. Student Presentation by Chun Ye:

“Improving Christofides’ Algorithm for the Metric s-t

Path TSP”

Branch & Cut

Combine Branch-&-Bound with Cutting Planes

•

The term “branch & cut” was coined by Manfred Padberg and Giovanni

Rinaldi in their paper [Padberg & Rinaldi, “Optimization of a 532-city

traveling salesman problem by branch-and-cut,” Operations Res. Lett.

6 (1987), 1-7.

•

First known use of the approach was in Saman Hong’s 1972 PhD thesis

A Linear Programming Approach for the Traveling Salesman Problem at

Johns Hopkins University.

– Although, handicapped by his LP solver, he could only solve 20-city

problems. This was not all that impressive, since Dantzig et al. had already

done 42 cities in 1954, and Held & Karp did 64 in 1971.

– Even worse: Our permutation based B&B approach, using NN + iteration,

solves such instances in 0.01 seconds with just 165 lines of unsophisticated

code.

– Machines were a bit slower in 1971 than our 3.5Ghz processor, but

– A 1968 era PDP 10 was probably about a 0.5Mhz machine (1 μs cycle time,

2.1 μs per addition), so roughly 7000 time slower.

– Our code would thus still have taken only 70 seconds on such a machine.

– Moral : Ideas can be much more important than (initial) performance.

Refresher: The Cutting Plane Approach

Solve the edge-based integer programming formulation

for the TSP, as follows:

1. Start by solving a weak linear programming

relaxation.

2. While the LP solution is not a tour,

a. Identify a valid inequality that holds for all tours

but not the current solution (a “cutting plane” or

“cut” for short) .

b. Add it to the formulation and re-solve.

Refresher: Branch & Bound for the TSP

Assume edge lengths are integers, and we have an algorithm ALB that computes a lower bound

on the TSP length when certain constraints are satisfied, such as a set of edges being “fixed”

(forced to be in the tour, or forced not to be in the tour), and which, for some subproblems,

may produce a tour as well.

•

Start with an initial heuristic-created “champion” tour TUB, an upper bound UB =

length(TUB) on the optimal tour length, and a single “live” subproblem in which no edge is

fixed.

•

While there is a live subproblem, pick one, say subproblem P, and apply algorithm ALB to it.

–

–

•

If LB > UB-1, delete subproblem P and all its ancestors that no longer have live children.

No improved tour is possible in this case (since tour length is an integer).

Otherwise, we have LB ≤ UB-1.

1.

Pick an edge e that is unfixed in P and create two new subproblems as its children, one

with e forced to be in the tour, and one with e forced not to be in the tour.

2.

If algorithm ALB produced a tour T, and length(T) < UB

1.

Set UB = length(T) and TUB = T.

2.

Delete all subproblems with current LB > UB-1, as well as their children and

their ancestors that no longer have any live children.

Halt. Our current champion is an optimal tour.

Some Key Implementation Issues for

Branch & Cut

Coverage by [ABCC06]

•

How do we find the initial tour (upper bound)?

•

How do we find violated inequalities (cuts)?

•

What are the best inequalities to add?

Chapter 15, 64 pages

Chapters 5-11, 216 pages

–

Based on speed of generating them

–

Based on effectiveness in improving lower bounds

Split when we (1) Can’t find any

more cuts, or (2) reach point of

1 page, somewhere: diminishing returns.

•

How do we decide when to split a case?

•

How do we choose the variable on which to split a case?

•

How do we pick the next subcase to work on? Chapter 14, 1

Chapter 14, 14 pages

Subproblem with

smallest lower bound.

paragraph:

--------------------------------------------------------------------•

How do we manage the inequalities?

–

Solving the LP may take too long if there are too many inequalities

–

Some inequalities may lose their effectiveness in later subcases

•

How do we cope with repeatedly solving LPs with millions of

variables?

•

What LP code do we use and how do we apply it?

Chapter 12, 28 pages

Chapter 13, 38 pages

Finding Cuts: The Template Approach

Look for cuts (preferably facet-inducing) with structures from a

(prioritized) predefined list.

•

•

•

•

•

Subtour Cuts

Combs

Clique-Tree Inequalities

Path Inequalities

…

For each cut class, can have an ordered sequence of stronger and stronger

(possibly slower and slower) cut-finding heuristics, up to exact algorithms,

should they exist.

(These heuristics typically assume that our current LP solution satisfies all

the degree-2 constraints.)

Heuristics for finding violated

subtour constraints

Connected Component Test

•

•

•

•

Construct a graph G, where e is an edge if variable xe > 0.

Compute the connected components of G (running time linear in the number

of e with xe > 0).

If the graph is not connected, we get a subtour cut for each connected

component.

Solve the resulting LP, and repeat the above if the resulting graph is still not

connected.

The cuts found in this way already get us very close to the Held-Karp bound.

For random Euclidean instances with coordinates in [0,106], ABCC06 got

• Within 0.409% of the HK bound for an instance with N = 1,000, and

• Within 0.394% of the HK bound for an instance with N = 100,000.

But note that the connected graph may only be connected because of edges with

very low values for xe.

To deal with this, one can use a “parametric connectivity” test.

Heuristics for finding violated

subtour constraints

Parametric Connectivity Test

•

•

Start with a graph G with no edges, and with every city c being a connected

component of size 1 with weight(c) = 0.

For all edges e = {u,v} of our TSP instance, in non-increasing order by the value of xe.

– Find the the connected components Su and Sv containing u and v (using the “unionfind” data structure).

– If Su = Sv, increase weight(Su) by xe.

– Otherwise,

• Set Su = Su U Sv, and increase weight(Su) by weight(Sv) + xe.

• If now δx(Su) = 2|Su| - 2weight(Su) < 2, add the corresponding subtour cut and continue.

– If there are now just two connected components, quit the loop (which took time

O(mlogN), where m is the number of e with xe > 0).

• Solve the resulting LP.

Repeat the above until the process yields no new cuts.

1,000 Cities

100,000 Cities

Connectivity

- 0.409%

- 0.394%

+ Parametric Connectivity

- 0.005%

- 0.029%

Heuristics for finding violated

subtour constraints

Interval Test

•

Let (c0, c1, …, cN-1) be the current champion tour in order. Note that every set

consisting of a subinterval (ci,ci+1,…,ck) of this order induces a subtour

constraint with S = {ci,ci+1,…,ck}.

•

For each i, 0 ≤ i < N-1, consider the set {ci,…ct} for the t that minimizes δx({ci,…ct}).

If this minimum is less than 2, we have a subtour cut, for a total of as many as N-2

cuts. Add them all.

1,000 Cities

100,000 Cities

Connectivity

- 0.409%

- 0.394%

+ Parametric Connectivity

- 0.005%

- 0.029%

= HK

- 0.0008%

+ Interval Test

•

With the right algorithms and data structures, this can be done in O(mlogN) time,

where m is the number of edges e with xe > 0.

General Speedup Trick: Safe Shrinking

Exploiting the “shrinking” of a set of cities S, given a current LP

solution x:

•

Replace S by a single city σ and x by a new function x’, where for all

distinct c,c’ in C-S, x’(u,v) = x(u,v), and for all c in C-S,

x’(c,σ) = ∑v∈Sx(c,v).

•

Find cut in the shrunken graph, then unshrink it back to a cut in the

original graph.

•

A set S is “safe” for shrinking if, whenever there is a violated TSP cut

for x, then there is also one for x’.

•

It is “template safe” for a given type of cut (subtour, comb, etc.) if,

whenever there is a violated cut in the given template for x, then there

is also one for x’.

•

A natural candidate: Edges e with xe = 1.

•

Unfortunately, not always safe…

Unsafe Edges

1.0

0.5

0.5

0.5

1.0

1.0

0.5

0.5

Violated Comb

0.5

1.0

0.5

0.5

0.5

1.0

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

Convex Combination of Two Tours

Edge Safety Theorem

[Padberg & Rinaldi, “Facet identification for the symmetric traveling

salesman problem,” Math. Programming 47 (1990), 219-257]

Theorem: If x(u,v) = 1 and there exists a vertex w with

x(w,u) + x(w,v) = 1,

in the solution to the current LP, then it is safe to shrink edge e = {u,v}.

Proof: Suppose that x’ has no violated TSP cuts. Then it must be a convex

combination of tours through the shrunken graph.

Lemma: If x is a convex combination of tours in a shrunken (or unshrunken) graph,

and we have x(e) = 1, then every tour in the convex combination contains edge e.

Proof of Lemma: The tours containing e must have coefficients summing to 1 in the

combination, meaning no other tours can have coefficients greater than 0, and hence

none are part of the combination. QED

Let σ be the result of the merging of u and v. By our hypothesis, we have x’(w,σ) = 1,

so every tour T in our convex combination of optimal tours uses edge {w,σ}. For each

such T, let αT be its multiplier in the convex combination.

Edge Safety Theorem

Proof Continued

If we restrict attention to the edges e of these tours that do not involve σ, and hence have xe =

x’e, then we have that the same convex combination precisely sums to xe for all such e.

Consider a particular tour T in the combination, and let z1 be the city (other than w) that is

adjacent to σ in the tour, as in the figure below (where at least one of the two edges {z1,u} and

{z1,v} must be present in the original graph).

z1

Original

Graph

αT

…

u

v

β

1-β

w

zk

z1

αT

…

σ

zk

Shrunken

Graph

w

Because of the tour multiplier, we must have x’{z1,σ} ≥ αT and x’{z1,σ} ≥ αT. Thus in the original

graph, the min cut between w and z1 in the graph induced by those two cities and {u,v} must be at

least αT and there must be a flow of size αT between w and z1.

This flow must be partitioned among the four paths (w,u,z1), (w,v,z1), (w,u,v,z1), and (w,v,u,z1). So

the tour T in the shrunken graph can be replaced by 4 (or fewer) tours in the original graph, with

multipliers summing to αT.

This holds for all tours in the convex combination for the shrunken graph, perhaps involving

other tour neighbors zi of σ. We thus get a convex combination of tours. I claim that the union

of all these tours in the original graph is a convex combination of optimal tours with edge

weights matching those under x.

Edge Safety Theorem

Proof Continued

By the previous argument, we have a convex combination of tours in the original

graph whose value ye for any edge e satisfies ye ≤ xe.

I claim that in fact we must have ye = xe for edges e. For note that, since x is

the solution to a valid LP, we have

∑exed(e)

≤ OPT.

So if for any e we have ye < xe, we would have a convex combination y of tours

with

∑eyed(e)

<

∑exed(e)

≤ OPT,

and so at least one tour of length less than OPT, a contradiction.

Thus x must be a convex combination of tours, as desired. QED

Note that this theorem can be applied sequentially, leading to the merging of

many edges, and in particular long paths. This can greatly reduce the size of the

graph and consequently speed up our cut finding heuristics and algorithms.

Untangling Convex Combinations

The previous discussion reminds us of the problem of degeneracy, where the final LP

(no more TSP cuts possible) still contains fractional values, and so represents a

convex combination of tours rather than a single tour.

This is not a problem if our champion tour already has length equal to the LP solution

value, but what if not?

[ABCC06] doesn’t seem to address this issue, so it probably is a rare occurrence.

The likely approach is to use heuristics, and exploit the fact that the graph is

probably now very sparse and can be made much smaller.

•

Only e such that xe > 0 can be in a tour.

•

All edges e with xe = 1 must be in an optimal tour, and so maximal paths of such

edges can be collapsed into a single forced edge representing that path.

Such an approach can also be used even before the we have an unimprovable LP, as a

way to potentially find new champion tours.

Managing and Solving the LP’s:

Core Sets

Problem: Our LP’s potentially involve billions of variables.

Solution: “Core sets”

•

•

•

•

We observe that only a relatively small number of edges typically get

non-zero values when solving our LP’s.

If we knew which ones they would be, we could simply eliminate them

from the formulation by fixing their values at 0.

Since we do not know them in advance, Concorde uses a simple heuristic

to define a “core set” of edges that are allowed non-zero values.

Standard possibilities:

–

–

–

•

•

The edges in some collection of good tours.

The edges to the k-nearest neighbors for each city for some k.

A combination of the two.

Concorde uses the union of the edges occurring in the tours resulting

from 10 runs of Chained Lin-Kernighan for its initial core set.

Thereafter, it will delete an edge e from the core set if for some

constant L1 consecutive LP solves its value remains below a tolerance e1.

Typical values are L1 = 200 and e1 = 0.0001.

Managing and Solving the LP’s:

Adding edges to the Core Set

•

In solving the LPs, Concorde computes both a primal and a dual solution.

•

Consequently, it has access to reduced costs cj - yTAj for all the edges, including those not

in the core.

•

Edges with negative reduced costs are candidates for addition to the core (just as non-basic

core variables with negative reduced costs are candidates for the entering variable in a step

of the primal simplex algorithm).

•

These are added to a queue, and every so often, the 100 with the most negative reduced

costs are added to the LP. It is then re-solved and the new reduced costs for the remaining

edges in the queue are computed. Any edge with reduced cost greater than some e2 < 0 is

removed from the queue. Concorde uses e2 = - 0.0001.

•

One difficulty: For very large instances, computing the reduced costs for all non-core edges

can be very expensive because there are so many of them ( ~500 billion for N = 106).

Concorde’s solutions:

–

Heuristics for approximating the reduced costs quickly (followed by exact pricing of the good

candidates).

–

Only price a fraction of the non-core edges each time, cycling through all of them over many

iterations.

–

Alternate this with cycling through just those non-core edges that are within the 50 nearest

neighbors of some city.

–

For geometric instances, the list of edges to consider can be substantially pruned based on the city

coordinates and values from the current dual solution.

Managing Cuts

•

Start with just the degree-2 constraints.

–

Note that we do not need an integer solution (as I described last time, using b-matching).

–

A fractional solution, with edge values in {0,½,1} will suffice for getting us started.

–

This can be accomplished without solving the LP, via a primal-dual algorithm.

•

Subsequently, when a cut is found by a separation routine, it is appended to

the end of a queue of cuts waiting to be added to the core LP. If the queue

is small, we may call these routines many times, thus adding many cuts to

the queue.

•

When the cut-finding process stops, we repeated the following process

until the queue is empty or we have added 250 cuts to the core LP:

–

Take the first cut from the queue

–

Check to confirm that it is still violated by the current x by at least some small tolerance (say

0.002). This is needed since the cut may have been found for some earlier value of x.

–

•

If the cut is still valid in this sense, add it to the core LP. Otherwise, discard it.

Cuts are deleted if the dual variables for them are less than some fixed

tolerance (say 0.001) for 10 consecutive LP solves.

Storing Cuts

•

Problem: It is not necessarily efficient to store cuts as an actual inequality

on variables (or, worse yet, a vector of length |C|), since this can be a very

inefficient use of space.

•

81 Gbytes for TSPLIB instance pla7397 in vector form.

•

This can be reduced to 96 Mbytes by more efficient representations.

–

Lists of sets

–

Variable-length codes to point to sets (based on their frequency of occurrence)

–

Intervals represented by their endpoints, or better yet, their first endpoint and the interval

length

–

Etc.

•

We need to do some computation to decode the representations (and their

effect on the core set of variables) but this is a worthwhile tradeoff.

•

Also, it pays to choose, among the many equivalent representations, the one

that leads to the fewest non-zeros in the core-set LP formulation.

–

For instance, one can choose to represent a subtour inequality by either S or C-S, and the

smaller of those two sets is likely to be better in this regard.

Solving the LP’s

•

Use the Dual Steepest Edge variant of the Simplex algorithm.

•

Used the CPLEX package when [ABCC06] was being written.

•

Current Concorde package includes its own LP solving package (QSopt),

tailored for solving the kinds of LP’s encountered in Concorde.

•

I am omitting loads of details.

•

(as I have in all the other Concorde-related issues I have discussed).

•

One detail that should be discussed: Round-off error and valid bounds.

Coping with Round-Off Error

Commercial Linear Programming codes use floating point arithmetic.

Their arithmetic routines presumably meet the IEEE standard, but this still means that

one can only report results to within some fixed tolerance.

Given this, our LP solutions only generate imprecise lower bounds.

And may be in error since the solution may violate some of the LP cut constraints if it is

very close to the bound of the cut, and merely setting tolerances may not suffice.

One frequently used approach: Use exact-arithmetic LP codes (or exact-arithmetic hand

computations in the case of [DFJ54]). Unfortunately, this is very slow.

Concorde’s approach:

•

Start with the solution to the dual (which it already computes).

•

In the exact arithmetic world, this equals the primal opt, and any feasible solution to

the dual is a lower bound on the primal opt.

•

Find a fixed-precision feasible solution to the dual by exploiting the fact that all the

dual constraints have unique slack variables. (In Concorde’s case that precision is 32

bits each to the right and left of the decimal point).

•

This remains quite close to the floating point optimal.

Branching

Actually there are two types of branching in Concorde.

1. Edge Branching (xe = 1 or xe = 0).

2. Subtour Branching [Clochard and Naddef, “Using path inequalities in a branch and cut

code for the symmetric traveling salesman problem,” Third IPCO Conference (1993),

291-311].

For some subset S of cities that does not yield a violated subtour inequality,

break into cases depending on whether δx(S) ≤ 2 or δx(S) ≥ 4.

This covers all possibilities, since in a tour we cannot have an odd value for

δx(S).

Before we split the root subproblem, we first fix as many of the edge values at

0 or 1 as possible, using the reduced costs λe of the edges in the final LP and the

“integrality gap” Δ between the length Length(T*) of our current best tour and

the LP solution:

xe = 1 if λe < -(Δ-1) and xe = 0 if λe > (Δ-1).

(In each case, fixing the variable to the other choice from {0,1} would cause the

LB to grow larger than Length(T*) – 1, allowing us to prune the subproblem.)

Choosing the Split

Edge Candidates:

For each fractional variable xe, estimate the change (z0 or z1) in LP objective

value that would result from setting xe to either 0 or 1 in our current LP and

making a single dual Simplex pivot.

Rank the choices by the formula

p(z0, z1, γ) = (γ∙minimum(z0, z1) + maximum(z0, z1))/(γ+1),

with γ = 10, saving the top 5 as candidates.

(It is more important to improve the smaller bound than the larger.)

Subtour Candidates:

For each of the 3500 sets S involved in our cuts that have δx(S) closest to

3, rank each choice in an analogous way and save the top 25 candidates.

Get another 25 candidates from a separate, more combinatorial approach.

Ranking the Candidates: Strong Branching

Each candidate consists of a pair of constraint sets which produce a disjoint partition

of the possibilities, either

•

(xe = 1, xe = 0), or

•

(δx(S) ≤ 2, δx(S) ≥ 4)

Denote these pairs by (P01, P11), (P02, P12), …, (P0k, P1k).

For each Pij, add the corresponding constraints to the LP, and starting with the

current basic solution, perform a fixed (limited) number of dual-steepest-edge

Simplex pivots and let zij be the resulting dual objective value. (If the problem is

infeasible, set zij = length(T*)).

Pick the candidate that maximizes p(z0j, z1j, 100).

For the most difficult instances, we can take this one step further (“tentative

branching”), whose added cost may be justified by the need for fewer subproblems.

•

Take the top h candidates according to the above ranking.

•

For each use the full cutting plane approach to get a lower bound żij.

•

Then rank these by p(ż0j, ż1j, 10) and take the best.

Branching Performance

Start with a root LP with value 0.00009%

below the optimal tour length. (Tour found

by Held Kelsgaun using a variant of his

“LKH” algorithm. More to come about how

the root LP was built).

Computations performed on a network of

250 2.6 Ghz AMD Opteron processors.

The CPU times are the sum of the times

used on the individual processors.

pla85900

# of Subproblems

CPU Time

>> 3,000

>> 4 years

Tentative Branching, h = 4

1,149

1.8 years

Tentative Branching, h = 32

243

0.8 years

Strong Branching

More on pla85900’s Solution

More on pla85900’s Solution

Not solved with vanilla Concorde. Instead:

• Perform an initial run (without branching) to drive up the root solution.

Result: bound 0.0802% below optimal, 22.6 CPU days.

•

Perform a run with an upper bound of 142,320,000 (below optimal) and

starting with cuts from previous run.

Result: bound 0.0456% below optimal, 144.0 CPU days.

•

Perform a run with an upper bound of 142,340,000 (still below opt), and

starting with the cuts from previous runs.

Result: bound 0.0395% below optimal, 227.3 CPU days.

•

Perform a run with the true upper bound, starting with the cuts from all

the previous runs and running until there were 1000 active search nodes.

Result: bound 0.0324% below optimal, 492.8 CPU days.

•

Repeat previous step, but including the new cuts it generated.

Result: bound 0.0304% below optimal, 740.0 CPU days.

•

Over the course of a year, repeatedly apply all of Concorde’s separation

routines to further drive up the root bound.

Result: bound 0.00087% below optimal, lots of CPU days…

More on pla85900’s Solution

Cutting Planes in the pla85900 root LP

Type

Number

Subtours

1,030

Combs

2,787

Paths and Stars

4,048

Bipartition

41

Domino Parity

164

Others (Local Cuts)

809

With this last root LP, Concorde finally solved

pla85900 in just 2,719.5 CPU days

Adding the additional cuts found to the root LP led

to the even better root gap of 0.00009% and the

results quoted 3 slides back.

Vanilla Concorde

Random Euclidean Instances

N

Samples

ABCC06

2d

Mean

ABCC06

2d

Samples

iMac14

2d

Mean

iMac14

2d

Samples

iMac14

3d

Mean

iMac14

3d

Samples

iMac14

7d

Mean

iMac14

7d

100

10,000

0.7

200

10,000

3.6

300

10,000

10.6

400

10,000

25.9

500

10,000

50.2

1,000

20.9

1,000

6.6

1,000

2.6

600

10,000

92.0

1,000

35.2

1,000

9.8

1,000

4.1

700

10,000

154.5

1,000

52.3

1,000

13.2

1,000

5.6

800

10,000

250.4

1,000

81.7

1,000

17.4

1,000

6.6

900

10,000

384.7

1,000

116.0

1,000

21.0

1,000

8.1

1000

10,000

601.6

277

158.6

1,000

29.4

1,000

9.1

1500

1,000

3,290.9

1,000

61.3

1,000

10.6

2000

1,000

14,065.6

1,000

27.1

2500

1,000

53,737.9

100

45.7

7d requires a version of Concorde modified for higher fixed-precision.

(Modified by Jeffrey Stoltz with hints from Bill Cook.)

Next Time

More properties of Random Euclidean Instances

(as revealed by Concorde)