Item - PARCC

PARCC Core Leadership Group

Item Review Meeting

January 2013


Note: all items included in this presentation are for illustrative training purposes only.

They are not representative of PARCC assessment items.


Overview of Training

1. Charge as Committee Member

2. Purpose of PARCC Summative Assessments

3. PARCC Summative Assessments (PBA, EOY)

4. Item Review Process

5. Item Review Criteria

6. Apply Criteria 1-9 to Item Review

Item Review Committee Charge


• Your role is to provide expert CONTENT review of items and tasks.

• You will use information provided in this item review training and will apply item review criteria to review the items.

• You should focus exclusively on PARCC’s item review criteria during your review.

Item Review Committee


Please also note the following:

• Passage review committees have already approved the passages according to PARCC content and bias/sensitivity guidelines.

• Bias/sensitivity item review committees will apply bias/sensitivity guidelines to all items at a different time.

• Other concerns identified will be placed in “the parking lot” for consideration by PARCC leadership.

Purpose of PARCC Summative Assessments

• Determine whether students are college- and career-ready or on track

• Assess the full range of the Common Core State Standards (CCSS) for reading, writing, and language

• Measure the full range of student performance, including the performance of high- and low-performing students

• Provide data for accountability, including measures of growth

• Incorporate innovative approaches throughout the system

Performance-Based Assessment


Composed of Three Tasks

• Literary Analysis Task (LAT)

• Research Simulation Task (RST)

• Narrative Task (NT)

Eligible Item Types for Performance-Based Assessment (PBA)

• Evidence-Based Selected Response (EBSR)

• Technology-Enhanced Constructed Response (TECR)

• Prose-Constructed Response (PCR)

Performance-Based Assessment

A. Literary Analysis Task: This task plays an important role in honing students’ ability to read complex text closely, a skill that research reveals as the most significant factor differentiating college-ready from non-college-ready readers. It asks students to carefully consider literature worthy of close study and compose an analytic essay.

B. Research Simulation Task: The Research Simulation Task is an assessment component worthy of student preparation because it asks students to exercise the career- and college-readiness skills of observation, deduction, and proper use and evaluation of evidence across text types.

In this task, students will analyze an informational topic presented through several articles or multimedia stimuli, the first text being an anchor text that introduces the topic.

Students will engage with the texts by answering a series of questions and synthesizing information from multiple sources in order to write two analytic essays.

C. Narrative Writing Task: The Narrative Task broadens the way in which students may use this type of writing. Narrative writing can be used to convey experiences or events, real or imaginary. In this task, students may be asked, for example, to write a story, detail a scientific process, write a historical account of important figures, or describe an account of events, scenes, or objects.

Pg 13 Item Guidelines


End-of-Year Assessment

Focused on supporting Reading Comprehension Claims

• Item development must be informed by CCSS and Evidence Statements

Eligible Item Types for End-of-Year (EOY)

• Evidence-Based Selected Response (EBSR)

• Technology-Enhanced Constructed Response (TECR)

Evidence-Based Selected Response (EBSR) Items:

EBSR items:

Are designed to measure Reading Standard 1 and at least one other Reading Standard.

May have one part.

• In grade 3, a one-part EBSR is allowable because Reading Standard 1 evidence 1 is distinctly different from Reading Standard 1 in grades 4-11.

• In grades 4-11, a one-part EBSR is allowable when there are multiple correct responses that elicit multiple evidences to support a generalization, conclusion, or inference.

Pgs 27-29 Item Guidelines

Evidence-Based Selected Response (EBSR) Items:

May have multiple parts.

The Item Guidelines document describes how Part A and Part B may function in a multiple-part EBSR.

Among things to consider:

• Each part must consist of a selected-response question with a minimum of four choices.

• In the first part, students select a correct answer among a minimum of four choices.

(continued on next slide)

Pgs 27-29 Item Guidelines

Evidence-Based Selected Response (EBSR) Items:

In additional parts, one format requires students to select among a minimum of four choices to demonstrate the ability to locate and/or connect details/evidence from the text that explains, justifies, or applies the answer chosen for the first part of the item.

There is no requirement for one-to-one alignment between Part A options and Part B options.

EBSR items can meet evidence statements that specify “explain,” “provide an analysis,” etc., by having students select options that constitute an explanation or analysis.

Evidence-Based Selected Response (EBSR) Items: Variable Elements

EBSR items may possess the following characteristics:

For items with one correct response, four answer choices are requisite. For items with two correct responses, six answer choices are requisite. For items with three correct responses (allowed only in grades 6-11), seven answer choices are requisite.
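The answer-choice rule above can be expressed as a simple lookup. The following is only a sketch for reviewers who want to check item metadata in bulk; the function name and parameters are hypothetical, and it assumes the stated counts are exact requirements rather than minimums.

```python
# Required number of answer choices, keyed by number of correct responses,
# taken from the rule stated above.
REQUIRED_CHOICES = {1: 4, 2: 6, 3: 7}


def choice_count_is_valid(grade: int, num_correct: int, num_choices: int) -> bool:
    """Return True if the answer-choice count matches the stated rule.

    Assumption: the counts are treated as exact. If the guidelines intend
    minimums, change `==` to `>=` in the final comparison.
    """
    if num_correct not in REQUIRED_CHOICES:
        return False
    # Three correct responses are allowed only in grades 6-11.
    if num_correct == 3 and not 6 <= grade <= 11:
        return False
    return num_choices == REQUIRED_CHOICES[num_correct]
```

For example, a grade 5 item with three correct responses fails the check regardless of how many choices it offers, because three correct responses are limited to grades 6-11.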

Pgs 27-29 Item Guidelines

Evidence-Based Selected Response (EBSR) Items:

For items with one correct response in Part A and one correct response in Part B, there is no partial credit. One-part items do not offer partial credit.

For those items with one or more correct responses in Part A and more than one correct response in Part B, partial credit should be available.

To receive partial credit, students must answer Part A correctly AND select at least one correct response in Part B.

This will earn the student 1 point. For these items, to receive full credit, students must answer both Part A and Part B correctly.
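The partial-credit rule above can be sketched as a small scoring function. This is an illustration, not PARCC's scoring implementation: the function name and the set-based representation of answers are hypothetical, and the full-credit value of 2 points is an assumption (the slides state 2 points for TECR items and 1 point for partial credit, but do not state the EBSR full-credit value directly). It covers two-part items only.

```python
def score_ebsr(part_a_key: set, part_b_key: set,
               part_a_answer: set, part_b_answer: set,
               full_credit: int = 2) -> int:
    """Score a two-part EBSR item following the rule described above.

    Each argument is a set of option labels, e.g. {"C"} or {"A", "E"}.
    full_credit=2 is an assumed value, not stated for EBSR in the source.
    """
    part_a_correct = part_a_answer == part_a_key
    part_b_correct = part_b_answer == part_b_key

    # Full credit requires both parts to be answered correctly.
    if part_a_correct and part_b_correct:
        return full_credit

    # Partial credit is available only when Part B has more than one
    # correct response, and it requires a correct Part A plus at least
    # one correct selection in Part B.
    partial_available = len(part_b_key) > 1
    if partial_available and part_a_correct and part_b_answer & part_b_key:
        return 1

    return 0
```

Under this reading, a student who answers Part A incorrectly earns no credit, even with a fully correct Part B.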


When an item allows for more than one correct choice, each correct choice must be equally defensible.

Pgs 27-29 Item Guidelines

Evidence-Based Selected Response (EBSR) Items:

EBSR items can be written with an inference to be drawn in Part A and a requirement for students to find another example of how that inference applies in a different part of the text for Part B.

Example 1:

Part A: What character trait does Snow White reveal when Snow White does X?

Part B: Which paragraph from the passage best shows additional evidence of this character trait?

Example 2:

Part A: What theme is revealed in the passage?

Part B: Which paragraph from the passage best shows this same theme?

Example 3:

Part A: What is the point of view/perspective in this passage?

Part B: Which paragraph from the passage best shows this same point of view/perspective (or the opposite point of view/perspective)?

See the Item Guidelines document, pages 27-29, for other types of EBSR items.

Additional Considerations for Distracters

The primary purpose of a distracter is to provide evidence that a student is not able to meet the standard(s) assessed due to student misconceptions.

Distracters must be plausible responses to item stems.

The written style of all distracters in an item should be similar to that of the correct response(s), but need not be “perfectly parallel” in length, grammatical function, or in use of punctuation.

The content of the distracters, rather than the parallelism of style, is the primary focus for distracter choices.

Answer responses are not ordered alphabetically by first word or from short to long, etc. They may be ordered in any sequence as is appropriate to the content measured by the specific item.

Additional Considerations for Distracters

If answer responses are quotations or paraphrased textual evidence, place the answers in the order they appear in the passage.

Particular care must be taken for Part B in EBSR items (where students are asked to select evidence from the text) such that achieving parallelism in distracters does not overly influence distracter wording.

In Part B, all of the answer choices must be the same type of citation of evidence (e.g., all quotes or all paraphrases).

All answer choices for Part B, including distracters, must be accurate and relevant and must come from the passage, whether as exact citations or paraphrases. In Part A, distracters may be written as plausible misreadings of the text.

Sample Evidence-Based Selected Response (EBSR)

Part A: What does the word “regal” mean as it is used in the passage?

A. generous

B. threatening

C. kingly*

D. uninterested

Part B: Which of the phrases from the passage best helps the reader understand the meaning of “regal”?

A. “wagging their tails as they awoke”

B. “the wolves, who were shy”

C. “their sounds and movements expressed goodwill”

D. “with his head high and his chest out”*

Technology-Enhanced Constructed Response (TECR) Items:

TECR items must:

Allow for machine scoring

Award the student two (2) points for full credit

Be delivered and responded to using technology, allowing for a variety of technology-enhanced student responses, including but not limited to the following:

• drag and drop

• highlighting the text

• annotating text

• other negotiated methods

Pgs 29-31 Item Guidelines

Technology-Enhanced Constructed Response (TECR):

TECR items may possess the following characteristics:

When a TECR uses an EBSR structure (e.g., with Part A [measuring one or more of standards 2-9] and Part B [measuring standard 1]), use the same rules as applied for EBSR.

Where applicable, apply the EBSR distractor guidelines to TECR items.

For TECR items, partial credit may be offered when an item allows for partial comprehension of the texts to be demonstrated.

Pgs 29-31 Item Guidelines

Sample Technology-Enhanced Constructed Response (TECR) Item

Part A: Below are three claims that one could make based on the article “Earhart’s Final Resting Place Believed Found.”

Claims

• Earhart and Noonan lived as castaways on Nikumaroro Island.

• Earhart and Noonan’s plane crashed into the Pacific Ocean.

• People don’t really know where Earhart and Noonan died.

Highlight the claim that is supported by the most relevant and sufficient evidence within “Earhart’s Final Resting Place Believed Found.”

Part B: Click on two facts within the article that best provide evidence to support the claim selected in Part A.


Prose Constructed-Response (PCR):

Required Elements

PCR items must:

• Visibly align questions/tasks with specific Standards; that is, the actual language of the Standards should be used in the prompts/questions

• Elicit evidence(s) supporting the Sub Claim for Written Expression and the Sub Claim for Conventions and Knowledge of Language

Pgs 32-33 Item Guidelines

Prose Constructed-Response (PCR):

Required Elements

PCR items must:

• Establish a clear purpose for writing, modeling the language found in the Writing Standards

• Specify the audience to be addressed

• State clearly the topic, issue, or idea to be addressed

• Reference the source text (or texts) serving as the stimulus (or stimuli) for a student response

• Specify the desired form or genre of the student response


Note: Standardized wording for PCRs is under discussion.

Pgs 32-33 Item Guidelines

Prose Constructed-Response (PCR):

Required Elements

• Elicit evidence(s) aligned with at least one Reading Standard (even when not scored for a sub claim associated with the Major Claim for Reading Complex Text)

• Allow students to earn partial credit

Pgs 32-33 Item Guidelines

Prose Constructed-Response (PCR):

Required Elements


In addition, prose constructed-response items must provide all students the opportunity to demonstrate a full range of sophistication and nuance in their responses.

• Prose constructed-response items must be designed to elicit meaningful responses on aspects of a text that may be discussed tangentially or in great detail and elaboration, thereby enabling measurement of the full range of student performance.

Pgs 32-33 Item Guidelines

Prose Constructed-Response (PCR):

Narrative Description

Narrative writing takes two distinct forms in the PARCC assessment system:

1) Narrative Story

2) Narrative Description*


Prose Constructed-Response (PCR):

Narrative Story

A Narrative Story is about imagined situations and characters. It uses time as its deep structure.

Prose Constructed-Response (PCR):

Narrative Story: Scenarios

Scenarios should be associated with the text and should culminate by stating the objective of the final prose constructed response for the task.

Scenarios for Narrative Writing (Narrative Story Task Model):

• Today you will read [fill in the text type/title]. As you read, pay close attention to [fill in general focus of PCRs] as you answer the questions to prepare to write a narrative story.

Sample Prose-Constructed Response (PCR) Item

Use what you have learned from reading “Daedalus and Icarus” by Ovid and “To a Friend Whose Work Has Come to Triumph” by Anne Sexton to write an essay that analyzes how Icarus’s experience of flying is portrayed differently in the two texts.

As a starting point, you may want to consider what is emphasized, absent, or different in the two texts, but feel free to develop your own focus for analysis.

Develop your essay by providing textual evidence from both texts. Be sure to follow the conventions of standard English.

Additional Considerations for Vocabulary Items

Several styles for presenting vocabulary words/phrases are viable. In considering which means is best for presenting the vocabulary words/phrases, item writers should use the means that most efficiently directs students to the word/phrase in the text, while allowing students to see the full relevant context for determining the meaning of the word/phrase.

The part of a vocabulary item that asks for word or phrase meaning should not use qualifiers (e.g., best, most likely).

The part of a vocabulary item that asks for support may use qualifiers if needed:

Which of the phrases from the excerpt best helps the reader understand the meaning of “XXX”?

Which sentence from the excerpt best supports the response in Part A?

Distractors should always be syntactically plausible. This is especially important in vocabulary items.

Additional Considerations for Vocabulary Items

When writing vocabulary items use the following formats:

Part A: “What is the meaning of the word XXX as it is used in [paragraph 13, or line, or text]?”

Part B: “Which of the phrases from the excerpt helps the reader understand the meaning of XXX?” Use this wording unless referencing the excerpt in this way creates a problem for the item. In that case, the item may use a text box approach in which the options for Part B come only from the excerpted text.

Use of Technology Enhancement:

To measure vocabulary using drag-and-drop technology, use the following format:

“Drag the sentences from the passage into your notes that help create the meaning of the word “XXX” as it is used in the passage.”

“Drag the words/phrases from the passage into your notes that help create the tone of the passage.”

Note: Give a selection of sentences or words/phrases from which to choose where not all are correct.


Item Review Process


Step 1 - Each reviewer on your team will read the passage/text and the items independently.

Step 2 - For each item, check to make sure the item meets the evidence statements/standards noted in the metadata for the item. If the item is aligned, continue to review the item. If the item is not aligned, but can be edited to align with the evidence statements/standards, note in comments how to align the item. If the item cannot be aligned, reject the item and move on to review the next item.

Step 3 - Review the item in terms of other criteria on the criteria evaluation sheet. Make comments to reflect needs for revision/strengths noted.


Item Review Process

Step 4 - The facilitator will go item by item to determine which items are to be accepted, accepted with edits, or rejected. If all members of a group have marked an item “accepted” or “rejected,” no discussion occurs. Participants discuss those items where there is not 100% agreement.

Step 5 - Allow a maximum of 10 minutes per item for consensus building to determine whether to accept, accept with edits, or reject an item. If consensus cannot be achieved, move on. The team will return to these items on the last day of the review.
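The Step 4 rule for deciding which items go to discussion can be sketched as follows. The function name and decision labels are hypothetical, chosen only to illustrate the rule. One assumption is flagged: the slides name unanimous "accepted" or "rejected" as the no-discussion cases, so this sketch sends a unanimous "accepted with edits" to discussion, on the reasoning that the group still needs to agree on the edits.

```python
# Decision labels a reviewer may record for an item (hypothetical spellings).
DECISIONS = {"accepted", "accepted with edits", "rejected"}


def needs_discussion(marks: list[str]) -> bool:
    """Return True if the group must discuss the item (Step 4).

    `marks` holds one decision per reviewer.
    """
    unknown = set(marks) - DECISIONS
    if unknown:
        raise ValueError(f"Unrecognized decision(s): {sorted(unknown)}")

    unanimous = len(set(marks)) == 1
    # Assumption: only a unanimous "accepted" or "rejected" skips discussion.
    return not (unanimous and marks[0] in {"accepted", "rejected"})
```

A facilitator could apply this to each item's marks and then spend the 10-minute consensus window from Step 5 only on items where it returns True.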


Item Review Criteria

1. Does the item allow for the student to demonstrate the intended evidence statement(s) and to demonstrate the standard(s) to be measured?

2. Is the wording of the item clear, concise, and appropriate for the intended grade level?

3. Does the item provide sufficient information and direction for the student to respond completely?

4. Is the item free from internal clueing and miscues?

5. Do the graphics and stimuli included as part of the item accurately and appropriately represent the applicable content knowledge?

Item Review Criteria (continued)

6. Are any graphics included as part of the item clear and appropriate for the intended grade level?

7. If the item has a technology-based stimulus or requires a technology-based response, is the technology design effective and grade appropriate?

8. Is the scoring guide/rubric clear, correct, and aligned with the expectations for performance that are expressed in the item or task?

9. If the item is part of a PBA task, does it contribute to the focus and coherence of the task model?

Criterion 1. Alignment to the CCSS and Evidence Statements

Does the item allow for the student to demonstrate the intended evidence statement(s) and to demonstrate the CCSS to be measured?

Criterion 1. Alignment to the CCSS and Evidence Statements


An item should:

be aligned to more than one CCSS and corresponding evidence statements.

• Focus is no longer on a one-to-one relationship between an item and tested skill

• All reading comprehension items will align to a focus standard as well as RL 1 or RI 1

elicit the intended evidence(s) to demonstrate ability with the skills expressed in the standard(s).

• Items should focus on important aspects of the text and be worth answering

• Items are intended to provide evidence to support the claims


Criterion 1. Alignment to the CCSS and Evidence Statements

Vocabulary items should:

target Tier 2 academic vocabulary

• Words with wide use across academic subjects

• Words that are important for students to know

have distracters in the same part of speech as the assessed word

elicit evidence from at least one language standard and from reading standard RI 1 or RL 1

Pgs 25-26 Item Guidelines

Criterion 2. Language Clarity and Appropriateness

Is the wording of the item clear, concise, and appropriate for the intended grade level?

Criterion 2: Language Clarity and Appropriateness

Items should:

convey a clearly defined task or problem in concise and direct language

use the language of the evidence statements and standards when appropriate

focus on what is important to learn rather than on trivial content

use a variety of approaches rather than a canned approach

Criterion 2: Language Clarity and Appropriateness

Example from Grade 10: Poor Clarity

What was the problem that Galileo’s experiment did not solve?

A. the influence of air pressure on the tubes’ water level

Example from Grade 10: Improved Clarity

According to the excerpt, what problem did Galileo’s experiment fail to solve?

A. the influence of air pressure on the glass tube’s water level

Criterion 3. Clarity of the Student Directions

Does the item provide sufficient information and direction for the student to respond completely?

Criterion 3: Clarity of the Student Directions

Items should:

pose the central idea in the stem and not in the answer choices

provide directions that are specific and direct

require no background knowledge

Criterion 3: Clarity of the Student Directions

Example of Poor Clarity in Student Directions


Criterion 3: Clarity of the Student Directions

Example of Improved Clarity in Student Directions

Criterion 4: Avoiding Clueing and Miscues

Is the item free from internal clueing and miscues?

Criterion 4: Avoiding Clueing and Miscues

Options should NOT echo a stem word.

Distractors should be plausible so that students will consider them carefully.

Items should not be answerable by reading other items in the set.

Criterion 4: Avoiding Clueing and Miscues

Example of Miscue:

How is the life cycle of a frog similar to the life cycle of the butterfly?

A. Both turn into a pupa.

B. Both breathe with gills.

C. Both begin life as an egg.*

D. Both break out as a chrysalis.

Criterion 5: Accuracy of Content Represented by Graphics

Do the graphics and stimuli included as part of the item accurately and appropriately represent the applicable content knowledge?

Criterion 5: Accuracy of Content Represented by Graphics

Items should:

• not include graphics simply for the sake of including graphics.

• use graphics that allow students to understand the problem or task or demonstrate ability to solve a problem or task.

• elicit the intended evidence(s) to demonstrate ability with the skills expressed in the standard(s).

Criterion 6: Clarity and Appropriateness of Graphics

Are any graphics included as part of the item clear and appropriate for the intended grade level?

Criterion 6: Clarity and Appropriateness of Graphics

Items should:

• include graphics that are not too complex in structure or language for the intended grade level

• utilize graphics that allow students to understand the problem or task or demonstrate ability to solve a problem or task

• have figures, graphs, charts, and diagrams precisely labeled

Criterion 7: Effectiveness and Grade Appropriateness of Technology

If the item has a technology-based stimulus or requires a technology-based response, is the technology design effective and grade appropriate?

Criterion 7: Effectiveness and Grade Appropriateness of Technology

Items should:

• include the number of options and correct answers that are appropriate for the grade level.

• use a format that is sufficiently simple and interesting for students.

• include directions that are clear and detailed.

Criterion 7: Effectiveness and Grade Appropriateness of Technology

Grade 3: Example of Effective and Grade-Appropriate Use of Technology

Drag the words from the word box into the correct locations on the graphic to show the life cycle of a butterfly as described in “How Animals Live.”

Words: Pupa, Egg, Adult, Larva

Criterion 8: Clarity and Alignment of Performance Expectations to Scoring Guide

Is the scoring guide/rubric clear, correct, and aligned with the expectations for performance that are expressed in the item or task?

Criterion 8: Clarity and Alignment of Performance Expectations to Scoring Guide

Scoring Guidelines for EBSRs and TECRs:

Partial credit is allowable and desirable. See the specifics for scoring in the Item Guidelines document.

Pgs 27-31 Item Guidelines

Criterion 9: Alignment of PBA Task to Model

If the item is part of a PBA task, does it contribute to the focus and coherence of the task model?

Criterion 9: Alignment of PBA Task to Model


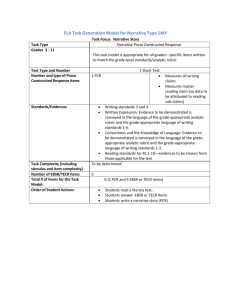

Sample Task Generation Model

ELA Task Generation Model 5A.4

Task Focus: Comparing themes and topics

Task Type: Literary Analysis

Grade: 5

Number and type of Texts:

• 1 Extended Literature Text

• 1 Additional Literature Text

Both texts must be stories from the same genre (e.g., mysteries and adventure stories) with similar themes and topics.

Number and type of Prose Constructed Response Items:

• 1 Analytic PCR

• Measures reading literature sub-claim using standards 1 and 9

• Measures all writing claims

Number and type of EBSR and/or TECR reading items:

• 6 total items = 12 points

• 2 of 6 items (4 points) to measure the reading sub-claim for vocabulary (one per text)

• 4 of 6 items (8 points) measuring standards RL 2, 3, and 5

Items that do not measure reading sub-claim for vocabulary are designed to measure reading literature sub-claim

Task Complexity (including text, item, and task complexity): To be determined

Total # of Items for the Task Model: 7

Order of Student Actions:

1. Students read extended literature text

2. Students respond to 1 item to measure the reading sub-claim for vocabulary

3. Students respond to 2 EBSR or TECR items

4. Students read 1 additional literature text

5. Students respond to 1 item to measure the reading sub-claim for vocabulary

6. Students respond to 2 EBSR or TECR items

7. Students respond to 1 PCR
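The item and point counts in the sample task model above can be cross-checked with a few lines of arithmetic. This is only a sketch: the dictionary keys and function name are hypothetical, and the value of 2 points per reading item is inferred from "6 total items = 12 points" rather than stated as a rule in the model.

```python
# Points per EBSR/TECR reading item, inferred from "6 total items = 12 points".
POINTS_PER_ITEM = 2

# Item counts taken from the sample task model above (hypothetical key names).
task_model = {
    "vocabulary_items": 2,          # one per text
    "reading_literature_items": 4,  # standards RL 2, 3, and 5
    "pcr_items": 1,                 # the analytic PCR
}


def summarize(model: dict) -> dict:
    """Total the reading items, their points, and all items in the task."""
    reading_items = model["vocabulary_items"] + model["reading_literature_items"]
    return {
        "reading_items": reading_items,
        "reading_points": reading_items * POINTS_PER_ITEM,
        "total_items": reading_items + model["pcr_items"],
    }


summary = summarize(task_model)
```

The totals agree with the model: 6 reading items worth 12 points, plus 1 PCR, for 7 items in all. A reviewer applying Criterion 9 could run the same check against a task's metadata to confirm it matches its designated task generation model.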


Criterion 9: Alignment of PBA Task to Model

Task Model Review for PBA Tasks

Task Alignment with the TGM

1. Does the provided task match the task generation model designated in the metadata provided?

Task Directions

2. Are the directions for the task clear, coherent, and appropriate for the intended grade level?