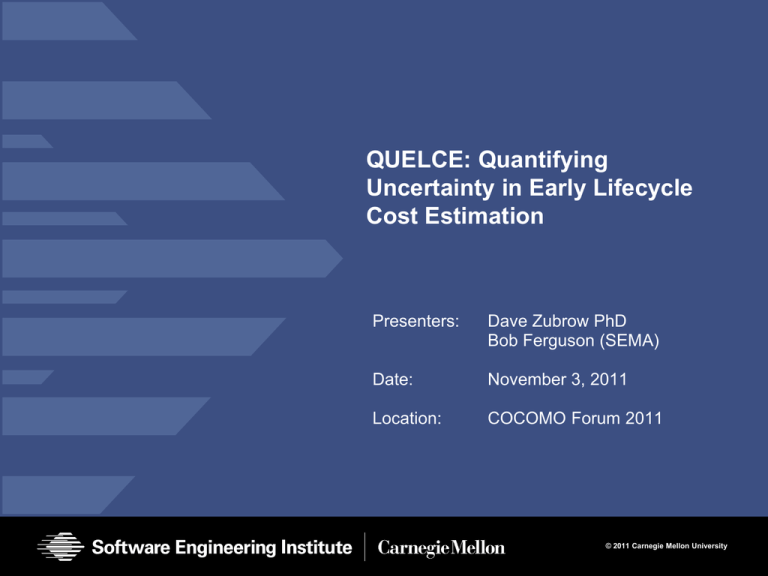

QUELCE: Quantifying Uncertainty in Early Lifecycle Cost Estimation



Presenters: Dave Zubrow, PhD, and Bob Ferguson (SEMA)

Date: November 3, 2011

Location: COCOMO Forum 2011

© 2011 Carnegie Mellon University

DoD Acquisition Process and GAO Knowledge-Based Acquisition Practices

“Establishing realistic cost and schedule estimates that are matched to available resources: Cost and schedule estimates are often based on overly optimistic assumptions. Our previous work shows that without the ability to generate reliable cost estimates, programs are at risk of experiencing cost overruns, missed deadlines, and performance shortfalls. Inaccurate estimates do not provide the necessary foundation for sufficient funding commitments. Engineering knowledge is required to achieve more accurate, reliable cost estimates at the outset of a program.”

Source: GAO 11-499T


[Figure: DoD acquisition process and GAO knowledge-based acquisition practices. Source: GAO 11-499T]


Estimating the Cost of Development at Milestone A

Challenges:

1) change and uncertainty

2) optimistic judgment

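[Figure: Acquisition phases and decision milestones. The program moves through Materiel Solution, Technology Development, Engineering & Manufacturing, and Production & Deployment, with decision milestones A, B, and C between phases. The Milestone A cost estimate (Est. $) is based on limited information, expert judgment, and analogies; the approval decision either lets the program proceed (Y) or delays it (N). Later estimates are labeled Est. $$ and Est. $$$.]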


QUELCE for Producing Milestone A Estimates

1) Brainstorm change drivers and define states

2) Develop a cause and effect matrix of change drivers

3) Rate relationships, restructure, and reduce using design structure matrix (DSM) techniques

4) Produce a Bayesian belief network (BBN) model of the reduced matrix

5) Assign probabilities and conditional probabilities to nodes in the BBN

6) Define scenarios of program execution

7) Map BBN change factor output states to COCOMO cost driver values

8) Use Monte Carlo simulation to select combinations of BBN outputs to produce cost estimate distributions


Change Drivers and States

| Driver | Nominal | State 1 | State 2 | State 3 | State 4 | State 5 |
|---|---|---|---|---|---|---|
| Scope Definition | Stable | Users added | Additional (foreign) customer | Additional deliverable (e.g., training & manuals) | Production downsized | Scope reduction (funding reduction) |
| Mission / CONOPS | Defined | New condition | New mission | New echelon | Program becomes Joint | |
| Capability Definition | Stable | Addition | Subtraction | Variance | Trade-offs [performance vs. affordability, etc.] | |
| Funding Schedule | Established | Funding delays tie up resources (e.g., operational test) | FFRDC ceiling issue | Funding change for end of year | Funding spread out | Obligated vs. allocated funds shifted |
| Advocacy Change | Stable | Joint service program loses participant | Senator did not get re-elected | Change in senior Pentagon staff | Advocate requires change in mission scope | Service owner different from CONOPS users |
| Closing Technical Gaps (CBA) | Selected trade studies are sufficient | Technology does not achieve satisfactory performance | Selected solution cannot achieve desired outcome | Technology not performing as expected | Technology is too expensive | New technology not testing well |

Factors seeded by, but not limited to, Probability of Program Success (PoPS) factors.
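One way to carry the table above into later steps is to record each driver with its nominal state listed first, followed by its non-nominal states. This is a minimal Python sketch; the `ChangeDriver` class and `DRIVERS` list are hypothetical names, and only the first three rows of the table are encoded.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ChangeDriver:
    """A program change driver with its nominal state and non-nominal states."""
    name: str
    nominal: str
    states: list[str] = field(default_factory=list)

    def all_states(self) -> list[str]:
        # Nominal state first, then the alternative (non-nominal) states.
        return [self.nominal, *self.states]


# Hypothetical encoding of the first three rows of the change driver table.
DRIVERS = [
    ChangeDriver("Scope Definition", "Stable",
                 ["Users added", "Additional (foreign) customer",
                  "Additional deliverable (e.g., training & manuals)",
                  "Production downsized", "Scope reduction (funding reduction)"]),
    ChangeDriver("Mission / CONOPS", "Defined",
                 ["New condition", "New mission", "New echelon", "Program becomes Joint"]),
    ChangeDriver("Capability Definition", "Stable",
                 ["Addition", "Subtraction", "Variance",
                  "Trade-offs [performance vs. affordability, etc.]"]),
]

for driver in DRIVERS:
    print(f"{driver.name}: {len(driver.all_states())} states")
```

Keeping the nominal state in a fixed position makes it easy to build "all nominal" baselines and to enumerate node states when the BBN is built.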


Cause and Effect Matrix for Change Drivers

[Figure: Driver cross-impact matrix. The same change drivers label both the rows and the columns: User Plane, Control Plane, User, Diversity of Deployments, Performance Optimization, Staff Skillset, Vendor Dependencies, Backwards Compatibility, Legacy Code, Auto-Detection/Provisioning, GUI-Workflow, Monolithic Dependencies, Test Cost/Complexity, and Complexity of Protocol.]

Each cell gets a value (blank, 1, 2, or 3) that reflects the perceived cause-effect relationship of the row heading to the column heading.

Note: The sum of a column is the dependency score of the column header. The sum of a row is the driving force of the row header.
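The row-sum and column-sum rule above can be sketched in a few lines of Python. The four-driver excerpt and its ratings are hypothetical; a blank cell is stored as 0.

```python
# Hypothetical 4-driver excerpt of a cross-impact matrix: rows are causes, columns are effects.
# 0 stands for a blank cell; 1, 2, and 3 are rated strengths of the cause-effect relationship.
drivers = ["Staff Skillset", "Legacy Code", "Backwards Compatibility", "Test Cost/Complexity"]
matrix = [
    [0, 1, 0, 2],  # Staff Skillset affects Legacy Code (1) and Test Cost/Complexity (2)
    [0, 0, 3, 2],  # Legacy Code affects Backwards Compatibility (3) and Test Cost/Complexity (2)
    [0, 1, 0, 3],  # Backwards Compatibility affects Legacy Code (1) and Test Cost/Complexity (3)
    [0, 0, 0, 0],  # Test Cost/Complexity drives nothing in this excerpt
]


def driving_force(m):
    """Row sums: how strongly each driver (row header) drives the others."""
    return [sum(row) for row in m]


def dependency(m):
    """Column sums: how strongly each driver (column header) depends on the others."""
    return [sum(col) for col in zip(*m)]


for name, force, dep in zip(drivers, driving_force(matrix), dependency(matrix)):
    print(f"{name:25s} driving force = {force}   dependency = {dep}")
```

In this made-up excerpt, Legacy Code and Backwards Compatibility point at each other; a cycle like that is what the DSM reduction step has to remove before a BBN can be built.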


Reduced Cause and Effect Matrix

[Figure: Change Drivers - Cause & Effects Matrix after reduction. Causes are rows and effects are columns, covering the same change drivers: Change in Strategic Vision; Mission / CONOPS; Capability Definition; Advocacy Change; Closing Technical Gaps (CBA); Building Technical Capability & Capacity (CBA); Interoperability; Systems Design; Interdependency; Functional Measures; Scope Definition; Functional Solution Criteria (measure); Funding Schedule; Acquisition Management; Program Mgt - Contractor Relations; Project Social / Dev Env; Prog Mgt Structure; Manning at program office; Scope Responsibility; Standards/Certifications; Supply Chain Vulnerabilities; Information sharing; PO Process Performance; Sustainment Issues; Contract Award; Production Quantity; Data Ownership; Industry Company Assessment; Cost Estimate; Test & Evaluation; Contractor Performance; Size; Project Challenge; Product Challenge. Cells hold ratings of 1, 2, or 3; row and column totals are shown, together with the number of entries right of the diagonal and below the diagonal. Highlighted cells indicate a remaining cycle that must be removed.]

Use Design Structure Matrix (DSM) techniques to reduce the matrix so that an acyclic graph can be produced.
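The slide does not say which DSM algorithm was used. As a hedged sketch, one simple approach is to find a cycle, delete its weakest-rated link (an assumed tie-breaking heuristic, not necessarily the authors' rule), repeat until no cycle remains, and then order the drivers topologically so that every remaining link lies right of the diagonal. The matrix and all function names are hypothetical.

```python
def find_cycle(m):
    """Return node indices forming one directed cycle (m[u][v] > 0 means u -> v), or None."""
    n = len(m)
    state = [0] * n  # 0 = unvisited, 1 = on the current DFS path, 2 = finished
    path = []

    def dfs(u):
        state[u] = 1
        path.append(u)
        for v in range(n):
            if m[u][v] == 0:
                continue
            if state[v] == 1:  # back edge u -> v closes the cycle v ... u -> v
                return path[path.index(v):]
            if state[v] == 0:
                found = dfs(v)
                if found:
                    return found
        path.pop()
        state[u] = 2
        return None

    for start in range(n):
        if state[start] == 0:
            found = dfs(start)
            if found:
                return found
    return None


def reduce_to_dag(m):
    """Assumed heuristic: repeatedly delete the weakest-rated link in a remaining cycle."""
    m = [row[:] for row in m]
    removed = []
    while (cycle := find_cycle(m)) is not None:
        links = [(cycle[i], cycle[(i + 1) % len(cycle)]) for i in range(len(cycle))]
        u, v = min(links, key=lambda link: m[link[0]][link[1]])
        m[u][v] = 0
        removed.append((u, v))
    return m, removed


def topological_order(m):
    """Kahn's algorithm: order drivers so every cause precedes its effects."""
    n = len(m)
    indegree = [sum(1 for u in range(n) if m[u][v] > 0) for v in range(n)]
    ready = [v for v in range(n) if indegree[v] == 0]
    order = []
    while ready:
        u = ready.pop(0)
        order.append(u)
        for v in range(n):
            if m[u][v] > 0:
                indegree[v] -= 1
                if indegree[v] == 0:
                    ready.append(v)
    return order


def count_below_diagonal(m, order):
    """Links pointing from a later driver back to an earlier one (feedback marks)."""
    pos = {node: i for i, node in enumerate(order)}
    n = len(m)
    return sum(1 for u in range(n) for v in range(n) if m[u][v] > 0 and pos[u] > pos[v])


# Hypothetical matrix: drivers 1 and 2 point at each other, forming a cycle.
matrix = [
    [0, 1, 0, 2],
    [0, 0, 3, 2],
    [0, 1, 0, 3],
    [0, 0, 0, 0],
]
reduced, removed = reduce_to_dag(matrix)
order = topological_order(reduced)
print("removed links:", removed)  # the weaker direction of the 1 <-> 2 cycle
print("order:", order, "below diagonal:", count_below_diagonal(reduced, order))
```

A below-diagonal count of zero in the reordered matrix is the acyclicity check: every remaining link then points from an earlier (cause) driver to a later (effect) driver.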

BBN of Reduced Cause and Effect Matrix

Translate the C-E matrix into a BBN. Orange nodes are program change factors. Green nodes are outputs that will link to COCOMO cost drivers. These output nodes were selected as an example and represent sets of COCOMO cost drivers.
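The slide does not show how the translation is carried out. A minimal sketch, assuming that each nonzero cell (row = cause, column = effect) of the reduced, acyclic matrix becomes an arc from cause to effect, so a node's parents are the rows with nonzero entries in its column. The driver subset and ratings are hypothetical.

```python
def bbn_parents(drivers, matrix):
    """Map each driver to the drivers that directly cause it.

    Assumes matrix[i][j] > 0 means driver i (row, cause) affects driver j (column, effect)
    and that the matrix has already been reduced to an acyclic graph.
    """
    return {
        effect: [drivers[i] for i in range(len(drivers)) if matrix[i][j] > 0]
        for j, effect in enumerate(drivers)
    }


# Hypothetical reduced matrix for four nodes.
drivers = ["Change in Strategic Vision", "Mission / CONOPS",
           "Capability Definition", "Product Challenge"]
matrix = [
    [0, 0, 3, 0],  # Strategic Vision -> Capability Definition
    [0, 0, 2, 0],  # Mission / CONOPS -> Capability Definition
    [0, 0, 0, 3],  # Capability Definition -> Product Challenge (an output node)
    [0, 0, 0, 0],
]

for node, parents in bbn_parents(drivers, matrix).items():
    print(f"{node} <- {parents or 'root (no parents)'}")
```

Under this reading, the matrix ratings only decide which arcs exist; the strength of each influence is captured later in the conditional probability tables.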


Assign Probabilities and Conditional Probabilities to BBN Nodes

Use expert judgment to assign probabilities and conditional probabilities to the nodes. These assignments could also be empirically based if the data are available.

[Figure callout: Capability Definition is affected by CONOPS and Strategic Vision.]
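To illustrate what assigning conditional probabilities means for the Capability Definition node, here is a hedged Python sketch with its two parents. Node states and all numbers are hypothetical stand-ins for expert judgment, and the marginal is computed by brute-force enumeration rather than with a BBN tool.

```python
from itertools import product

# Hypothetical expert-judgment priors for the two parent nodes.
P_VISION = {"Stable": 0.8, "Changed": 0.2}        # Change in Strategic Vision
P_CONOPS = {"Defined": 0.7, "New mission": 0.3}   # Mission / CONOPS

# Hypothetical conditional probability table P(Capability Definition | Vision, CONOPS).
CPT_CAPABILITY = {
    ("Stable", "Defined"):      {"Stable": 0.9, "Addition": 0.1},
    ("Stable", "New mission"):  {"Stable": 0.4, "Addition": 0.6},
    ("Changed", "Defined"):     {"Stable": 0.5, "Addition": 0.5},
    ("Changed", "New mission"): {"Stable": 0.2, "Addition": 0.8},
}

# Each CPT row must be a probability distribution over the child's states.
for parents, row in CPT_CAPABILITY.items():
    assert abs(sum(row.values()) - 1.0) < 1e-9, parents


def marginal_capability():
    """P(c) = sum over v, k of P(v) * P(k) * P(c | v, k); the parents are independent roots."""
    result = {"Stable": 0.0, "Addition": 0.0}
    for v, k in product(P_VISION, P_CONOPS):
        weight = P_VISION[v] * P_CONOPS[k]
        for state, p in CPT_CAPABILITY[(v, k)].items():
            result[state] += weight * p
    return result


print(marginal_capability())
```

With these made-up numbers, Capability Definition stays Stable with probability of roughly 0.68.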


Define Scenarios of Program Execution

Scenarios for alternate futures specify nominal or non-nominal states for selected change drivers in order to test alternative results.
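A hedged sketch of how a scenario could be applied: fix the selected drivers to the states the scenario specifies and recompute the downstream distribution. It uses a hypothetical two-parent example for Capability Definition; the node states, probabilities, and dictionary-based scenario format are assumptions for illustration. Because both parents are root nodes here, fixing them is the same as conditioning on them.

```python
from itertools import product

# Hypothetical priors and CPT (stand-ins for expert judgment).
P_VISION = {"Stable": 0.8, "Changed": 0.2}
P_CONOPS = {"Defined": 0.7, "New mission": 0.3}
CPT_CAPABILITY = {
    ("Stable", "Defined"):      {"Stable": 0.9, "Addition": 0.1},
    ("Stable", "New mission"):  {"Stable": 0.4, "Addition": 0.6},
    ("Changed", "Defined"):     {"Stable": 0.5, "Addition": 0.5},
    ("Changed", "New mission"): {"Stable": 0.2, "Addition": 0.8},
}


def capability_distribution(scenario=None):
    """Distribution of Capability Definition under a scenario.

    A scenario maps a driver name to the state it is assumed to take; drivers
    the scenario does not mention keep their prior distribution.
    """
    scenario = scenario or {}
    vision = {scenario["Strategic Vision"]: 1.0} if "Strategic Vision" in scenario else P_VISION
    conops = {scenario["Mission / CONOPS"]: 1.0} if "Mission / CONOPS" in scenario else P_CONOPS
    result = {"Stable": 0.0, "Addition": 0.0}
    for v, k in product(vision, conops):
        weight = vision[v] * conops[k]
        for state, p in CPT_CAPABILITY[(v, k)].items():
            result[state] += weight * p
    return result


baseline = capability_distribution()  # no drivers fixed
new_mission = capability_distribution({"Mission / CONOPS": "New mission"})
print("baseline:   ", baseline)
print("new mission:", new_mission)
```

Comparing each scenario against the unconstrained baseline shows how much a single non-nominal driver shifts the outputs that later feed the COCOMO mapping.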


Map BBN Change Factor Output States to COCOMO Cost Driver Values

Scale Factors

| Driver | XL | VL | L | N | H | VH | XH |
|---|---|---|---|---|---|---|---|
| PREC | n/a | 6.20 | 4.96 | 3.72 | 2.48 | 1.24 | 0.00 |
| FLEX | n/a | 5.07 | 4.05 | 3.04 | 2.03 | 1.01 | 0.00 |
| RESL | n/a | 7.07 | 5.65 | 4.24 | 2.83 | 1.41 | 0.00 |
| TEAM | n/a | 5.48 | 4.38 | 3.29 | 2.19 | 1.10 | 0.00 |
| PMAT | n/a | 7.80 | 6.24 | 4.68 | 3.12 | 1.56 | 0.00 |

Effort Multipliers

| Driver | XL | VL | L | N | H | VH | XH |
|---|---|---|---|---|---|---|---|
| RCPX | 0.49 | 0.60 | 0.83 | 1.00 | 1.33 | 1.91 | 2.72 |
| RUSE | n/a | n/a | 0.95 | 1.00 | 1.07 | 1.15 | 1.24 |
| PDIF | n/a | n/a | 0.87 | 1.00 | 1.29 | 1.81 | 2.61 |
| PERS | 2.12 | 1.62 | 1.26 | 1.00 | 0.83 | 0.63 | 0.50 |
| PREX | 1.59 | 1.33 | 1.12 | 1.00 | 0.87 | 0.74 | 0.62 |
| FCIL | 1.43 | 1.30 | 1.10 | 1.00 | 0.87 | 0.73 | 0.62 |
| SCED | n/a | 1.43 | 1.14 | 1.00 | 1.00 | 1.00 | n/a |

BBN output states are mapped to values of COCOMO cost drivers. The mapping is currently done with expert judgment; later it could be done with a data-based algorithm.

The distributions of the BBN outputs are used in the next step.
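The driver values above can be turned into an effort figure with the COCOMO II Early Design form PM = A × Size^E × ΠEM, where E = B + 0.01 × ΣSF and A = 2.94, B = 0.91 are the published COCOMO II.2000 calibration constants. This is a minimal sketch; the mapping from "Product Challenge" states to RCPX and PDIF ratings and the 100 KSLOC size are hypothetical, and any driver a mapping does not mention is left at Nominal.

```python
# COCOMO II.2000 Early Design driver values, transcribed from the tables above.
SCALE_FACTORS = {
    "PREC": {"VL": 6.20, "L": 4.96, "N": 3.72, "H": 2.48, "VH": 1.24, "XH": 0.00},
    "FLEX": {"VL": 5.07, "L": 4.05, "N": 3.04, "H": 2.03, "VH": 1.01, "XH": 0.00},
    "RESL": {"VL": 7.07, "L": 5.65, "N": 4.24, "H": 2.83, "VH": 1.41, "XH": 0.00},
    "TEAM": {"VL": 5.48, "L": 4.38, "N": 3.29, "H": 2.19, "VH": 1.10, "XH": 0.00},
    "PMAT": {"VL": 7.80, "L": 6.24, "N": 4.68, "H": 3.12, "VH": 1.56, "XH": 0.00},
}
EFFORT_MULTIPLIERS = {
    "RCPX": {"XL": 0.49, "VL": 0.60, "L": 0.83, "N": 1.00, "H": 1.33, "VH": 1.91, "XH": 2.72},
    "RUSE": {"L": 0.95, "N": 1.00, "H": 1.07, "VH": 1.15, "XH": 1.24},
    "PDIF": {"L": 0.87, "N": 1.00, "H": 1.29, "VH": 1.81, "XH": 2.61},
    "PERS": {"XL": 2.12, "VL": 1.62, "L": 1.26, "N": 1.00, "H": 0.83, "VH": 0.63, "XH": 0.50},
    "PREX": {"XL": 1.59, "VL": 1.33, "L": 1.12, "N": 1.00, "H": 0.87, "VH": 0.74, "XH": 0.62},
    "FCIL": {"XL": 1.43, "VL": 1.30, "L": 1.10, "N": 1.00, "H": 0.87, "VH": 0.73, "XH": 0.62},
    "SCED": {"VL": 1.43, "L": 1.14, "N": 1.00, "H": 1.00, "VH": 1.00},
}
A, B = 2.94, 0.91  # COCOMO II.2000 calibration constants

# Hypothetical mapping: each state of a BBN output node sets the ratings of the
# COCOMO drivers that node represents.
PRODUCT_CHALLENGE_TO_RATINGS = {
    "Low": {"RCPX": "L", "PDIF": "L"},
    "Medium": {"RCPX": "N", "PDIF": "N"},
    "High": {"RCPX": "H", "PDIF": "VH"},
}


def effort_pm(ksloc, ratings):
    """Effort in person-months: PM = A * Size^E * prod(EM), with E = B + 0.01 * sum(SF)."""
    sf = {name: ratings.get(name, "N") for name in SCALE_FACTORS}
    em = {name: ratings.get(name, "N") for name in EFFORT_MULTIPLIERS}
    exponent = B + 0.01 * sum(SCALE_FACTORS[n][r] for n, r in sf.items())
    pm = A * ksloc ** exponent
    for n, r in em.items():
        pm *= EFFORT_MULTIPLIERS[n][r]
    return pm


for state, ratings in PRODUCT_CHALLENGE_TO_RATINGS.items():
    print(f"Product Challenge = {state:6s}: {effort_pm(100, ratings):7.1f} PM for 100 KSLOC")
```

Scale factors act on the exponent, so they matter more as size grows, while effort multipliers scale the result linearly; that is one reason to map a BBN output to a set of drivers rather than to a single value.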


Use Monte Carlo to Select Combinations of BBN Outputs to Produce Cost Estimate Distributions

[Figure: Distributions of the BBN outputs and of the mapped COCOMO values.]

Using the distributions of the BBN outputs, Monte Carlo simulation produces the distribution of the cost estimate for each defined scenario.
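The slide gives no implementation detail, so the following is a minimal sketch under stated assumptions: one scenario's BBN output distribution is given as a hypothetical joint table over two output nodes, each output state maps to a hypothetical combined effort multiplier, size is drawn from an assumed triangular distribution (60/90/150 KSLOC), all scale factors stay at Nominal, and effort uses the COCOMO II.2000 form PM = 2.94 × Size^E × ΠEM.

```python
import random
import statistics

# Hypothetical joint distribution of two BBN output nodes (Product Challenge, Project Challenge)
# for one scenario. Probabilities sum to 1.
JOINT_OUTPUTS = {
    ("Low", "Low"): 0.10, ("Low", "High"): 0.05,
    ("Medium", "Low"): 0.25, ("Medium", "High"): 0.20,
    ("High", "Low"): 0.10, ("High", "High"): 0.30,
}
# Hypothetical combined effort multiplier for each output state.
PRODUCT_EM = {"Low": 0.87, "Medium": 1.00, "High": 1.33 * 1.81}  # e.g. RCPX x PDIF
PROJECT_EM = {"Low": 1.00, "High": 1.26 * 1.14}                  # e.g. PERS x SCED

A, B = 2.94, 0.91        # COCOMO II.2000 calibration constants
NOMINAL_SF_SUM = 18.97   # sum of the five scale factors at Nominal


def simulate(n, size_low, size_mode, size_high, seed=1):
    """Draw n effort samples (person-months) by sampling output-state combinations and size."""
    rng = random.Random(seed)
    combos = list(JOINT_OUTPUTS)
    weights = [JOINT_OUTPUTS[c] for c in combos]
    exponent = B + 0.01 * NOMINAL_SF_SUM
    efforts = []
    for product_state, project_state in rng.choices(combos, weights=weights, k=n):
        ksloc = rng.triangular(size_low, size_high, size_mode)
        em = PRODUCT_EM[product_state] * PROJECT_EM[project_state]
        efforts.append(A * ksloc ** exponent * em)
    return efforts


efforts = simulate(20_000, size_low=60, size_mode=90, size_high=150)
deciles = statistics.quantiles(efforts, n=10)  # 9 cut points: P10 ... P90
print(f"P10 = {deciles[0]:.0f} PM, P50 = {statistics.median(efforts):.0f} PM, "
      f"P90 = {deciles[-1]:.0f} PM")
```

Sampling output combinations from the joint table, rather than from each output independently, preserves any correlation the BBN induces between the outputs. Running the same simulation once per scenario yields one cost distribution per scenario.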


Task 2: Develop Efficient Techniques to Calibrate Expert Judgment of Program Uncertainties

Solution:

Step 1: Expert receives virtual training on domain-specific “reference points”

Step 2: Expert takes a series of domain-specific tests

Step 3: Expert reduces overconfidence

Step 4: Expert renders a calibrated estimate of size

Domain-specific “reference points”:

1) Size of ground combat vehicle targeting feature xyz in 2002 consisted of 25 KSLOC Ada

2) Size of Army artillery firing capability feature abc in 2007 consisted of 18 KSLOC C++

3) …

[Figure: Un-calibrated vs. calibrated estimate of SW size. Calibrated = more realistic size and a wider range that reflects true expert uncertainty.]
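The slide does not say how the domain-specific tests are scored. A common way to measure overconfidence, assumed here, is to ask the expert for 90% confidence intervals on quantities whose true values are known and compare the hit rate with 90%. The class, function names, and answers below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class IntervalAnswer:
    low: float    # expert's lower bound (e.g. KSLOC)
    high: float   # expert's upper bound
    truth: float  # actual value from historical reference data


def hit_rate(answers):
    """Fraction of answers whose interval contains the true value."""
    return sum(a.low <= a.truth <= a.high for a in answers) / len(answers)


def overconfidence(answers, target=0.9):
    """Positive result means the intervals are too narrow for the stated confidence level."""
    return target - hit_rate(answers)


# Hypothetical test results: an expert's 90% intervals for the sizes of five past features.
answers = [
    IntervalAnswer(20, 30, 25),
    IntervalAnswer(10, 15, 18),
    IntervalAnswer(40, 60, 75),
    IntervalAnswer(5, 8, 6),
    IntervalAnswer(12, 20, 31),
]
print(f"hit rate = {hit_rate(answers):.0%}, overconfidence = {overconfidence(answers):.2f}")
```

An overconfidence score well above zero suggests the expert's ranges should be widened before the size estimate is rendered, consistent with the slide's point that calibrated estimates carry a wider range.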


Next Steps

Classroom experiments with software & systems engineering graduate students

• Structured feedback for early validation & refinement of our method, initially:

– Selected steps, at least through reducing the client’s dependency matrix, at the University of Arizona

– Calibrating expert judgment & reconciling differences in judgment at Carnegie Mellon University

Invitation to Participate

• Many pieces and parts to test and improve

• Empirically validating the overall approach

– Retrospective: projects that were estimated and have recorded change history

• Testing BBN output parameters and mapping to estimation parameters

• Fitting to additional estimation tools and cost estimating relationships (CERs)

• Do new estimates properly inform decisions about risk and change management? (secondary benefit)


Contact Information

Presenters / Points of Contact

Dave Zubrow

SEMA

Telephone: +1 412-268-5243

Email: dz@sei.cmu.edu

Bob Ferguson

SEMA

Telephone: +1 412-268-9750

Email: rwf@sei.cmu.edu

Web:

www.sei.cmu.edu

www.sei.cmu.edu/measurement/

U.S. mail:

Software Engineering Institute

Customer Relations

4500 Fifth Avenue

Pittsburgh, PA 15213-2612

USA

Customer Relations

Email: info@sei.cmu.edu

Telephone:

+1 412-268-5800

SEI Phone:

+1 412-268-5800

SEI Fax:

+1 412-268-6257


NO WARRANTY

THIS CARNEGIE MELLON UNIVERSITY AND SOFTWARE ENGINEERING INSTITUTE MATERIAL IS FURNISHED ON AN “AS-IS” BASIS. CARNEGIE MELLON UNIVERSITY MAKES NO WARRANTIES OF ANY KIND, EITHER EXPRESSED OR IMPLIED, AS TO ANY MATTER INCLUDING, BUT NOT LIMITED TO, WARRANTY OF FITNESS FOR PURPOSE OR MERCHANTABILITY, EXCLUSIVITY, OR RESULTS OBTAINED FROM USE OF THE MATERIAL. CARNEGIE MELLON UNIVERSITY DOES NOT MAKE ANY WARRANTY OF ANY KIND WITH RESPECT TO FREEDOM FROM PATENT, TRADEMARK, OR COPYRIGHT INFRINGEMENT.

Use of any trademarks in this presentation is not intended in any way to infringe on the rights of the trademark holder.

This Presentation may be reproduced in its entirety, without modification, and freely distributed in written or electronic form without requesting formal permission. Permission is required for any other use. Requests for permission should be directed to the Software Engineering Institute at permission@sei.cmu.edu.

This work was created in the performance of Federal Government Contract Number FA8721-05-C-0003 with Carnegie Mellon University for the operation of the Software Engineering Institute, a federally funded research and development center. The Government of the United States has a royalty-free government-purpose license to use, duplicate, or disclose the work, in whole or in part and in any manner, and to have or permit others to do so, for government purposes pursuant to the copyright license under the clause at 252.227-7013.
