
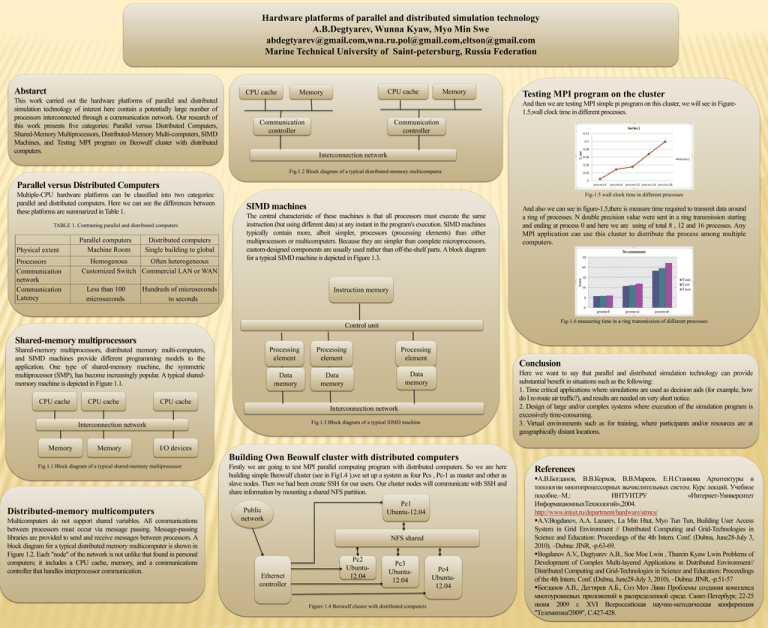

Hardware platforms of parallel and distributed simulation technology

A. B. Degtyarev, Wunna Kyaw, Myo Min Swe
abdegtyarev@gmail.com, wna.ru.pol@gmail.com, eltson@gmail.com
Marine Technical University of Saint Petersburg, Russian Federation

Abstract

This work surveys the hardware platforms used for parallel and distributed simulation. The platforms of interest contain a potentially large number of processors interconnected through a communication network. The work is organized into five parts: Parallel versus Distributed Computers, Shared-Memory Multiprocessors, Distributed-Memory Multicomputers, SIMD Machines, and Testing an MPI Program on a Beowulf Cluster with Distributed Computers.

Parallel versus Distributed Computers

Multiple-CPU hardware platforms can be classified into two categories: parallel computers and distributed computers. The differences between these platforms are summarized in Table 1.

TABLE 1. Contrasting parallel and distributed computers

| | Parallel computers | Distributed computers |
|---|---|---|
| Physical extent | Machine room | Single building to global |
| Processors | Homogeneous | Often heterogeneous |
| Communication network | Customized switch | Commercial LAN or WAN |
| Communication latency | Less than 100 microseconds | Hundreds of microseconds to seconds |

Shared-memory multiprocessors

Shared-memory multiprocessors, distributed-memory multicomputers, and SIMD machines provide different programming models to the application. One type of shared-memory machine, the symmetric multiprocessor (SMP), has become increasingly popular. A typical shared-memory machine is depicted in Figure 1.1.

[Fig. 1.1 Block diagram of a typical shared-memory multiprocessor: CPUs with caches, an interconnection network, memory modules, and I/O devices]

Distributed-memory multicomputers

Multicomputers do not support shared variables. All communication between processors must occur via message passing, and message-passing libraries are provided to send and receive messages between processors. A block diagram of a typical distributed-memory multicomputer is shown in Figure 1.2. Each "node" of the network is not unlike a personal computer: it includes a CPU with cache, memory, and a communication controller that handles interprocessor communication.

[Fig. 1.2 Block diagram of a typical distributed-memory multicomputer: nodes with CPU cache, memory, and communication controller, joined by an interconnection network]

SIMD machines

The central characteristic of these machines is that all processors must execute the same instruction (but on different data) at any instant in the program's execution. SIMD machines typically contain more, albeit simpler, processors (processing elements) than either multiprocessors or multicomputers. Because the processing elements are simpler than complete microprocessors, custom-designed components are usually used rather than off-the-shelf parts. A block diagram of a typical SIMD machine is depicted in Figure 1.3.

[Fig. 1.3 Block diagram of a typical SIMD machine: a control unit with instruction memory, processing elements each with data memory, and an interconnection network]

Building our own Beowulf cluster with distributed computers

To test an MPI parallel program on distributed computers, we first built a simple Beowulf cluster (Figure 1.4). The system consists of four PCs running Ubuntu 12.04, with PC1 as the master node and the others as slave nodes. We then set up SSH for the cluster users. The cluster nodes communicate over SSH and share information by mounting a shared NFS partition.

[Figure 1.4 Beowulf cluster with distributed computers: PC1 (Ubuntu 12.04, public network, NFS share) connected through an Ethernet controller to PC2, PC3, and PC4 (Ubuntu 12.04)]

Testing an MPI program on the cluster

We then ran a simple MPI pi program on this cluster. Figure 1.5 shows the wall-clock time for different numbers of processes.

[Fig. 1.5 Wall-clock time for different numbers of processes]

Figure 1.6 shows the measured time required to transmit data around a ring of processes. N double-precision values were sent around the ring, starting and ending at process 0, using totals of 8, 12, and 16 processes. Any MPI application can use this cluster to distribute its processes among multiple computers.

[Fig. 1.6 Time measured for a ring transmission with different numbers of processes]

Conclusion

Parallel and distributed simulation technology can provide substantial benefit in situations such as the following:

1. Time-critical applications where simulations are used as decision aids (for example, how do I re-route air traffic?) and results are needed on very short notice.
2. Design of large and/or complex systems where execution of the simulation program is excessively time-consuming.
3. Virtual environments, such as those for training, where participants and/or resources are at geographically distant locations.
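
As a supplement to the cluster tests described above (the simple pi program and the ring transmission), the following is a minimal sketch of the work decomposition such tests commonly use. It is not the program run on the cluster: it uses plain Python with no MPI, the "processes" are simulated by a loop, and the function names `partial_pi`, `estimate_pi`, and `ring_route` as well as the interval count are hypothetical choices made for illustration. The sketch assumes the pi test integrates 4/(1+x^2) over [0, 1] with the midpoint rule and a cyclic split of intervals across processes, which is a widespread way to write an MPI pi example.

```python
import math


def partial_pi(rank, size, n):
    """Contribution of one simulated process to the midpoint-rule
    integral of 4 / (1 + x^2) on [0, 1], using n intervals in total."""
    h = 1.0 / n
    total = 0.0
    # Cyclic distribution: process `rank` takes intervals
    # rank, rank + size, rank + 2*size, ...
    for i in range(rank, n, size):
        x = h * (i + 0.5)  # midpoint of interval i
        total += 4.0 / (1.0 + x * x)
    return h * total


def estimate_pi(size, n):
    """Sum the partial results of all simulated processes.
    In an MPI program this step would be a sum reduction onto process 0."""
    return math.fsum(partial_pi(rank, size, n) for rank in range(size))


def ring_route(size):
    """Order in which a message visits processes in a ring that
    starts and ends at process 0."""
    route = [0]
    rank = 0
    for _ in range(size):
        rank = (rank + 1) % size  # each process forwards to its right neighbour
        route.append(rank)
    return route


if __name__ == "__main__":
    # Process counts taken from the ring test; the interval count is an assumption.
    for size in (8, 12, 16):
        approx = estimate_pi(size, 1_000_000)
        print(size, approx, abs(approx - math.pi))
    print(ring_route(8))
```

The estimate does not depend on the number of processes beyond rounding, because every interval is assigned to exactly one process. On a real cluster only the elapsed time changes with the process count, which is the quantity the wall-clock and ring measurements report.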